Question Bank for Elective 5 (ANN)

Question 1:

1- The human brain comprises a huge number of simple processing elements called …………, each of which is capable of ………… with others.

2- Each neuron in an ANN sends ………… or ………… signals to other neurons, while its activation depends on ………… from the other neurons to which it is connected.

3- Multilayer ANNs have applications such as …………, ………… and ………….

4- ANNs are fault tolerant and have high computational speed due to their ………… structure.

5- When faced with new situations, ANNs have the ability to ………….

6- ANNs are classified as ………… when they do not contain any memory elements.

7- ANNs are classified as ………… when they involve memory elements.

8- Finding a dynamic relationship between the physical plant inputs and outputs can be defined as ………….

9- An ANN controller with the specialized training architecture uses ………… as the driving force and has the advantages of ………… and ………….

10- An ANN controller with the indirect learning architecture involves two ANNs performing as ………… and ………….

11- ANN classifiers have been applied to biomedical signal processing, for example ………….

12- The Autonomous Land Vehicle In a Neural Network (ALVINN) project takes ………… and ………… as inputs and produces ………… as an output.

13- For ANNs working as memories, once the memory is initialized at its output, it should terminate at ………….

14- ………… is a quantitative description of an object, event or phenomenon.

15- Classification may involve ………… patterns such as pictures, weather maps and fingerprints, and ………… patterns such as speech signals and electrocardiograms.

16- In a classification system, the converted data at the transducer output can be compressed using …………; the compressed data are then called ………….  Compression performs ………… of dimensionality.

17- A dichotomizer is a special case of the classifier with R classes in which R equals ………….

18- The only limitation regarding the unknown patterns to be recognized by a trainable classifier is that they are drawn from ………….

19- We can avoid the use of nonlinear discriminant functions by changing the classifier’s feedforward architecture to a ………….

20- ………… is a simple case of a multiclass minimum distance classifier (MDC).

21- ………… is an approach in which the MDC assigns category membership based on the closest match between each prototype and the current input pattern.
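
The closest-match idea in items 20 and 21 can be illustrated with a minimal sketch. It assumes Euclidean distance and one prototype per class; the prototype coordinates, class labels and the function name `classify_min_distance` are hypothetical and are not part of the question bank.

```python
import math

# Hypothetical prototypes, one point per class (illustrative values only).
PROTOTYPES = {
    "class_1": (0.0, 0.0),
    "class_2": (4.0, 0.0),
    "class_3": (0.0, 4.0),
}


def classify_min_distance(x, prototypes=PROTOTYPES):
    """Return the label of the prototype closest to x (Euclidean distance)."""
    return min(prototypes, key=lambda label: math.dist(x, prototypes[label]))


if __name__ == "__main__":
    # Each input pattern is assigned to the class of its nearest prototype.
    for pattern in [(0.5, 0.2), (3.1, 0.9), (1.0, 3.5)]:
        print(pattern, "->", classify_min_distance(pattern))
```

A pattern that is equally close to two prototypes has no unique nearest class; in this sketch `min` simply returns the first such label.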

22- ………… are regions where no class membership of an input pattern can be uniquely determined based on the response of the classifier.

23- The ………… problem is the evaluation of the contribution of each particular weight to the output error.

24- If local minima are not very deep, inserting some form of ………… into the training may be sufficient to get out of them.

Question 2:

Choose ONLY One Answer

1- ANN as a/an …………………………….is useful for mathematical modeling.

(a) Classifier

(b) function approximator

(c) Optimizer

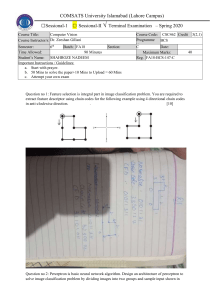

2- Output “ O “ of next McCulloch model represents:

(a) OR logic gate

(b) AND logic gate

(c) NOR logic gate

(d)Pattern Recognizer

(d)NAND gate

3- One of the problems of the backpropagation algorithm is that the error minimization procedure may produce only a ………… of the error function.

(a) Global minimum

(b) Local minimum

(c) Local maximum

(d) b or c

4- The ………… the neural network, the better should be the training convergence speed and accuracy.

(a) Larger

(b) Smaller

(c) Larger hidden layers in

(d) a or c

5- The purpose of an ANN ………… is to minimize certain cost functions, usually defined by the user.

(a) Classifier

(b) Function approximator

(c) Optimizer

(d) Pattern recognizer

6- The weights of the ANN to be trained are typically initialized at ………… values.

(a) Small statistical

(b) Small random

(c) Large statistical

(d) Large random

7- The factor that strongly affects the ultimate solution of an ANN is:

(a) Initial weights

(b) Learning factor

(c) Steepness factor (λ)

(d) All of the above

8- For a ………… learning constant (η), the learning speed can be ………….

(a) Large/decreased

(b) Large/increased

(c) Small/decreased

(d) b or c

9- For problems with steep and narrow minima, a ………… value of η must be chosen to avoid overshooting the solution.

(a) Large

(b) Small

(c) Zero

(d) Random
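
The role of the learning constant η in items 8 and 9 can be explored with a small experiment. The sketch below is an illustration only: it assumes a one-dimensional quadratic error E(w) = 0.5 · curvature · w², and the function name `gradient_descent` and all numeric values are hypothetical choices, not part of the question bank.

```python
def gradient_descent(eta, steps=20, w0=1.0, curvature=10.0):
    """Minimize E(w) = 0.5 * curvature * w**2 by plain gradient descent.

    Returns the list of weight values visited, starting with w0.
    A large curvature models a steep, narrow minimum at w = 0.
    """
    w = w0
    history = [w]
    for _ in range(steps):
        grad = curvature * w   # dE/dw for the quadratic error
        w = w - eta * grad     # steepest-descent update with step size eta
        history.append(w)
    return history


if __name__ == "__main__":
    # Compare how far from the minimum each run ends up.
    for eta in (0.05, 0.19, 0.25):
        final_w = gradient_descent(eta)[-1]
        print(f"eta = {eta}: final w = {final_w:.6g}")
```

Running it with different values of `eta` and `curvature` shows how the same step size can behave very differently depending on how steep the minimum is.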

10- What is classification?

A. Deciding which features to use in a pattern recognition problem.

B. Deciding which class an input pattern belongs to.

C. Deciding which type of neural network to use.

11- What is the biggest difference between Widrow & Hoff’s Delta Rule and the Perceptron Learning Rule for learning in a single-layer feedforward network?

A. There is no difference.

B. The Delta Rule is defined for step activation functions, but the Perceptron Learning Rule is defined for linear activation functions.

C. The Delta Rule is defined for sigmoid activation functions, but the Perceptron Learning Rule is defined for linear activation functions.

D. The Delta Rule is defined for linear activation functions, but the Perceptron Learning Rule is defined for step activation functions.

E. The Delta Rule is defined for sigmoid activation functions, but the Perceptron Learning Rule is defined for step activation functions.

12- Is the following statement true or false? “The XOR problem can be solved by a multi-layer perceptron, but a multi-layer perceptron with bipolar step activation functions cannot learn to do this.”

A. TRUE.

B. FALSE.

13- Is the following statement true or false? “The generalized Delta rule solves the credit assignment problem in the training of multi-layer feedforward networks.”

A. TRUE.

B. FALSE.

14- Many pattern recognition problems require the original input variables to be combined to make a smaller number of new variables. These new input variables are called:

A. patterns.

B. features.

C. weights.

D. classes.

15- The process described in question 14 is:

A. a type of pre-processing which is often called feature extraction.

B. a type of pattern recognition which is often called classification.

C. a type of post-processing which is often called winner-takes-all.

16- An artificial neural network may be trained on one data set and tested on a second data set. The system designer can then experiment with different numbers of hidden layers, different numbers of hidden units, etc. For real-world applications, it is therefore important to use a third data set to evaluate the final performance of the system. Why?

A. The error on the third data set provides a better (unbiased) estimate of the true generalization error.

B. The error on the third data set is used to choose between lots of different possible systems.

C. It's not important; testing on the second data set indicates the generalization performance of the system.

17- A perceptron is trained on the data shown above, which has two classes (shown by the symbols ‘+’ and ‘o’). After how many iterations of training will the perceptron converge and the decision line reach a steady state?

A. A few iterations.

B. A large number of iterations.

C. It will not converge.

18- A perceptron with a unipolar binary activation function has two inputs with weights w1 = 0.2 and w2 = −0.5, and a bias weight w3 = −0.2 (the bias input is fixed at −1). For the training example x = [0, 1]^T, the desired output is d = 1. Using the learning rule Δw = c (d − y) x with learning constant c = 0.2, what are the new values of the weights and threshold after one training step with this input?

A. w1 = 0.2, w2 = −0.3, w3 = −0.4.

B. w1 = 0.4, w2 = −0.5, w3 = −0.4.

C. w1 = 0.2, w2 = −0.5, w3 = −0.2.

D. w1 = 0.2, w2 = −0.3, w3 = 0.
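
The single training step in question 18 can be checked numerically. The sketch below applies the rule Δw = c (d − y) x to the augmented input vector, with the bias input −1 appended as a third component. The function names and the convention that a net input of exactly zero gives an output of 1 are assumptions made for illustration.

```python
def unipolar_step(net):
    """Unipolar binary activation: 1 if net >= 0, else 0 (tie convention assumed)."""
    return 1 if net >= 0 else 0


def perceptron_step(weights, inputs, desired, c):
    """Apply one perceptron update, w_new = w + c * (d - y) * x.

    Returns the new weights together with the net input and the output
    that were computed from the old weights.
    """
    net = sum(w * x for w, x in zip(weights, inputs))
    y = unipolar_step(net)
    new_weights = [w + c * (desired - y) * x for w, x in zip(weights, inputs)]
    return new_weights, net, y


if __name__ == "__main__":
    weights = [0.2, -0.5, -0.2]      # w1, w2 and the bias weight w3
    augmented_x = [0.0, 1.0, -1.0]   # x = [0, 1] with the bias input -1 appended
    new_weights, net, y = perceptron_step(weights, augmented_x, desired=1, c=0.2)
    print("net =", round(net, 3), " y =", y)
    print("new weights =", [round(w, 3) for w in new_weights])
```

The printed weights can be compared directly against options A to D.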

19- What is a feature in a pattern classification problem?

A. An output variable.

B. An input variable.

C. A hidden variable or weight.

20- What is a decision boundary in a pattern classification problem with two input variables?

A. A histogram defined for each image in the training set.

B. A line or curve which separates two different classes.

C. A plane or hypercurve defined in the space of possible inputs.

21- Which of the following statements is true for the winner-takes-all learning method?

A. The learning rate is a function of the distance of the adapted units from the winning unit.

B. The weights of the winning unit k are adapted by Δwk = η (x − wk), where x is the input vector.

C. The weights of the neighbours j of the winning unit are adapted by Δwj = ηj (x − wj), where ηj < η and j ≠ k.
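
The update Δwk = η (x − wk) quoted in the options above can be sketched for a single competitive learning step. This is an illustration under stated assumptions: the winner is taken to be the unit whose weight vector is closest to the input in Euclidean distance (a maximum dot product is another common choice), and the function name `winner_takes_all_step` and the example values are hypothetical.

```python
import math


def winner_takes_all_step(weights, x, eta):
    """Perform one competitive-learning step.

    weights: list of weight vectors, one per unit.
    Returns (k, updated), where k is the index of the winning unit and
    updated is a copy of weights in which only unit k has moved toward x.
    """
    # Winner selection by smallest Euclidean distance (an assumption).
    k = min(range(len(weights)), key=lambda i: math.dist(weights[i], x))
    updated = [list(w) for w in weights]
    # Move the winner a fraction eta of the way toward the input vector.
    updated[k] = [wk + eta * (xi - wk) for wk, xi in zip(weights[k], x)]
    return k, updated


if __name__ == "__main__":
    units = [[0.0, 0.0], [1.0, 1.0]]
    winner, new_units = winner_takes_all_step(units, x=[0.9, 0.7], eta=0.5)
    print("winner:", winner)
    print("weights after update:", new_units)
```

With 0 < η < 1 the winning weight vector moves part of the way toward the input, so repeated presentations pull each unit toward the cluster of inputs it wins.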