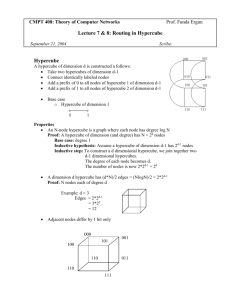

Running head: HYPERCUBE MULTIPROCESSORS Jomo Kenyatta University of Agriculture and Technology ICS 2410: Parallel Systems Hypercube Multiprocessors Group 3 SHARON ACHIENG SCT211-0025/2017 KENNEDY MUIA SCT211-0202/2017 ALLAN WASEGA SCT211-0758/2016 ANNETTE IRUNGU SCT211-0002/2017 ELIJAH KYULE SCT211-0014/2017 AMOS MBEKI SCT211-0466/2017 Dr. Eunice Njeri February 21, 2022 1 HYPERCUBE MULTIPROCESSORS 2 Table of Contents TABLE OF CONTENTS ..............................................................................................................................................2 ABSTRACT ..................................................................................................................................................................3 INTRODUCTION .........................................................................................................................................................4 DEFINITIONS ..............................................................................................................................................................4 HYPERCUBE INTERCONNECTION .........................................................................................................................6 THE WHY BEHIND HYPERCUBE MULTIPROCESSORS ......................................................................................7 OPERATING PRINCIPLES OF HYPERCUBE MULTIPROCESSORS ....................................................................8 PROCESSOR ALLOCATION IN HYPERCUBE MULTIPROCESSORS .................................................................9 MAJOR STEPS IN PROCESSOR ALLOCATION ............................................................................................................. 11 TYPES OF PROCESSOR ALLOCATION POLICIES ......................................................................................................... 11 APPROACHES TO PROCESSOR ALLOCATION ............................................................................................................. 11 Top-down Approach ........................................................................................................................................... 12 Bottom-up Approach ........................................................................................................................................... 12 TASK MIGRATION IN HYPERCUBE MULTIPROCESSORS ............................................................................... 13 MAJOR STEPS IN TASK MIGRATION ......................................................................................................................... 14 FAULT-TOLERANT HYPERCUBE MULTIPROCESSORS ................................................................................... 15 HYPERCUBE MULTIPROCESSORS: ROUTING ALGORITHMS ........................................................................ 18 SOURCE VS. DISTRIBUTED ROUTING ........................................................................................................................ 20 DETERMINISTIC VS. ADAPTIVE ROUTING ................................................................................................................. 20 MINIMAL VS. NONMINIMAL ROUTING...................................................................................................................... 21 TOPOLOGY DEPENDENT VS. TOPOLOGY AGNOSTIC ROUTING .................................................................................. 21 ILLUSTRATION: ADAPTIVE ROUTING ....................................................................................................................... 21 PERFORMANCE MEASURES FOR HYPERCUBE MICROPROCESSORS ......................................................... 22 SPEEDUP .................................................................................................................................................................. 22 Limits to Speedup ................................................................................................................................................ 23 EFFICIENCY .............................................................................................................................................................. 23 SCALABILITY ........................................................................................................................................................... 24 CASE STUDY: CEDAR MULTIPROCESSOR ......................................................................................................... 24 CEDAR MACHINE ORGANIZATION ........................................................................................................................... 24 Cedar Cluster ...................................................................................................................................................... 25 Memory Hierarchy .............................................................................................................................................. 25 Importance of Cedar ........................................................................................................................................... 26 CONCLUSION ........................................................................................................................................................... 26 REFERENCES ............................................................................................................................................................ 28 HYPERCUBE MULTIPROCESSORS 3 Abstract Various parallel computing machines have been developed over the years to achieve the main goal of faster processing times at the lowest cost. One of these computers are hypercube multiprocessors, which comprise D = 2d processing elements (PEs). Hypercubes were a popular technology in the late 1980s, but they proved to be too expensive for the performance merits they offered. Regardless, there is still a lot to learn from the technology to inform the field of parallel computing. This paper focuses on the architecture, processor allocation methods, and task migration algorithms for these machines. It is established that activities within a hypercube are coordinated through messages among processors. Therefore, various message routing algorithms were developed to ensure that this process was undertaken effectively. Additionally, this paper looks at the Cedar Multiprocessor, an early-generation hypercube that provides a practical view regarding how the technology worked. Overall, this research shows the advances that parallel processing has made over the years, with each technology contributing to making the field better. Keywords: hypercube multiprocessors, processing elements, processor allocation, parallel computing HYPERCUBE MULTIPROCESSORS 4 Hypercube Multiprocessors Introduction Hypercubes were a popular class of parallel machines that emerged in the late 1980s and early 1990s (Matloff, 2011). A hypercube computer consisted of several ordinary Intel processors – each processor had memory and some serial I/O hardware for connections to its neighboring processors. However, hypercubes proved to be too expensive for the performance merits they offered. That combined with the small market share they enjoyed, hypercube computers were slowly phased out. Nonetheless, they are essential for historical reasons as old techniques are often recycled in the computing field. The algorithms driving hypercube computers have become immensely popular among general machines. Therefore, this report will discuss the architecture, processor allocation methods, and task migration algorithms for these machines: Definitions A hypercube of dimension, d, consists of D = 2d processing elements (PEs) and is called a d-cube. Processing elements describe processor-memory pairs that enjoy fast serial I/O connections. The PEs in a d-cube will often have numbers 0 through D-1, meaning for the 4-cube shown in Figure 1 below where D=16 (24), the PEs would be numbered 0000 through 1111, and the PE numbered 1011 would have four neighbors – 0011, 1111, 1001, and 1010 – flipping a given bit defines neighbor pairs across a specific dimension (Ostrouchov, 1987). HYPERCUBE MULTIPROCESSORS 5 Figure 1: Hypercubes of dimensions 0 through 4 (Ostrouchov, 1987). At times, it is easier to build up cubes from lower-dimensional cases using a simple twostep procedure: 1. Take a d-dimensional cube and duplicate it – the two duplicates can be referred to as subcubes 0 and 1. Therefore, a subcube is a sub-graph of a hypercube that preserves the hypercube's properties (Rai et al., 1995). 2. For each pair of same-numbered PEs, add 0 to the front of the label for the PE in subcube 0 and 1 for the PE in subcube 1 (Matloff, 2011). Create a link between the two same-numbered PEs. HYPERCUBE MULTIPROCESSORS 6 Figure 2 below shows how a 4-cube may be constructed from two 3-cubes: Figure 2: How a 4-cube can be constructed from two 3-cubes (Matloff, 2011). Hypercube Interconnection A hypercube/binary d-cube multiprocessor represents a loosely-coupled system with D=2d interconnected processors. Each processor comprises a PE in the cube, and the direct communication paths among neighbor processors represent the cube's edges. Additionally, two different d-bit binary addresses may be assigned to each processor so that every processor address varies from each of its d neighbors by 1-bit position. Hence, a Boolean d-cube consists of 2d vertices, such that an edge exists between any two vertices if their binary labels differ by 1. Each link between two PEs acts as a dedicated connection, meaning if a PE needs to communicate with a non-neighbor PE, multiple links (usually as many as d of them) have to be traversed. This phenomenon may lead to significantly high communication costs since routing messages through a d-cube structure may require from as many as one to d links from the source HYPERCUBE MULTIPROCESSORS 7 to destination PE (Matloff, 2011). Also, a hypercube employs the multiple-instruction multiple-data (MIMD) architecture by allowing direct point-to-point communication between PEs (Das et al., 1990). Since each PE has its local memory, shared global memory is unnecessary, implying that messages are passed over the hypercube network. The Why behind Hypercube Multiprocessors A multiprocessor's speedup is one of the most objective methods of measuring a parallel processing system's progress. Therefore, the desire for cost-effective computing became the ultimate driving force for the hypercube industry. Advancing microelectronic technology by developing the hypercube was considered progress towards the universal objective of boosting machine architecture and performance. The hypercube computer was more impressive because it separated brute force from skillful design (Chen et al., 1988). The hypercube was meant to address the Von Neumann Bottleneck without disrupting the current computer industry. The existing multiprocessor systems were plagued by generality, implying that a machine model that attained better performance proportional to a multiprocessor's implementation costs was necessary. Additional features that make hypercube multiprocessors valuable and popular as general-purpose parallel machines include: The hypercube topology is isotropic, meaning a d-cube appears the same from each PE, leading to edge and node symmetry (Das et al., 1990). There are no edges, borders, or apexes where a specific PE needs to be considered differently, and no particular resource may cause a bottleneck. The geometry leading to a logarithmic diameter also provides a beneficial tradeoff between the high connectivity costs of a completely-connected scheme and significant HYPERCUBE MULTIPROCESSORS 8 diameter issues associated with a ring geometry. A hypercube also supports multiple interconnection topologies, including meshes, rings, and trees, that may be embedded within any d-cube (see Figure 3 below). In keeping with its topology flexibility, a hypercube supports multiple popular algorithms integrated onto a d-cube to direct communication between neighboring PEs (Das et al., 1990). Figure 3: 3-d hypercube embeddings of some interconnection schemes (Ostrouchov, 1987). Lastly, routing messages between non-adjacent PEs is straightforward after dividing the hypercube into smaller cube arrays to ensure multi-programming and simpler faulttolerant designs, as discussed in later sections (Das et al., 1990). For these four reasons, the hypercube is suitable for a wide range of applications and often provides an excellent test subject for parallel algorithms. Operating Principles of Hypercube Multiprocessors HYPERCUBE MULTIPROCESSORS 9 The processor activities in a hypercube parallel computer are coordinated through messages among processors. Consequently, if a message needs to be sent between two nonneighbor PEs, the message is routed through the necessary intermediate PEs. The routing process is simplistic: The message is sent to a neighbor whose binary label is one bit closer to the destination PE (Ostrouchov, 1987). Thus, the path length of such a message is the number of bit positions in which the binary labels of the two PEs differ. Typically, a communication co-processor exists on each PE to free the central processor from too much communication overhead, meaning that messages only pass through the co-processor on each PE. Although all PEs are identical, a separate host processor exists to manage the hypercube PEs. It has communication links with all the PEs and executes its management role loosely by sending messages over these links. The two operating principles mentioned above guarantee that a hypercube can attain a satisfactory balance between the number of channels per PE (degree) and the diameter (maximum path length between any two PEs) (Ostrouchov, 1987). Ideally, a d-cube's interconnection scheme should have a small diameter to ensure fast communication with PEs of a small degree so that the hypercube is easily scalable. It is also important to note that if only a single path exists between any pair of PEs, then a communication bottleneck may occur at the cube's root. Processor Allocation in Hypercube Multiprocessors A hypercube can run multiple separate jobs simultaneously on different subcubes/subgraphs of its processors. As a result, subcube allocation and deallocation techniques are critical as they help maximize processor utilization by minimizing task durations. HYPERCUBE MULTIPROCESSORS 10 An efficient communication scheme in a hypercube involves the PEs performing more computation than communication tasks. Nevertheless, most hypercube architectures comprise PEs primarily involved in communicating messages between neighbors (Ahuja & Sarje, 1995). Therefore, the processor allocation issue in a hypercube is identifying and locating a free subcube that can accommodate a specific request of a particular size while minimizing system fragmentation and maximizing hypercube utilization. The issue also extends to subcube deallocation and the reintegration of these released processors into the set of available hypercube processors. Fragmentation may be internal or external. Internal fragmentation occurs when the allocation scheme fails to recognize available subcubes. In contrast, external fragmentation happens when a sufficient number of available processors cannot form a subcube large enough to accommodate the incoming task request as they are scattered. External fragmentation is depicted in Figure 4 below, where four available nodes cannot form a 2-dimensional cube, meaning if a task requiring such a cube arrives, it is either rejected or queued: Figure 4: An example of hypercube fragmentation (Chen & Shin, 1990). HYPERCUBE MULTIPROCESSORS 11 Major Steps in Processor Allocation 1. Determining subcube size to accommodate the incoming task. In this stage, each incoming request is represented using a graph in which a PE denotes a task module, and each link represents the inter-module communication (Ahuja & Sarje, 1995). 2. Locating the subcube of the size determined in step (1) within the hypercube multiprocessor. The second step establishes whether a subcube can accommodate the request given specific constraints. Types of Processor Allocation Policies a) Processor allocation may occur online or offline: The operating system collects many requests before subcube allocation in an offline dynamic (Rai et al., 1995). On the other hand, in online allocation, each subcube request is addressed or ignored immediately it arrives regardless of the number of subsequent requests (Rai et al., 1995). This strategy requires the largest-sized subcubes to be maintained after every allocation and relinquished after processing. b) Processor allocation may also be static or dynamic: A policy is static if the only incoming requests are for assignment, meaning deallocation is not considered at any time. But a dynamic technique handles both allocation and relinquishment depending on job arrival and completion, leading to better resource utilization. However, finding a perfect dynamic policy is difficult due to the increased operational overhead. Approaches to Processor Allocation HYPERCUBE MULTIPROCESSORS 12 Top-down Approach Maintains a set or list to keep track of the free available cubes. A standard top-down method is the free list strategy which updates a list for each hypercube dimension by perfectly recognizing all the subcubes (Yoon et al., 1991). A free list records all disjoint but free subcubes, meaning that a requested subcube can only be allocated from the list. Although the allocation procedure is simple, the deallocation step is complicated. A deallocated cube has to be compared with all idle, adjacent cubes to form a higher dimension subcube – leading to a very high worst time complexity. Secondly, Yoon et al. (1991) proposed another top-down technique [Heuristic Processor Allocation (HPA)] strategy, which uses an undirected graph. The graph's vertex represents the available system subcubes and is used to deallocate and allocate subcubes. A heuristic algorithm is also used to ensure the available subcube is as large as possible during deallocation. The HPA technique minimizes external fragmentation and reduces search time by modifying only related PEs in the graph when addressing an allocation or reallocation request. Bottom-up Approach 2d allocation bits help keep track of the availability of all hypercube processors, meaning the allocation policy examines the allocation bits when forming subcubes (Yoon et al., 1991). A binary tree structure records the allocated and free nodes, which requires extensive storage and extended execution time to collapse the binary tree representation. The collapsed tree successively forms a subcube by bringing together distant PEs starting from the lowest dimension. Since subcube computation and information are distributed among idle nodes, the host's burden is reduced, increasing the subcube recognition capacity. Disadvantages of the bottom-up approach: HYPERCUBE MULTIPROCESSORS 13 It is more challenging to eradicate external fragmentation as the allocation policy only relies on allocation bit data. Also, it is tedious to search for free subcubes under heavy system load. The three central elements that impact the superiority of a top-down or bottom-up allocation scheme are subcube recognition capability, time complexity associated with subcube allocation, and optimality (if the policy can allocate a d-cube when there at least 2d free PEs) (Yoon et al., 1991). Optimality can either be static or dynamic: A statically optimal scheme can allocate a d-cube with at least 2d free PEs, given that none of the allocated subcubes are released. In contrast, a dynamically optimal policy can accommodate processor allocation and relinquishment at any time. Task Migration in Hypercube Multiprocessors As mentioned above, subcube allocation and deallocation may result in a fragmented hypercube even when a sufficient number of PEs is available since the available cubes may not form a subcube large enough to accommodate an incoming task request. Such fragmentation results in poor utilization of a hypercube's PEs, implying that task migration is necessary to address this problem. Task migration involves compacting and relocating active tasks within the hypercube at one end to make for larger subcubes at the other end. It is essential to note that considerable dependence exists between task migration and the subcube allocation policy (Chen & Shin, 1989). The relationship is necessary as tasks can only be relocated so that the allocation technique being used can detect subcube availability. Since a collection of occupied subcubes is called a configuration, it is essential to establish the goal configuration to which a given fragmented hypercube must change after active HYPERCUBE MULTIPROCESSORS 14 task relocation. The portion of a functional task located at each PE after the migration is called a task module, implying that a moving step describes a PE's action to move its task module to neighboring processors. Thus, the cost of task migration is measured using the moving steps required since task migrations between different source and destination cubes can occur in parallel. However, for parallel task migration to occur, it is critical to avoid deadlocks - adding an extra stage during task migration requires finding a routing procedure that uses the shortest deadlock-free route while formulating the PE-mapping between each pair of source and destination subcubes. Major Steps in Task Migration Determining a goal configuration. The allocation scheme used must be statically optimal before a goal configuration is established. Determining the node mapping between source and destination subcubes. Determining the shortest deadlock-free routing for moving task requests (Chen & Shin, 1990). Complete parallelism and minimal deadlock instances are possible when using stepwise disjoint paths for task migration. Additional considerations during task migration: An active task must be suspended when moved to another subcube location (Chen & Shin, 1990). The task then resumes execution upon reaching its destination Task migration induces operational overhead, which degrades system performance. As such, determining an optimal threshold is necessary – such a value is arrived at by HYPERCUBE MULTIPROCESSORS 15 compromising between task admissibility and resulting operational overhead. The host processor tracks every PE's status, meaning it can easily design an optimal threshold to decide when to execute task migration. Fault-Tolerant Hypercube Multiprocessors Hypercubes generally have high resilience since their parallel algorithms can be partitioned into multiple tasks run on separate PEs' processors to attain high performance. Such computers can run numerous tasks concurrently and individually on each processor within them. For instance, in a d-cube, its extensive connectivity prevents non-faulty processors from disconnection, meaning if the number of faulty PEs does not exceed d, the surviving network's diameter can be bounded reasonably (Latifi, 1990). Nonetheless, the hypercube's universality may be compromised if the topology changes after a fundamental algorithm is reorganized in an inefficient and non-uniform way. As such, a single PE's failure may destroy one dimension, meaning the loss of as few as two PEs could compromise more than one dimension, which limits the subcube's reliability. Without making any adjustments, the best choice when a link fails in a d-cube is to extract the operational (d-1)-cube from the damaged section (Latifi, 1990). However, this leaves more than half the nodes and connections within the network underused. Thus, alternative fault-tolerance techniques are necessary to utilize the hypercube's inherent redundancy and symmetry to reconfigure the architecture: 1. Use of hypercube free dimension A hypercube is partitioned into several subcubes such that each partition contains at most one faulty PE using the free dimension concept. A dimension in any hypercube is considered free if no pair of faulty PEs exists across that dimension (Abd-El-Barr & Gebali, 2014). For example, figure 5 demonstrates this idea using four different hypercube structures with differing free HYPERCUBE MULTIPROCESSORS 16 dimensions. Figure 5: Illustration of the free-dimension concept. In (a), all three dimensions are free. In (b), only dimensions 2 and 3 are free. In (c), only dimensions 1 and 3 are free. In (d), only dimensions 1 and 2 are free (Abd-El-Barr & Gebali, 2014). 2. Use of spanning trees (STs) STs are used to configure a specific hypercube to bypass faulty PEs. The initial step is to construct an ST for a given d-cube (Figure 6 depicts a 4-cube and its corresponding ST in Figure 7). HYPERCUBE MULTIPROCESSORS 17 Figure 6: A 4-cube multiprocessor with one faulty node [1010] (Abd-El-Barr & Gebali, 2014). Figure 7: A spanning tree of a 4-cube (Abd-El-Barr & Gebali, 2014). The ST construction is followed by reconfiguring the hypercube's architecture. First, the faulty PE is removed from the ST, and the children of this removed PE are reconnected (Abd-ElBarr & Gebali, 2014). Figure 8 shows the removal of the PE 1010 from the ST and the reconnection of its children. The reconfiguration defines new parents and children to circumvent HYPERCUBE MULTIPROCESSORS 18 the faulty PE, 1010 - for example, in figure 8, the new children of the PE 1001 become 1000 and 1011, while the parent to 1011 becomes 1001. Figure 8: How a spanning tree is reconfigured under a faulty PE (Abd-El-Barr & Gebali, 2014). Hypercube Multiprocessors: Routing Algorithms Message routing in large interconnection networks has attracted much attention, with various approaches being proposed. Some of the fundamental distinctions among routing algorithms involve the length of the messages injected into the network, the static or dynamic nature of the injection model, particular assumptions on the semantic of the messages, the architecture of the network and router, and the degree of synchronization in the hardware (Pifarre et al. 1994). In terms of message length, several issues have been studied concerning the ways to handle long messages (of potentially unknown size) and concise messages (typically of 150 to 300 bits). In packet-switching routing, the messages are of constant (and small) size, and they are stored entirely in every node they visit. In wormhole routing, messages of unknown size are routed in the network. These messages are never wholly stored in a node. Only pieces of the messages, called flits, are buffered when routing. HYPERCUBE MULTIPROCESSORS 19 According to Holsmark (2009), two subjects of long-standing interest in routing are deadlock and live-lock freedom. In a deadlock, packets are involved in a circular wait that cannot be resolved, whereas, in live-lock, packets wander in the network forever without reaching the destination. Another vital property that networks strive to avoid is starvation, whereby packets never get service in a node. Several strategies can be applied to handle deadlocks. Deadlock avoidance techniques, for example, ensure that deadlock never can occur. On the other hand, deadlock recovery schemes allow the formation of deadlocks but resolve them after the occurrence. Live-lock may occur in networks where packets follow paths that do not always lead them closer to the destination. A standard solution to live-lock is to prioritize traffic based on hop-counters. For each node a packet traverses, a hop counter is incremented. If several packets request a channel, the one with the most considerable hop-counter value is granted access. This way, packets that have long circled the network will receive higher priority and eventually reach the destination. An example of starvation is when packets with higher priorities constantly outrank lower priority packets in a router. As a result, the lower prioritized packets are stopped from advancing in the network. Starvation is a critical aspect when designing the router arbitration mechanism. Various routing algorithms can be used in hypercube processors to address the above issues. Routing is the mechanism that determines message routes, which links and nodes each message will visit from a source node to a destination node (Holsmark, 2009). A routing algorithm is a description of the method that determines the route. Standard classifications of routing algorithms are: - Source vs. Distribution routing HYPERCUBE MULTIPROCESSORS - Deterministic vs. adaptive (Static vs. Dynamic) routing - Minimal vs. nonminimal routing - Topology dependent vs. topology agnostic routing. 20 Source vs. Distributed Routing This classification is based on where the routing decisions are made. In source routing, the source node decides the entire path for a packet and appends it as a field in the packet. After leaving the source, routers switch the packet according to the path information. As routers are passed, route information that is no longer necessary may be stripped off to save bandwidth. Source routing allows for a straightforward implementation of switching nodes in the network. However, the scheme does not scale well since header size depends on the distance between source and destination. Allowing more than a single path is also inconvenient using source routing. In distributed routing, routes are formed by decisions at each router. Each router decides whether it should be delivered to the local resource or forwarded to neighboring routers based on the packet destination address. Distributed routing requires that more information is processed in network routers. On the other hand, the header size is smaller and less dependent on network size. It also allows for a more efficient way of adapting the route, depending on network and traffic conditions, after a packet has left the source node. Deterministic vs. Adaptive Routing Another popular classification divides routing algorithms into deterministic (oblivious, static) or adaptive (dynamic) types. Deterministic routing algorithms provide only a single fixed path between a source node and a destination node. This scheme allows for the simple implementation of network routers. HYPERCUBE MULTIPROCESSORS 21 Adaptive routing allows several paths between a source and a destination. The final path selection is determined at run-time, often depending on network traffic status. Minimal vs. Nonminimal Routing Route lengths determine if a routing algorithm is minimal or nonminimal. A minimal algorithm only permits paths that are the shortest possible, also known as profitable routes. A nonminimal algorithm can temporarily allow paths that, in this sense, are non-profitable. Even though nonminimal routes result in a longer distance, the time for a packet transmission can be reduced if the longer route avoids congested areas. Nonminimal paths may also be required for fault tolerance. Topology Dependent vs. Topology Agnostic Routing Several routing algorithms are developed for specific topologies. Some are only usable on regular topologies like meshes, whereas others are explicitly created for irregular topologies. There is also a particular area of fault-tolerant routing algorithms, designed to work if the topology is changed, where a regular topology is turned into an irregular topology. Illustration: Adaptive Routing A desirable feature of routing algorithms is adaptivity, that is, the ability of messages to use multiple paths toward their destinations. In this way, alternative paths can be followed based on factors that are local to each node, such as conflicts arising from messages competing for the same resources, faulty nodes, or links. For example, consider the 16-node hypercube shown in Figure 1. A message starting from node (0, 0, 0, 0) having (1, 0, I, 1) as its destination can move to any of the following nodes as its first step: (I, 0, 0, 0), (0, 0. I, 0), and (0.0,0,1). An adaptive routing function will allow more than one choice among the three possible nodes. The decision about which node is selected is based on an arbitration mechanism that optimizes available resources. If one of the involved links out of (0. 0, 0,0) is congested or faulty, a message can use HYPERCUBE MULTIPROCESSORS 22 another link to reach its destination. Thus, adaptivity may yield more throughput by distributing the traffic over the network's resources better. Figure 7: The paths available in the 4-hypercube between node (0, 0, 0, 0) and node (1, 0, I, 1) (Pifarre et al. 1994). Performance Measures for Hypercube Microprocessors Speedup In hypercube microprocessors, as in all parallel systems, time and memory are the dominant performance metrics. The faster process is preferred between alternate methods that use different amounts of memory, provided there is enough memory to run both ways. There is no advantage to using less memory than might be made available to the application unless less memory results in a reduction in execution time. Performance measurements in a similar domain have been made more complex by the desire to know how much faster an application is running on a parallel computer. What benefit arises from the use of parallelism, or how much is the speedup that results from parity. According to Sahni and Thanvantri (2002), there are varied definitions of speedup, one of which is actual analytical speedup. This metric can be computed HYPERCUBE MULTIPROCESSORS 23 using the number of operations or workload. For example, the effective speed of the computer may vary with the workload as larger workloads may require more memory and may eventually require the use of slower secondary memory. Table 1: Connected components average actual speedup on a nCube hypercube. Limits to Speedup Since each problem instance may be assumed to be solvable by a finite amount of work, it follows that by increasing the number of processors indefinitely, a point is reached when there is a lack of any work to be distributed to the newly added processors. No further speedup is possible (Sahni & Thanvantri, 2002). Therefore, to attain ever-increasing amounts of speedup, one must solve more extensive instances of workloads. Perhaps the oldest and most quoted observation about limits to attainable speedup is Amdahl's law, which observes that if a problem contains both serial (s) and parallel (p) components, then the observed speedup will be (s + p) / s + p/P, where P is the speedup of a given processor. Efficiency HYPERCUBE MULTIPROCESSORS 24 Efficiency is a performance metric closely related to speedup. It is the ratio of speedup and the number of processors P. Depending on the variety of speedup used, and one gets a different sort of efficiency. Since speedup can exceed P, efficiency can exceed 1(Sahni & Thanvantri, 2002). However, like speedup, efficiency should not be used as a performance metric independent of run time. The reason for this is that since speedup favors slow processors, so does efficiency. Additionally, the easiest way to get a relative efficiency of 1 is to use P = 1, which results in no speedup. Scalability The term scalability of a parallel system refers to the change in performance of the parallel system as the problem size and computer size increase. Intuitively, a parallel system is scalable if its performance continues to improve as the system, both the problem and machine, is scaled in size (Sahni & Thanvantri, 2002). Case Study: Cedar Multiprocessor The Cedar multiprocessor was the first scalable, cluster-based, hierarchical sharedmemory multiprocessor of its kind in the 1980s (Yew, 2011). It was designed and built at the Center for Supercomputing Research and Development (CSRD). The project successfully created a complete, scalable shared-memory multiprocessor system with a working 4-cluster (32processor) hardware prototype, a parallelizing compiler for Cedar Fortran, and an operating system called Xylem for scalable multiprocessor. The Cedar project was started in 1984, and the prototype became functional in 1989 (Yew, 2011). Cedar Machine Organization The organization of Cedar consists of multiple clusters of processors connected through two high-bandwidth single-directional global interconnection networks (GINs) to a globally HYPERCUBE MULTIPROCESSORS 25 shared memory system (GSM). One GIN provides memory requests from clusters to the GSM. The other GIN provides data and responses from GSM back to clusters. Figure 8: Cedar machine organization (Yew, 2011). Cedar Cluster In each cluster, there are eight processors, called computational elements (CEs). Those eight CEs are connected to a four-way interleaved shared cache through an 8 x 8 crossbar switch and four ports to a global network interface (GNI) that provide access to GIN and GSM. On the other side of the shared cache is a high-speed shared bus connected to multiple cluster memory modules and interactive processors (IPs). IPs handle input/output and network functions. Memory Hierarchy HYPERCUBE MULTIPROCESSORS 26 The 4 GB physical memory address space of Cedar is divided into two halves between the cluster memory and the GSM - 64 MB of GSM and 64 MB of cluster memory in each cluster on the Cedar prototype. It also supports a virtual memory system with a 4 KB page size. GSM could be directly addressed and shared by all clusters, but cluster memory is only addressable by the CEs within each cluster. Data coherence among multiple copies of data in different cluster memories is maintained explicitly through software by either programmer or the compiler. The GSM is double-word interleaved and aligned among all global memory modules. Each GSM module has a synchronization processor that could execute each atomic Cedar synchronization instruction issued from a CE and staged at GNI. Importance of Cedar Cedar had many features that were later used extensively in large-scale multiprocessors systems that could speed up the most time-consuming part of many linear systems in large-scale scientific applications, such as: 1. Software-managed cache memory to avoid costly cache coherence hardware support. 2. Vector data prefetching to cluster memories for hiding long memory latency 3. Parallelizing compiler techniques that take sequential applications and extract task-level parallelism from their loop structures. 4. Language extensions that included memory attributes of the data variables to allow programmers and compilers to manage data locality more easily. 5. And parallel dense/sparse matrix algorithms. Conclusion Hypercubes were a popular class of parallel computers that have had a significant impact on parallel computing. Generally, a hypercube is an arrangement of several ordinary processors, each with its memory and serial I/O hardware for connections with neighboring processors. The HYPERCUBE MULTIPROCESSORS 27 processors can be arranged in various topologies, such as rings, trees, and meshes, each with advantages and disadvantages. However, underlying each arrangement is the desire that drives all parallel systems: to achieve maximum processing output at the least cost. The processor activities in a hypercube parallel computer are coordinated through messages among processors. Therefore, the issues of deadlock and live-lock prevention are essential in a hypercube. Different message routing algorithms have been implemented to overcome these issues, including source vs. distribution, static vs. dynamic, minimal vs. nonminimal, and topology dependent vs. topology agnostic routing approaches. These algorithms have varying strengths and weaknesses, but, overall, they seek to enhance the three performance measures of hypercubes: speedup, efficiency, and scalability. While hypercubes may no longer be implemented commercially, they have been the foundation of many modern-day parallel computing systems. HYPERCUBE MULTIPROCESSORS 28 References Abd-El-Barr, M., & Gebali, F. (2014). Reliability analysis and fault tolerance for hypercube multi-computer networks. Information Sciences, 276, 295-318. Ahuja, S., & Sarje, A. K. (1995). Processor allocation in extended hypercube multiprocessor. International Journal of High- Speed Computing, 7(04), 481-488. Chen, M. S., & Shin, K. G. (1989, April). Task migration in hypercube multiprocessors. In Proceedings of the 16th annual international symposium on Computer architecture (pp. 105-111). Chen, M. S., & Shin, K. G. (1990). Subcube allocation and task migration in hypercube multiprocessors. IEEE Transactions on Computers, 39(9), 1146-1155. Chen, M., DeBenedictis, Y. E., Fox, G., Li, J., & Walker, Y. D. (1988). Hypercubes are GeneralPurpose Multiprocessors with High Speedup. CALTECH Report. Das, S. R., Vaidya, N. H., & Patnaik, L. M. (1990). Design and implementation of a hypercube multiprocessor. Microprocessors and Microsystems, 14(2), 101-106. Holsmark, R. (2009). Deadlock free routing in mesh networks on chip with regions (Doctoral dissertation, Linköping University Electronic Press) Latifi, S. (1990). Fault-tolerant hypercube multiprocessors. IEEE transactions on reliability, 39(3), 361-368. Matloff, N. (2011). Programming on parallel machines. University of California, Davis. Ostrouchov, G. (1987, March). Parallel computing on a hypercube: an overview of the architecture and some applications. In Computer Science and Statistics, Proceedings of the 19th Symposium on the Interface (pp. 27-32). HYPERCUBE MULTIPROCESSORS 29 Pifarre, G. D., Gravano, L., Denicolay, G., & Sanz, J. L. C. (1994). Adaptive deadlock-and live lock-free routing in the hypercube network. IEEE Transactions on Parallel and Distributed Systems, 5(11), 1121-1139 Rai, S., Trahan, J. L., & Smailus, T. (1995). Processor allocation in hypercube multiprocessors. IEEE transactions on parallel and distributed systems, 6(6), 606-616. Sahni, S., & Thanvantri, V. (1996). Parallel computing: Performance metrics and models. IEEE Parallel and Distributed Technology, 4(1), 43-56 Yew, P-C. (2011). Cedar multiprocessor. In Padua D. (eds) Encyclopedia of Parallel Computing. Springer, Boston, MA. https://doi.org/10.1007/978-0-387-09766-4_112 Yoon, S. Y., Kang, O., Yoon, H., Maeng, S. R., & Cho, J. W. (1991, December). A heuristic processor allocation strategy in hypercube systems. In Proceedings of the Third IEEE Symposium on Parallel and Distributed Processing (pp. 574-581). IEEE.