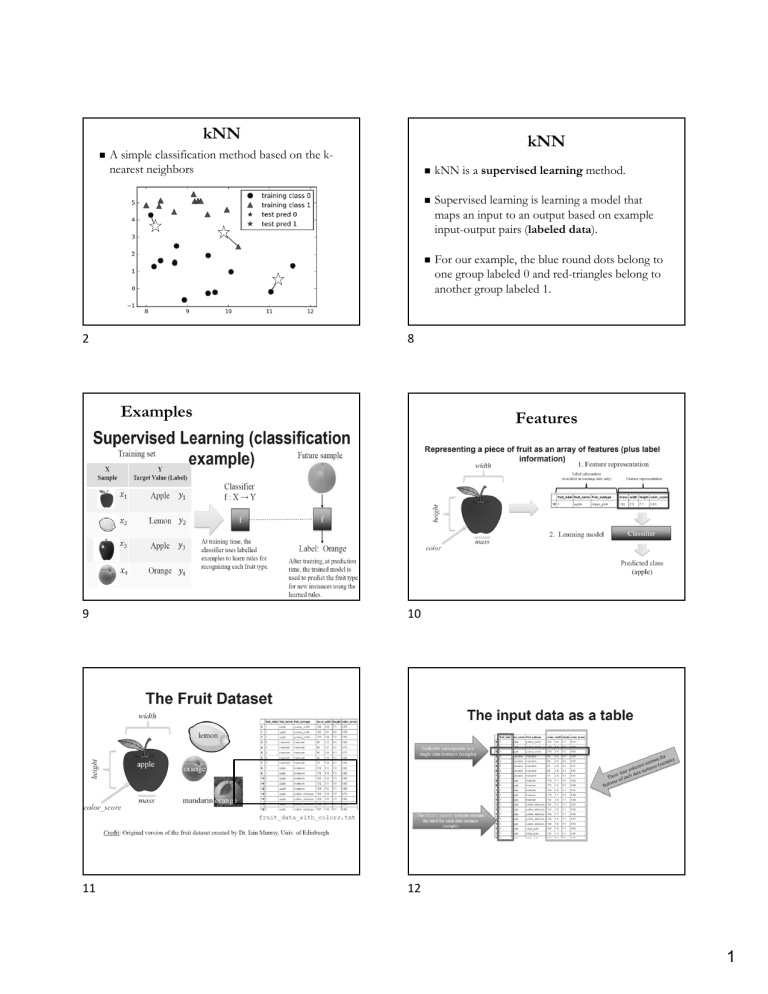

kNN

A simple classification method based on the k nearest neighbors.

kNN is a supervised learning method. Supervised learning means learning a model that maps an input to an output from example input-output pairs (labeled data). In our example, the blue round dots belong to one group, labeled 0, and the red triangles belong to another group, labeled 1.

[Figure labels: Examples, Features]

K-nearest neighbor classifier

• A distance metric:
  Euclidean distance (L2-norm): $d(x, y) = \sqrt{\sum_i (x_i - y_i)^2}$
  Manhattan distance (L1-norm): $d(x, y) = \sum_i |x_i - y_i|$
  Chebyshev distance (L∞-norm): $d(x, y) = \max_i |x_i - y_i|$
  Minkowski distance (Lp-norm): $d(x, y) = \left(\sum_i |x_i - y_i|^p\right)^{1/p}$
• How many 'nearest' neighbors to look at? 1, 3 or 5
• Method for aggregating the classes of neighbor points: simple majority vote
• Optional weighting function on the neighbor points

Two questions follow. How do we implement the algorithm so that finding the neighbors can be done efficiently? Is kNN a good model to use for the task?

K-nearest neighbor classifier (cont’d)

• A distance metric: Hamming distance (the Manhattan distance applied to binary vectors) or cosine similarity/distance
• How many 'nearest' neighbors to look at? 3, 5? (depends)
• Method for aggregating the classes of neighbor points: simple majority vote, or a stricter threshold such as more than 2/3
• Optional weighting function on the neighbor points

Slide credit: Alexander Ihler

Use a validation set to pick K.

[Figure label: Too “complex”]

k-d tree

A 2-d tree example.

k-d Tree (cont’d)

• Every node (except leaves) represents a hyperplane that divides the space into two parts.
• Points to the left (right) of this hyperplane, P_left (P_right), form the left (right) sub-tree of that node.

As we move down the tree, we divide the space along axis-aligned hyperplanes, usually (but not always) alternating the axis:

• Split by x-coordinate: split by a vertical line that has (ideally) half the points left of or on it, and half right.
• Split by y-coordinate: split by a horizontal line that has (ideally) half the points below or on it, and half above.
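The alternating median split described above can be sketched in a few lines of Python. This is a minimal illustration, not the slides' own implementation: the names `KDNode` and `build_kdtree` are hypothetical, the split axis is assumed to cycle strictly with depth, and points that tie with the median on the split coordinate may land on either side.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, ...]


@dataclass
class KDNode:
    """One node of the tree: a splitting point plus the axis it splits on."""
    point: Point                      # median point stored at this node
    axis: int                         # index of the coordinate used for the split
    left: Optional["KDNode"] = None   # points before the median on `axis`
    right: Optional["KDNode"] = None  # points after the median on `axis`


def build_kdtree(points: Sequence[Point], depth: int = 0) -> Optional[KDNode]:
    """Recursively build a k-d tree by splitting at the median.

    Assumption: the axis cycles x, y, x, y, ... (in 2-d) with depth.
    """
    if not points:
        return None
    axis = depth % len(points[0])                 # alternate the split axis
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2                       # median index
    return KDNode(
        point=ordered[mid],
        axis=axis,
        left=build_kdtree(ordered[:mid], depth + 1),
        right=build_kdtree(ordered[mid + 1:], depth + 1),
    )


# Illustrative 2-d data (made up for this sketch).
points = [(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)]
tree = build_kdtree(points)
```

Sorting at every level keeps the sketch short at the cost of O(n log² n) build time; a linear-time median selection would be the usual refinement.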
k-d Tree - Example

• Split by x-coordinate: split by a vertical line that has approximately half the points left of or on it, and half right.
• Split by y-coordinate: split by a horizontal line that has approximately half the points below or on it, and half above.

[Figures: successive splits of the plane and the corresponding tree, with nodes labeled by split axis (x or y)]

How to use k-d Tree to find 3 nearest neighbors

Ball-Tree Example

The algorithm divides the data points into two clusters. Each cluster is encompassed by a hypersphere.

Build big balls instead of boxes:

• Pick a random point p1.
• Find the point furthest from p1, call it p2.
• Find the point furthest from p2, call it p3; p2 and p3 are likely the furthest pair.
• Find the median split along the line connecting p2 and p3.
• Find the centroid of the left side and the centroid of the right side.

Curse of Dimensionality

How good is kNN learning?

• Evaluating a classifier and a regression model: accuracy, false positives, false negatives, etc.
• Data leakage problem in machine learning: when the data used to train a machine learning algorithm includes the information you are trying to predict.

Summary

• K-Nearest Neighbor is the most basic classifier and the simplest to implement.
• Cheap at training time, expensive at test time.
• Unlike other methods we’ll see later, it naturally works for any number of classes.
• Pick K through a validation set; use approximate methods for finding neighbors.
• Success of classification depends on the amount of data and the meaningfulness of the distance function (also true for other algorithms).

Model evaluation

Confusion matrix

• Accuracy = (total # of correct predictions) / (total # of instances) = (TP + TN)/(P + N) = 8/13
• Sensitivity = TPR = TP/P = 5/8
• Specificity = TNR = TN/N = 3/5
• Precision = PPV = TP/(TP+FP) = 5/7

Demo
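The evaluation figures quoted above can be reproduced from the four confusion-matrix counts. The sketch below is illustrative only: the function name `classification_metrics` is hypothetical, and the counts FN = 3 and FP = 2 are not stated directly in the slides but are inferred from TP = 5, P = 8, TN = 3 and N = 5. Exact fractions are used so that the results match the slide values without rounding.

```python
from fractions import Fraction


def classification_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Compute basic metrics from confusion-matrix counts.

    Assumes every denominator is non-zero (at least one actual positive,
    one actual negative, and one predicted positive).
    """
    p = tp + fn            # actual positives
    n = tn + fp            # actual negatives
    return {
        "accuracy": Fraction(tp + tn, p + n),
        "sensitivity": Fraction(tp, p),       # true positive rate (TPR)
        "specificity": Fraction(tn, n),       # true negative rate (TNR)
        "precision": Fraction(tp, tp + fp),   # positive predictive value (PPV)
    }


# Counts inferred from the slide: TP=5, P=8 -> FN=3; TN=3, N=5 -> FP=2.
metrics = classification_metrics(tp=5, fn=3, fp=2, tn=3)
for name, value in metrics.items():
    print(f"{name}: {value} ({float(value):.3f})")
```

Returning `Fraction` values rather than floats keeps the comparison with hand-computed ratios such as 8/13 exact; converting with `float(value)` gives the usual decimal form.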