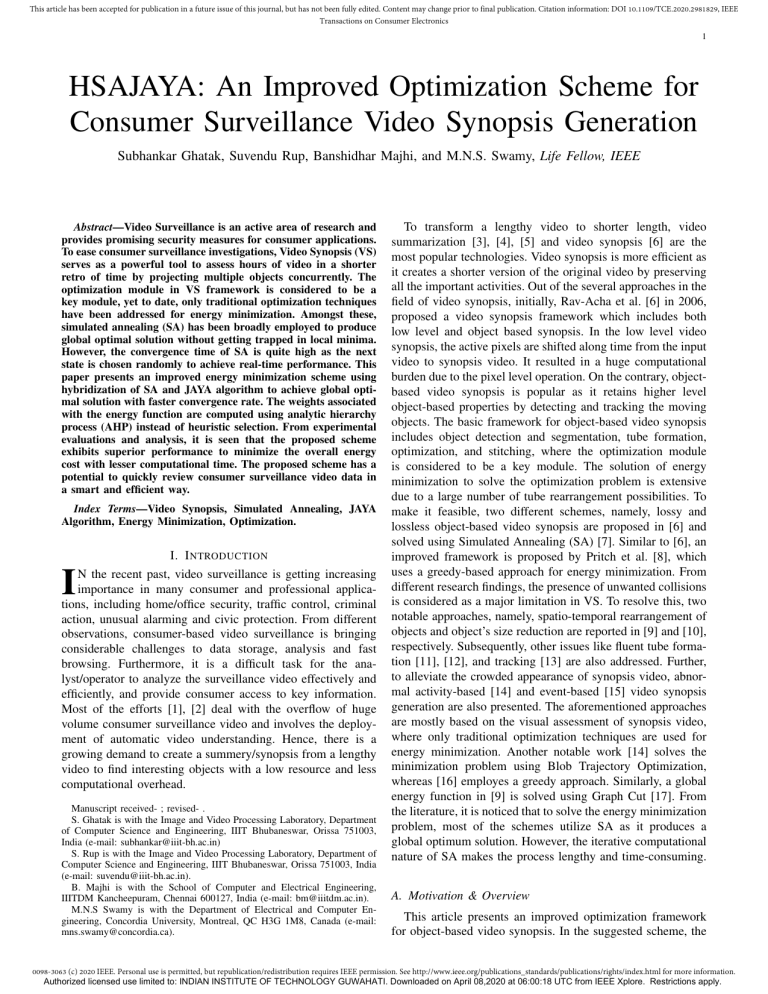

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TCE.2020.2981829, IEEE Transactions on Consumer Electronics

HSAJAYA: An Improved Optimization Scheme for Consumer Surveillance Video Synopsis Generation

Subhankar Ghatak, Suvendu Rup, Banshidhar Majhi, and M.N.S. Swamy, Life Fellow, IEEE

Abstract: Video surveillance is an active area of research and provides promising security measures for consumer applications. To ease consumer surveillance investigations, video synopsis (VS) serves as a powerful tool for reviewing hours of video in a much shorter span of time by displaying multiple objects concurrently. The optimization module is considered a key module of the VS framework, yet to date only traditional optimization techniques have been applied to its energy minimization. Among these, simulated annealing (SA) has been widely employed to produce a globally optimal solution without getting trapped in local minima. However, because SA chooses the next state randomly, its convergence time is high, which hinders real-time performance. This paper presents an improved energy minimization scheme that hybridizes SA with the JAYA algorithm to achieve a globally optimal solution with a faster convergence rate. The weights associated with the energy function are computed using the analytic hierarchy process (AHP) instead of heuristic selection. Experimental evaluation and analysis show that the proposed scheme achieves a lower overall energy cost with less computational time. The proposed scheme has the potential to enable quick, smart, and efficient review of consumer surveillance video data.

Index Terms: Video Synopsis, Simulated Annealing, JAYA Algorithm, Energy Minimization, Optimization.

I.
INTRODUCTION

In the recent past, video surveillance has gained increasing importance in many consumer and professional applications, including home/office security, traffic control, crime monitoring, abnormal-event alarms, and civic protection. Consumer video surveillance poses considerable challenges for data storage, analysis, and fast browsing. Furthermore, it is difficult for an analyst or operator to analyze surveillance video effectively and efficiently and to give consumers access to key information. Most efforts [1], [2] to cope with the huge volume of consumer surveillance video rely on automatic video understanding. Hence, there is a growing demand for creating a summary/synopsis of a lengthy video that reveals the objects of interest with low resource usage and computational overhead.

Manuscript received- ; revised- . S. Ghatak is with the Image and Video Processing Laboratory, Department of Computer Science and Engineering, IIIT Bhubaneswar, Orissa 751003, India (e-mail: subhankar@iiit-bh.ac.in). S. Rup is with the Image and Video Processing Laboratory, Department of Computer Science and Engineering, IIIT Bhubaneswar, Orissa 751003, India (e-mail: suvendu@iiit-bh.ac.in). B. Majhi is with the School of Computer and Electrical Engineering, IIITDM Kancheepuram, Chennai 600127, India (e-mail: bm@iiitdm.ac.in). M.N.S. Swamy is with the Department of Electrical and Computer Engineering, Concordia University, Montreal, QC H3G 1M8, Canada (e-mail: mns.swamy@concordia.ca).

Video summarization [3], [4], [5] and video synopsis [6] are the most popular technologies for condensing a lengthy video. Video synopsis is more efficient, as it creates a shorter version of the original video while preserving all the important activities. Among the several approaches to video synopsis, Rav-Acha et al.
[6] were the first, in 2006, to propose a video synopsis framework, which includes both low-level and object-based synopsis. In low-level synopsis, active pixels are shifted in time from the input video to the synopsis video, which imposes a heavy computational burden owing to the pixel-level operations. In contrast, object-based video synopsis is popular because it retains higher-level object properties by detecting and tracking the moving objects. The basic framework for object-based video synopsis includes object detection and segmentation, tube formation, optimization, and stitching, of which the optimization module is considered the key module. Solving the underlying energy minimization problem is computationally expensive because of the large number of possible tube rearrangements. To make it feasible, lossy and lossless object-based video synopsis schemes were proposed in [6] and solved using simulated annealing (SA) [7]. Similar to [6], Pritch et al. [8] proposed an improved framework that uses a greedy approach for energy minimization.

Unwanted collisions between objects are widely regarded as a major limitation of VS. To resolve this, two notable approaches, namely spatio-temporal rearrangement of objects and object size reduction, are reported in [9] and [10], respectively. Subsequently, other issues such as fluent tube formation [11], [12] and tracking [13] were also addressed. Further, to alleviate the crowded appearance of synopsis videos, abnormal-activity-based [14] and event-based [15] video synopsis generation were also presented. These approaches are mostly evaluated through visual assessment of the synopsis video and use only traditional optimization techniques for energy minimization. For instance, [14] solves the minimization problem using blob trajectory optimization, whereas [16] employs a greedy approach.
Similarly, a global energy function in [9] is solved using graph cut [17]. The literature shows that most schemes use SA for energy minimization because it can reach a globally optimal solution. However, the iterative nature of SA makes the process lengthy and time-consuming.

A. Motivation & Overview

This article presents an improved optimization framework for object-based video synopsis. In the suggested scheme, the objective function of the optimization module is solved using the proposed hybridization of SA [7] and the JAYA algorithm [18], named HSAJAYA, to achieve a globally optimal solution. The total energy cost function may not be convex, because the individual cost functions that compose it are not always convex. For this reason, SA is commonly chosen, as it is well known for reaching a global optimum. Because SA selects the next state randomly in each iteration, it eventually reaches the global optimum, but its convergence time is fairly high. JAYA, on the other hand, requires no algorithm-specific control parameters, which lets it reach an optimal solution with fewer function evaluations.

0098-3063 (c) 2020 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information. Authorized licensed use limited to: INDIAN INSTITUTE OF TECHNOLOGY GUWAHATI. Downloaded on April 08,2020 at 06:00:18 UTC from IEEE Xplore. Restrictions apply.
However, JAYA narrows the search space because of the one-directional nature of its update (i.e., only the best and worst solutions decide the next state of the population). Therefore, in the proposed HSAJAYA, JAYA is adopted in the generation step, so that the previous best and worst solutions decide the next state of the population, whereas the acceptance of a new population is decided probabilistically, as in SA.

In the proposed scheme, the energy function is computed as a weighted sum of three objectives: minimization of activity loss, termed the activity cost; minimization of collisions between objects caused by temporal rearrangement, termed the collision cost; and minimization of violations of the original temporal relationships between objects, termed the temporal consistency cost. The associated weights signify the importance of the corresponding objectives. In general, weight values are chosen intuitively or heuristically, but the proposed scheme uses the analytic hierarchy process (AHP) [19] to compute them.

B. Contributions

The contributions of the proposed scheme are threefold:
1) An improved Consumer Surveillance Management System (CSMS) with low computational overhead is proposed, providing an optimization framework for object-based video synopsis in which energy minimization is performed by the proposed HSAJAYA.
2) AHP-based weight determination is employed to assign the weights of the objective function.
3) The efficacy of the proposed scheme is validated through qualitative and quantitative measures along with a complexity analysis.

C. Organization

The rest of the paper is organized as follows: Section II presents the proposed framework, Section III gives the simulation results, and Section IV gives the concluding remarks.

II. CONSUMER SURVEILLANCE MANAGEMENT SYSTEM

The proposed scheme operates as follows.
Live video streams are captured by the consumer surveillance cameras and fed to the Consumer Surveillance Management System (CSMS). The CSMS is central to the consumer surveillance system and provides storage and Video Synopsis Services. Based on the consumer's time query to the CSMS, the Video Synopsis Services fetch the desired video footage from the database and transfer it to the VS Generation module for processing. In VS generation, the moving objects in the input video are first detected and segmented. Next, the segmented objects are tracked to form a tube for each object. Then, the three objectives of the optimization module are formulated and solved using the proposed HSAJAYA approach. Finally, the objects, placed according to the result of the energy minimization, are blended with the background video to form the final video synopsis. The processing core of the CSMS is implemented on a consumer electronic device such as a laptop or standard PC, and the generated synopsis video can be delivered to consumer devices such as smartphones (for easy processing), tablets, and HDTVs (for easy visualization). Fig. 1 depicts the flow diagram of the proposed work.

[Fig. 1: CSMS (Database & Archive; Video Synopsis Services) serving consumer electronic devices (smartphone, desktop, PDA, tablet, HDTV); Video Synopsis Generation pipeline: Input Video → Object Detection and Segmentation → Tube Formation → Optimization Framework (Proposed HSAJAYA) → Stitching → Video Synopsis.]
Fig. 1. Flow diagram of the proposed video synopsis framework with the Consumer Surveillance Management System.

A. Object Detection & Segmentation

Illumination variation and the misclassification of shadows, ghosts, and noise as foreground can cause serious problems such as object merging, object shape distortion, and even missed objects. Therefore, a robust multi-layer background subtraction algorithm [20] is applied to extract the foreground along with a background model.
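To make the foreground-extraction idea concrete, the following sketch thresholds the absolute difference between a frame and a background model and then groups foreground pixels into 4-connected blobs with bounding boxes. It is a deliberately simplified stand-in, not the multi-layer algorithm of [20]: it has no LBP features or photometric-invariant color terms, morphology is replaced by a minimum-area noise filter, and the function names, the threshold of 30, and the grayscale list-of-lists frame format are all assumptions.

```python
from collections import deque


def foreground_mask(frame, background, threshold=30):
    """Foreground where |frame - background| exceeds a fixed threshold (grayscale)."""
    return [[abs(f - b) > threshold for f, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def blob_bounding_boxes(mask, min_area=1):
    """Group 4-connected foreground pixels into blobs; return (x0, y0, x1, y1) boxes.

    Coordinates are inclusive pixel indices. Blobs with fewer than min_area
    pixels are dropped as noise (a crude substitute for morphological cleanup).
    """
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, xs, ys = deque([(x, y)]), [], []
            while queue:  # breadth-first flood fill of one connected component
                cx, cy = queue.popleft()
                xs.append(cx)
                ys.append(cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(xs) >= min_area:
                boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes
```

Each returned box corresponds to one detected object in one frame; linking such boxes over time is the job of the tracker described next.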
Let $OV(x, y, t)$ and $BGI(x, y, t_0)$ denote an input video and the corresponding background frame, respectively, where $x$ and $y$ are the spatial coordinates, $t$ is the temporal index, and $t_0 < t$. The applied algorithm combines local binary pattern (LBP) texture features with photometric-invariant color measurements in RGB color space to cope with illumination variation. Morphological dilation and erosion are also applied to each resulting foreground mask to obtain a more accurate result. Finally, the noise-free foreground masks are passed to blob analysis for object detection. In the proposed methodology, every detected moving object is represented by a precise and independent bounding box.

B. Tube Formation

In video synopsis terminology, the tracked representation of each independent object is called a tube. Since multiple objects are present in a surveillance video, a robust multi-object tracking strategy is required for tube generation. In the proposed scheme, a Kalman filter-based algorithm [21] is employed for tracking. The set of all connected bounding boxes in the time domain is considered the track of a single moving object, which is temporally rearranged in the optimization module.

C.
Optimization Framework

This section describes the optimization framework in which the proposed hybrid energy minimization is formulated. The prime objectives are to preserve the activities of all moving objects, to reduce the collisions among them, and to maintain the temporal consistency of the synopsis video with respect to the original one. The temporal position of each object is determined by the proposed energy minimization scheme, which yields minimum activity loss, collision, and temporal consistency violation. These desired properties constitute the energy of the video synopsis framework, as described in (1). Finally, the objects are stitched at their new positions into the background video to produce the final synopsis.

The objective function (also termed the energy function) of the proposed optimization framework is formulated in terms of $\mu$, a mapping of objects from the input video to the synopsis, and $E$, the total energy cost:

$$E(\mu) = E_a(\mu) + \omega_1 E_c(\mu) + \omega_2 E_t(\mu) \tag{1}$$

where $\omega_1$ and $\omega_2$ are the associated weights, and $E_a(\mu)$, $E_c(\mu)$, and $E_t(\mu)$ represent the penalty costs for activity loss, collision, and temporal inconsistency, respectively. To include all activities in the synopsis video, no weight is assigned to $E_a(\mu)$. The total energy cost function may not always be convex, because the collision cost it contains is an area function (namely, the standard dot product).

1) The Energy Cost:

Activity Cost: As stated in (1), the activity cost $E_a(\mu)$ represents the penalty for not preserving the original activity in the synopsis video and is defined by

$$E_a(\mu) = \sum_{ob \in OB} E_a(ob_s) = \sum_{ob \in OB} \;\; \sum_{\hat{o} \in ob_s \,\wedge\, \hat{o} \notin \text{synopsis}} \text{AbsDiff}(\hat{o}) \tag{2}$$

where $ob$ is an element of the set $OB$ of all moving-object tubes, and $ob_s$ is the set containing the mappings of the elements of $ob$ to new positions in the synopsis video.
Here, $\hat{o}$ is an element of $ob_s$ that has not been included in the synopsis video. In (2), $\text{AbsDiff}(\cdot)$ evaluates the absolute difference between the element $\hat{o}$ and its corresponding background:

$$\text{AbsDiff}(\hat{o}) = \sum_{(x, y) \in bbox(\hat{o})} \left\| OV(x, y, t_{\hat{o}}) - BGI(x, y, t_{\hat{o}}) \right\| \tag{3}$$

where $t_{\hat{o}}$ is the frame number of $\hat{o}$ and $bbox(\cdot)$ denotes the bounding box function. Thus, $E_a(\mu)$ is the sum of all active pixels that are not included in the synopsis video. In a lossless scenario, i.e., when all activities are preserved in the synopsis video, the activity cost is zero.

Collision Cost: In (1), the term $E_c(\mu)$ penalizes collisions among the moving objects. Collisions look unrealistic because two or more objects overlap, so a minimum number of collisions ensures a visually comfortable synopsis video, as suggested in [6] and [10]. Let $ob_s^m$ and $ob_s^n$ denote any two moving objects in the synopsis video, mapped from the original video objects $ob_m$ and $ob_n$, respectively. The collision cost is defined as

$$E_c(\mu) = \sum_{ob_m, ob_n \in OB} E_c(ob_s^m, ob_s^n) \tag{4}$$

where

$$E_c(ob_s^m, ob_s^n) = \sum_{\hat{o}_m \in ob_s^m,\; \hat{o}_n \in ob_s^n} \text{Area}\big(bbox(\hat{o}_m) \cap bbox(\hat{o}_n)\big) \tag{5}$$

in which $\text{Area}(\cdot)$ computes the area of the overlap between the bounding boxes of $\hat{o}_m \in ob_s^m$ and $\hat{o}_n \in ob_s^n$. If the synopsis video has no collisions, the cost is zero; otherwise, it is the sum of all overlapping areas.

Temporal Consistency Cost: In (1), the term $E_t(\mu)$ represents the temporal consistency cost, which maintains the chronological order of appearance of the objects. During video synopsis generation, the temporal relationships among moving objects may be disturbed by the applied temporal shifts, as noted in [6] and [10].
To penalize temporal shifts that violate these relationships, the following situations are considered:
1. Two objects that share some common frames;
2. Two objects that do not share any common frames. This situation has two sub-cases: 2a. the temporal order of appearance of the two objects is violated, and 2b. the temporal order of appearance of the two objects is preserved.

Interactions among moving objects are especially valuable in surveillance, and considering the above situations preserves these interactions in the resulting synopsis.

Consider the first situation: the interaction between two moving objects can be measured probabilistically through their spatial relationship. Let $ob_m$ and $ob_n$ be two moving objects that share some common frames in the original video. Their spatial relationship $\Delta$ is then established as

$$\Delta(ob_m, ob_n) = \exp\left(-\min_{t \in t_{ob_m} \cap t_{ob_n}} \delta(ob_m, ob_n, t) \,/\, \sigma\right) \tag{6}$$

where $\delta(ob_m, ob_n, t)$ is the Euclidean distance between the objects $ob_m$ and $ob_n$ in the $t$th frame of the input video, and $\sigma$, which lies in the range 0 to 100%, represents the level of spatial interaction between $ob_m$ and $ob_n$. A greater value of $\sigma$ assigns a higher cost to a violation of temporal consistency, so the optimization process tries to minimize this cost even when there is very little chance of interaction between the objects. Hence,
in this scenario the cost allocation is inappropriate because of the high value of $\sigma$. On the other hand, a low value of $\sigma$ assigns a low temporal consistency cost, which is ineffective in the minimization process, so a high possibility of interaction between objects might be neglected. Hence, in our experiments, an intermediate value of $\sigma = 40$ is used, inspired by [10]. Besides the spatial relationship, the temporal relationship $\tau$ between objects is measured in terms of an absolute difference as

$$\tau(ob_m, ob_n) = \exp\left(\left\| (\vec{t}_{ob_m} - \vec{t}_{ob_n}) - (\vec{t}_{ob_s^m} - \vec{t}_{ob_s^n}) \right\|\right) \tag{7}$$

where $\vec{t}_{ob_m}$ and $\vec{t}_{ob_n}$ are the entry frame indices of $ob_m$ and $ob_n$, respectively, in the input video, while $\vec{t}_{ob_s^m}$ and $\vec{t}_{ob_s^n}$ are those of $ob_m$ and $ob_n$ in the resulting synopsis.

For the second situation, the temporal violation $\tau^*$ between $ob_m$ and $ob_n$ is measured by another metric:

$$\tau^*(ob_m, ob_n) = (\vec{t}_{ob_m} - \vec{t}_{ob_n}) \times (\vec{t}_{ob_s^m} - \vec{t}_{ob_s^n}) \tag{8}$$

A positive value of $\tau^*$ implies that the temporal order of $ob_m$ and $ob_n$ is preserved in the synopsis, which results in zero penalty. Hence, the complete temporal consistency cost over the situations considered is

$$E_t(\mu) = \begin{cases} \Delta(ob_m, ob_n) \times \tau(ob_m, ob_n) & \text{if } t_{ob_m} \cap t_{ob_n} \neq \emptyset \\ \exp\left(\left\| \vec{t}_{ob_s^m} - \vec{t}_{ob_s^n} \right\| / \gamma\right) & \text{otherwise, if } \tau^*(ob_m, ob_n) \le 0 \\ 0 & \text{otherwise} \end{cases} \tag{9}$$

where $\gamma$, which lies in the range 0 to 100%, defines the time interval within which events interact temporally. In [10], $\gamma$ is set to 10 because the videos considered there contain many objects with little interaction. Because the objects in our videos interact more, $\gamma$ is set to 20.
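As an illustration only, the cost terms in (2), (4) and (5), and (6) to (9) can be evaluated for one candidate set of temporal shifts as sketched below. Several choices are assumptions not fixed by the text: each tube is a hypothetical dict holding its original entry frame (`start`), one `(x0, y0, x1, y1)` box per consecutive frame (`boxes`), and a precomputed per-frame AbsDiff value from (3) (`activity`); a shift is the tube's entry frame in the synopsis; overlaps are counted only between elements that land in the same synopsis frame; $\delta$ is measured between box centers; and $E_t(\mu)$ is taken as the sum of (9) over all unordered object pairs.

```python
import math
from itertools import combinations


def box_center(b):
    x0, y0, x1, y1 = b
    return ((x0 + x1) / 2, (y0 + y1) / 2)


def overlap_area(a, b):
    """Area of the intersection of two (x0, y0, x1, y1) boxes; 0 if disjoint."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0, w) * max(0, h)


def activity_cost(tubes, shifts, synopsis_len):
    """Eq. (2): activity of tube elements that fall outside the synopsis."""
    cost = 0.0
    for tube, s in zip(tubes, shifts):
        for k, act in enumerate(tube["activity"]):
            if not 0 <= s + k < synopsis_len:
                cost += act
    return cost


def collision_cost(tubes, shifts):
    """Eqs. (4)-(5): summed box overlap of tube pairs in shared synopsis frames."""
    cost = 0.0
    for (tm, sm), (tn, sn) in combinations(zip(tubes, shifts), 2):
        for k, box_m in enumerate(tm["boxes"]):
            kn = sm + k - sn  # element of tube n shown in the same synopsis frame
            if 0 <= kn < len(tn["boxes"]):
                cost += overlap_area(box_m, tn["boxes"][kn])
    return cost


def pair_temporal_cost(tm, tn, sm, sn, sigma=40.0, gamma=20.0):
    """Eqs. (6)-(9) for one pair of tubes with synopsis entry frames sm and sn."""
    dm, dn = tm["start"], tn["start"]
    shared = range(max(dm, dn), min(dm + len(tm["boxes"]), dn + len(tn["boxes"])))
    if len(shared) > 0:
        # Situation 1: the objects co-occur in the original video.
        min_dist = min(math.dist(box_center(tm["boxes"][t - dm]),
                                 box_center(tn["boxes"][t - dn])) for t in shared)
        spatial = math.exp(-min_dist / sigma)               # Eq. (6)
        temporal = math.exp(abs((dm - dn) - (sm - sn)))     # Eq. (7)
        return spatial * temporal
    # Situation 2: no common frames; penalize only a reversed (or tied) order.
    if (dm - dn) * (sm - sn) <= 0:                           # Eq. (8)
        return math.exp(abs(sm - sn) / gamma)
    return 0.0


def temporal_cost(tubes, shifts, sigma=40.0, gamma=20.0):
    return sum(pair_temporal_cost(tm, tn, sm, sn, sigma, gamma)
               for (tm, sm), (tn, sn) in combinations(zip(tubes, shifts), 2))


def energy(tubes, shifts, synopsis_len, w1, w2):
    """Eq. (1): E = Ea + w1 * Ec + w2 * Et."""
    return (activity_cost(tubes, shifts, synopsis_len)
            + w1 * collision_cost(tubes, shifts)
            + w2 * temporal_cost(tubes, shifts))
```

Calling `energy(tubes, shifts, synopsis_len, 0.8123, 0.1877)` applies the weighting of (12). Because (7) exponentiates a raw frame difference, `math.exp` raises `OverflowError` once that difference exceeds roughly 709 frames, so a practical implementation would need to rescale or cap that term.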
Here, an exponential cost is assigned in terms of the absolute temporal distance, so the cost grows exponentially with the amount of temporal relationship violation.

2) Analytic Hierarchy Process (AHP) based weight determination: AHP [19] is an effective tool for complex decision problems. It allows the user to make the best decision by settling the given priorities, and its decision-making process is defined by evaluating the geometric means of the considered parameters. In this work, AHP is used to obtain the weights associated with the corresponding costs in the objective function. AHP starts with a pairwise comparison matrix $A_{n \times n}$, where $n$ is the number of evaluation criteria. Its element $a_{ij}$ represents the relative importance of the $i$th criterion with respect to the $j$th criterion: if $a_{ij} > 1$, the $i$th criterion is more significant than the $j$th; if $a_{ij} < 1$, it is less significant; and if $a_{ij} = 1$, both are equally significant. A numerical scale of 1 to 9 is used to measure relative importance. After constructing $A_{n \times n}$, the relative normalized weights $\omega_i$ are computed as

$$\omega_i = \frac{\sqrt[n]{\prod_{j=1}^{n} a_{ij}}}{\sum_{i=1}^{n} \sqrt[n]{\prod_{j=1}^{n} a_{ij}}} \tag{10}$$

where $\sum_{i=1}^{n} \omega_i = 1$. Weight values are usually chosen heuristically. In a lossy scenario, the weights associated with activity, collision, and temporal consistency sum to 1. Since the proposed scheme considers a lossless scenario, no weight is assigned to the activity cost. Considering $E_c(\mu)$ to be absolutely more significant than $E_t(\mu)$, $E_c(\mu)$ is assigned the label 9, while 1 represents the significance of $E_t(\mu)$, and the corresponding pairwise comparison matrix is

$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix} = \begin{bmatrix} 1 & 9 \\ 1/9 & 1 \end{bmatrix} \tag{11}$$

By applying (10) to (11), we get $\omega_1 = 0.8123$ and $\omega_2 = 0.1877$.
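Equation (10) is the row geometric-mean form of AHP priorities and can be sketched as below (the function name is hypothetical). Evaluated literally on the matrix in (11), it gives $\omega_1 = 0.9$ and $\omega_2 = 0.1$ rather than the 0.8123 and 0.1877 reported above; the text does not state which comparison values or normalization produce the reported weights, so this sketch only illustrates (10) itself.

```python
import math


def ahp_weights(matrix):
    """Eq. (10): normalized geometric means of the rows of a pairwise comparison matrix."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("pairwise comparison matrix must be square")
    geo = [math.prod(row) ** (1.0 / n) for row in matrix]  # n-th root of each row product
    total = sum(geo)
    return [g / total for g in geo]


# Matrix (11): collision cost rated 9 against temporal consistency.
A = [[1, 9],
     [1 / 9, 1]]
w1, w2 = ahp_weights(A)
print(round(w1, 4), round(w2, 4))  # prints: 0.9 0.1
```

The same function handles the three-criterion lossy case, where a 3 × 3 matrix over activity, collision, and temporal consistency yields three weights that sum to 1.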
Hence, (1) can be rewritten as the resulting objective function

$$E(\mu) = E_a(\mu) + 0.8123 \times E_c(\mu) + 0.1877 \times E_t(\mu) \tag{12}$$

3) Energy Minimization: Energy minimization is computationally demanding because of the large number of possible solutions. Some of the reported works [6], [10], [22], [23] employ simulated annealing and genetic algorithms for minimization. The present proposal aims to reach an optimal cost in less time. The minimization is applied over the set of all possible mappings $\mu$ to find the temporal shifts that move the tubes along the time axis. In the proposed scheme, the lower bound of every temporal shift is the first frame of the synopsis video, whereas the upper bound is the absolute difference between the synopsis length and the corresponding tube length. In this work, the synopsis length equals the length of the longest tube. Under these conditions, an efficient hybrid optimization algorithm, HSAJAYA, built from SA and the JAYA algorithm, is proposed to solve (12).

In simulated annealing [24], starting from a state with energy $E_0$, a new state is generated by shifting a randomly selected particle. If $E_0 > E$, where $E$ is the energy of the newly generated state, the new state is accepted and the next state is generated in the same way. Otherwise, if $E_0 \le E$, the new state is accepted with probability

$$\exp\left(-(E - E_0) / (k_b T)\right) \tag{13}$$

where $k_b$ is the Boltzmann constant and $T$ is the current temperature. This probabilistic acceptance strategy is known as the Metropolis criterion. As the Metropolis algorithm uses a single fixed temperature, Kirkpatrick et al. [7] generalized it by incorporating an annealing schedule that reduces the temperature. Starting from a high initial temperature, the temperature decreases according to the annealing schedule, and the process iterates until the system freezes in a state with globally minimum energy.
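The Metropolis test in (13) combined with a geometric annealing schedule can be sketched as follows. This is a generic illustration, not the authors' implementation: the function names, the `neighbor` callback, and $k_b = 1$ are assumptions, while the defaults $T_0 = 1000$ and a cooling factor of 0.99 mirror the values listed in Algorithm 1. Here `e_current` plays the role of $E_0$ and `e_new` the role of $E$.

```python
import math
import random


def metropolis_accept(e_current, e_new, temperature, rng, k_b=1.0):
    """Eq. (13): accept a lower energy outright; otherwise with prob exp(-dE / (k_b T))."""
    if e_new <= e_current:
        return True
    return rng.random() < math.exp(-(e_new - e_current) / (k_b * temperature))


def simulated_annealing(energy, x0, neighbor, t0=1000.0, alpha=0.99, iters=1000, seed=0):
    """Generic SA loop with the geometric cooling schedule T <- alpha * T.

    neighbor(x, rng) must return a candidate state near x.
    Returns the lowest-energy state visited and its energy.
    """
    rng = random.Random(seed)
    x, e = x0, energy(x0)
    best_x, best_e = x, e
    t = t0
    for _ in range(iters):
        cand = neighbor(x, rng)
        e_cand = energy(cand)
        if metropolis_accept(e, e_cand, t, rng):
            x, e = cand, e_cand
            if e < best_e:
                best_x, best_e = x, e
        t *= alpha  # annealing schedule
    return best_x, best_e
```

For instance, `simulated_annealing(lambda v: (v - 7) ** 2, 0, lambda v, r: v + r.choice((-1, 1)))` searches the integers for the minimizer of a toy quadratic by unit steps. Early on, the high temperature lets the walk accept uphill moves freely; as $T$ falls, the search becomes nearly greedy.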
R. Rao [18] proposed a metaheuristic optimization technique named the Jaya algorithm. It operates on a population of size $P_{max}$ (i.e., candidate solutions $k = 1, 2, \ldots, P_{max}$), with $N$ decision variables $j = 1, 2, \ldots, N$ for every individual. In the $m$th iteration, the best and worst solutions among all candidates are denoted by $X^m_{best}$ and $X^m_{worst}$, respectively. Moving toward the best and away from the worst solution in the $m$th iteration, the position $X^m_{k,j}$ of the $k$th individual for the $j$th decision variable is updated as

$$X^m_{k,j} = x^m_{k,j} + r^m_{1,j}\left(X^m_{j,best} - |x^m_{k,j}|\right) - r^m_{2,j}\left(X^m_{j,worst} - |x^m_{k,j}|\right) \tag{14}$$

where $r^m_{1,j}$ and $r^m_{2,j}$ are random numbers drawn from $[0, 1]$ in every $m$th iteration. The updated value $X^m_{k,j}$ is accepted if and only if it yields a better objective function value than $x^m_{k,j}$.

Algorithm 1: Proposed HSAJAYA Algorithm
Input: OV(x, y, t): original video; Tube: object tracks
Output: µ = [x(i,j)]: temporal shifts
1: Initialize the definition parameters: objno (number of moving objects in OV); objlen (length of the corresponding object); maxlen (length of the synopsis video); T = 1000 (initial temperature); e = 0.01 (change in fitness value); τs = 0.99 (temperature schedule); Gen = 1 (iteration counter); nPop = 10 (size of the population); Genmax = 100 (maximum iteration);
2: x(i,j) = randi([0, (maxlen − objlen(j))]);
3: Evaluate the initial energy E(µ[x(i,j)]) by (12);
4: for Gen = 1 to Genmax do
5:   Generation and Evaluation Phase: for i = 1 to nPop do
6:     for j = 1 to objno do
7:       r = rand(1, 2);
8:       X_new(i,j) = x(i,j) + r(1) × (Best(j) − |x(i,j)|) − r(2) × (Worst(j) − |x(i,j)|);
9:     Evaluate E_new(µ[X_new(i,j)]) by (12);
10:  Selection Phase: for i = 1 to nPop do
11:    if E_new ≤ E then
12:      µ[x(i,j)] = µ[X_new(i,j)]; E = E_new;
13:    else
14:      n = rand(1, 1);
15:      if n ≤ exp(−((E_new − E)/E)/T) then
16:        µ[x(i,j)] = µ[X_new(i,j)]; E = E_new;
17:  T = T × τs;
18:  Gen = Gen + 1;

a. HSAJAYA: The detailed steps of the proposed hybrid scheme are presented in Algorithm 1. The parameters in step 1 are initialized according to the defined optimization framework with the objective given in (12).
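The loop of Algorithm 1 can be sketched in Python as follows. It is a hedged reading of the listing, not the authors' code: the function name and signature are hypothetical, each individual keeps its own energy, Best and Worst are taken from the current generation, updated shifts are rounded and clipped to $[0, maxlen - objlen_j]$ (the text does not say how non-integer values from (14) are handled), and the best solution ever seen is returned. The acceptance test uses the relative energy increase of step 15 and skips it when the current energy is already zero, which avoids dividing by zero.

```python
import math
import random


def hsajaya(energy, upper, n_pop=10, gen_max=100, t0=1000.0, tau_s=0.99, seed=0):
    """Sketch of an HSAJAYA-style search over integer temporal shifts.

    energy: callable mapping a list of shifts (one per object) to a cost, e.g. Eq. (12).
    upper:  upper[j] = maxlen - objlen_j, so each shift lies in [0, upper[j]].
    Returns the best shifts seen and their cost.
    """
    rng = random.Random(seed)
    n_obj = len(upper)
    pop = [[rng.randint(0, u) for u in upper] for _ in range(n_pop)]
    costs = [energy(x) for x in pop]
    k = min(range(n_pop), key=costs.__getitem__)
    best_x, best_e = list(pop[k]), costs[k]
    t = t0
    for _ in range(gen_max):
        # JAYA guidance: best and worst individuals of the current generation.
        best = pop[min(range(n_pop), key=costs.__getitem__)]
        worst = pop[max(range(n_pop), key=costs.__getitem__)]
        # Generation phase, Eq. (14); round and clip so shifts stay valid frames.
        trial = []
        for x in pop:
            new = []
            for j in range(n_obj):
                r1, r2 = rng.random(), rng.random()
                v = x[j] + r1 * (best[j] - abs(x[j])) - r2 * (worst[j] - abs(x[j]))
                new.append(min(max(round(v), 0), upper[j]))
            trial.append(new)
        # Selection phase: keep if not worse, else SA-style probabilistic acceptance.
        for i, new in enumerate(trial):
            e_new, e_old = energy(new), costs[i]
            if e_new <= e_old:
                accept = True
            elif e_old > 0:
                # Relative increase, as in step 15 of Algorithm 1.
                accept = rng.random() <= math.exp(-((e_new - e_old) / e_old) / t)
            else:
                accept = False  # e_old == 0 is already optimal for non-negative costs
            if accept:
                pop[i], costs[i] = new, e_new
                if e_new < best_e:
                    best_x, best_e = list(new), e_new
        t *= tau_s  # annealing schedule
    return best_x, best_e
```

With the defaults $T = 1000$ and $\tau_s = 0.99$ from Algorithm 1, the temperature after 100 generations is still about 366, so uphill moves are accepted almost always; lowering `t0` makes the selection behave more like the greedy JAYA rule.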
Here, an initial population $x_{i,j}$ is assigned for each decision variable $j$ as a random integer returned by the randi() function in the range from 0 to the absolute difference between the length of the longest object tube and that of the corresponding object tube, where $x_{i,j}$ is the current position of the $i$th individual for the $j$th decision variable. Evaluating the objective function on the initial population determines the initial best population, $Best_j$, and the worst population, $Worst_j$. Inspired by JAYA, the search is then directed toward the best point and away from the worst to reach the optimum solution. Thus, in each $m$th iteration, the updated population $X^{new}_{i,j}$ is obtained from $x_{i,j}$ by (14), using random multipliers $r$ drawn from $[0, 1]$ in each iteration. The new generation is evaluated for activity, collision, and temporal consistency costs, and the objective function value $E(\mu)$ is obtained by (12). The selection of $X^{new}_{i,j}$ is carried out by the probabilistic selection in steps 10 to 16 of Algorithm 1: if the new solution is better than the previous best, it is designated as the best population; otherwise, as in SA, it is accepted on the basis of a probabilistic selection. Finally, the iterations continue until the termination criterion is met, yielding the optimum solution.

Fig. 2. Experimental Setup: 1,2-Video Capture; 3-Processing; 4-Visualization.

D. Stitching

In this step, the resulting temporal shift is applied to the corresponding object tube to place it in the synopsis video. The generated background video and the object tubes are stitched together to produce the final synopsis. Before stitching, a time-stamp is generated for each object activity from its frame numbers and the frame rate and is stitched along with the corresponding object, which makes the synopsis efficient for video browsing.
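The shift-and-stamp part of stitching can be sketched as follows, under stated assumptions: the helper names are hypothetical, time-stamps are rendered as HH:MM:SS of the original video (the text does not fix a format), and `paste_patch` is a naive pixel copy used only to show where an object lands; it is not a substitute for the Poisson image editing [25] used in this work.

```python
def frame_timestamp(frame_no, fps):
    """Original-video time of a frame as HH:MM:SS (whole seconds)."""
    secs = int(frame_no // fps)
    return f"{secs // 3600:02d}:{secs % 3600 // 60:02d}:{secs % 60:02d}"


def shift_tube(orig_start, n_frames, shift, fps):
    """Pair each synopsis frame index of a shifted tube with its original time-stamp."""
    return [(shift + k, frame_timestamp(orig_start + k, fps)) for k in range(n_frames)]


def paste_patch(background, patch, x0, y0):
    """Naive copy of an object patch into a copy of a background frame (no blending)."""
    out = [row[:] for row in background]
    for dy, prow in enumerate(patch):
        out[y0 + dy][x0:x0 + len(prow)] = prow
    return out
```

For example, a tube entering the original video at frame 2700 of a 30 fps stream and shifted to synopsis frame 5 is labeled with the original time 00:01:30.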
In this work, as in other video synopsis generation methods [6], [8], [9], [10], Poisson image editing [25] is applied to blend the object information with the corresponding background frame. This image editing scheme has proven to be an efficient blending tool for removing any undesirable seams in the frames.

III. RESULTS AND DISCUSSION

The experimental setup consists of a laptop with a 64-bit processor with 3 CPU cores at 1.70 GHz and 4 GB of RAM. Surveillance videos are captured by Dome/Day-Night consumer surveillance cameras and processed on the laptop to generate the synopsis. Once the final synopsis is ready, it can be viewed on various consumer electronic display devices. Fig. 2 depicts the experimental setup of the proposed video synopsis framework. To appraise the efficacy of the proposed scheme, extensive experiments are carried out on various benchmark surveillance videos. A representative first frame of each video sequence, along with parameters such as frame rate, video length, and the number of objects present, is given in Table I.

TABLE I
PARAMETERS OF EXPERIMENTAL SURVEILLANCE VIDEOS

| Video Number | Video Length (# Frames) | Frame Rate (fps) | Number of Objects |
|---|---|---|---|
| 2 | 1066 | 30 | 5 |
| 3 | 2688 | 30 | 12 |
| 4 | 300 | 10 | 3 |
Video number 1, "Atrium", is taken from [16]; in it, several moving objects (humans) walk around the field of view in a random manner. Video number 2, "Pedestrians", is taken from the ChangeDetection.Net dataset [26] and includes human activities and bicycle riding as moving objects; the objects move from left to right and from right to left and overlap one another. Video number 3 is obtained from PETS 2001 [27], in which several people walk around alone or in groups, along with moving cars. Finally, video number 4 is taken from the LASIESTA database [28]. The experimental outcomes and analysis of the proposed scheme are presented and discussed experiment-wise as follows.

A. Experiment 1: Evaluation of Object Detection and Segmentation

To evaluate how well the employed multi-layer background subtraction algorithm [20] overcomes the issues discussed in Section II-A, the following experiment is conducted. The employed scheme is visually compared with well-accepted object detection and segmentation techniques in Fig. 3, where A, B, C, and D represent videos 1, 2, 3, and 4, respectively. A(1), B(1), C(1), and D(1) are input frames from the original videos (1st row); the 2nd row shows the corresponding ground truths; and the 3rd to 7th rows show the results obtained by the Gaussian mixture model (GMM) [29], PBAS [30], LOBSTERBGS [31], SuBSENSE [32], and the multi-layer background subtraction algorithm [20], respectively. Fig. 3(D(7)) clearly shows a better segmentation than Fig. 3(D(3) and D(4)), which suffer from noise, and Fig. 3(D(5) and D(6)), which contain ghosting artifacts. Moreover, as Fig. 3(A(7), B(7), C(7), and D(7)) shows, the foreground masks obtained with [20] outperform those of the benchmark schemes.
In addition, a quantitative analysis of the employed algorithm [20] is carried out in terms of Precision, Recall, F1, Similarity, and Percentage of Correct Classification (PCC) for the considered videos; the results are presented in Table II. The detailed mathematical expressions of these performance metrics are available in [33].

Fig. 3. Subjective comparison of the object detection and segmentation phase (columns A-D: videos 1-4; rows (1)-(7): input frame, ground truth, [29], [30], [31], [32], and [20]).

TABLE II
QUANTITATIVE ANALYSIS OF OBJECT DETECTION AND SEGMENTATION

| Video | Algorithm | Precision | Recall | F1 | Similarity | PCC (%) |
|---|---|---|---|---|---|---|
| Video#1 | [29] | 0.319 | 0.665 | 0.431 | 0.455 | 53.42 |
| Video#1 | [30] | 0.486 | 0.556 | 0.518 | 0.475 | 58.95 |
| Video#1 | [31] | 0.488 | 0.529 | 0.507 | 0.488 | 62.35 |
| Video#1 | [32] | 0.725 | 0.645 | 0.682 | 0.595 | 85.34 |
| Video#1 | [20] | 0.875 | 0.896 | 0.885 | 0.795 | 97.45 |
| Video#2 | [29] | 0.487 | 0.723 | 0.581 | 0.428 | 92.52 |
| Video#2 | [30] | 0.795 | 0.389 | 0.522 | 0.468 | 95.26 |
| Video#2 | [31] | 0.848 | 0.792 | 0.819 | 0.734 | 94.07 |
| Video#2 | [32] | 0.644 | 0.726 | 0.682 | 0.527 | 93.53 |
| Video#2 | [20] | 0.925 | 0.814 | 0.865 | 0.948 | 98.70 |
| Video#3 | [29] | 0.628 | 0.885 | 0.734 | 0.516 | 96.25 |
| Video#3 | [30] | 0.792 | 0.625 | 0.698 | 0.573 | 97.37 |
| Video#3 | [31] | 0.878 | 0.541 | 0.669 | 0.426 | 91.85 |
| Video#3 | [32] | 0.745 | 0.628 | 0.681 | 0.553 | 94.52 |
| Video#3 | [20] | 0.926 | 0.803 | 0.860 | 0.839 | 98.35 |
| Video#4 | [29] | 0.338 | 0.398 | 0.365 | 0.262 | 74.86 |
| Video#4 | [30] | 0.675 | 0.604 | 0.637 | 0.482 | 84.29 |
| Video#4 | [31] | 0.592 | 0.697 | 0.640 | 0.498 | 86.78 |
| Video#4 | [32] | 0.789 | 0.812 | 0.800 | 0.675 | 91.24 |
| Video#4 | [20] | 0.977 | 0.918 | 0.946 | 0.927 | 92.74 |

Compared with the other object detection and segmentation algorithms, [20] achieves the highest value of every metric on every video, except for the recall on video number 3 (0.803, versus 0.885 for [29]).

B. Experiment 2: Evaluation of the Proposed Optimization Approach

To validate the effectiveness of HSAJAYA in solving (12), its results are compared with those of other optimization techniques, namely, SA, Teaching-Learning-Based Optimization (TLBO) [34], JAYA, Elitist-JAYA [35], SAMP-JAYA [36], and Non-dominated Sorting Genetic Algorithm II (NSGA II) [37].
For all simulations, the number of iterations is set to 100 and the population size to 10. Table III demonstrates the performance of the proposed scheme in minimizing the objective function (12) over the four considered videos, in terms of the activity, collision, and temporal consistency costs, along with the execution time. Throughout the simulations, all activities are assumed to be preserved by setting the length of the synopsis video equal to the length of the longest tube; hence, the activity cost is zero in all simulation results.

Fig. 4. Convergence characteristics (Best Cost versus Iteration, 0-100) of SA, TLBO, JAYA, Elitist-JAYA, SAMP-JAYA, NSGA II, and HSAJAYA applied to (a) Video No. 1, (b) Video No. 2, (c) Video No. 3, and (d) Video No. 4.

TABLE III
PERFORMANCE COMPARISON AMONG OPTIMIZATION TECHNIQUES

| Video | Optimization Techniques | Activity Cost | Collision Cost (×10^3) | Temporal Consistency Cost | Fitness Value (×10^3) | Time of Execution (Sec) |
|---|---|---|---|---|---|---|
| Video#1 | SA | 0 | 13.77 | 9.69 | 11.19 | 207.18 |
| Video#1 | TLBO | 0 | 13.21 | 8.40 | 10.73 | 94.13 |
| Video#1 | JAYA | 0 | 13.21 | 8.40 | 10.73 | 41.28 |
| Video#1 | Elitist-JAYA | 0 | 13.15 | 9.09 | 10.69 | 80.65 |
| Video#1 | SAMP-JAYA | 0 | 13.10 | 8.95 | 10.65 | 81.52 |
| Video#1 | NSGA II | 0 | 13.21 | 8.38 | 10.73 | 47.09 |
| Video#1 | HSAJAYA | 0 | 12.99 | 11.72 | 10.55 | 136.47 |
| Video#2 | SA | 0 | 212.85 | 9.77 | 172.9 | 120.39 |
| Video#2 | TLBO | 0 | 520.75 | 11.53 | 423.01 | 53.23 |
| Video#2 | JAYA | 0 | 520.75 | 11.53 | 423.01 | 26.89 |
| Video#2 | Elitist-JAYA | 0 | 520.80 | 10.95 | 423.05 | 52.73 |
| Video#2 | SAMP-JAYA | 0 | 520.77 | 10.87 | 423.02 | 54.28 |
| Video#2 | NSGA II | 0 | 520.75 | 11.53 | 423.01 | 33.12 |
| Video#2 | HSAJAYA | 0 | 184.11 | 9.38 | 149.55 | 89.50 |
| Video#3 | SA | 0 | 5.01 | 36.75 | 4.08 | 1213.3 |
| Video#3 | TLBO | 0 | 276.72 | 32.51 | 224.78 | 512.52 |
| Video#3 | JAYA | 0 | 162.33 | 48.37 | 131.87 | 269.04 |
| Video#3 | Elitist-JAYA | 0 | 6.77 | 40.69 | 5.51 | 538.28 |
| Video#3 | SAMP-JAYA | 0 | 10.34 | 38.43 | 8.41 | 540.95 |
| Video#3 | NSGA II | 0 | 272.19 | 41.29 | 221.10 | 266.65 |
| Video#3 | HSAJAYA | 0 | 1.76 | 55.66 | 1.44 | 882.18 |
| Video#4 | SA | 0 | 719.04 | 1.07 | 584.07 | 8.59 |
| Video#4 | TLBO | 0 | 722.41 | 1.10 | 586.81 | 6.70 |
| Video#4 | JAYA | 0 | 722.41 | 1.10 | 586.81 | 3.67 |
| Video#4 | Elitist-JAYA | 0 | 722.41 | 1.10 | 586.81 | 10.04 |
| Video#4 | SAMP-JAYA | 0 | 719.04 | 1.07 | 584.07 | 15.42 |
| Video#4 | NSGA II | 0 | 722.41 | 1.10 | 586.81 | 6.42 |
| Video#4 | HSAJAYA | 0 | 184.35 | 2.90 | 149.74 | 4.37 |

To validate the superiority of the proposed scheme, a statistical comparison with the other considered meta-heuristic techniques is carried out with respect to the best, mean, and worst fitness values, the standard deviation, and the average execution time; the results are given in Table IV.
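The hybridization summarized in the Conclusion (JAYA generates the population, SA performs the selection) can be sketched as follows, using the settings reported here (population size 10, 100 iterations) and the 10-run statistics of Table IV. This is a hypothetical illustration, not the authors' Algorithm 1: the objective (12) is not reproduced, and the initial temperature `t0`, the geometric cooling factor `alpha`, the box bounds, and the toy objective are all assumptions. The JAYA move follows the standard update rule of [18]; the Metropolis acceptance rule supplies the SA selection.

```python
import numpy as np


def hsajaya_sketch(f, lower, upper, pop_size=10, iters=100,
                   t0=1.0, alpha=0.95, seed=None):
    """Hypothetical JAYA + SA hybrid: JAYA proposes, the Metropolis rule selects."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    pop = rng.uniform(lower, upper, size=(pop_size, dim))
    fit = np.array([f(x) for x in pop])
    k = int(np.argmin(fit))
    best_x, best_f = pop[k].copy(), float(fit[k])
    temp, history = t0, []
    for _ in range(iters):
        # Copies, so in-place updates below do not alter this iteration's guides.
        x_best = pop[np.argmin(fit)].copy()
        x_worst = pop[np.argmax(fit)].copy()
        for i in range(pop_size):
            r1, r2 = rng.random(dim), rng.random(dim)
            # JAYA move: towards the best and away from the worst solution [18].
            cand = (pop[i] + r1 * (x_best - np.abs(pop[i]))
                    - r2 * (x_worst - np.abs(pop[i])))
            cand = np.clip(cand, lower, upper)
            f_cand = f(cand)
            delta = f_cand - fit[i]
            # SA selection: always accept improvements, sometimes accept worse moves.
            if delta <= 0 or rng.random() < np.exp(-delta / temp):
                pop[i], fit[i] = cand, f_cand
                if f_cand < best_f:
                    best_x, best_f = cand.copy(), float(f_cand)
        temp *= alpha  # geometric cooling (assumed schedule)
        history.append(best_f)  # best-so-far cost, as in a convergence curve
    return best_x, best_f, history


def run_statistics(f, lower, upper, runs=10):
    """Best/mean/worst/std of the final cost over independent seeded runs."""
    finals = np.array([hsajaya_sketch(f, lower, upper, seed=s)[1] for s in range(runs)])
    return {"best": finals.min(), "mean": finals.mean(),
            "worst": finals.max(), "std": finals.std()}  # population std (ddof=0)
```

Because SA may accept worse candidates, the sketch tracks the best-so-far solution separately from the current population; `history` therefore never increases, like the Best Cost curves of Fig. 4. Whether Table IV uses the population or the sample standard deviation is not stated, so `ddof=0` is an assumption.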
The statistics in Table IV are computed over 10 independent runs of each algorithm (SA, TLBO, JAYA, Elitist-JAYA, SAMP-JAYA, NSGA II, and the proposed HSAJAYA), and the convergence characteristics of the best run on each of the four considered videos are shown in Fig. 4. In addition, HSAJAYA is employed to minimize the objective functions proposed in [6] and [10] (originally solved by SA), and the two optimizers are compared in terms of fitness value and execution time in Table V. During the simulations of [6] and [10], the weights associated with the collision and temporal consistency costs are assumed to be the same as those used in (12). Tables III and IV show that the proposed algorithm attains the lowest (best) fitness value among the considered optimization techniques when solving the proposed objective function. Moreover, Table V shows that HSAJAYA also solves the state-of-the-art optimization frameworks of [6] and [10] more effectively than SA, with lower fitness values and shorter execution times. In general, the proposed hybrid scheme outperforms the traditional optimization algorithms (i.e., SA, TLBO, JAYA, Elitist-JAYA, SAMP-JAYA, and NSGA II) in solving the overall minimization problem for object-based surveillance video synopsis.

TABLE IV
COMPARATIVE STATISTICAL ANALYSIS

| Video | Optimization Techniques | Best (×10^3) | Mean (×10^3) | Worst (×10^3) | Standard Deviation (×10^3) | Average Execution Time (Sec) |
|---|---|---|---|---|---|---|
| Video#1 | SA | 11.19 | 14.03 | 19.39 | 2.86 | 202.69 |
| Video#1 | TLBO | 10.73 | 12.48 | 14.40 | 1.53 | 96.52 |
| Video#1 | JAYA | 10.73 | 14.03 | 20.43 | 3.57 | 44.68 |
| Video#1 | Elitist-JAYA | 10.69 | 13.86 | 18.95 | 3.32 | 92.18 |
| Video#1 | SAMP-JAYA | 10.65 | 13.77 | 17.43 | 3.24 | 94.54 |
| Video#1 | NSGA II | 10.73 | 15.02 | 20.94 | 4.33 | 45.63 |
| Video#1 | HSAJAYA | 10.55 | 12.83 | 19.33 | 3.23 | 192.00 |
| Video#2 | SA | 172.90 | 276.80 | 364.36 | 54.02 | 117.81 |
| Video#2 | TLBO | 423.01 | 424.83 | 440.89 | 5.64 | 51.94 |
| Video#2 | JAYA | 423.01 | 426.61 | 442.68 | 7.64 | 26.55 |
| Video#2 | Elitist-JAYA | 423.05 | 430.07 | 438.69 | 7.25 | 54.45 |
| Video#2 | SAMP-JAYA | 423.02 | 428.03 | 436.75 | 7.12 | 53.78 |
| Video#2 | NSGA II | 423.01 | 454.01 | 538.30 | 41.11 | 32.96 |
| Video#2 | HSAJAYA | 149.55 | 221.03 | 291.42 | 42.35 | 91.71 |
| Video#3 | SA | 4.08 | 9.18 | 18.78 | 5.45 | 1150.33 |
| Video#3 | TLBO | 224.78 | 298.06 | 341.17 | 37.12 | 508.85 |
| Video#3 | JAYA | 131.87 | 226.47 | 389.91 | 89.27 | 259.01 |
| Video#3 | Elitist-JAYA | 5.51 | 18.47 | 23.76 | 11.54 | 503.81 |
| Video#3 | SAMP-JAYA | 8.41 | 25.87 | 52.65 | 32.59 | 509.32 |
| Video#3 | NSGA II | 221.10 | 303.37 | 454.78 | 69.52 | 270.13 |
| Video#3 | HSAJAYA | 1.44 | 36.24 | 115.71 | 40.90 | 914.59 |
| Video#4 | SA | 584.07 | 584.93 | 585.78 | 0.89 | 8.81 |
| Video#4 | TLBO | 586.81 | 586.81 | 586.81 | 0.00 | 6.44 |
| Video#4 | JAYA | 586.81 | 586.93 | 588.03 | 0.38 | 3.41 |
| Video#4 | Elitist-JAYA | 586.81 | 593.18 | 640.44 | 16.77 | 9.09 |
| Video#4 | SAMP-JAYA | 584.07 | 584.88 | 585.78 | 0.86 | 14.59 |
| Video#4 | NSGA II | 586.81 | 586.81 | 586.81 | 0.00 | 5.14 |
| Video#4 | HSAJAYA | 149.74 | 150.78 | 152.16 | 1.12 | 4.11 |

Fig. 5. First row: video synopsis results obtained by [6] for Video 1 (155th-158th frames). Second row: video synopsis results obtained by HSAJAYA for Video 1 (155th-158th frames). Third row: results obtained by [6] for Video 2 (140th-143rd frames). Fourth row: results obtained by HSAJAYA for Video 2 (140th-143rd frames). Fifth row: results obtained by [6] for Video 3 (425th-428th frames). Sixth row: results obtained by HSAJAYA for Video 3 (425th-428th frames). Seventh row: results obtained by [6] for Video 4 (36th-39th frames). Eighth row: results obtained by HSAJAYA for Video 4 (36th-39th frames). Red circles mark collisions in the synopsis obtained by [6], whereas yellow circles mark collisions in the synopsis obtained by HSAJAYA.

Further, to validate the efficacy of the proposed consumer surveillance management system (CSMS), a subjective quality assessment study as in [9] is conducted. Here, 45 subjects are invited to observe the synopses of the experimental videos generated by [6] (left, Screen-1) and by the proposed CSMS (right, Screen-2), placed side by side. The synopsis frames generated by [6] and by the proposed CSMS are depicted in the odd and even rows of Fig. 5, respectively. The subjects are asked to answer “Yes” or “No” to the following questions:
1. Is the synopsis on Screen-2 more comfortable to view?
2. Does the synopsis on Screen-1 have fewer observable collisions?
3. Are the objects clearly distinguishable in the synopsis on Screen-2?
Then the original videos are projected and the following question is asked:
4. Is the synopsis video on Screen-1 better than that on Screen-2 with respect to the original video?

To obtain a visual response rating (VRR), let $R_{ij} = 1$ if the $i$th participant answered “Yes” to the $j$th question, and $R_{ij} = 0$ otherwise. Equation (15) is applied to evaluate the “Yes” rates for the questions, which reflect the participants' opinions:

$$\mathrm{VRR} = \frac{1}{180}\left(\sum_{i=1}^{45}\sum_{j=1}^{4} R_{ij}\right) \times 100\% \qquad (15)$$

where $180 = 45 \times 4$ is the total number of responses. The “Yes” rates for the four questions were 76.8%, 16.4%, 82.3%, and 6.7%, respectively. Questions 1 and 3 are phrased in favor of the proposed synopsis (Screen-2), whereas questions 2 and 4 are phrased in favor of [6] (Screen-1); the high rates for questions 1 and 3 and the low rates for questions 2 and 4 therefore indicate that most users prefer the proposed video synopsis to that of [6].
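Equation (15) can be sketched directly on a participants-by-questions response matrix. The column-wise function below is an assumption about how the separate per-question “Yes” rates could be obtained, since (15) as written aggregates over all questions; both function names are hypothetical.

```python
import numpy as np


def visual_response_rating(responses):
    """Eq. (15): percentage of 'Yes' answers over all participants and questions.

    responses: (participants x questions) array with R_ij = 1 for 'Yes', 0 for 'No'.
    """
    r = np.asarray(responses)
    if not np.isin(r, (0, 1)).all():
        raise ValueError("responses must contain only 0 (No) and 1 (Yes)")
    # 45 participants x 4 questions gives the 180 in the denominator of (15).
    return 100.0 * r.sum() / r.size


def yes_rate_per_question(responses):
    """Column-wise variant: 'Yes' percentage for each question separately."""
    r = np.asarray(responses)
    return 100.0 * r.sum(axis=0) / r.shape[0]
```

For example, if 9 of the 45 participants answer “Yes” to question 1 only, the per-question rates are 20%, 0%, 0%, and 0%, while (15) gives 9/180 × 100% = 5%.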
TABLE V
PERFORMANCE COMPARISON FOR SOLVING STATE-OF-THE-ART

| Video | Optimization Techniques | Fitness Value [6] (×10^3) | Time of Execution [6] (Sec) | Fitness Value [10] (×10^3) | Time of Execution [10] (Sec) |
|---|---|---|---|---|---|
| Video#1 | SA | 11.19 | 207.18 | 0.666 | 350.85 |
| Video#1 | HSAJAYA | 10.55 | 136.47 | 0.629 | 216.62 |
| Video#2 | SA | 172.90 | 120.39 | 4.514 | 194.18 |
| Video#2 | HSAJAYA | 149.55 | 89.50 | 3.904 | 147.20 |
| Video#3 | SA | 4.08 | 1213.28 | 0.248 | 1866.05 |
| Video#3 | HSAJAYA | 1.44 | 882.18 | 0.095 | 1383.18 |
| Video#4 | SA | 584.07 | 8.54 | 34.760 | 14.46 |
| Video#4 | HSAJAYA | 149.79 | 4.37 | 8.930 | 6.93 |

TABLE VI
FRAME-WISE ANALYSIS OF PROCESSING TIME

| Video | Optimization Techniques | Computational Time [6] (Sec/Frame) | Computational Time [10] (Sec/Frame) |
|---|---|---|---|
| Video#1 | SA | 0.3453 | 0.5847 |
| Video#1 | HSAJAYA | 0.2274 | 0.3610 |
| Video#2 | SA | 0.1129 | 0.1821 |
| Video#2 | HSAJAYA | 0.0839 | 0.1380 |
| Video#3 | SA | 0.4513 | 0.6942 |
| Video#3 | HSAJAYA | 0.3281 | 0.5145 |
| Video#4 | SA | 0.0286 | 0.0483 |
| Video#4 | HSAJAYA | 0.0142 | 0.0231 |

C. Computational Complexity Analysis

The computational complexity of the proposed algorithm is $O(K^2LWH)$, where $K$ denotes the number of objects in the original video, and $L$, $W$, and $H$ represent the maximum tube length and the bounding-box width and height, respectively. The complexity is governed by two major steps of Algorithm 1, steps 3 and 10, since the remaining steps either take constant time or iterate a constant number of times. Hence, the total time complexity of the proposed algorithm is $O(K^2LWH)$, which is computationally more efficient than the schemes of [6] and [10], which require $O(T^n)$ and $O(T^n + R^n)$, respectively, where $T$ is the number of suitable temporal shifts of an object, $n$ is the number of objects present in the original video, and $R$ denotes the size of the reduction-coefficient search space. Additionally, Table VI gives the processing time per frame (in seconds) for the considered videos; HSAJAYA requires less time per frame than SA when solving the formulations of both [6] and [10].

IV. CONCLUSION

This paper presents the implementation of a hybrid optimization scheme, HSAJAYA, in the VS framework, so that consumer electronic devices can be used efficiently.
In the hybridization, JAYA generates the population and SA performs the selection. Overall, the proposed HSAJAYA algorithm effectively minimizes the objective function in terms of activity loss, collision cost, and temporal consistency cost with reduced computational time, supporting efficient and intelligent applications such as home security. Experimental results and analysis confirm that the proposed scheme outperforms recent optimization techniques. Owing to its reduced computational cost, the proposed technique may be applicable to consumer electronic devices capable of processing video, such as smartphones and tablets.

REFERENCES

[1] W. Lao, J. Han, and P. H. De With, “Automatic video-based human motion analyzer for consumer surveillance system,” IEEE Trans. Consum. Electron., vol. 55, no. 2, pp. 591–598, May 2009.
[2] S. Javanbakhti, S. Zinger et al., “Fast scene analysis for surveillance & video databases,” IEEE Trans. Consum. Electron., vol. 63, no. 3, pp. 325–333, Aug. 2017.
[3] R. M. Jiang, A. H. Sadka, and D. Crookes, “Hierarchical video summarization in reference subspace,” IEEE Trans. Consum. Electron., vol. 55, no. 3, pp. 1551–1557, Aug. 2009.
[4] G. Ciocca and R. Schettini, “Supervised and unsupervised classification post-processing for visual video summaries,” IEEE Trans. Consum. Electron., vol. 52, no. 2, pp. 630–638, May 2006.
[5] Y. Gao, W. Wang, and J. Yong, “A video summarization tool using two-level redundancy detection for personal video recorders,” IEEE Trans. Consum. Electron., vol. 54, no. 2, pp. 521–526, May 2008.
[6] A. Rav-Acha, Y. Pritch, and S. Peleg, “Making a long video short: Dynamic video synopsis,” in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., New York, NY, USA, Jun. 2006, pp. 435–441.
[7] S. Kirkpatrick, C. D. Gelatt, and M. P. Vecchi, “Optimization by simulated annealing,” Science, vol. 220, no. 4598, pp. 671–680, May 1983.
[8] Y. Pritch, A. Rav-Acha, and S. Peleg, “Nonchronological video synopsis and indexing,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 30, no. 11, pp. 1971–1984, Jan. 2008.
[9] Y. Nie, C. Xiao, H. Sun, and P. Li, “Compact video synopsis via global spatiotemporal optimization,” IEEE Trans. Vis. Comput. Graphics, vol. 19, no. 10, pp. 1664–1676, Oct. 2013.
[10] X. Li, Z. Wang, and X. Lu, “Surveillance video synopsis via scaling down objects,” IEEE Trans. Image Process., vol. 25, no. 2, pp. 740–755, Feb. 2016.
[11] M. Lu, Y. Wang, and G. Pan, “Generating fluent tubes in video synopsis,” in Proc. IEEE Int. Conf. Acoust., Speech Signal Process., Vancouver, BC, Canada, May 2013, pp. 2292–2296.
[12] R. Zhong, R. Hu, Z. Wang, and S. Wang, “Fast synopsis for moving objects using compressed video,” IEEE Signal Process. Lett., vol. 21, no. 7, pp. 834–838, Jul. 2014.
[13] L. Sun, J. Xing, H. Ai, and S. Lao, “A tracking based fast online complete video synopsis approach,” in Proc. 21st Int. Conf. Pattern Recognit., Tsukuba, Japan, Nov. 2012, pp. 1956–1959.
[14] W. Lin, Y. Zhang, J. Lu, B. Zhou, J. Wang, and Y. Zhou, “Summarizing surveillance videos with local-patch-learning-based abnormality detection, blob sequence optimization, and type-based synopsis,” Neurocomputing, vol. 155, pp. 84–98, May 2015.
[15] W. Wang, P. Chung, C. Huang, and W. Huang, “Event based surveillance video synopsis using trajectory kinematics descriptors,” in Proc. 15th IAPR Int. Conf. Mach. Vis. Appl., Nagoya, Japan, May 2017, pp. 250–253.
[16] K. Li, B. Yan, W. Wang, and H. Gharavi, “An effective video synopsis approach with seam carving,” IEEE Signal Process. Lett., vol. 23, no. 1, pp. 11–14, Jan. 2016.
[17] Y. Boykov, O. Veksler, and R. Zabih, “Fast approximate energy minimization via graph cuts,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 23, no. 11, pp. 1222–1239, Nov. 2001.
[18] R. Rao, “Jaya: A simple and new optimization algorithm for solving constrained and unconstrained optimization problems,” Int. J. Ind. Eng. Comput., vol. 7, no. 1, pp. 19–34, Jan. 2016.
[19] S. P. Singh, T. Prakash, V. Singh, and M. G. Babu, “Analytic hierarchy process based automatic generation control of multi-area interconnected power system using jaya algorithm,” Eng. Appl. Artif. Intell., vol. 60, pp. 35–44, Apr. 2017.
[20] J. Yao and J. M. Odobez, “Multi-layer background subtraction based on color and texture,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Minneapolis, MN, USA, Jun. 2007, pp. 1–8.
[21] G. Welch and G. Bishop, “An introduction to the kalman filter,” in Proc. Annu. Conf. Comput. Graph. Interact. Techn., Los Angeles, CA, USA, Aug. 2001, pp. 12–17.
[22] T. Yao, M. Xiao, C. Ma, C. Shen, and P. Li, “Object based video synopsis,” in Proc. IEEE Workshop Adv. Res. Technol. Ind. Appl., Ottawa, Canada, Sep. 2014, pp. 1138–1141.
[23] Y. Tian, H. Zheng, Q. Chen, D. Wang, and R. Lin, “Surveillance video synopsis generation method via keeping important relationship among objects,” IET Comput. Vis., vol. 10, no. 8, pp. 868–872, Jun. 2016.
[24] N. Metropolis, A. W. Rosenbluth, M. N. Rosenbluth, A. H. Teller, and E. Teller, “Equation of state calculations by fast computing machines,” J. Chem. Phys., vol. 21, no. 6, pp. 1087–1091, Jun. 1953.
[25] P. Pérez, M. Gangnet, and A. Blake, “Poisson image editing,” ACM Trans. Graph., vol. 22, no. 3, pp. 313–318, Jul. 2003.
[26] Y. Wang, P. Jodoin, F. Porikli, J. Konrad, Y. Benezeth, and P. Ishwar. (2014, Jun.) CDnet 2014: An expanded change detection benchmark dataset. Columbus, Ohio. [Online]. Available: http://changedetection.net/
[27] PETS2001 Dataset. [Online]. Available: ftp://ftp.pets.rdg.ac.uk/pub
[28] LASIESTA Database. [Online]. Available: http://www.gti.ssr.upm.es/data
[29] D. S. Lee, “Effective gaussian mixture learning for video background subtraction,” IEEE Trans. Pattern Anal. Mach. Intell., no. 5, pp. 827–832, 2005.
[30] M. Hofmann, P. Tiefenbacher, and G. Rigoll, “Background segmentation with feedback: The pixel-based adaptive segmenter,” in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. Workshops, Providence, RI, USA, Jun. 2012, pp. 38–43.
[31] P. L. St-Charles and G. A. Bilodeau, “Improving background subtraction using local binary similarity patterns,” in Proc. IEEE Winter Conf. Appl. Comput. Vis., Steamboat Springs, CO, USA, Mar. 2014, pp. 509–515.
[32] P. L. St-Charles, G. A. Bilodeau, and R. Bergevin, “Flexible background subtraction with self-balanced local sensitivity,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. Workshops, Columbus, OH, USA, Jun. 2014, pp. 408–413.
[33] D. K. Panda and S. Meher, “A new wronskian change detection model based codebook background subtraction for visual surveillance applications,” J. Visual Commun. Image Represent., vol. 56, pp. 52–72, Aug. 2018.
[34] R. Rao, V. J. Savsani, and D. P. Vakharia, “Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems,” Comput. Aided Design, vol. 43, no. 3, pp. 303–315, Mar. 2011.
[35] R. Rao and A. Saroj, “Constrained economic optimization of shell-and-tube heat exchangers using elitist-jaya algorithm,” Energy, vol. 128, no. 1, pp. 785–800, Jun. 2017.
[36] R. Rao and A. Saroj, “A self-adaptive multi-population based jaya algorithm for engineering optimization,” Swarm Evol. Comput., vol. 37, pp. 1–26, Dec. 2017.
[37] K. Deb, A. Pratap, S. Agarwal, and T. Meyarivan, “A fast and elitist multiobjective genetic algorithm: NSGA-II,” IEEE Trans. Evol. Comput., vol. 6, no. 2, pp. 182–197, Apr. 2002.

Subhankar Ghatak received the B.Sc. (Hons.) degree in mathematics from the University of Calcutta, India, in 2005, and the MCA and M.Tech. (Information Technology) degrees from the same university in 2008 and 2011, respectively. He is currently pursuing the Ph.D. degree in Computer Science and Engineering at IIIT Bhubaneswar, India. His research interests include advanced image and video processing, computer vision, visual cryptography, and steganography.

Suvendu Rup received the M.Tech. degree in CSE from Jadavpur University, Kolkata, India, and the Ph.D. degree in CSE from NIT Rourkela, Odisha, India. Since 2010, he has been with the Department of CSE, IIIT Bhubaneswar, India, as an Assistant Professor. His research interests include image and video processing, computer vision, machine learning, distributed video coding, and assistive computing.

Banshidhar Majhi received the M.Tech. and Ph.D. degrees in CSE from NIT Rourkela, Odisha, India, in 1998 and 2003, respectively. He is currently serving as Director & Registrar (i/c) of IIITDM Kancheepuram. His research interests include image and video processing, data compression, soft computing, biometrics, and network security.

M.N.S. Swamy (S’59–M’62–SM’74–F’80–LF’01) received the B.Sc. (Hons.) degree in mathematics from Mysore University, India, in 1954, the Diploma degree in ECE from the IISc Bangalore in 1957, and the M.Sc. and Ph.D. degrees in EE from the University of Saskatchewan, Saskatoon, Canada, in 1960 and 1963, respectively. He is presently a Research Professor and holds the Concordia Chair (Tier I) in Signal Processing in the Department of Electrical and Computer Engineering at Concordia University, Montreal, QC, Canada.