Lecture 1

RS – Lecture 1

Least Squares

1

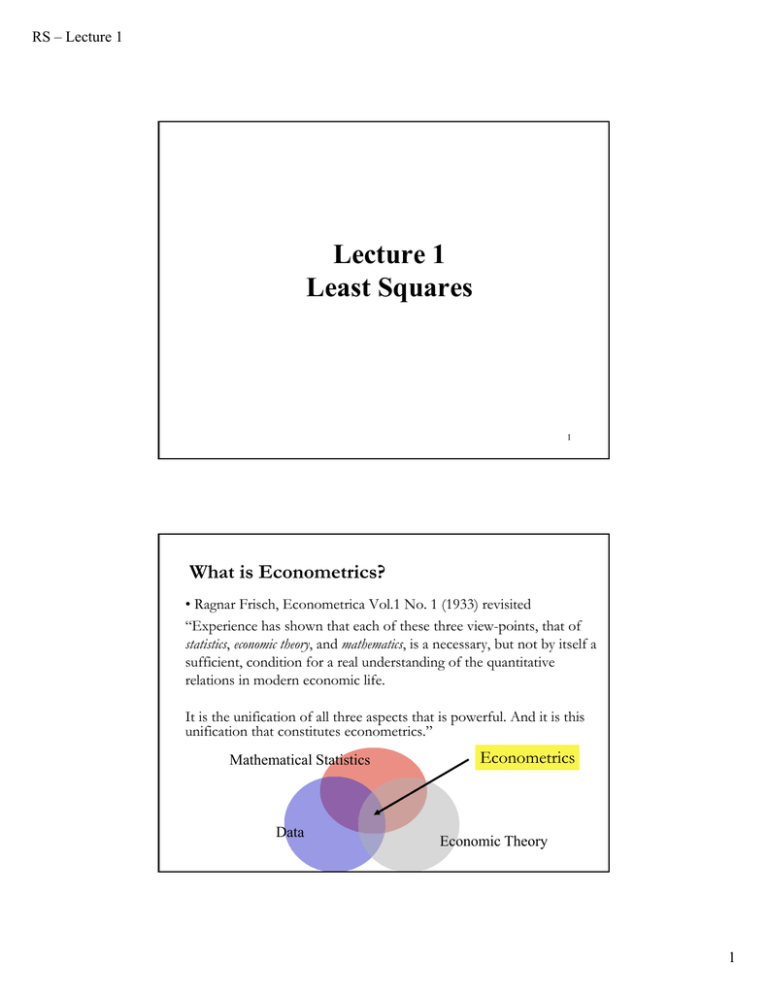

What is Econometrics?

• Ragnar Frisch, Econometrica Vol.1 No. 1 (1933) revisited

“Experience has shown that each of these three view-points, that of statistics, economic theory, and mathematics, is a necessary, but not by itself a sufficient, condition for a real understanding of the quantitative relations in modern economic life.

It is the unification of all three aspects that is powerful. And it is this unification that constitutes econometrics.”

[Figure: Econometrics combining Mathematical Statistics, Economic Theory, and Data]

What is Econometrics?

• Economic Theory:

- The CAPM

$R_i - R_f = \beta_i (R_{MP} - R_f)$

• Mathematical Statistics:

- Method to estimate the CAPM. For example,

Linear regression:

$R_i - R_f = \alpha_i + \beta_i (R_{MP} - R_f) + \varepsilon_i$

- Properties of $b_i$ (the LS estimator of $\beta_i$)

- Properties of different tests of the CAPM. For example, a t-test for $H_0: \alpha_i = 0$.

• Data: $R_i$, $R_f$, and $R_{MP}$

- Typical problems: missing data, measurement errors, survivorship bias, auto- and cross-correlated returns, time-varying moments.
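To make the CAPM regression concrete, here is a minimal sketch that estimates $\alpha_i$ and $\beta_i$ by LS and forms the t-statistic for $H_0: \alpha_i = 0$. It assumes NumPy and uses simulated excess returns with hypothetical values (true $\alpha_i = 0$, $\beta_i = 1.2$), not real market data. The standard errors use $s^2 (X'X)^{-1}$, the estimated version of the variance formula $\sigma^2 (X'X)^{-1}$ derived in this lecture.

```python
import numpy as np

rng = np.random.default_rng(0)
T = 500

# Hypothetical simulated excess returns (not real data): true alpha_i = 0, beta_i = 1.2
mkt_excess = rng.normal(0.005, 0.04, T)                       # R_MP - R_f
asset_excess = 1.2 * mkt_excess + rng.normal(0.0, 0.02, T)    # R_i - R_f

X = np.column_stack([np.ones(T), mkt_excess])      # constant (alpha_i) + market excess return
b = np.linalg.solve(X.T @ X, X.T @ asset_excess)   # LS estimates [a_i, b_i]
e = asset_excess - X @ b                           # residuals
s2 = e @ e / (T - X.shape[1])                      # estimate of the error variance
se = np.sqrt(np.diag(s2 * np.linalg.inv(X.T @ X))) # standard errors of a_i and b_i
t_alpha = b[0] / se[0]                             # t-statistic for H0: alpha_i = 0

print(f"alpha = {b[0]:.4f}, beta = {b[1]:.3f}, t(alpha) = {t_alpha:.2f}")
```

With real data, the typical problems listed above (missing data, survivorship bias, correlated returns) would have to be addressed before trusting this t-test.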

Estimation

• Two philosophies regarding models (assumptions) in statistics:

(1) Parametric statistics.

It assumes the data come from a type of probability distribution and makes inferences about the parameters of that distribution. Models are parameterized before collecting the data.

Example: Maximum likelihood estimation.

(2) Non-parametric statistics.

It assumes no particular probability distribution, i.e., the methods are “distribution free.” Models are not imposed a priori, but determined by the data.

Examples: histograms, kernel density estimation.

• In general, in parametric statistics we make more assumptions.

Least Squares Estimation

• Old method: Gauss (1795, 1801) used it in astronomy.

Carl F. Gauss (1777 – 1855, Germany)

Idea:

• There is a functional form relating a dependent variable Y and k variables X. This function depends on unknown parameters, θ. The relation between Y and X is not exact: there is an error, ε. We have T observations of Y and X.

• We will assume that the functional form is known:

$y_i = f(x_i, \theta) + \varepsilon_i, \qquad i = 1, 2, \dots, T.$

• We will estimate the parameters θ by minimizing a sum of squared errors:

$\min_\theta \{ S(x_i, \theta) = \sum_i \varepsilon_i^2 \}$

Least Squares Estimation

• We will use linear algebra notation. That is,

$y = f(X, \theta) + \varepsilon$

Vectors will be column vectors: y, $x_k$, and ε are T×1 vectors:

$y' = [y_1 \; y_2 \; \dots \; y_T]$

$x_k' = [x_{k1} \; x_{k2} \; \dots \; x_{kT}]$

$\varepsilon' = [\varepsilon_1 \; \varepsilon_2 \; \dots \; \varepsilon_T]$

X is a T×k matrix. Its columns are the k T×1 vectors $x_k$. It is common to treat $x_1$ as a vector of ones:

$x_1' = [x_{11} \; x_{12} \; \dots \; x_{1T}] = [1 \; 1 \; \dots \; 1] = \iota'$

Least Squares Estimation - Assumptions

• Typical Assumptions

(A1) DGP: $y = f(X, \theta) + \varepsilon$ is correctly specified. For example, $f(X, \theta) = X\beta$.

(A2) $E[\varepsilon|X] = 0$

(A3) $\mathrm{Var}[\varepsilon|X] = \sigma^2 I_T$

(A4) X has full column rank, rank(X) = k, where T ≥ k.

• Assumption (A1) is called correct specification. We know how the data are generated. We call $y = f(X, \theta) + \varepsilon$ the Data Generating Process (DGP).

Note: The errors, ε, are called disturbances. They are not something we add to f(X, θ) because we do not know f(X, θ) precisely. The errors are part of the DGP.

Least Squares Estimation - Assumptions

• Assumption (A2) is called regression.

From Assumption (A2) we get:

(i) $E[\varepsilon|X] = 0 \Rightarrow E[y|X] = f(X, \theta) + E[\varepsilon|X] = f(X, \theta)$

That is, the observed y will equal E[y|X] plus random variation.

(ii) Using the Law of Iterated Expectations (LIE):

$E[\varepsilon] = E_X[E[\varepsilon|X]] = E_X[0] = 0$

(iii) There is no information about ε in X ⇒ Cov(ε, X) = 0:

$\mathrm{Cov}(\varepsilon, X) = E[(\varepsilon - 0)(X - \mu_X)] = E[\varepsilon X] = E_X[X \, E[\varepsilon|X]] = 0$ (using LIE)

⇒ That is, $\varepsilon \perp X$.

Least Squares Estimation - Assumptions

• From Assumption (A3) we get

$\mathrm{Var}[\varepsilon|X] = \sigma^2 I_T \Rightarrow \mathrm{Var}[\varepsilon] = \sigma^2 I_T$

Proof: $\mathrm{Var}[\varepsilon] = E_X[\mathrm{Var}[\varepsilon|X]] + \mathrm{Var}_X[E[\varepsilon|X]] = \sigma^2 I_T + 0 = \sigma^2 I_T$. ▪

This assumption implies

(i) homoscedasticity ⇒ $E[\varepsilon_i^2|X] = \sigma^2$ for all i.

(ii) no serial/cross correlation ⇒ $E[\varepsilon_i \varepsilon_j|X] = 0$ for i ≠ j.

• From Assumption (A4), the k independent variables in X are linearly independent. Then, the k×k matrix X'X will also have full rank, i.e., rank(X'X) = k.

To get asymptotic results we will need more assumptions about X.

Least Squares Estimation – f.o.c.

• Objective function: $S(x_i, \theta) = \sum_i \varepsilon_i^2$

• We want to minimize it w.r.t. θ. That is,

$\min_\theta \{ S(x_i, \theta) = \sum_i \varepsilon_i^2 = \sum_i [y_i - f(x_i, \theta)]^2 \}$

$\Rightarrow \frac{dS(x_i, \theta)}{d\theta} = -2 \sum_i [y_i - f(x_i, \theta)] \, f'(x_i, \theta)$

f.o.c. ⇒ $-2 \sum_i [y_i - f(x_i, \theta_{LS})] \, f'(x_i, \theta_{LS}) = 0$

Note: The f.o.c. deliver the normal equations.

The solution to the normal equations, $\theta_{LS}$, is the LS estimator. The estimator $\theta_{LS}$ is a function of the data $(y_i, x_i)$.
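When f is nonlinear in θ, the normal equations usually have no closed-form solution and are solved iteratively. The sketch below uses Gauss-Newton, a standard iterative method that is not part of the lecture, on a hypothetical form $f(x, \theta) = \theta_0 e^{\theta_1 x}$ with simulated data. It assumes NumPy; the names `f`, `jacobian`, and `gauss_newton` are illustrative. At convergence, the quantity `foc` is the f.o.c. sum $\sum_i [y_i - f(x_i, \theta_{LS})] f'(x_i, \theta_{LS})$, which should be numerically zero.

```python
import numpy as np

def f(x, theta):
    # Hypothetical nonlinear functional form: f(x, theta) = theta0 * exp(theta1 * x)
    return theta[0] * np.exp(theta[1] * x)

def jacobian(x, theta):
    # T x 2 matrix with columns df/dtheta0 and df/dtheta1 at each x_i
    g = np.exp(theta[1] * x)
    return np.column_stack([g, theta[0] * x * g])

def gauss_newton(x, y, theta0, tol=1e-10, max_iter=100):
    theta = np.asarray(theta0, dtype=float)
    for _ in range(max_iter):
        r = y - f(x, theta)                        # current residuals y_i - f(x_i, theta)
        J = jacobian(x, theta)
        step = np.linalg.solve(J.T @ J, J.T @ r)   # LS step for the linearized model
        theta = theta + step
        if np.max(np.abs(step)) < tol:
            break
    return theta

rng = np.random.default_rng(1)
x = np.linspace(0, 2, 200)
y = f(x, [2.0, 0.7]) + rng.normal(0, 0.1, x.size)       # simulated data, true theta = (2, 0.7)
theta_ls = gauss_newton(x, y, theta0=[1.0, 0.5])
foc = jacobian(x, theta_ls).T @ (y - f(x, theta_ls))   # f.o.c. evaluated at theta_LS
print(theta_ls, foc)
```

Gauss-Newton is only one choice; it needs a reasonable starting value and can fail for badly conditioned problems.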

CLM - OLS

• Suppose we assume a linear functional form for f(x, θ):

(A1') DGP: $y = f(X, \theta) + \varepsilon = X\beta + \varepsilon$

Now, we have all the assumptions behind the classical linear regression model (CLM):

(A1) DGP: $y = X\beta + \varepsilon$ is correctly specified.

(A2) $E[\varepsilon|X] = 0$

(A3) $\mathrm{Var}[\varepsilon|X] = \sigma^2 I_T$

(A4) X has full column rank, rank(X) = k, where T ≥ k.

Objective function: $S(x_i, \theta) = \sum_i \varepsilon_i^2 = \varepsilon'\varepsilon = (y - X\beta)'(y - X\beta)$

Normal equations: $-2 \sum_i [y_i - f(x_i, \theta_{LS})] \, f'(x_i, \theta_{LS}) = -2 (y - Xb)'X = 0$

Solving for b:

$\Rightarrow b = (X'X)^{-1} X'y$
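A minimal numerical sketch of the OLS formula, assuming NumPy and simulated data with hypothetical coefficients. It solves the normal equations $X'Xb = X'y$ directly (numerically preferable to forming $(X'X)^{-1}$) and checks that the residuals are orthogonal to every column of X.

```python
import numpy as np

rng = np.random.default_rng(2)
T, k = 100, 3
# Simulated design: x1 is a column of ones, plus two random regressors
X = np.column_stack([np.ones(T), rng.normal(size=(T, k - 1))])
beta = np.array([1.0, 0.5, -2.0])          # hypothetical true coefficients
y = X @ beta + rng.normal(0, 1.0, T)

b = np.linalg.solve(X.T @ X, X.T @ y)      # solves the normal equations X'X b = X'y
e = y - X @ b                              # residuals
print(b)
print(X.T @ e)                             # ~0: X'(y - Xb) = 0
```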

OLS Estimation: Second Order Condition

• OLS estimator:

$b = (X'X)^{-1} X'y$

Note: (i) $b = b_{OLS}$ (Ordinary LS; "ordinary" = linear).

(ii) b is a (linear) function of the data $(y_i, x_i)$.

(iii) $X'(y - Xb) = X'y - X'X(X'X)^{-1}X'y = X'e = 0 \Rightarrow e \perp X$.

• Q: Is b a minimum? We need to check the s.o.c.:

$\frac{\partial (y - Xb)'(y - Xb)}{\partial b} = -2X'(y - Xb)$ (a column vector)

$\frac{\partial^2 (y - Xb)'(y - Xb)}{\partial b \, \partial b'} = \frac{\partial [-2X'(y - Xb)]}{\partial b'} = 2X'X$ (differentiating the column vector w.r.t. the row vector b')

OLS Estimation: Second Order Condition

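$$\frac{\partial^2 e'e}{\partial b \, \partial b'} = 2X'X = 2 \begin{bmatrix} \sum_{i=1}^n x_{i1}^2 & \sum_{i=1}^n x_{i1}x_{i2} & \cdots & \sum_{i=1}^n x_{i1}x_{iK} \\ \sum_{i=1}^n x_{i2}x_{i1} & \sum_{i=1}^n x_{i2}^2 & \cdots & \sum_{i=1}^n x_{i2}x_{iK} \\ \vdots & \vdots & \ddots & \vdots \\ \sum_{i=1}^n x_{iK}x_{i1} & \sum_{i=1}^n x_{iK}x_{i2} & \cdots & \sum_{i=1}^n x_{iK}^2 \end{bmatrix}$$

If there were a single b, we would require this to be positive, which it would be: $2x'x = 2\sum_{i=1}^n x_i^2 > 0$.

The matrix counterpart of a positive number is a positive definite matrix.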

OLS Estimation: Second Order Condition

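$$X'X = \begin{bmatrix} \sum_{i=1}^n x_{i1}^2 & \sum_{i=1}^n x_{i1}x_{i2} & \cdots & \sum_{i=1}^n x_{i1}x_{iK} \\ \sum_{i=1}^n x_{i2}x_{i1} & \sum_{i=1}^n x_{i2}^2 & \cdots & \sum_{i=1}^n x_{i2}x_{iK} \\ \vdots & \vdots & \ddots & \vdots \\ \sum_{i=1}^n x_{iK}x_{i1} & \sum_{i=1}^n x_{iK}x_{i2} & \cdots & \sum_{i=1}^n x_{iK}^2 \end{bmatrix} = \sum_{i=1}^n \begin{bmatrix} x_{i1}^2 & x_{i1}x_{i2} & \cdots & x_{i1}x_{iK} \\ x_{i2}x_{i1} & x_{i2}^2 & \cdots & x_{i2}x_{iK} \\ \vdots & \vdots & \ddots & \vdots \\ x_{iK}x_{i1} & x_{iK}x_{i2} & \cdots & x_{iK}^2 \end{bmatrix}$$

$$= \sum_{i=1}^n \begin{bmatrix} x_{i1} \\ x_{i2} \\ \vdots \\ x_{iK} \end{bmatrix} \begin{bmatrix} x_{i1} & x_{i2} & \cdots & x_{iK} \end{bmatrix} = \sum_{i=1}^n x_i x_i'$$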

Definition: A matrix A is positive definite (pd) if $z'Az > 0$ for any $z \neq 0$.

In general, we need eigenvalues to check this. For some matrices it is easy to check. Let A = X'X. Then, with $v = Xz$,

$z'Az = z'X'Xz = v'v > 0,$

since v'v is a sum of squares and, by (A4), $v = Xz \neq 0$ whenever $z \neq 0$.

⇒ X'X is pd ⇒ b is a min!
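A minimal sketch of the positive-definiteness check, assuming NumPy and a simulated design matrix. It checks the eigenvalues of X'X, confirms $z'X'Xz = v'v$ for a random z, and shows that violating (A4) with a repeated column leaves X'X only positive semidefinite (smallest eigenvalue numerically zero).

```python
import numpy as np

rng = np.random.default_rng(3)
X = np.column_stack([np.ones(50), rng.normal(size=(50, 2))])  # simulated, full column rank

A = X.T @ X
eig = np.linalg.eigvalsh(A)          # A is symmetric, so eigvalsh applies
print(eig.min() > 0)                 # pd <=> all eigenvalues are positive
np.linalg.cholesky(A)                # raises LinAlgError unless A is (numerically) pd

z = rng.normal(size=3)               # an arbitrary nonzero z
v = X @ z
print(np.isclose(z @ A @ z, v @ v))  # z'X'Xz = v'v

# Violating (A4): a repeated column makes X'X singular (only positive semidefinite)
X_bad = np.column_stack([X, X[:, 1]])
eig_bad = np.linalg.eigvalsh(X_bad.T @ X_bad)
print(eig_bad.min())                 # ~0 up to rounding error
```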

OLS Estimation - Properties

• The LS estimator of β when $f(X, \theta) = X\beta$ is linear is

$b = (X'X)^{-1}X'y \Rightarrow$ b is a (linear) function of the data $(y_i, x_i)$.

$b = (X'X)^{-1}X'y = (X'X)^{-1}X'(X\beta + \varepsilon) = \beta + (X'X)^{-1}X'\varepsilon$

Under the typical assumptions, we can establish properties for b.

1) $E[b|X] = \beta$

2) $\mathrm{Var}[b|X] = E[(b - \beta)(b - \beta)'|X] = (X'X)^{-1}X'E[\varepsilon\varepsilon'|X]X(X'X)^{-1} = \sigma^2 (X'X)^{-1}$

We can show that b is BLUE (or MVLUE).

Proof.

Let $b^* = Cy$ (linear in y).

$E[b^*|X] = E[Cy|X] = E[C(X\beta + \varepsilon)|X] = \beta$ (unbiased if CX = I)

$\mathrm{Var}[b^*|X] = E[(b^* - \beta)(b^* - \beta)'|X] = E[C\varepsilon\varepsilon'C'|X] = \sigma^2 CC'$

OLS Estimation - Properties

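$\mathrm{Var}[b^*|X] = \sigma^2 CC'$

Now, let $D = C - (X'X)^{-1}X'$ (note DX = 0, since CX = I).

Then, $\mathrm{Var}[b^*|X] = \sigma^2 (D + (X'X)^{-1}X')(D' + X(X'X)^{-1})$

$= \sigma^2 DD' + \sigma^2 (X'X)^{-1} = \mathrm{Var}[b|X] + \sigma^2 DD'$. ▪

Since DD' is positive semidefinite, no linear unbiased estimator has a smaller variance than b. This result is known as the Gauss-Markov theorem.

3) If we make an additional assumption:

(A5) $\varepsilon|X \sim \text{iid } N(0, \sigma^2 I_T)$

we can derive the distribution of b.

Since $b = \beta + (X'X)^{-1}X'\varepsilon$, b is a linear combination of normal variables ⇒ $b|X \sim N(\beta, \sigma^2 (X'X)^{-1})$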
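The properties $E[b|X] = \beta$ and $\mathrm{Var}[b|X] = \sigma^2 (X'X)^{-1}$ are conditional on X, so a Monte Carlo check holds X fixed and redraws only ε. This is a minimal sketch assuming NumPy, normal errors as in (A5), and hypothetical values of β and σ.

```python
import numpy as np

rng = np.random.default_rng(4)
T, sigma, R = 40, 2.0, 20_000
X = np.column_stack([np.ones(T), rng.normal(size=T)])  # X held fixed (we condition on X)
beta = np.array([1.0, 3.0])                            # hypothetical true beta
XtX_inv = np.linalg.inv(X.T @ X)

# R replications of y = X beta + eps with eps | X ~ N(0, sigma^2 I_T), as in (A5)
eps = rng.normal(0.0, sigma, size=(R, T))
Y = X @ beta + eps                                     # each row is one simulated y'
B = Y @ X @ XtX_inv                                    # each row is b' = y'X(X'X)^{-1}

print(B.mean(axis=0), beta)                            # E[b|X] = beta
print(np.cov(B, rowvar=False))                         # Var[b|X] ...
print(sigma**2 * XtX_inv)                              # ... = sigma^2 (X'X)^{-1}
```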

Algebraic Results

• Important Matrices

(1) “Residual maker”

$M = I_T - X(X'X)^{-1}X'$

$My = y - X(X'X)^{-1}X'y = y - Xb = e$ (residuals)

$MX = 0$

- M is symmetric: M = M'

- M is idempotent: M*M = M

- M is singular: $M^{-1}$ does not exist ⇒ rank(M) = T - k

(M does not have full rank. We have already proven this result.)

Algebraic Results

• Important Matrices

(2) “Projection matrix”: $P = X(X'X)^{-1}X'$

$Py = X(X'X)^{-1}X'y = Xb = \hat{y}$ (fitted values)

Py is the projection of y onto the column space of X.

PM = MP = 0 (P and M are orthogonal)

PX = X

- P is symmetric: P = P'

- P is idempotent: P*P = P

- P is singular: $P^{-1}$ does not exist ⇒ rank(P) = k
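A minimal numerical check of the listed properties of M and P, assuming NumPy and simulated X and y. The ranks are computed with an explicit tolerance because the "zero" singular values of M and P are only zero up to rounding error.

```python
import numpy as np

rng = np.random.default_rng(5)
T, k = 30, 3
X = np.column_stack([np.ones(T), rng.normal(size=(T, k - 1))])
y = rng.normal(size=T)

P = X @ np.linalg.solve(X.T @ X, X.T)   # projection matrix X(X'X)^{-1}X'
M = np.eye(T) - P                        # residual maker
b = np.linalg.solve(X.T @ X, X.T @ y)

checks = {
    "My = e": np.allclose(M @ y, y - X @ b),
    "MX = 0": np.allclose(M @ X, 0),
    "PX = X": np.allclose(P @ X, X),
    "PM = 0": np.allclose(P @ M, 0),
    "M symmetric": np.allclose(M, M.T),
    "M idempotent": np.allclose(M @ M, M),
    "P idempotent": np.allclose(P @ P, P),
}
print(checks)
print(np.linalg.matrix_rank(M, tol=1e-8), np.linalg.matrix_rank(P, tol=1e-8))  # T-k and k
```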

Algebraic Results

• Disturbances and Residuals

In the population: $E[X'\varepsilon] = 0$.

In the sample: $X'e = X'(y - Xb) = X'y - X'X(X'X)^{-1}X'y = 0$, so $\frac{1}{T}X'e = 0$.

• We have two ways to look at y:

$y = E[y|X] + \varepsilon$ = Conditional mean + disturbance

$y = Xb + e$ = Projection + residual

Results when X Contains a Constant Term

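• Let the first column of X be a column of ones. That is,

$X = [\iota, x_2, \dots, x_K]$

• Then,

(1) Since X'e = 0 ⇒ $x_1'e = \iota'e = \sum_i e_i = 0$: the residuals sum to zero.

(2) Since y = Xb + e ⇒ $\iota'y = \iota'Xb + \iota'e = \iota'Xb$ ⇒ $\bar{y} = \bar{x}'b$, where $\bar{x}$ is the vector of column means of X.

That is, the regression line passes through the means.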

Note: These results are only true if X contains a constant term!
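A minimal sketch, assuming NumPy and simulated data, that checks both results with a constant term and shows that the residuals need not sum to zero once the constant is dropped. The helper `ols` is a hypothetical name used only here.

```python
import numpy as np

def ols(X, y):
    return np.linalg.solve(X.T @ X, X.T @ y)

rng = np.random.default_rng(6)
T = 50
x2 = rng.normal(3.0, 1.0, T)
y = 1.0 + 2.0 * x2 + rng.normal(size=T)      # simulated data with a true intercept

# (a) X contains a constant term
X = np.column_stack([np.ones(T), x2])
b = ols(X, y)
e = y - X @ b
print(e.sum())                               # ~0: residuals sum to zero
print(y.mean(), X.mean(axis=0) @ b)          # equal: fitted line passes through the means

# (b) No constant term: the residuals need not sum to zero
X_nc = x2[:, None]
e_nc = y - X_nc @ ols(X_nc, y)
print(e_nc.sum())
```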

Frisch-Waugh (1933) Theorem

• Context: Model contains two sets of variables:

X = [ [1, time] | [other variables] ] = [X_1 X_2]

• Regression model:

Ragnar Frisch (1895 – 1973)

$y = X_1\beta_1 + X_2\beta_2 + \varepsilon$ (population)

$y = X_1 b_1 + X_2 b_2 + e$ (sample)

• OLS solution:

$$b = \begin{bmatrix} b_1 \\ b_2 \end{bmatrix} = (X'X)^{-1}X'y = \begin{bmatrix} X_1'X_1 & X_1'X_2 \\ X_2'X_1 & X_2'X_2 \end{bmatrix}^{-1} \begin{bmatrix} X_1'y \\ X_2'y \end{bmatrix}$$

Problem in 1933: Can we estimate $\beta_2$ without inverting the $(k_1+k_2) \times (k_1+k_2)$ X'X matrix? The F-W theorem helps reduce computation by giving a simplified algebraic expression for the OLS coefficient, $b_2$.

F-W: Partitioned Solution

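• Direct manipulation of normal equations produces

$(X'X)b = X'y$

$$X = [X_1, X_2] \text{ so } X'X = \begin{bmatrix} X_1'X_1 & X_1'X_2 \\ X_2'X_1 & X_2'X_2 \end{bmatrix} \text{ and } X'y = \begin{bmatrix} X_1'y \\ X_2'y \end{bmatrix}$$

$$(X'X)b = \begin{bmatrix} X_1'X_1 & X_1'X_2 \\ X_2'X_1 & X_2'X_2 \end{bmatrix} \begin{bmatrix} b_1 \\ b_2 \end{bmatrix} = \begin{bmatrix} X_1'y \\ X_2'y \end{bmatrix}$$

$X_1'X_1 b_1 + X_1'X_2 b_2 = X_1'y$

$X_2'X_1 b_1 + X_2'X_2 b_2 = X_2'y \Rightarrow X_2'X_2 b_2 = X_2'y - X_2'X_1 b_1 = X_2'(y - X_1 b_1)$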

F-W: Partitioned Solution

• Direct manipulation of normal equations produces

$b_2 = (X_2'X_2)^{-1}X_2'(y - X_1 b_1)$ ⇒ Regression of $(y - X_1 b_1)$ on $X_2$

Note: if $X_2'X_1 = 0 \Rightarrow b_2 = (X_2'X_2)^{-1}X_2'y$

• Use of the partitioned inverse result produces a fundamental result: What is the southeast element in the inverse of the moment matrix?

$$\begin{bmatrix} X_1'X_1 & X_1'X_2 \\ X_2'X_1 & X_2'X_2 \end{bmatrix}^{-1}_{(2,2)}$$

• With the partitioned inverse, we get:

$b_2 = [\;]^{-1}_{(2,1)} X_1'y + [\;]^{-1}_{(2,2)} X_2'y$

F-W: Partitioned Solution

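• Recall from the Linear Algebra Review (partitioned inverse by row operations on $[\,A \mid I\,]$):

1. $$\left[\begin{array}{cc|cc} \Sigma_{XX} & \Sigma_{XY} & I & 0 \\ \Sigma_{YX} & \Sigma_{YY} & 0 & I \end{array}\right] \xrightarrow{\Sigma_{XX}^{-1} R_1} \left[\begin{array}{cc|cc} I & \Sigma_{XX}^{-1}\Sigma_{XY} & \Sigma_{XX}^{-1} & 0 \\ \Sigma_{YX} & \Sigma_{YY} & 0 & I \end{array}\right]$$

2. $$\xrightarrow{R_2 - \Sigma_{YX} R_1} \left[\begin{array}{cc|cc} I & \Sigma_{XX}^{-1}\Sigma_{XY} & \Sigma_{XX}^{-1} & 0 \\ 0 & \Sigma_{YY} - \Sigma_{YX}\Sigma_{XX}^{-1}\Sigma_{XY} & -\Sigma_{YX}\Sigma_{XX}^{-1} & I \end{array}\right]$$

3. $$\xrightarrow{[\Sigma_{YY} - \Sigma_{YX}\Sigma_{XX}^{-1}\Sigma_{XY}]^{-1} R_2} \left[\begin{array}{cc|cc} I & \Sigma_{XX}^{-1}\Sigma_{XY} & \Sigma_{XX}^{-1} & 0 \\ 0 & I & -D\Sigma_{YX}\Sigma_{XX}^{-1} & D \end{array}\right], \quad \text{where } D = [\Sigma_{YY} - \Sigma_{YX}\Sigma_{XX}^{-1}\Sigma_{XY}]^{-1}$$

4. $$\xrightarrow{R_1 - \Sigma_{XX}^{-1}\Sigma_{XY} R_2} \left[\begin{array}{cc|cc} I & 0 & \Sigma_{XX}^{-1} + \Sigma_{XX}^{-1}\Sigma_{XY} D \Sigma_{YX}\Sigma_{XX}^{-1} & -\Sigma_{XX}^{-1}\Sigma_{XY} D \\ 0 & I & -D\Sigma_{YX}\Sigma_{XX}^{-1} & D \end{array}\right]$$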

F-W: Partitioned Solution

• Then,

1. Matrix $$X'X = \begin{bmatrix} X_1'X_1 & X_1'X_2 \\ X_2'X_1 & X_2'X_2 \end{bmatrix}$$

2. Inverse $$(X'X)^{-1} = \begin{bmatrix} (X_1'X_1)^{-1} + (X_1'X_1)^{-1}X_1'X_2 D X_2'X_1 (X_1'X_1)^{-1} & -(X_1'X_1)^{-1}X_1'X_2 D \\ -D X_2'X_1 (X_1'X_1)^{-1} & D \end{bmatrix}$$

where $D = [X_2'X_2 - X_2'X_1(X_1'X_1)^{-1}X_1'X_2]^{-1} = [X_2'(I - X_1(X_1'X_1)^{-1}X_1')X_2]^{-1}$

$D = [X_2'M_1X_2]^{-1}$

The algebraic result is: $[\;]^{-1}_{(2,1)} = -D X_2'X_1 (X_1'X_1)^{-1}$

$[\;]^{-1}_{(2,2)} = D = [X_2'M_1X_2]^{-1}$

Interesting cases: $X_1$ is a single variable & $X_1 = \iota$.

• Then, continuing the algebraic manipulation:

$b_2 = [\;]^{-1}_{(2,1)} X_1'y + [\;]^{-1}_{(2,2)} X_2'y = D X_2'(I - X_1(X_1'X_1)^{-1}X_1')y = [X_2'M_1X_2]^{-1}X_2'M_1y$

Partitioned Solution - Frisch-Waugh Result

• Then, continuing the algebraic manipulation:

$b_2 = [X_2'M_1X_2]^{-1}X_2'M_1y$

$= [X_2'M_1'M_1X_2]^{-1}X_2'M_1'M_1y$

$= [X_2^{*\prime}X_2^*]^{-1}X_2^{*\prime}y^*$

where $Z^* = M_1Z$ = residuals from a regression of Z on $X_1$.

This is Frisch and Waugh's result, the double residual regression. We have a regression of residuals on residuals!

• Two ways to estimate $b_2$:

(1) Detrend the other variables. Use the detrended data in the regression.

(2) Use all the original variables, including the constant and time trend.

Detrend: Compute the residuals from the regressions of the variables on a constant and a time trend.
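A minimal sketch of the two ways to estimate $b_2$, assuming NumPy and simulated trending data (the coefficients are hypothetical). Way (2) regresses y on all variables; way (1) regresses the detrended y, $M_1y$, on the detrended $X_2$, $M_1X_2$. The last regression uses the raw y, illustrating that y itself does not need to be partialled, because $X_2'M_1'M_1 = X_2'M_1$.

```python
import numpy as np

def ols(X, y):
    return np.linalg.solve(X.T @ X, X.T @ y)

rng = np.random.default_rng(7)
T = 120
t = np.arange(T, dtype=float)
X1 = np.column_stack([np.ones(T), t])                 # constant and time trend
X2 = np.column_stack([0.05 * t + rng.normal(size=T),  # simulated "other variables"
                      rng.normal(size=T)])
y = 2.0 + 0.1 * t + X2 @ np.array([1.5, -0.7]) + rng.normal(size=T)

# (2) Use all the original variables
b2_full = ols(np.column_stack([X1, X2]), y)[2:]

# (1) Detrend: M1 Z = residuals from regressing Z on a constant and a time trend
M1 = np.eye(T) - X1 @ np.linalg.solve(X1.T @ X1, X1.T)
X2_star, y_star = M1 @ X2, M1 @ y
b2_fw = ols(X2_star, y_star)          # regression of residuals on residuals
b2_fw_raw_y = ols(X2_star, y)         # y need not be detrended

print(b2_full, b2_fw, b2_fw_raw_y)
```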

Frisch-Waugh Result: Implications

• FW result:

$b_2 = [X_2'M_1X_2]^{-1}X_2'M_1y = [X_2'M_1'M_1X_2]^{-1}X_2'M_1'M_1y$

• Implications

- We can isolate a single coefficient in a regression.

- It is not necessary to 'partial' the other Xs out of y ($M_1$ is idempotent).

- Suppose $X_1 \perp X_2$. Then, we have the orthogonal regression

⇒ $b_2 = (X_2'X_2)^{-1}X_2'y$ & $b_1 = (X_1'X_1)^{-1}X_1'y$

Example: De-mean

Let $X_1 = \iota$

⇒ $P_1 = \iota(\iota'\iota)^{-1}\iota' = \iota(T)^{-1}\iota' = \iota\iota'/T$

⇒ $M_1 z = z - \iota\iota'z/T = z - \iota\bar{z}$ ($M_1$ demeans z)

$b_2 = [X_2'M_1'M_1X_2]^{-1}X_2'M_1'M_1y$

Note: We can do linear regression on data in mean deviation form.
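A minimal sketch of the de-meaning case $X_1 = \iota$, assuming NumPy and simulated data with hypothetical coefficients. It checks that $M_1 = I - \iota\iota'/T$ subtracts the mean, and that the slopes from a regression with a constant equal the slopes from a regression on data in mean-deviation form without a constant.

```python
import numpy as np

rng = np.random.default_rng(8)
T = 60
X2 = rng.normal(size=(T, 2))
y = 4.0 + X2 @ np.array([0.8, -1.1]) + rng.normal(size=T)

iota = np.ones((T, 1))
M1 = np.eye(T) - iota @ iota.T / T           # M1 = I - ii'/T
z = X2[:, 0]
print(np.allclose(M1 @ z, z - z.mean()))     # M1 demeans z

# Slopes from the regression with a constant ...
b_full = np.linalg.lstsq(np.column_stack([np.ones(T), X2]), y, rcond=None)[0]
# ... equal the slopes from demeaned data with no constant
X2_dm, y_dm = X2 - X2.mean(axis=0), y - y.mean()
b_dm = np.linalg.lstsq(X2_dm, y_dm, rcond=None)[0]
print(b_full[1:], b_dm)
```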

Application: Detrending G and PG

Example taken from Greene

G: Consumption of Gasoline

PG: Price of Gasoline

Application: Detrending Y

Y: Income

Application: Detrended Regression

Regression of detrended Gasoline (M1G) on detrended Price of Gasoline (M1PG) and detrended Income (M1Y)

Goodness of Fit of the Regression

• After estimating the model, we would like to judge the adequacy of the model. There are two ways to do this:

- Visual: plots of fitted values and residuals, histograms of residuals.

- Numerical measures: R², adjusted R², AIC, BIC, etc.

• Numerical measures. In general, they are simple and easy to compute. We call them goodness-of-fit measures. Most popular: R².

• Definition: Variation

In the context of a model, we consider the variation of a variable as the movement of the variable, usually associated with movement of another variable.

Goodness of Fit of the Regression

• Total variation = $\sum_{i=1}^n (y_i - \bar{y})^2 = y'M^0y$,

where $M^0 = I - \iota(\iota'\iota)^{-1}\iota'$ = the M de-meaning matrix.

• Decomposition of total variation (assume $X_1 = \iota$, a constant):

$y = Xb + e$, so

$M^0y = M^0Xb + M^0e = M^0Xb + e$ (deviations from means)

$y'M^0y = b'(X'M^0)(M^0X)b + e'e$

$= b'X'M^0Xb + e'e$ ($M^0$ is idempotent & $e'M^0X = 0$)

TSS = SSR + RSS

TSS: Total sum of squares

SSR: Regression Sum of Squares (also called ESS: explained SS)

RSS: Residual Sum of Squares (also called SSE: SS of errors)

A Goodness of Fit Measure

• TSS = SSR + RSS

• We want to have a measure that describes the fit of a regression. Simplest measure: the standard error of the regression (SER)

$SER = \sqrt{RSS/(T-k)}$

⇒ SER depends on units. Not good!

• R-squared (R²)

$1 = SSR/TSS + RSS/TSS$

$R^2 = SSR/TSS$ = Regression variation/Total variation

$R^2 = b'X'M^0Xb / y'M^0y = 1 - e'e / y'M^0y$

$= (\hat{y} - \iota\bar{y})'(\hat{y} - \iota\bar{y}) / (y - \iota\bar{y})'(y - \iota\bar{y}) = [\hat{y}'\hat{y} - T\bar{y}^2] / [y'y - T\bar{y}^2]$
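A minimal sketch, assuming NumPy and simulated data with a constant in X, that checks the decomposition TSS = SSR + RSS and the three equivalent R² formulas above. Without the constant, the decomposition (and hence the equivalence) generally fails.

```python
import numpy as np

rng = np.random.default_rng(9)
T = 80
X = np.column_stack([np.ones(T), rng.normal(size=(T, 2))])   # includes a constant
y = X @ np.array([1.0, 2.0, -0.5]) + rng.normal(size=T)

b = np.linalg.solve(X.T @ X, X.T @ y)
y_hat = X @ b
e = y - y_hat
y_bar = y.mean()

TSS = np.sum((y - y_bar) ** 2)        # y'M0y
SSR = np.sum((y_hat - y_bar) ** 2)    # b'X'M0Xb (regression SS)
RSS = e @ e                           # e'e (residual SS)

r2_a = SSR / TSS
r2_b = 1 - RSS / TSS
r2_c = (y_hat @ y_hat - T * y_bar**2) / (y @ y - T * y_bar**2)
print(np.isclose(TSS, SSR + RSS), r2_a, r2_b, r2_c)
```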

A Goodness of Fit Measure

• $R^2 = SSR/TSS = b'X'M^0Xb / y'M^0y = 1 - e'e / y'M^0y$

Note: R² is bounded by zero and one only if:

(a) There is a constant term in X (we need $e'M^0X = 0$!)

(b) The line is computed by linear least squares.

• Adding regressors

R² never falls when regressors (say z) are added to the regression:

$R^2_{Xz} = R^2_X + (1 - R^2_X) \, r^{*2}_{yz}$

$r^*_{yz}$: partial correlation coefficient between y and z.

Problem: Judging a model based on R² tends to lead to over-fitting.
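A minimal sketch checking the adding-regressors formula, assuming NumPy and simulated data. The partial correlation $r^*_{yz}$ is computed here as the correlation between the residuals of y and of z after regressing each on X (the FW idea); the helper names `r2` and `resid` are hypothetical.

```python
import numpy as np

def r2(X, y):
    b = np.linalg.lstsq(X, y, rcond=None)[0]
    e = y - X @ b
    return 1 - e @ e / np.sum((y - y.mean()) ** 2)

def resid(A, v):
    return v - A @ np.linalg.lstsq(A, v, rcond=None)[0]

rng = np.random.default_rng(10)
T = 100
X = np.column_stack([np.ones(T), rng.normal(size=T)])
z = rng.normal(size=T)                                  # added regressor (simulated)
y = X @ np.array([0.5, 1.0]) + 0.3 * z + rng.normal(size=T)

# Partial correlation of y and z after removing X from both
ry, rz = resid(X, y), resid(X, z)
r_yz = (ry @ rz) / np.sqrt((ry @ ry) * (rz @ rz))

R2_X = r2(X, y)
R2_Xz = r2(np.column_stack([X, z]), y)
print(R2_Xz >= R2_X, np.isclose(R2_Xz, R2_X + (1 - R2_X) * r_yz**2))
```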

A Goodness of Fit Measure

• Comparing Regressions

- Make sure the denominator in R² is the same, i.e., the same left-hand-side variable. Example: linear vs. loglinear. Loglinear will almost always appear to fit better because taking logs reduces variation.

• Linear Transformation of data

- Based on X, $b = (X'X)^{-1}X'y$.

Suppose we work with $X^* = XH$ instead (H is not singular).

$P^*y = X^*b^* = XH(H'X'XH)^{-1}H'X'y$ (recall $(ABC)^{-1} = C^{-1}B^{-1}A^{-1}$)

$= XHH^{-1}(X'X)^{-1}(H')^{-1}H'X'y$

$= X(X'X)^{-1}X'y = Py$

⇒ same fit, same residuals, same R²!
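A minimal sketch of this invariance, assuming NumPy, simulated data, and a hypothetical nonsingular H (a rescaling plus a mixing of columns, as in a change of units). The fitted values are unchanged, while the coefficients transform as $b^* = H^{-1}b$, which follows from the same algebra.

```python
import numpy as np

rng = np.random.default_rng(11)
T = 40
X = np.column_stack([np.ones(T), rng.normal(size=(T, 2))])
y = rng.normal(size=T)
H = np.array([[1.0, 0.0, 0.0],     # hypothetical nonsingular H (lower triangular, det = 50)
              [2.0, 100.0, 0.0],
              [0.0, 1.0, 0.5]])

def fitted(X, y):
    return X @ np.linalg.solve(X.T @ X, X.T @ y)   # Py

X_star = X @ H
print(np.allclose(fitted(X, y), fitted(X_star, y)))   # same fit, same residuals, same R^2

b = np.linalg.solve(X.T @ X, X.T @ y)
b_star = np.linalg.solve(X_star.T @ X_star, X_star.T @ y)
print(np.allclose(b_star, np.linalg.solve(H, b)))     # coefficients change: b* = H^{-1} b
```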

Adjusted R-squared

• R² is modified with a penalty for the number of parameters: Adjusted R²

$\bar{R}^2 = 1 - [(T-1)/(T-k)](1 - R^2) = 1 - [(T-1)/(T-k)] \, RSS/TSS$

$= 1 - [RSS/(T-k)] \, [(T-1)/TSS]$

⇒ maximizing adjusted R² ⇔ minimizing $RSS/(T-k) = s^2$

• The degrees-of-freedom adjustment, dividing RSS by (T-k) rather than T, is motivated by unbiasedness: under (A1)-(A4), $s^2 = RSS/(T-k)$ is an unbiased estimator of σ².

• Adjusted R² includes a penalty for variables that do not add much fit. It can fall when a variable is added to the equation.

• It will rise when a variable, say z, is added to the regression if and only if the t-ratio on z is larger than one in absolute value.
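A minimal sketch that checks the |t| > 1 rule numerically, assuming NumPy and simulated data. It repeatedly adds a pure-noise regressor z and records whether "adjusted R² rises" agrees with "|t| on z exceeds one"; `fit_stats` is a hypothetical helper name.

```python
import numpy as np

def fit_stats(X, y):
    T, k = X.shape
    b = np.linalg.solve(X.T @ X, X.T @ y)
    e = y - X @ b
    s2 = e @ e / (T - k)
    tss = np.sum((y - y.mean()) ** 2)
    adj_r2 = 1 - s2 / (tss / (T - 1))          # 1 - [RSS/(T-k)] [(T-1)/TSS]
    se = np.sqrt(np.diag(s2 * np.linalg.inv(X.T @ X)))
    return adj_r2, b / se                      # adjusted R^2 and t-ratios

rng = np.random.default_rng(12)
T = 60
X = np.column_stack([np.ones(T), rng.normal(size=T)])
y = X @ np.array([1.0, 0.5]) + rng.normal(size=T)
adj_before, _ = fit_stats(X, y)

agree = []
for _ in range(200):
    z = rng.normal(size=T)                     # candidate regressor (pure noise here)
    adj_after, t = fit_stats(np.column_stack([X, z]), y)
    agree.append((adj_after > adj_before) == (abs(t[-1]) > 1))
print(all(agree))
```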

Adjusted R-squared

• Theil (1957) shows that, under certain assumptions (an important one: the true model is being considered), if we consider two linear models

M1: $y = X_1\beta_1 + \varepsilon_1$

M2: $y = X_2\beta_2 + \varepsilon_2$

and choose the model with the smaller s² (or, larger adjusted R²), we will select the true model, M1, on average.

• In this sense, we say that “maximizing adjusted R²” is an unbiased model-selection criterion.

• In the context of model selection, the adjusted R² is also referred to as Theil's information criterion.

Other Goodness of Fit Measures

• There are other goodness-of-fit measures that also incorporate penalties for the number of parameters (degrees of freedom).

• Information Criteria

- Amemiya: $[e'e/(T - k)](1 + k/T)$

- Akaike Information Criterion (AIC)

$AIC = -\frac{2}{T}(\ln L - k)$, L: Likelihood

⇒ if normality: $AIC = \ln(e'e/T) + (2/T) \, k$ (+ constants)

- Bayes-Schwarz Information Criterion (BIC)

$BIC = -\left(\frac{2}{T}\ln L - \frac{\ln T}{T} \, k\right)$

⇒ if normality: $BIC = \ln(e'e/T) + [\ln(T)/T] \, k$ (+ constants)
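A minimal sketch, assuming NumPy, normal errors, and simulated data, that computes the criteria both from the maximized log likelihood and from the "if normality" forms. The two versions differ by the constant $1 + \ln 2\pi$, which is why it can be dropped when comparing models. The model with three irrelevant regressors and the helper `info_criteria` are hypothetical.

```python
import numpy as np

def info_criteria(X, y):
    T, k = X.shape
    b = np.linalg.lstsq(X, y, rcond=None)[0]
    e = y - X @ b
    sig2 = e @ e / T                                       # e'e/T
    lnL = -T / 2 * (np.log(2 * np.pi) + np.log(sig2) + 1)  # maximized normal log likelihood
    aic_full = -2 / T * (lnL - k)
    bic_full = -(2 / T * lnL - np.log(T) / T * k)
    aic = np.log(sig2) + 2 * k / T                         # constants dropped
    bic = np.log(sig2) + np.log(T) * k / T
    amemiya = (e @ e / (T - k)) * (1 + k / T)
    return aic_full, bic_full, aic, bic, amemiya

rng = np.random.default_rng(13)
T = 100
x = rng.normal(size=T)
y = 1.0 + 0.5 * x + rng.normal(size=T)
X_small = np.column_stack([np.ones(T), x])
X_big = np.column_stack([X_small, rng.normal(size=(T, 3))])  # adds 3 irrelevant regressors

for name, X in [("small", X_small), ("big", X_big)]:
    aic_full, bic_full, aic, bic, amemiya = info_criteria(X, y)
    print(name, round(aic, 4), round(bic, 4), round(amemiya, 4))
```

Because $\ln T > 2$ once T exceeds about 7, BIC penalizes extra parameters more heavily than AIC in typical samples.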

Maximum Likelihood Estimation

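• We will assume the errors, ε, follow a normal distribution:

(A5) $\varepsilon|X \sim N(0, \sigma^2 I_T)$

• Then, we can write the joint pdf of y as

$$f(y_t) = \left(\frac{1}{2\pi\sigma^2}\right)^{1/2} \exp\left[-\frac{1}{2\sigma^2}(y_t - x_t'\beta)^2\right]$$

$$L = f(y_1, y_2, \dots, y_T | \beta, \sigma^2) = \prod_{t=1}^T \left(\frac{1}{2\pi\sigma^2}\right)^{1/2} \exp\left[-\frac{1}{2\sigma^2}(y_t - x_t'\beta)^2\right] = \frac{1}{(2\pi\sigma^2)^{T/2}} \exp\left(-\frac{1}{2\sigma^2}\varepsilon'\varepsilon\right)$$

Taking logs, we have the log likelihood function

$$\ln L = -\frac{T}{2}\ln 2\pi - \frac{T}{2}\ln\sigma^2 - \frac{1}{2\sigma^2}\varepsilon'\varepsilon$$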

Maximum Likelihood Estimation

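• Let θ = (β, σ²). Then, we want to

$$\max_\theta \ln L(\theta | y, X) = -\frac{T}{2}\ln 2\pi - \frac{T}{2}\ln\sigma^2 - \frac{1}{2\sigma^2}(y - X\beta)'(y - X\beta)$$

• Then, the f.o.c.:

$$\frac{\partial \ln L}{\partial \beta} = -\frac{1}{2\sigma^2}(-2X'y + 2X'X\beta) = \frac{1}{\sigma^2}(X'y - X'X\beta) = 0$$

$$\frac{\partial \ln L}{\partial \sigma^2} = -\frac{T}{2\sigma^2} + \frac{1}{2\sigma^4}(y - X\beta)'(y - X\beta) = 0$$

Note: The f.o.c. deliver the normal equations for β! The solution to the normal equations, $\beta_{MLE}$, is also the LS estimator, b. That is,

$$\hat\beta_{MLE} = b = (X'X)^{-1}X'y; \qquad \hat\sigma^2_{MLE} = \frac{e'e}{T}$$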

• Nice result for b: ML estimators have very good properties!
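A minimal sketch, assuming NumPy and simulated normal data with hypothetical parameters, that evaluates both f.o.c. at $(b, e'e/T)$ and confirms they are numerically zero, and that small perturbations lower the log likelihood. It also shows that $\hat\sigma^2_{MLE} = e'e/T$ is smaller than the unbiased $s^2 = e'e/(T-k)$.

```python
import numpy as np

def loglik(beta, sig2, X, y):
    T = y.size
    r = y - X @ beta
    return -T / 2 * np.log(2 * np.pi) - T / 2 * np.log(sig2) - r @ r / (2 * sig2)

rng = np.random.default_rng(13)
T, k = 200, 3
X = np.column_stack([np.ones(T), rng.normal(size=(T, k - 1))])
y = X @ np.array([0.5, -1.0, 2.0]) + rng.normal(0, 1.5, T)

b = np.linalg.solve(X.T @ X, X.T @ y)   # beta_MLE = OLS b
e = y - X @ b
sig2_mle = e @ e / T                     # sigma^2_MLE = e'e/T
s2 = e @ e / (T - k)                     # unbiased estimate, for comparison

# First-order conditions evaluated at the MLE
score_beta = X.T @ e / sig2_mle
score_sig2 = -T / (2 * sig2_mle) + e @ e / (2 * sig2_mle**2)
print(score_beta, score_sig2)

# Small perturbations lower the log likelihood
best = loglik(b, sig2_mle, X, y)
print(best > loglik(b + 0.01, sig2_mle, X, y), best > loglik(b, 1.05 * sig2_mle, X, y))
```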

ML: Score and Information Matrix

Definition: Score (or efficient score)

$$S(X; \theta) = \frac{\partial \log L(X | \theta)}{\partial \theta} = \sum_{i=1}^n \frac{\partial \log f(x_i | \theta)}{\partial \theta}$$

S(X; θ) is called the score of the sample. It is the vector of partial derivatives (the gradient) of the log likelihood with respect to the parameter θ. If we have k parameters, the score will have a k×1 dimension.

Definition: Fisher information for a single observation:

$$I(\theta) = E\left[\left(\frac{\partial \log f(X | \theta)}{\partial \theta}\right)^2\right]$$

I(θ) is sometimes just called information. It measures the shape of log f(X|θ).

ML: Score and Information Matrix

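• The concept of information can be generalized for the k-parameter case. In this case:

$$I(\theta) = E\left[\frac{\partial \log L}{\partial \theta}\left(\frac{\partial \log L}{\partial \theta}\right)^T\right]$$

This is a k×k matrix.

If L is twice differentiable with respect to θ, and under certain regularity conditions, then the information may also be written as

$$I(\theta) = E\left[\frac{\partial \log L}{\partial \theta}\left(\frac{\partial \log L}{\partial \theta}\right)^T\right] = -E\left[\frac{\partial^2 \log L(X | \theta)}{\partial \theta \, \partial \theta'}\right]$$

I(θ) is called the information matrix (negative expected Hessian). It measures the shape of the likelihood function.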

ML: Score and Information Matrix

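• Properties of S(X; θ):

$$S(X; \theta) = \frac{\partial \log L(X | \theta)}{\partial \theta} = \sum_{i=1}^n \frac{\partial \log f(x_i | \theta)}{\partial \theta}$$

(1) E[S(X; θ)] = 0.

$$\int f(x;\theta)\,dx = 1 \Rightarrow \int \frac{\partial f(x;\theta)}{\partial\theta}\,dx = 0 \Rightarrow \int \frac{1}{f(x;\theta)}\frac{\partial f(x;\theta)}{\partial\theta} f(x;\theta)\,dx = 0$$

$$\Rightarrow \int \frac{\partial \log f(x;\theta)}{\partial\theta} f(x;\theta)\,dx = 0 \Rightarrow E[S(x;\theta)] = 0$$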

ML: Score and Information Matrix

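(2) Var[S(X; θ)] = n I(θ)

$$\int \frac{\partial \log f(x;\theta)}{\partial\theta} f(x;\theta)\,dx = 0$$

Let's differentiate the above integral once more:

$$\int \frac{\partial^2 \log f(x;\theta)}{\partial\theta \, \partial\theta'} f(x;\theta)\,dx + \int \frac{\partial \log f(x;\theta)}{\partial\theta}\frac{\partial f(x;\theta)}{\partial\theta'}\,dx = 0$$

$$\int \frac{\partial^2 \log f(x;\theta)}{\partial\theta \, \partial\theta'} f(x;\theta)\,dx + \int \frac{\partial \log f(x;\theta)}{\partial\theta}\frac{1}{f(x;\theta)}\frac{\partial f(x;\theta)}{\partial\theta'} f(x;\theta)\,dx = 0$$

$$\int \left(\frac{\partial \log f(x;\theta)}{\partial\theta}\right)^2 f(x;\theta)\,dx + \int \frac{\partial^2 \log f(x;\theta)}{\partial\theta \, \partial\theta'} f(x;\theta)\,dx = 0$$

$$\Rightarrow E\left[\left(\frac{\partial \log f(x;\theta)}{\partial\theta}\right)^2\right] = -E\left[\frac{\partial^2 \log f(x;\theta)}{\partial\theta \, \partial\theta'}\right] = I(\theta)$$

Since the score of each observation has mean zero (property (1)), its variance equals I(θ). With n i.i.d. observations:

$$\mathrm{Var}[S(X;\theta)] = n \, \mathrm{Var}\left[\frac{\partial \log f(x;\theta)}{\partial\theta}\right] = n I(\theta)$$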

ML: Score and Information Matrix

(3) If $S(x_i; \theta)$ are i.i.d. (with finite first and second moments), then we can apply the CLT to get:

$$S_n(X;\theta) = \sum_i S(x_i;\theta) \overset{a}{\sim} N(0, nI(\theta)).$$

Note: This is an important result. It will drive the distribution of ML estimators.
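A minimal Monte Carlo sketch of properties (1) and (2), assuming NumPy and an exponential model $f(x|\lambda) = \lambda e^{-\lambda x}$ chosen only for illustration (it is not used in the lecture). For this model the score of one observation is $1/\lambda - x$ and $I(\lambda) = 1/\lambda^2$, so the sample score should have mean near 0 and variance near $n/\lambda^2$.

```python
import numpy as np

lam, n, R = 2.0, 50, 40_000
rng = np.random.default_rng(14)
x = rng.exponential(scale=1 / lam, size=(R, n))   # R samples of size n from f(x|lam)

# Score of one observation: d/dlam [log lam - lam*x] = 1/lam - x
S_n = (1 / lam - x).sum(axis=1)                    # sample score, one per replication
I_lam = 1 / lam**2                                  # Fisher information per observation

print(S_n.mean())                 # close to 0
print(S_n.var(), n * I_lam)       # close to n I(lam)
```

A histogram of `S_n` would also look approximately normal, in line with property (3).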

ML: Score and Information Matrix – Example

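• Again, we assume:

$y_i = x_i'\beta + \varepsilon_i$, $\varepsilon_i \sim N(0, \sigma^2)$

or

$y = X\beta + \varepsilon$, $\varepsilon \sim N(0, \sigma^2 I_T)$

• Taking logs, we have the log likelihood function:

$$\ln L = -\frac{T}{2}\ln 2\pi\sigma^2 - \frac{1}{2\sigma^2}\sum_{i=1}^T \varepsilon_i^2 = -\frac{T}{2}\ln 2\pi - \frac{T}{2}\ln\sigma^2 - \frac{1}{2\sigma^2}(y - X\beta)'(y - X\beta)$$

• The score function is the vector of first derivatives of log L w.r.t. θ = (β, σ²):

$$\frac{\partial \ln L}{\partial \beta} = \frac{1}{\sigma^2}\sum_{i=1}^T x_i\varepsilon_i = \frac{X'\varepsilon}{\sigma^2}$$

$$\frac{\partial \ln L}{\partial \sigma^2} = -\frac{T}{2\sigma^2} + \frac{1}{2\sigma^4}\sum_{i=1}^T \varepsilon_i^2 = \frac{1}{2\sigma^2}\left[\frac{\varepsilon'\varepsilon}{\sigma^2} - T\right]$$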

ML: Score and Information Matrix – Example

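• Then, we take second derivatives to calculate I(θ):

$$\frac{\partial^2 \ln L}{\partial\beta \, \partial\beta'} = -\frac{1}{\sigma^2}\sum_{i=1}^T x_i x_i' = -\frac{X'X}{\sigma^2}$$

$$\frac{\partial^2 \ln L}{\partial\beta \, \partial\sigma^2} = -\frac{1}{\sigma^4}\sum_{i=1}^T x_i\varepsilon_i$$

$$\frac{\partial^2 \ln L}{\partial\sigma^2 \, \partial\sigma^2} = -\frac{1}{2\sigma^4}\left[\frac{\varepsilon'\varepsilon}{\sigma^2} - T\right] - \frac{1}{2\sigma^2}\left(\frac{\varepsilon'\varepsilon}{\sigma^4}\right) = -\frac{1}{2\sigma^4}\left[2\frac{\varepsilon'\varepsilon}{\sigma^2} - T\right]$$

• Then, using $E[\varepsilon|X] = 0$ and $E[\varepsilon'\varepsilon|X] = T\sigma^2$,

$$I(\theta) = -E\left[\frac{\partial^2 \ln L}{\partial\theta \, \partial\theta'}\right] = \begin{bmatrix} \frac{1}{\sigma^2}X'X & 0 \\ 0 & \frac{T}{2\sigma^4} \end{bmatrix}$$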
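A minimal Monte Carlo sketch connecting this example with property (2): with X held fixed, the average outer product of the score at the true θ should approach the analytic I(θ) above. It assumes NumPy and hypothetical values of T and σ²; the β components of the score do not depend on β, so β itself is not needed.

```python
import numpy as np

rng = np.random.default_rng(15)
T, sigma2, R = 30, 1.5, 100_000
X = np.column_stack([np.ones(T), rng.normal(size=T)])    # held fixed across replications

# Analytic information matrix for theta = (beta, sigma^2)
I_theta = np.zeros((3, 3))
I_theta[:2, :2] = X.T @ X / sigma2
I_theta[2, 2] = T / (2 * sigma2**2)

# Monte Carlo: the score evaluated at the true theta has E[S S'] = I(theta)
eps = rng.normal(0.0, np.sqrt(sigma2), size=(R, T))
score_beta = eps @ X / sigma2                                     # each row: (X'eps / sigma^2)'
score_sig2 = ((eps**2).sum(axis=1) / sigma2 - T) / (2 * sigma2)   # (1/2sigma^2)[eps'eps/sigma^2 - T]
S = np.column_stack([score_beta, score_sig2])
outer_mean = S.T @ S / R

print(np.round(outer_mean, 2))
print(np.round(I_theta, 2))
```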

ML: Score and Information Matrix

In deriving properties (1) and (2), we have made some implicit assumptions, which are called regularity conditions:

(i) θ lies in an open interval of the parameter space, Ω.

(ii) The 1st and 2nd derivatives of f(X; θ) w.r.t. θ exist.

(iii) L(X; θ) can be differentiated w.r.t. θ under the integral sign.

(iv) E[S(X; θ)²] > 0, for all θ in Ω.

(v) T(X) L(X; θ) can be differentiated w.r.t. θ under the integral sign.

Recall: If $S(x_i; \theta)$ are i.i.d. and the regularity conditions apply, then we can apply the CLT to get:

$$S(X;\theta) \overset{a}{\sim} N(0, nI(\theta))$$

ML: Cramer-Rao inequality

Theorem: Cramer-Rao inequality

Let the random sample $(X_1, \dots, X_n)$ be drawn from a pdf f(X|θ) and let $T = T(X_1, \dots, X_n)$ be a statistic such that E[T] = u(θ), differentiable in θ. Let b(θ) = u(θ) - θ, the bias in T. Assume regularity conditions. Then,

$$\mathrm{Var}(T) \ge \frac{[u'(\theta)]^2}{nI(\theta)} = \frac{[1 + b'(\theta)]^2}{nI(\theta)}$$

Regularity conditions:

(1) θ lies in an open interval Ω of the real line.

(2) For all θ in Ω, ∂f(X|θ)/∂θ is well defined.

(3) ∫L(X|θ)dx can be differentiated w.r.t. θ under the integral sign.

(4) E[S(X;θ)²] > 0, for all θ in Ω.

(5) ∫T(X) L(X|θ)dx can be differentiated w.r.t. θ under the integral sign.

ML: Cramer-Rao inequality

$$\mathrm{Var}(T) \ge \frac{[u'(\theta)]^2}{nI(\theta)} = \frac{[1 + b'(\theta)]^2}{nI(\theta)}$$

The lower bound for Var(T) is called the Cramer-Rao (CR) lower bound.

Corollary: If T(X) is an unbiased estimator of θ, then

$$\mathrm{Var}(T) \ge (nI(\theta))^{-1}$$

Note: This theorem, together with the properties of ML below, establishes the superiority of the ML estimator. The CR lower bound is the smallest theoretical variance of an unbiased estimator. It can be shown that ML estimators achieve this bound asymptotically; therefore, in large samples, any other (regular) estimation technique can at best only equal it.

Properties of ML Estimators

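(1) Efficiency. Under general conditions, we have that, asymptotically,

$$\mathrm{Var}(\hat\theta_{MLE}) \approx [nI(\theta)]^{-1}$$

The right-hand side is the Cramer-Rao lower bound (CR-LB). If an estimator can achieve this bound, ML will produce it.

(2) Consistency. We know that $E[S(X_i; \theta)] = 0$ and $\mathrm{Var}[S(X_i; \theta)] = I(\theta)$. The consistency of ML can be shown by applying Khinchine's LLN to $S(X_i; \theta)$ and then to $S_n(X; \theta) = \sum_i S(X_i; \theta)$.

Then, do a 1st-order Taylor expansion of $S_n(X; \theta)$ around $\hat\theta_{MLE}$:

$$S_n(X;\theta) = S_n(X;\hat\theta_{MLE}) + S_n'(X;\theta_n^*)(\theta - \hat\theta_{MLE})$$

$$S_n(X;\theta) = S_n'(X;\theta_n^*)(\theta - \hat\theta_{MLE}), \qquad |\theta_n^* - \theta| \le |\hat\theta_{MLE} - \theta|$$

since $S_n(X;\hat\theta_{MLE}) = 0$. After dividing by n, $S_n(X;\theta)/n$ converges to its expectation, zero, while $S_n'(X;\theta_n^*)/n$ converges to $-I(\theta) \neq 0$; hence $(\hat\theta_{MLE} - \theta)$ converges to zero as well.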

Properties of ML Estimators

(3) Theorem: Asymptotic Normality

Let the likelihood function be $L(X_1, X_2, \dots, X_n | \theta)$. Under general conditions, the MLE of θ is asymptotically distributed as

$$\hat\theta_{MLE} \overset{a}{\sim} N\left(\theta, [nI(\theta)]^{-1}\right)$$

Sketch of a proof. Using the CLT, we've already established

$$S_n(X;\theta) \overset{a}{\sim} N(0, nI(\theta)).$$

Then, using a first-order Taylor expansion as before, we get

$$\frac{1}{n^{1/2}} S_n(X;\theta) = \left[\frac{1}{n} S_n'(X;\theta_n^*)\right] n^{1/2}(\theta - \hat\theta_{MLE})$$

Notice that $E[S'(x_i;\theta)] = -I(\theta)$. Then, apply the LLN to get

$$S_n'(X;\theta_n^*)/n \xrightarrow{p} -I(\theta) \qquad (\text{using } \theta_n^* \xrightarrow{p} \theta).$$

Now, algebra and Slutsky's theorem for random variables give the final result.
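A minimal simulation sketch of asymptotic normality and efficiency, assuming NumPy and the exponential model $f(x|\lambda) = \lambda e^{-\lambda x}$ (chosen only for illustration). Its MLE is $\hat\lambda = 1/\bar{x}$ and $[nI(\lambda)]^{-1} = \lambda^2/n$, so the standardized estimator should be close to N(0, 1) in a large sample.

```python
import numpy as np

lam, n, R = 2.0, 200, 20_000
rng = np.random.default_rng(16)
x = rng.exponential(scale=1 / lam, size=(R, n))
lam_hat = 1 / x.mean(axis=1)              # MLE of the exponential rate in each replication

crlb = lam**2 / n                          # [n I(lam)]^{-1}, with I(lam) = 1/lam^2
z = (lam_hat - lam) / np.sqrt(crlb)        # approximately N(0, 1) for large n
print(lam_hat.var(), crlb)
print(z.mean(), z.std(), np.mean(np.abs(z) < 1.96))
```

In this finite sample the MLE is slightly biased (its mean is $\lambda n/(n-1)$), which shows up as a small positive `z.mean()`; the bias vanishes as n grows.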

Properties of ML Estimators

(4) Sufficiency. If a single sufficient statistic exists for θ, the MLE of θ must be a function of it. That is, $\hat\theta_{MLE}$ depends on the sample observations only through the value of a sufficient statistic.

(5) Invariance. The ML estimate is invariant under functional transformations. That is, if $\hat\theta_{MLE}$ is the MLE of θ and if g(θ) is a function of θ, then $g(\hat\theta_{MLE})$ is the MLE of g(θ).
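A minimal sketch of invariance, assuming NumPy and simulated exponential data (a model chosen only for illustration). The same likelihood is maximized on a fine grid once in terms of the rate λ and once in terms of the mean μ = 1/λ; the two maximizers satisfy $\hat\mu = 1/\hat\lambda$ up to grid spacing, and $\hat\mu$ equals the sample mean.

```python
import numpy as np

rng = np.random.default_rng(17)
x = rng.exponential(scale=0.5, size=500)     # simulated data; true rate lam = 2

def loglik_rate(lam):                        # log L as a function of the rate lam
    return x.size * np.log(lam) - lam * x.sum()

def loglik_mean(mu):                         # same model, parameterized by the mean mu = 1/lam
    return -x.size * np.log(mu) - x.sum() / mu

lam_grid = np.linspace(0.5, 5, 200_001)
mu_grid = np.linspace(0.1, 2, 200_001)
lam_hat = lam_grid[np.argmax(loglik_rate(lam_grid))]
mu_hat = mu_grid[np.argmax(loglik_mean(mu_grid))]
print(lam_hat, 1 / mu_hat)       # g(lam_hat) with g(lam) = 1/lam matches the MLE of the mean
print(mu_hat, x.mean())          # closed form: mu_hat = sample mean
```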