Using Statistical Procedures to Identify Differentially

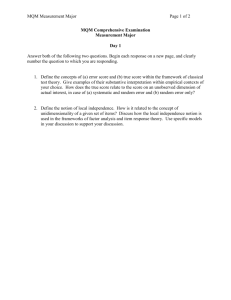

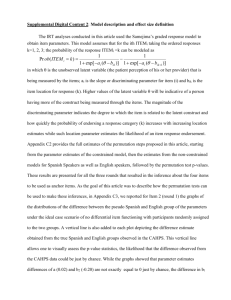
