Integrated On-chip Storage Evaluation in ASIP Synthesis


Manoj Kumar Jain, M. Balakrishnan and Anshul Kumar

Department of Computer Science and Engineering

Indian Institute of Technology Delhi, India

{manoj,mbala,anshul}@cse.iitd.ernet.in

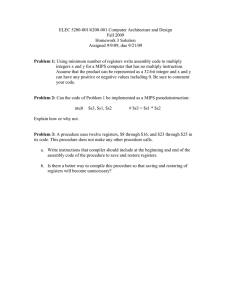

ABSTRACT

An Application Specific Instruction Set Processor (ASIP) exploits special characteristics of the given application(s) to meet the desired performance, cost and power requirements. Performance estimation, which drives design space exploration, is usually done by simulation. As the dimensions of the design space grow, simulator based approaches become too time consuming. In the ASIP domain, this problem can be solved by approaches that perform only scheduling for performance estimation and avoid code generation. However, existing scheduler based approaches do not help in exploring the on-chip storage organization.

We present a scheduler based technique for exploring the register file size, number of register windows and cache configurations in an integrated manner. Performance for different register file sizes is estimated by predicting the number of memory spills and their delay. The technique does not require explicit register assignment. The number of context switches leading to spills is estimated to evaluate the time penalty due to a limited number of register windows, and a cache simulator is used to estimate cache performance.

1. INTRODUCTION AND OBJECTIVES

An Application Specific Instruction Set Processor (ASIP) is a processor designed for one particular application or for a set of specific applications. An ASIP exploits special characteristics of the given application(s) to meet the desired performance, cost and power requirements. ASIPs strike a balance between two extremes: Application Specific Integrated Circuits (ASICs) and general programmable processors. ASIPs offer flexibility that ASICs do not provide, at a lower cost and power than general programmable processors. Thus, ASIPs can be used efficiently in many embedded systems such as servo-motor control, automotive controls, game devices, network routers, avionics and cellular phones.

A typical ASIP design flow includes key steps such as application analysis, design space exploration, instruction set generation, code generation for software, and hardware synthesis [1]. Design space exploration is driven by performance estimates, which are generated using either a simulator based [2, 3] or a scheduler based framework [4, 5]. A simulator based technique needs a retargetable compiler to generate code for each processor configuration to be explored, and simulating the generated code is slow. Moreover, there is a well known trade-off between retargetability and code quality (in terms of performance and code size) compared to hand optimized code. Therefore, in our opinion, a simulation based approach is not suitable for early design space exploration.

In a scheduler based approach, on the other hand, the application code is scheduled on an abstract model of the processor being explored to obtain an estimate of the execution time. It is therefore much faster and well suited to early design space exploration.

However, on-chip storage, which includes register files and cache, is not explored by the scheduler based approaches reported so far. Our previous study [6], using a retargetable code generator and a standard simulator, indicated that the choice of an appropriate number of registers has a significant impact on performance and energy in certain cases. In ASIP synthesis, suitable hardware is chosen for the given applications. Since on-chip space is limited and storage consumes a significant part of the total chip area, it is important to select appropriate on-chip storage. For example, the trade-off between register file size and cache size can be evaluated. Similarly, for a given register file size, the trade-off between the number of register windows and the window size can be evaluated.

The main focus of our work is to include on-chip storage exploration as part of design space exploration. To do this, we need to take into account the influence of the storage architecture on performance, i.e. on execution time. Our approach is to first find the execution time ignoring storage constraints. We then add the overheads due to register spills caused by a limited register file, window spills and restores caused by a limited number of register windows, and cache misses.

In a scheduler based approach, register allocation is the key step in determining the influence of a limited register file size on performance. Most of the approaches suggested for register allocation either perform it after scheduling, like a typical compiler, or try to solve register allocation and scheduling in an integrated manner. Goodman and Hsu [7] suggested a DAG driven technique for register allocation, but their approach needs pre-pass scheduling. Berson, Gupta and Soffa [8] proposed to solve register allocation and instruction scheduling in an integrated manner. However, register allocation before scheduling is desirable when minimizing the register file size is more important than the length of the code sequence. Our technique performs register allocation before scheduling using the concept of register reuse chains [9], with significant extensions.

A number of techniques for memory exploration are reported in the literature [10, 11, 12]. To the best of our knowledge, none of them considers the register file as part of storage space exploration.

Our integrated on-chip storage evaluation methodology is presented in the next Section. Techniques for exploring the register file size, number of windows and cache size are described in Section 3. Exploration and trade-off results with real life examples are presented in Section 4. Applications of the proposed approach are discussed in Section 5, and conclusions are given in the last Section.

2. INTEGRATED ON-CHIP STORAGE EVALUATION METHODOLOGY

Storage exploration is a part of the design space exploration phase of the overall methodology. The proposed technique for storage space exploration is shown in Figure 1. The cycle count for executing the application on the chosen processor and memory configuration is estimated. A parameterized model is used for both the processor and the memory. Parameters of the data cache include size, line size, associativity, replacement policy and access time. The processor configuration specification includes the register file and window organization, along with pipeline information and functional unit (FU) operation capability and latency.

Register allocation is done on unscheduled code using the concept of reuse chains [9] with significant extensions [13]. The proposed technique for register allocation is briefly described in the next Section. We have defined the cost of merging reuse chains considering spills, and developed a systematic way of merging these reuse chains. A priority based resource constrained list scheduler is used for performance estimation. Further, we have proposed a novel technique for global performance estimation based on usage analysis of variables. Global performance estimation is done without code generation.
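List scheduling of this kind is a standard algorithm. The sketch below is a generic version, not the authors' scheduler. It assumes a hypothetical input format: an operation table mapping each operation to its functional unit type and latency, plus a list of dependency edges. It also assumes critical-path length as the priority and fully pipelined functional units, so each unit accepts one new operation per cycle.

```python
from collections import defaultdict


def list_schedule(ops, deps, fu_count):
    """Resource-constrained list scheduling of one basic block's data flow graph.

    ops:      {op: (fu_type, latency)}   (hypothetical input format)
    deps:     [(producer, consumer), ...] edges of an acyclic graph
    fu_count: {fu_type: number of units}
    Returns (start cycle of each op, schedule length in cycles).
    """
    for op, (fu, _) in ops.items():
        if fu_count.get(fu, 0) < 1:
            raise ValueError(f"no functional unit of type {fu!r} for {op!r}")

    succs, preds = defaultdict(list), defaultdict(list)
    for p, c in deps:
        succs[p].append(c)
        preds[c].append(p)

    # Priority: latency-weighted longest path from the op to any sink.
    priority = {}

    def path_len(op):
        if op not in priority:
            priority[op] = ops[op][1] + max((path_len(s) for s in succs[op]), default=0)
        return priority[op]

    for op in ops:
        path_len(op)

    start, cycle = {}, 0
    while len(start) < len(ops):
        used = defaultdict(int)  # units already issued to in this cycle
        ready = [op for op in ops if op not in start and
                 all(p in start and start[p] + ops[p][1] <= cycle for p in preds[op])]
        for op in sorted(ready, key=lambda o: -priority[o]):
            fu = ops[op][0]
            if used[fu] < fu_count[fu]:
                start[op] = cycle
                used[fu] += 1
        cycle += 1

    length = max(start[op] + ops[op][1] for op in ops)
    return start, length
```

Consider two 2-cycle loads feeding an add whose result is stored. With one memory unit the schedule is 6 cycles long; with two memory units it shrinks to 5. In the flow described above, the input would be the data flow graph after the dependencies and spill code from register allocation have been added.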

Further, we estimate the overheads due to limited register windows and data cache memory. We have integrated this technique to explore register file size, windows and cache configurations.

The overall execution time estimate ($ET$) of an application for the specified memory and processor configuration can be expressed as follows.

$$ET = et_R + oh_W + oh_C \tag{1}$$

where

$et_R$: execution time when the register file contains R registers,

$oh_W$: additional schedule overhead due to limited windows, and

$oh_C$: additional schedule overhead due to cache misses.

$et_R$ can be further expressed by the following equation.

$$et_R = bet + oh_{dep} + spill_R \cdot t_R \tag{2}$$

where

$bet$: base execution time considering the constraints of resources other than storage,

$oh_{dep}$: overhead due to additional dependencies inserted during register allocation,

$spill_R$: number of register spills, and

$t_R$: delay associated with each register spill.

Computation of $et_R$ is described in the next Section. $oh_W$ can be further expressed by the following equation.

$$oh_W = spill_W \cdot t_W \tag{3}$$

where

$spill_W$: number of window spills, and

$t_W$: delay associated with each register window spill.

$oh_C$ can be further expressed by the following equation.

$$oh_C = miss_C \cdot t_C \tag{4}$$

where

$miss_C$: number of cache misses, and

$t_C$: cache miss penalty.

Computation of $oh_W$ and $oh_C$ is explained in the next Section. $t_W$ is computed from the register window size and the latency of the ‘store’ instruction. $t_C$ is computed from the block size and the delay associated with each data transfer. The storage configuration selector uses all these execution time estimates to select a processor and memory configuration that meets the desired performance.

3. EXPLORATION OF REGISTER FILE SIZE, NUMBER OF REGISTER WINDOWS AND CACHE SIZE

In this section we propose techniques to estimate the execution time for the various design alternatives to be explored. As mentioned earlier, the register file size, the number and size of register windows, and the cache size are considered while exploring on-chip storage.

3.1 Execution Time Estimation with Limited Registers

The input application (written in C) is profiled using gprof to find the execution count of each basic block and each function. The estimated execution times are multiplied by these execution counts. The intermediate representation is generated using SUIF [14], and control and dependency analysis is performed on this intermediate representation. The control flow graph is generated at the function level, whereas the data flow graph is generated at the basic block level.

For each basic block B, local register allocation is performed using a modified register reuse chains approach [13]. Its inputs are the data flow graph and the number of registers to be used for local register allocation (say k). The data flow graph may be modified by the additional dependencies and spills inserted during register allocation. This modified data flow graph is taken as input by a priority based resource constrained list scheduler, which produces a schedule estimate. This estimate is multiplied by the execution frequency of block B to compute the local estimate $LE_{B,k}$ for this block.

Local estimates are produced for all the basic blocks in a function, for the complete range of register file sizes to be explored. The schedule overheads needed to handle global needs with a limited number of registers are computed using lifetime analysis of variables. For each block, this requires information on the variables used, defined and consumed in the block, and on those live at its entry and exit points. This global needs overhead is also generated for each basic block, for the complete range of register counts. We then decide on the optimal distribution of the available registers (say n) between local register allocation (k registers) and global needs (n − k registers), so that the overall schedule estimate for that block is minimized.

The overall estimate for a block B can be expressed as

$$OE_B = \min_k \left( LE_{B,k} + GE_{B,n-k} \right) \tag{5}$$

where $OE_B$ is the total schedule estimate for basic block B, and $GE_{B,n-k}$ is the overhead to handle global needs with n − k registers. The $OE_B$ values of all blocks are summed to produce estimates at the function level. The estimates of all functions are then added together to produce the overall estimate for the application, i.e. $et_R$:

$$et_R = \sum_{\text{each function}} \; \sum_{\text{each basic block } B} OE_B \tag{6}$$
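Equations (5) and (6) amount to a small search per block followed by a sum. The minimal sketch below assumes that the local estimates $LE_{B,k}$ (already weighted by the block's execution frequency) and the global overheads $GE_{B,m}$ have been tabulated by register count in dictionaries. The table layout and the function names are hypothetical.

```python
def block_estimate(le, ge, n):
    """Equation (5): OE_B = min over k of LE_{B,k} + GE_{B,n-k}.

    le: {k: local estimate with k registers for local allocation}
    ge: {m: global-needs overhead with m registers}
    Returns (OE_B, best k). Only splits present in both tables are tried.
    """
    candidates = [(le[k] + ge[n - k], k) for k in range(n + 1)
                  if k in le and (n - k) in ge]
    if not candidates:
        raise ValueError(f"no valid split of {n} registers")
    return min(candidates)


def application_estimate(functions, n):
    """Equation (6): et_R = sum of OE_B over all blocks of all functions.

    functions: {function name: [(le, ge) for each basic block]}
    """
    return sum(block_estimate(le, ge, n)[0]
               for blocks in functions.values()
               for le, ge in blocks)
```

For example, take the made-up tables `le = {2: 50, 3: 40, 4: 36}` and `ge = {0: 30, 1: 20, 2: 8, 3: 5}` with n = 5. The best split gives 3 registers to local allocation and 2 to global needs, for $OE_B$ = 48.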

3.2 Exploration of Number of Reg. Windows

Processors with a register window scheme typically organize a set of registers as a circular buffer. When a procedure is called (i.e., on a context switch), the tail of the buffer is advanced to allocate a new window of registers that the procedure can use for its locals. On overflow, registers are spilled from the head of the buffer to make room for the new window at the tail. These registers are not reloaded until a chain of returns makes it necessary. We consider the context switches that are due to function calls and returns.

Bhatt et al. [15] proposed a technique to estimate the number of register window spills and restores. It is based on generating a trace of calls and returns from the application execution, and we use their basic idea of instrumenting the application to generate such a trace. Their approach then generates a finite automaton for each number of register windows and feeds the trace to these automata one at a time, computing the number of window spills and restores in each case. Our approach, in contrast, uses a simple and efficient stack based analysis to compute window spills and restores. The trace is used only once, and the spills and restores for the different numbers of register windows are computed simultaneously.

[Figure 1: Storage exploration technique. Inputs: the application with performance constraints, a parameterized memory model, and a parameterized processor model including register file and window configurations. The Storage Explorer derives $et_R$ (register allocation using register reuse chains (RRC), global needs handling, list scheduling; results validated), $oh_W$ (stack based analysis of the function call/return sequence to compute window spills) and $oh_C$ (cache miss penalty using sim-cache). Together these give the execution time estimate $ET$ for each register file size, register window configuration and cache configuration, from which the storage configuration selector chooses.]
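The sketch below shows how spills and restores for every window count can be counted in one pass over a call/return trace. It is not the authors' stack based analysis, whose details are not given here. It assumes a hypothetical trace of "call"/"ret" events that starts inside main with main's window resident, and that all W windows are usable. On a call that finds all W windows occupied, the oldest window is spilled; on a return to a caller whose window was spilled, that window is restored.

```python
def window_spills(trace, max_windows):
    """Count register-window spills and restores for every window count
    W = 1..max_windows in a single pass over a call/return trace.

    trace: iterable of "call" / "ret" events (hypothetical format), starting
    inside main with main's window resident.
    Returns {W: (spills, restores)}.
    """
    sizes = range(1, max_windows + 1)
    resident = {w: 1 for w in sizes}   # frames currently held in windows
    spills = {w: 0 for w in sizes}
    restores = {w: 0 for w in sizes}
    for event in trace:
        if event not in ("call", "ret"):
            raise ValueError(f"unknown event {event!r}")
        for w in sizes:
            if event == "call":
                if resident[w] == w:       # all windows busy: spill the oldest
                    spills[w] += 1
                else:
                    resident[w] += 1
            else:
                resident[w] -= 1           # callee's window is released
                if resident[w] == 0:       # caller was spilled: restore it
                    restores[w] += 1
                    resident[w] = 1
    return {w: (spills[w], restores[w]) for w in sizes}
```

The trace is consumed once and each event updates the counters for every window count, so the cost is proportional to the trace length times `max_windows`. For three nested calls followed by three returns, one window gives 3 spills and 3 restores. Two windows give 2 and 2, three windows give 1 and 1, and four or more windows give none. This is the kind of saturation seen in Figure 3.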

The window size is determined by the total number of registers available and the number of register windows. The register window configuration therefore determines how many registers can be used for allocation within a context. We use the techniques proposed in the last subsection to estimate the execution time when each function gets a specified number of registers for allocation. Window spills ($spill_W$) due to a limited number of register windows are computed as described above. As mentioned earlier (equation 3), the execution penalty due to window spills and restores ($oh_W$) is computed using the window size and the latency of load and store operations on the processor. This additional penalty is added to the estimates produced by the technique to obtain the overall execution time estimates.
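As a worked illustration of equation (3), the sketch below computes the window size and $oh_W$. It makes four assumptions: $spill_W$ counts spills and restores together, as Figure 3 does; windows are of equal size; each spilled or restored register costs one memory access; and the default latency is the 3 cycles per LEON load/store quoted in Section 3.3. The function names are hypothetical.

```python
def window_size(total_registers, num_windows):
    """Registers per window, assuming equal-sized windows."""
    if num_windows < 1:
        raise ValueError("need at least one window")
    return total_registers // num_windows


def window_overhead(spill_w, total_registers, num_windows, mem_latency=3):
    """Equation (3): oh_W = spill_W * t_W.

    Assumes t_W = window size * mem_latency, i.e. every register of the
    window is saved (or restored) by one store (or load) of mem_latency cycles.
    """
    t_w = window_size(total_registers, num_windows) * mem_latency
    return spill_w * t_w
```

For example, 32 registers split into 4 windows give 8-register windows. Under these assumptions, 100 window spills and restores then cost 100 × 8 × 3 = 2400 cycles.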

3.3 Exploration of Cache Size

So far, register file and register window constraints have been considered. We now consider the cache as part of the storage hierarchy for design space exploration.

We observe that the number of memory locations required to store spilled scalar variables and register windows is usually small compared to the total number of cache locations. Therefore, we assume that the spilling overhead is insensitive to the cache organization. This observation allows us to estimate the two independently.

To obtain memory access profiles (total number of accesses, hits, misses, etc.), we need to generate the addresses to which memory accesses are made and then simulate those accesses. Since memory access patterns are typically application dependent, any standard tool set can be used to find memory access profiles. Once the number of misses for a particular cache is known, the miss penalty d ($t_C$ in equation 4) is computed from the block size and delay information using the following equation. The additional schedule overhead due to cache misses is then $oh_C = miss_C \cdot d$.

$$d = \alpha_1 + \alpha_2 (b - 1)$$

where $\alpha_1$ is the time (in processor clock cycles) required to transfer the first data item from the next level of memory to the cache, and $\alpha_2$ is the time required for each of the remaining data transfers. $b$ is the block size, expressed as the number of data transfers needed to fill a block. For our experiments, we have chosen $\alpha_1$ and $\alpha_2$ as 8 and 3 respectively; 3 clocks are required for each load/store on the LEON processor [16]. This additional delay is added to the base execution time estimated by the methodology described earlier to obtain the overall execution time estimates. Cache misses depend on the block size. Small block sizes may not fully exploit locality of reference, while larger block sizes lead to a heavy miss penalty. During exploration, for each cache size we evaluated different combinations of block size, associativity and replacement policy, and identified the combination that gives the minimum number of misses.
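Below is a minimal sketch of the miss-penalty model combined with picking the combination that has the fewest misses for one cache size, using α1 = 8 and α2 = 3 as in our experiments. The dictionary layout is hypothetical. The block size is assumed to be expressed in data transfers (block bytes divided by the transfer width), which is how $b$ enters the equation for d.

```python
def miss_penalty(block_size, alpha1=8, alpha2=3):
    """d = alpha1 + alpha2 * (b - 1), with b counted in data transfers per block."""
    if block_size < 1:
        raise ValueError("block size must be at least one transfer")
    return alpha1 + alpha2 * (block_size - 1)


def cache_overhead(misses_by_config, alpha1=8, alpha2=3):
    """Pick the configuration with the fewest misses and return (config, oh_C).

    misses_by_config: {(block_size, assoc, policy): miss count} for ONE cache
    size, e.g. collected from cache simulator runs.
    oh_C = miss_C * d, i.e. equation (4) with t_C = d.
    """
    best = min(misses_by_config, key=misses_by_config.get)
    block_size = best[0]
    return best, misses_by_config[best] * miss_penalty(block_size, alpha1, alpha2)
```

A 4-transfer block costs 8 + 3 × 3 = 17 cycles per miss. Following the text, the selection ranks configurations by misses alone. Ranking by $oh_C$ instead would only change the `key` used in `min`.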

We have used the SimpleScalar tool set [17] to compute the number of cache misses for different cache sizes. It assumes a MIPS-like processor with some minor changes. Since we do not require timing information from SimpleScalar, we use the sim-cache simulator, which is faster than sim-outorder. sim-cache does not use processor timing information and gives only cache miss statistics. It takes only the address trace along with the cache configurations as input.

We explore only the data cache here, and most of the data is stored in memory as arrays. The layout of these arrays and their sequence of accesses do not depend significantly on the processor. With this assumption, we have used the sim-cache simulator to find cache miss statistics.
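The sweep over block size, associativity and replacement policy for each cache size can be scripted. The sketch below only builds sim-cache command lines. It assumes SimpleScalar's cache specification format `<name>:<nsets>:<bsize>:<assoc>:<repl>`, passed via `-cache:dl1`, with the block size in bytes and the replacement policy given as l, f or r (LRU, FIFO, random). It also assumes an application binary compiled for SimpleScalar. The parameter ranges and the program name are illustrative, not the ones used in our experiments.

```python
import itertools


def dl1_specs(cache_bytes, block_sizes=(8, 16, 32, 64), assocs=(1, 2, 4), policies="lfr"):
    """Yield sim-cache L1 data cache specs for one total cache size.

    Format: <name>:<nsets>:<bsize>:<assoc>:<repl>, where repl is
    l (LRU), f (FIFO) or r (random).
    """
    for bsize, assoc, repl in itertools.product(block_sizes, assocs, policies):
        nsets = cache_bytes // (bsize * assoc)
        # Keep only shapes that tile the cache exactly with a power-of-two set count.
        if nsets < 1 or nsets & (nsets - 1) or nsets * bsize * assoc != cache_bytes:
            continue
        yield f"dl1:{nsets}:{bsize}:{assoc}:{repl}"


def sim_cache_command(spec, program, *program_args):
    """Argument list for one sim-cache run with the given data cache spec."""
    return ["sim-cache", "-cache:dl1", spec, program, *program_args]


# Illustrative use (the program name is hypothetical):
commands = [sim_cache_command(spec, "matrix_mult") for spec in dl1_specs(1024)]
```

Each command could then be run, for example with `subprocess.run`, and the data cache miss count read from the simulator's statistics output.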

4. RESULTS

4.1 Trade-off between Number of Register Windows and Window Size

Figure 2 shows the impact of register file size on execution time for quick sort. When these results were generated, a single register file of the specified size was assumed, and all registers were allowed to be used for register allocation in any function. Any schedule overhead due to a limited number of register windows was ignored. The results indicate that execution time decreases as the register file size increases, but saturates once a certain register file size is reached. For this application, 8 registers are sufficient; beyond that, the cycle count is not reduced further.

Figure 3 shows how the number of window spills and restores varies with the number of register windows. The curve saturates at 9 windows, meaning that there are no window spills and restores with 9 or more register windows.

We are interested in the trade-off between the number of register windows and the window size. For a given total number of registers, the window size depends on the number of windows. While generating the results in Figure 4, we assumed that the register file is divided into windows of equal size. We also assumed that, within a context, the number of registers available for register allocation equals the window size. Depending on the performance requirement, a suitable register file size can be chosen. For the chosen register file size, the number of windows, and hence the window size (number of registers in a window), can then be decided.

At one end, when the number of windows is small, the time overhead due to context switches dominates the cycle count. At the other extreme, when the number of windows is large for the same total number of registers, the individual window size becomes small and the overhead due to loads and stores within a context dominates the cycle count.
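These two opposing effects can be combined for each candidate number of windows. The sketch below is illustrative only. It assumes equal-sized windows, per-context estimates $et_R$ tabulated by window size, window spill counts like those of Figure 3, and a spill cost of one memory access per register moved. The numbers in the example are made up to show the shape of the trade-off and are not measured data.

```python
def window_tradeoff(total_registers, et_by_regs, spills_by_windows, mem_latency=3):
    """Estimate execution time for each number of windows with a fixed register file.

    et_by_regs:        {registers per context: et_R from register allocation}
    spills_by_windows: {number of windows: window spills and restores}
    Assumes equal-sized windows and one memory access of mem_latency cycles
    per register moved on a window spill or restore.
    Returns (best number of windows, {windows: estimated cycles}).
    """
    estimates = {}
    for windows, spills in spills_by_windows.items():
        size = total_registers // windows
        if size not in et_by_regs:
            continue  # no register-allocation estimate for this window size
        estimates[windows] = et_by_regs[size] + spills * size * mem_latency
    best = min(estimates, key=estimates.get)
    return best, estimates


# Made-up inputs (not measured data):
best, est = window_tradeoff(24, {24: 1000, 12: 1100, 8: 1300, 6: 1600},
                            {1: 200, 2: 50, 3: 10, 4: 0})
# best == 3; est == {1: 15400, 2: 2900, 3: 1540, 4: 1600}
```

With these inputs, a 24-register file is best split into 3 windows of 8 registers. Fewer windows pay heavily for window spills, while more windows pay for spills inside each smaller context.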

[Figure 2: Impact of register file size on execution time (quick_sort); x-axis: register file size, y-axis: execution time (# cycles)]

[Figure 3: Impact of number of register windows (quick_sort); x-axis: number of register windows, y-axis: # window spills and restores]

[Figure 4: Trade-off between number of windows and their sizes; curves for register files of 12, 15, 16, 18 and 20 registers; x-axis: number of register windows, y-axis: execution time (# cycles)]

4.2 Trade-off between Register File Size and On-chip Data Cache Size

Execution time estimates were generated for various benchmark applications for different register file sizes and different data cache sizes. We have not considered the impact of cache size variation on memory latency, but it can be accounted for by choosing appropriate values of α1 and α2. Consider the results produced for the matrix-mult program for different register file sizes and memory configurations (Figure 5). Some interesting trade-offs can be observed. Based on the generated execution time estimates and the input performance constraint, suitable configurations can be suggested. For example, if the application must not take more than 1.0E+05 cycles, then one of the following configurations can be suggested.

1. 12 registers with 4K data cache

2. 15 registers with 2K data cache

3. 20 registers with 1K data cache

[Figure 5: Results for matrix-mult (execution time estimates); curves for 1K, 2K, 4K and 8K data caches (D1_1K to D1_8K); x-axis: register file size (R3 to R31), y-axis: execution time (# cycles)]

4.3 Execution Time Validation

Performance was estimated with varying on-chip storage configurations for selected benchmark applications. Three processors were chosen for experimentation and validation: ARM7TDMI (a RISC) [18], Trimedia TM-1000 (a VLIW) [19] and LEON (a processor with register windows) [16]. The TM-1000's five-issue-slot instruction allows up to five operations to be scheduled in parallel in a single VLIW instruction. To check the correctness of our techniques, we validated our results against the numbers produced by standard tool sets.

Validation shows that our estimates are within 10% of the actual performance numbers produced by standard tool sets. The actual errors were 9.6%, 3.3% and 9.7% for ARM7TDMI, TM-1000 and LEON respectively. Further, this technique was nearly 77 times faster than the simulator based technique. Validation results for LEON are shown in Figure 6.

The results were also validated against VHDL level simulation for a collision detection application, developed at IIT Delhi, which detects the collision of an object with a camera [20]. The execution time estimate produced by our estimator (443278 cycles) is within 10.33% of the estimate produced by tsim (494375 cycles) and within 5.26% of the estimate produced by VHDL simulation.

[Figure 6: Validation on LEON; normalized estimates (million cycles) from tsim-leon and ASSIST for quick_sort (*4), insertion (/3), heap_sort (*4), bubble_sort (/7), matrix-mult (*1), lattice (*15) and biquad (*35)]

4.4 ADPCM Encoder and Decoder Storage Exploration

So far, we have generated results for small illustrative applications. Here, we present results for real life benchmark applications, namely the ADPCM encoder and decoder, which are part of the MediaBench suite [21]. The data files used are clinton.pcm and clinton.adpcm. The processor we have chosen is LEON [16].

To explore the storage organization, we first generated execution time estimates using the techniques proposed in this paper. Since these applications do not have a high level of call nesting, window spills are minimal, and window configuration exploration results are therefore not included. Exploration results for the adpcm rawaudio encoder and the adpcm rawaudio decoder are shown in Figures 7 and 8 respectively. The results show that increasing the data cache size from 1K to 2K significantly improves the performance of the encoder but not of the decoder. If the encoder is to complete its execution in 16 million cycles, then we have to use at least a 2K data cache, irrespective of the register file size. We also observe that, for both applications, there is no significant performance improvement beyond window size 11.

[Figure 7: Results for adpcm rawaudio encoder; Figure 8: Results for adpcm rawaudio decoder. Each shows execution time estimates (# cycles) against register file size (R3 to R30) for 1K to 16K data caches (D1_1K to D1_16K)]

5. APPLICATIONS

Optimizing the register file size is useful in many ways. Some of them are listed here.

• If a smaller register file can be used, then register addresses need fewer bits. Thus, we can either reduce the instruction width or provide more room for opcodes, so that new application specific instructions can be easily accommodated.

• Reduction in switching activity, and thus savings in power consumption.

• If the instruction width cannot be reduced by sparing some registers, these registers or their addresses could be used efficiently for specific purposes. For example, hard-wiring some registers with fixed values will help remove some moves. There are other possibilities as well.

Now, we present a case study showing the use of ‘spare’ registers in simplifying the co-processor interface to the LEON processor.

5.1 Utilizing Spare Register Addresses to Interface Co-processors

In an interesting use, spare register addresses may be used to address co-processor registers. Typically, co-processors and accelerators need to be interfaced to the processor to transmit operands from the processor to the co-processor and results from the co-processor to the processor. This can be achieved very efficiently if the co-processor input/output registers are mapped into the register address space of the processor.

We have come across two different scenarios in interfacing RISC architectures to co-processors. MIPS allows direct transfer of values between the main register file and the co-processor register file. Operand values therefore need to be moved from the main register file to the co-processor register file, and result values produced in co-processor registers need to be moved back to the main register file. Such moves can be saved by applying the proposed idea of using the addresses of ‘spare’ registers, predicted by our technique, to address co-processor registers.

LEON provides a floating point unit (FPU) interface to which any co-processor can be connected. It does not allow values to be transferred directly between the integer unit (IU) register file and the floating point register file, so the processor and co-processor communicate through memory. The operand values are first stored into memory and then loaded into the co-processor register file. After the co-processor has produced its results, these are first stored into memory and then loaded into the main register file. By applying our idea of using the addresses of ‘spare’ registers predicted by our technique, significant benefits can be achieved in this case, because all such loads and stores can be avoided.

An approach for complete SoC synthesis is being developed at IIT Delhi [22]. In this approach, the application C program is profiled to identify its computation intensive part. Synthesizable VHDL is automatically generated for this computation intensive part along with the necessary interfaces.

We extend this synthesis approach to LEON processor based ASIPs. The level 1 data and instruction caches can be configured to meet the needs of the application, which can be predicted using our techniques. The number of register windows can also be easily reconfigured by setting a generic in the top level VHDL description file. For software synthesis, we need a retargetable compiler that can generate code for the chosen processor and memory configuration.

As mentioned earlier, we have chosen the collision detection application for this study. We generated a VHDL description of a co-processor for update sums and simulated the complete application using the LEON VHDL simulator. Using the co-processor reduced the execution time from 467912 cycles to 178573 cycles.

We have used an FPU serial interface for the co-processor; with this interface, the main processor and the co-processor cannot run in parallel. As discussed earlier, in the case of LEON the parameters can be passed only through memory. All parameters computed by the integer unit must therefore be stored into memory and then loaded from memory into the co-processor. What we propose is to use the addresses of ‘extra’ registers to address co-processor registers. The VHDL code of the processor was modified so that the FPU register file is synthesized as part of the IU register file. We only modified the decoding of the register addresses of a couple of ‘extra’ registers so that, after decoding, they point to FPU registers (Figure 9). Thus, when a co-processor parameter is generated by the IU, it can be written directly to one of the FPU registers, saving some loads and stores from memory. In this case, the cost of each co-processor invocation drops from 36 cycles to 4. Simulation of collision detection using LEON and a co-processor shows that 11446 cycles, i.e. 6.4% of the total application time, are saved just by using the addresses of two ‘extra’ IU registers to point to FPU registers. The reduction is small because the co-processor is not invoked very frequently.
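The decoding change of Figure 9 can be modelled in software to make the address mapping concrete. The sketch below is a hypothetical, simplified behavioural model, not the modified LEON VHDL: it assumes a flat 32-entry IU register address space and ignores LEON's register windows. Addresses of the registers the application needs select the IU register file, and the next few ‘extra’ addresses are redirected to FPU registers.

```python
def make_decoder(used_iu_regs, num_spare_to_fpu, addr_bits=5):
    """Build a register-address decoder that maps spare IU addresses to FPU registers.

    Simplified model (hypothetical): flat IU register file without windowing.
    Addresses 0..used_iu_regs-1 select IU registers, and the next
    num_spare_to_fpu addresses select FPU registers 0, 1, ...
    """
    num_addrs = 1 << addr_bits
    if used_iu_regs + num_spare_to_fpu > num_addrs:
        raise ValueError("not enough register addresses")

    def decode(addr):
        if not 0 <= addr < num_addrs:
            raise ValueError(f"address {addr} out of range")
        if addr < used_iu_regs:
            return ("iu", addr)
        if addr < used_iu_regs + num_spare_to_fpu:
            return ("fpu", addr - used_iu_regs)
        return ("unused", addr)

    return decode
```

For example, if register allocation shows that 30 IU registers suffice, addresses 30 and 31 can point to FPU registers 0 and 1. An instruction that writes "register 31" then delivers its result straight into the co-processor's second input register, with no store and load through memory.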

[Figure 9: Use of our technique for the co-processor interface. Before: IU register addresses are decoded (decoder 1) into the IU register file, and co-processor register addresses are decoded (decoder 2) into the co-processor register file. After: a modified decoder 1 maps the addresses of ‘spare’ IU registers onto co-processor registers, so that co-processor registers are addressed by IU register addresses.]

6. CONCLUSIONS AND FUTURE WORK

We have developed a complete strategy to explore the on-chip storage architecture of Application Specific Instruction Set Processors. This work involves deciding a suitable register file size, number of register windows and on-chip memory configuration. Our technique requires neither a code generator nor a simulator for a specific target architecture. Further, the processor description required for retargeting is very simple. Apart from on-chip storage related parameters, we can also vary the number and types of functional units. The assumption is that we have a configurable and synthesizable HDL description of a processor core that can be customized for the application.

The proposed technique has been validated for several benchmarks over a range of processors by comparing our estimates to the results obtained from standard simulation tools. The processors include ARM7TDMI, LEON and Trimedia (TM-1000). The usefulness of our technique is also demonstrated by a case study in which register file address space “saved” by our approach is used for an efficient co-processor interface.

Presently, our technique has some limitations. The impact of register file size on clock period is ignored. Further, the compiler modifications required to support a variable register file size are not considered. We plan to address these limitations in future work.

7. REFERENCES

[1] M.K. Jain, M. Balakrishnan, and A. Kumar. ASIP Design Methodologies: Survey and Issues. In Proc. of VLSI 2001, pages 76–81.

[2] A. D. Gloria and P. Faraboschi. An Evaluation System for Application Specific Architectures. In Proc. of Micro 23, pages 80–89, 1990.

[3] J. Kin et al. Power Efficient Media processors: Design Space Exploration. In Proc. of DAC 1999, pages 321–326.

[4] T.V.K. Gupta et al. Processor Evaluation in an Embedded Systems Design Environment. In Proc. of VLSI 2000, pages 98–103.

[5] N. Ghazal et al. Retargetable Estimation Scheme for DSP Architecture Selection. In Proc. of ASP-DAC 2000, pages 485–489.

[6] M.K. Jain et al. Evaluating Register File Size in ASIP Design. In Proc. of CODES 2001, pages 109–114.

[7] J.R. Goodman and W.C. Hsu. Code Scheduling and Register Allocation in Large Basic Blocks. In Proc. of ICS 1988, pages 444–452.

[8] D.A. Berson, R. Gupta, and M.L. Soffa. URSA: A Unified Resource Allocator for Registers and Functional Units in VLIW Architectures. In Proc. of IFIP WG 10.3 (Concurrent Systems) 1993, pages 243–254.

[9] Y. Zhang and H.J. Lee. Register Allocation Over a Dependence Graph. In Proc. of CASES 1999.

[10] B.L. Jacob, P.M. Chen, S.R. Silverman, and T.N. Mudge. An Analytical Model for Designing Memory Hierarchies. IEEE Transactions on Computers, 45(10):1180–1194, October 1996.

[11] D. Kirovski, C. Lee, M. Potkonjak, and W.H. Mangione-Smith. Application-Driven Synthesis of Memory-Intensive Systems-on-Chip. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 18(9):1316–1326, September 1999.

[12] S.G. Abraham and S.A. Mahlke. Automatic and Efficient Evaluation of Memory Hierarchies for Embedded Systems. Technical Report HPL-1999-132, HP Laboratories Palo Alto, October 1999.

[13] M.K. Jain, M. Balakrishnan, and A. Kumar. An Efficient Technique for Exploring Register File Size in ASIP Synthesis. In Proc. of CASES 2002, pages 252–261.

[14] SUIF Homepage. “http://suif.stanford.edu/”.

[15] V. Bhatt, M. Balakrishnan, and A. Kumar. Exploring the Number of Register Windows in ASIP Synthesis. In Proc. of VLSI/ASPDAC 2002, pages 223–229.

[16] LEON Homepage. “http://www.gaisler.com/leon.html”.

[17] SimpleScalar Homepage. “http://www.simplescalar.com”.

[18] ARM Ltd. Homepage. “http://www.arm.com”.

[19] Trimedia Homepage. “http://www.trimedia.com”.

[20] S. K. Lodha and S. Gupta. A FPGA based Real Time Collision Detection and Avoidance. B.Tech. Thesis, Department of Computer Science and Engineering, IIT Delhi, 1997.

[21] MediaBench Homepage. “http://cares.icsl.ucla.edu/MediaBench”.

[22] A. Singh et al. SoC Synthesis with Automatic Hardware Software Interface Generation. In Proc. of VLSI 2003, January 2003.