Accelerating discovery research_180215.cdr

advertisement

Life Sciences

White Paper

Adopting Future-Forward Discovery Research

Strategies: High Performance Computing in the

Pharmaceutical Industry

About the Authors

Dr Arundhati Saraph

Lead—High Performance Computing (HPC), Life Sciences

At Tata Consultancy Services (TCS), Arundhati works on leveraging HPC to address

scientific problems in niche areas of Life Sciences. These include discovery research,

genomics, metagenomics, predictive toxicology, and bioinformatics, all of which find

application in the pharmaceutical and healthcare sectors. Arundhati holds a PhD from

the National Chemical Laboratory, Pune, and has previously worked with several

research and academic institutions.

Anita Suri

Domain Consultant —HPC, Life Sciences

Anita has expertise in bioinformatics applications and various Life Sciences tools in the

HPC environment. She has over 10 years of experience in areas like molecular docking,

molecular dynamics, high throughput virtual screening, Quantitative Structure Activity

Relationships (QSAR), and pharmacophore modeling. Anita holds a master's degree in

bioinformatics from the University of Pune.

Vivek Sharma

Research Analyst—HPC, Life Sciences

Vivek has over six years of experience in using HPC tools in the Life Sciences domain.

He has worked with different categories of bioinformatics applications such as in silico

drug discovery, genomics data analysis, biomolecular simulation, and molecular

visualization. Vivek holds a master's degree in biomedical engineering from the Indian

Institute of Technology Bombay.

Abstract

High drug discovery and development costs and limited patent cycles are known challenges

of the pharmaceutical industry. An economical drug discovery and development process

which successfully leverages research and development (R&D) can act as a game-changer in

this competitive space. Reduced time-to-market plays a key role in driving performance.

It typically takes about 10 years for a potential new drug to be registered. Today, the R&D

productivity in terms of numbers of new chemical entities (NCEs) registered per unit amount

of investment is declining due to various factors. Also, there is a high iteration rate at the

clinical trial stage because of the stringent Food and Drug Administration (FDA) regulations.

This is resulting in slower drug approvals, and reinforcing the need for increased R&D

productivity.

One way this can be done is by simulating several steps in the drug discovery process.

The simulations, which generally leverage HPC, have demonstrated reduction in product

development lifecycles, as well as in product development costs and improved overall

productivity. In this paper, we discuss how HPC can enable pharmaceutical companies to

accelerate biomedical research and the discovery process.

Contents

1. Introduction

5

2. Application of HPC in Discovery Research

5

Challenges Encountered with HPC platforms

7

HPC enabled Bio-computation and simulation platforms

8

3. Benefits of Leveraging HPC

8

4. Conclusion

9

Introduction

The pharmaceutical industry is at a challenging juncture. Increasing drug development and marketing costs,

shrinking pipelines, expiring patents, demanding regulatory requirements, changing healthcare policies, as well as

the reducing popularity of the blockbuster drug model call for new adaptation strategies. In order to have sufficient

new molecules in the pipeline, there is a need to increase R&D productivity.

This can be achieved by applying innovative and cost-effective predictive research methods using in silico or virtual

studies. Simulations carried out prior to lengthy laboratory experiments and trials can help analyze a lot of 'what if'

scenarios. For instance, the experimental medicinal chemist and biochemist can use in silico screens to choose the

most promising drug candidates and avoid experimental dead-ends early in the new drug discovery cycle. HPC is

the practice of aggregating computing power to deliver much higher performance than one could achieve with

regular computing infrastructure. It helps perform complex simulations at various stages of the new drug discovery

and development process.

Application of HPC in Discovery Research

Trends in biomedical research show a shift towards translational research which requires better understanding of

biology. Today, simulation studies can be used to explore new research avenues, throw up novel insights, and

address problems upfront on the basis of the simulation assessment. These results can potentially enable a

medicinal chemist to select the appropriate molecules that can be taken ahead in the pipeline at various stages of

the discovery research process. Also, disciplines such as bioinformatics, systems biology, and next generation

sequencing (NGS) are data and compute-intense applications. HPC can be leveraged to carry out such biosimulations as well as for bio-computational analysis.

Some examples that show how HPC can be leveraged at different stages in a product life cycle are given below:

Example#1: HPC enables rapid response to the H1N1 virus

A group of scientists from the University of Illinois, Urbana- Champaign, carried out in silico studies to assess

mutation or change in the H1N1 virus that had rendered a potent drug Tamiflu ineffective.1 By appropriately using

the HPC platform, the experiment could be completed in just over an hour. If the simulations had been conducted

on a CPU this would have taken days to complete. The complete study was fairly extensive and required multiple

simulations to be carried out for a system of 35,000 atoms. The study concluded that the mutation that led to the

resistance to Tamiflu was due to the disruption of the ‘binding funnel’, shedding light on the mechanism behind

drug resistance.

[1] NVIDIA UK, University of Illinois: Accelerated Molecular Modelling Enables Rapid Response to H1N1, http://www.nvidia.co.uk/object/illinois-university-uk.html

5

Example#2 High throughput virtual screening using HPC

In silico methods such as high throughput virtual screening (HTVS) can be used in hit identification. This allows

covering a large chemical space and reducing the time, effort, and cost compared to the traditional high

2

throughput screening (HTS). Conventionally, HTS allowed a researcher to test millions of compounds in vitro using

robotics. As the number of molecules that are tested in the chemical space run into millions, their acquisition (or

chemical synthesis) and efficient testing by sophisticated robots is generally cost prohibitive and time consuming.

On the other hand, HTVS takes advantage of fast algorithms to filter the chemical space and successfully select

potential drug candidates. This reduces the number of probable hits to be further screened (in vitro and in vivo)

from the large chemical space of 10⁶⁰ conceivable compounds to a manageable number that can be synthesized,

purchased, and tested.3

A significant reduction in time is also achieved by using computational processes. In a study conducted using a

50,000-core cluster (Amazon Cloud), the computational chemistry outfit Schrödinger analyzed 21 million drug

compounds in just 3 hours for less than USD 4,900. Using the traditional approach, this would have taken years to

4

complete and involved a huge sum of money. The use of an HPC environment for HTVS reduces both the time and

cost involved in the hit identification stage of the new drug discovery process. This approach can also be used at

the lead optimization stage and for drug repositioning.

Example #3 Use of HPC in NGS and translational research

Techniques like NGS can help understand the genetic origin of diseases, assist in developing more potent regimes

for treating disorders, and offer alternate strategies to the standard blockbuster drug approach. The Translational

Genomics Research Institute is leveraging the high throughput gene sequencing technology for precision therapy

trials for children and adults with lethal cancers. It uses this information for diagnosis and treatment tailored to

individuals, for rapidly advancing child cancers.5

HPC, complex data analytics platforms, and high throughput storage systems are some of the key technologies

driving disciplines like NGS and bioinformatics forward. A robust HPC platform that hosts all the data analytics

tools, various bio-computational and bio-simulation tools, as well as databases that can be accessed by multiple

users, can drive research outcomes.

Research on biomarker analysis using NGS relies on data and compute-intense activities. To derive meaningful

analysis from the raw results thrown up by the sequencing machines or platforms, a number of computational and

data reorganization steps are required. A lot of information stored in databases pertaining to research data,

published work, omics, and clinical trials can be used to derive these insights. Such analysis will aid in better target

selection, research on the development of biomarkers for diagnostics, and in the long run reduce phase II and III

attrition. Studies on binding between potential drugs and proteins in the body can help identify cross binders that

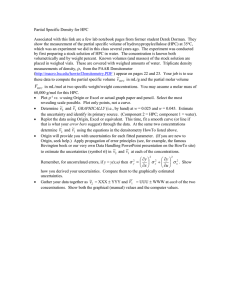

can be eliminated ahead of lengthy and expensive experimental stages of development. Figure 1 depicts how

information from various sources can be used to conduct bio-simulations and computations upfront before

conducting lengthy experimental procedures to test several 'what if' scenarios.

[2] Supercomputing facility for Bioinformatics and Computational Biology, IIT Delhi, Virtual high throughput screening: will it help for lead identification?, accessed on 10 February

2015, www.scfbio-iitd.res.in/seminar/incob/IndiraGhosh.doc

[3] International Journal of Advanced Research in Computer Science and Software Engineering, Insilico Methods in Drug Discovery-A Review, May 2013, accessed in 11 February

2015, http://www.ijarcsse.com/docs/papers/Volume_3/5_May2013/V3I5-0282.pdf

{4} Giagaom,Cycle Computing spins up 50K core Amazon cluster, April 2012, accessed on 4 February 2015 https://gigaom.com/2012/04/19/cycle-computing-spins-up-50k-coreamazon-cluster/

[5] DataDirect Networks, Genomics Research (DDN case study) 2011, accessed on 28 January 2015 http://www.ddn.com/pdfs/Tgen_Case_Study.pdf?982861

6

Pharmacogenomics approach linking biomarkers to therapeutics

Successful outcome

in select population

Hit to

lead

Target

to hit

Lead

optimization

Preclinical

Preclinic

al

Phase

1

Phase

II

Bio-simulation platforms

Bio-informatics platform

Genomics platform

Model based drug

development

n Novel target identification

n

n

n

Phase III

Submission

to launch

Launch

Information repository

n

n

n

n

Research

Published

Clinical

NGS

Figure 1: Use of the In silico Approach at Various Stages in the Product Life Cycle of a New Chemical Entity

Challenges encountered with HPC platforms

Computational analysis in each area of discovery research requires a different set of tools, each with a specific

compute, input output (I/O), and throughput requirement. For instance, the amount of compute, I/O, and memory

requirement for optimal performance on a bio-simulation run could be different from resource requirement for

genomics tools carrying out sequence alignment. Suboptimal use of resources such as memory or I/O can even

lead to poor performance or application failures.

Furthermore, to carry out accurate and speedy analysis of the clinical data, the data has to be accessed, processed,

analyzed, and visualized. Often, this requires movement of data from the storage device to cluster nodes for further

analysis, which becomes challenging as the data size increases. This research data generated through microarray

and cell analyzer systems is image based and generally requires both short and long term storage. Also, the data

and results generated at the discovery research step as well as various steps in the new drug discovery cycle have to

be studied and analyzed by various groups working across the organization. This increased movement of data back

and forth between different types of temporary storage can create bottlenecks and lead to suboptimal

performance. Storage thus becomes a critical resource requirement for all data-driven research, especially in NGS

analysis, as there is a huge need for data access and data storage—both short term and for data archival purposes.

Considering these challenges, it is difficult to provide optimal HPC architecture in scenarios with the following

diverse requirements:

n

Algorithmic complexity and high compute-intensive platform requirements

n

Distributed computing solutions needing low latency network-centric HPC

7

n

High throughput file systems which can access and synchronize data at multiple gigabytes

n

Architectures providing large-scale memory

n

Large data analysis and information extraction requiring data mining

n

Acquiring information in multiple data formats from a variety of sources requiring low latency and high

throughput systems

n

Graphics and visualization demands asking for near real time solutions

Complete knowledge and understanding of the resource requirement for each class of applications used in the

industry is required to address the above needs. HPC enabled platforms that host workflows to carry out various

analyses in areas of genomics, bio-simulations, or bioinformatics enable scientists to focus on analyzing results and

address domain related problems without spending time on resolving software or infrastructure-related issues.

HPC enabled Bio-computation and simulation platforms

A bio-computation and simulation service through the use of workflows or pipelines can be used as a prepackaged solution to address problems in discovery research. The main focus would be to see how computations

go a long way in supporting the traditional R&D activity. Recent advances in software techniques have made it

possible to combine software tools and information bases, making it possible to offer predefined software

solutions to domain problems. The scientific workflow system is a specialized form of a workflow management

system designed specifically to compose and execute a series of computational or data manipulation steps.

Essential requirements of a HPC-enabled work-flow or pipeline are as follows:

1. Strong domain knowledge, both breadth and depth

2. A set of software technologies that can create a user friendly and expressive interface

3. An assortment of well-trusted software tools

4. A software suite that allows the combination of the tools and takes care of the integration

5. A cluster management suite that manages the computer hardware—usually a network of clusters

A robust HPC infrastructure should cater to variable work load requirements. It should use optimal resources

through cluster and data management tools and job scheduling software to efficiently run the simulations.

Benefits of Leveraging HPC

By applying the HPC approach pharmaceutical enterprises can:

n

Accelerate drug discovery: HPC can be used to accelerate several steps like target identification and validation,

hit identification, cross binding studies, and drug repositioning in the new drug discovery process.

8

n

Explore new research avenues: HPC technology can successfully execute large simulations which otherwise

take longer to complete or are abandoned due to computing resource shortfalls, thus enabling new research

opportunities.

n

Enhance organizational focus: HPC enabled platforms that host work-flows to carry out various analyses in the

areas of genomics, run bio-simulations, or conduct bioinformatics analysis allow scientists to focus on analyzing

results and address domain-related problems without spending time on resolving software and IT related issues

n

Execute tasks faster: Use of HPC enabled workflows and platforms simplify solution usage and execute tasks

faster by reducing the simulation runtime

Conclusion

Complex, compute-intense application areas in the early stages of drug discovery such as target identification and

validation, hit or lead identification, cross binding studies, and NGS analysis can benefit from the use of HPC.

With the constant growth in data volumes and the requirement to carry out multiple analyses there arises a need to

move large volumes of data from storage device to cluster nodes. To achieve this, there is a need for high

performance as well as scalable I/O, as performance bottlenecks at any of these steps result in delays in the

downstream analysis. To avoid this, there should be an efficient access of data across servers as well as hassle-free

data movement between different tiers of storage to support variable work load. This can be realized with the use

of an HPC system that enables optimal utilization of the infrastructure through cluster and data management tools

providing optimal performance enhancement.

HPC enabled platforms or work-flows are instrumental in addressing problems through virtual or in silico studies at

various steps in the discovery research. Use of HPC enabled platforms in other areas in downstream drug discovery

including clinical trial analysis, supply chain management, drug safety analysis, and health economics can also be

beneficial. An HPC platform that hosts end-to-end applications and conducts seamless integration of workflows

linking the discovery, development, and clinical trial analysis to the downstream supply chain can enable a

pharmaceutical company to efficiently carry out new drug discovery and ultimately reduce the time-to-market.

9

About TCS Life Sciences

With over two decades of experience in the life sciences domain, TCS offers a comprehensive

portfolio in IT, Consulting, KPO, Infrastructure and Engineering services as well as new-age business

solutions including mobility and big data catering to companies in the pharma, biotech, medical

devices, and diagnostics industries. Our offerings help clients accelerate drug discovery, advance

clinical trial efficiencies, maximize manufacturing productivity, and improve sales and marketing

effectiveness.

We draw on our experience of having worked with 7 of the top 10 global pharmaceutical companies

and 8 of the top 10 medical device manufacturers. Our commitment towards developing next

generation innovative solutions and facilitating cutting-edge research - through our Life Sciences

Innovation Lab, research collaborations, multiple centers of excellence and Co-Innovation Network

(COINTM) - have made us a preferred partner for the world's leading life sciences companies.

Contact

For more information, contact lshcip.pmo@tcs.com

Subscribe to TCS White Papers

TCS.com RSS: http://www.tcs.com/rss_feeds/Pages/feed.aspx?f=w

Feedburner: http://feeds2.feedburner.com/tcswhitepapers

About Tata Consultancy Services (TCS)

Tata Consultancy Services is an IT services, consulting and business solutions organization that

delivers real results to global business, ensuring a level of certainty no other firm can match.

TCS offers a consulting-led, integrated portfolio of IT and IT-enabled infrastructure, engineering and

assurance services. This is delivered through its unique Global Network Delivery ModelTM,

recognized as the benchmark of excellence in software development. A part of the Tata Group,

India’s largest industrial conglomerate, TCS has a global footprint and is listed on the National Stock

Exchange and Bombay Stock Exchange in India.

IT Services

Business Solutions

Consulting

All content / information present here is the exclusive property of Tata Consultancy Services Limited (TCS). The content / information contained here is correct at

the time of publishing. No material from here may be copied, modified, reproduced, republished, uploaded, transmitted, posted or distributed in any form

without prior written permission from TCS. Unauthorized use of the content / information appearing here may violate copyright, trademark and other applicable

laws, and could result in criminal or civil penalties. Copyright © 2015 Tata Consultancy Services Limited

TCS Design Services I M I 03 I 15

For more information, visit us at www.tcs.com