611: Electromagnetic Theory II

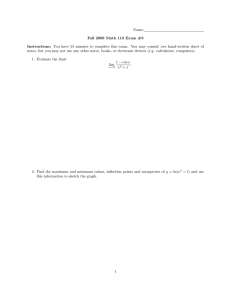
