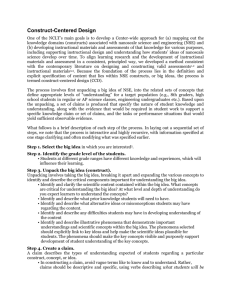

Learning-Goals-Driven Design Model: Developing Curriculum
