
8

Flows in Series-Parallel-Graphs

We have already studied series-parallel (multi)graphs in Sections 6.14 and 6.15. There we showed that Braess's Paradox cannot occur in these graphs and that Nash flows can be computed efficiently by means of dynamic programming. In this chapter, we study flows in series-parallel graphs in greater detail. For convenience, we repeat the recursive definition of series-parallel graphs:

Definition 8.1 (Series-parallel graph)

The class of (two-terminal) series-parallel (multi)graphs can be defined by the following rules (cf. [BLW87]):

(i) The set of primitive graphs consists of the single graph P with vertex set {s, t} and the single directed edge (s, t). The vertex s is the “start-terminal” of P and the vertex t is the “end-terminal” of P.

(ii) Let G1 = (V1, R1) and G2 = (V2, R2) be series-parallel graphs with terminals s1, t1 and s2, t2, respectively. Then the graph obtained by identifying t1 and s2 is a series-parallel graph with s1 and t2 as its terminals. This graph is the series composition of G1 and G2. The graph obtained by identifying s1 with s2 and t1 with t2 is a series-parallel graph, the parallel composition of G1 and G2. This graph has s1 (= s2) and t1 (= t2) as its terminals.
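To make the recursive definition concrete, here is a minimal Python sketch that expands a (binary) parse tree into an explicit arc list. The classes `Leaf`, `Series`, `Parallel` and the function `build` are hypothetical names chosen for illustration; as an assumption, vertex 0 plays the role of the start-terminal s and vertex 1 that of the end-terminal t. Every series composition creates exactly one new vertex, the identified vertex t1 = s2, while a parallel composition creates none.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    """Primitive graph P: a single arc from s to t."""
    name: str


@dataclass
class Series:
    """Series composition: identify t1 of `left` with s2 of `right`."""
    left: "Tree"
    right: "Tree"


@dataclass
class Parallel:
    """Parallel composition: identify s1 with s2 and t1 with t2."""
    left: "Tree"
    right: "Tree"


Tree = Union[Leaf, Series, Parallel]


def build(tree: Tree):
    """Expand a parse tree into (number of vertices, list of (name, tail, head)).

    Vertex 0 is the start-terminal s and vertex 1 the end-terminal t.
    """
    arcs = []
    next_id = [2]                       # 0 and 1 are reserved for s and t

    def rec(node, s, t):
        if isinstance(node, Leaf):
            arcs.append((node.name, s, t))
        elif isinstance(node, Series):
            mid = next_id[0]            # the identified vertex t1 = s2
            next_id[0] += 1
            rec(node.left, s, mid)
            rec(node.right, mid, t)
        else:                           # Parallel: both parts share s and t
            rec(node.left, s, t)
            rec(node.right, s, t)

    rec(tree, 0, 1)
    return next_id[0], arcs
```

For example, `build(Parallel(Series(Leaf("a"), Leaf("b")), Leaf("c")))` yields 3 vertices and the arcs a = (0, 2), b = (2, 1) and c = (0, 1).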

In all that follows, we assume that a series-parallel graph G is given together with its parse tree (or decomposition tree), which specifies how G is constructed using the above rules. The size of the parse tree is linear in the size of the input graph. As mentioned in Section 6.14, such a parse tree can be computed in O(m) time by an algorithm given in [VTL82]. The presentation and analysis of this algorithm is, unfortunately, beyond the scope of this lecture. For a graph H in the decomposition tree, we denote by val(H) the maximum flow value from the s-terminal to the t-terminal of H.

8.1

Maximum Flows

In this section, we investigate the complexity of computing maximum flows in series-parallel graphs. Recall that a feasible (s, t)-flow f in a graph G with upper capacities c is called a blocking flow if there is no path P from s to t in G such that f(r) < c(r) for all r ∈ P (cf. Definition 3.17).
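The blocking condition can be tested with a single graph search over the unsaturated arcs. A minimal Python sketch, assuming arcs are given as (tail, head) pairs indexed by arc id and that `flow` is already feasible; the function name `is_blocking` is hypothetical:

```python
from collections import deque


def is_blocking(n, arcs, flow, cap, s, t):
    """Return True iff no s-t path uses only arcs r with flow[r] < cap[r].

    arcs: list of (tail, head) pairs on vertices 0..n-1, indexed by arc id;
    flow, cap: lists indexed by arc id (flow is assumed to be feasible).
    """
    out = [[] for _ in range(n)]
    for r, (u, v) in enumerate(arcs):
        if flow[r] < cap[r]:            # only unsaturated arcs may be used
            out[u].append(v)
    seen = [False] * n
    seen[s] = True
    queue = deque([s])
    while queue:                        # breadth-first search from s
        u = queue.popleft()
        if u == t:
            return False                # found a path of unsaturated arcs
        for v in out[u]:
            if not seen[v]:
                seen[v] = True
                queue.append(v)
    return True
```

By Lemma 8.2 below, a feasible flow in a series-parallel graph that passes this test is already a maximum flow.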


Lemma 8.2 Any blocking flow in a series-parallel graph is also a maximum flow.

Proof: Once more we use induction on the size of the decomposition tree of the graph G. The claim is clear if G is a primitive graph consisting of a single arc.

Let G be the parallel composition of G1 and G2. If f is a blocking flow in G, then its restrictions to G1 and G2 must also be blocking in those subgraphs, since a path of unsaturated arcs from the s-terminal to the t-terminal of G1 or G2 is also such a path in G. Thus, by the induction hypothesis, f|G_i is a maximum flow in G_i, i.e., val(f|G_i) = val(G_i) for i = 1, 2. Since val(f) = val(f|G1) + val(f|G2) and val(G) = val(G1) + val(G2), the claim follows.

Finally, let G be the series composition of G1 and G2. Then any blocking flow f must also be blocking in at least one of the graphs G_i: otherwise, an s1-t1-path of unsaturated arcs in G1 followed by an s2-t2-path of unsaturated arcs in G2 would form such an s-t-path in G. Assume that f|G1 is blocking in G1 (the other case is symmetric). Since all flow passes through both parts, the induction hypothesis gives val(f) = val(f|G1) = val(G1). Since val(G) = min{val(G1), val(G2)}, any feasible flow in G has value at most val(G1) = val(f), and hence f is a maximum flow in G.

□

In Section 3.2 we gave an O(nm) time algorithm to compute a blocking flow in any graph. In Lemma 3.22 we also showed that, in the case of unit-capacity networks, a blocking flow can be computed in O(n + m) time. Together with Lemma 8.2, this yields an O(nm) time maximum flow algorithm for general series-parallel graphs and a linear-time O(n + m) algorithm for the unit-capacity case.

Lemma 8.3 Suppose that G = (V, R, α, ω) is a series-parallel graph with at least 3 vertices and without parallel arcs. Then |R| ≤ 3|V| − 6.

Proof: We prove the claim by induction on the size of the decomposition tree. The claim is true for the base case |V| = 3: the only series-parallel graphs with 3 nodes and without parallel arcs are depicted in Figure 8.1; they satisfy 2 ≤ 3 · 3 − 6 = 3 and 3 ≤ 3 · 3 − 6 = 3, respectively.

Figure 8.1: Possible series-parallel graphs with 3 nodes and without parallel arcs

If G is the series composition of G1 and G2, then |V| = |V1| + |V2| − 1 and

$$
|R| = |R_1| + |R_2| \overset{\text{ind. hyp.}}{\le} 3|V_1| - 6 + 3|V_2| - 6 = 3(|V_1| + |V_2| - 1) - 9 \le 3|V| - 6.
$$

If G is the parallel composition of G1 and G2, then |V| = |V1| + |V2| − 2 and

$$
|R| = |R_1| + |R_2| \overset{\text{ind. hyp.}}{\le} 3|V_1| - 6 + 3|V_2| - 6 = 3(|V_1| + |V_2| - 2) - 6 = 3|V| - 6.
$$

This completes the proof.

□
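The bound of Lemma 8.3 can be sanity-checked empirically; this is a test, not a proof. The hypothetical helper `random_sp_arcs` below grows a random two-terminal series-parallel multigraph by repeatedly replacing an arc either by a path of two arcs or by two parallel copies, which corresponds to expanding a leaf of the parse tree into a series or parallel node. Parallel copies are then discarded before |R| is compared with 3|V| − 6.

```python
import random


def random_sp_arcs(num_arcs, rng):
    """Random two-terminal SP multigraph with terminals 0 (= s) and 1 (= t).

    Starting from a single arc (0, 1), a random arc (u, v) is repeatedly
    replaced by a path u -> w -> v (series step) or doubled (parallel step).
    Returns (number of vertices, list of arcs).
    """
    arcs = [(0, 1)]
    n = 2
    while len(arcs) < num_arcs:
        i = rng.randrange(len(arcs))
        u, v = arcs[i]
        if rng.random() < 0.5:          # series step: new vertex w = n
            arcs[i] = (u, n)
            arcs.append((n, v))
            n += 1
        else:                           # parallel step: add a copy of (u, v)
            arcs.append((u, v))
    return n, arcs


def check_lemma_8_3(trials=500, seed=1):
    """Check |R| <= 3|V| - 6 on random SP graphs with parallel arcs removed."""
    rng = random.Random(seed)
    for _ in range(trials):
        n, arcs = random_sp_arcs(rng.randint(1, 60), rng)
        simple = set(arcs)              # discard parallel copies
        if n >= 3:
            assert len(simple) <= 3 * n - 6, (n, len(simple))
    return True
```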

So, if we have a series-parallel graph without parallel edges, then m ∈ O(n), and our running time of O(nm) improves to O(n²). (This obviously also holds for the single-arc case |V| = 2.) Now, observe that we can eliminate parallel edges simply by identifying the subtrees of the decomposition tree which contain only parallel compositions and end at the leaves. This can easily be accomplished in O(m) time and corresponds to replacing each bundle of parallel arcs by a single arc whose capacity equals the sum of the capacities in the bundle. Combining this with Lemma 8.2 gives the following result:

File: –sourcefile–

Revision: –revision–

Date: 2015/02/09 –time–GMT


Theorem 8.4 A maximum flow in a series-parallel graph can be computed in time O(n²). If all capacities are equal to 1, then the maximum flow algorithm in fact runs in linear time O(n + m).

□
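The elimination of parallel bundles used for Theorem 8.4 amounts to a single post-order pass over the parse tree. A minimal sketch, assuming the parse tree is given as nested tuples: `("arc", capacity)` for a leaf and `("S", left, right)` or `("P", left, right)` for compositions (this encoding and the name `collapse_bundles` are hypothetical). Like the description above, it only merges arcs that form an all-parallel subtree of the given parse tree.

```python
def collapse_bundles(tree):
    """Replace every all-parallel subtree over leaves by a single leaf.

    Leaves are ("arc", capacity); inner nodes are ("S" | "P", left, right).
    The merged leaf carries the sum of the capacities in the bundle.
    """
    if tree[0] == "arc":
        return tree
    op, left, right = tree
    left = collapse_bundles(left)       # children first (post-order)
    right = collapse_bundles(right)
    if op == "P" and left[0] == "arc" and right[0] == "arc":
        # two parallel arcs between the same terminals: merge them
        return ("arc", left[1] + right[1])
    return (op, left, right)
```

Each node is visited once, so the pass takes time linear in the size of the parse tree.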

We have reached a running time of O(n²), which beats our fastest maximum flow algorithms of Chapter 3. Still, we can do better!

As a warm-up, let us first compute the value of a maximum flow in a series-parallel graph G = (V, R, α, ω) with capacities c : R → ℝ₊. As usual, we use a dynamic programming approach guided by the decomposition tree of G. Recall that val(H) denotes the maximum flow value from the s-terminal to the t-terminal of H.

If H = ({s, t}, {(s, t)}) is the primitive graph consisting of just the two terminals and a single edge connecting them, then clearly val(H) = c(s, t). Thus, we can compute the value val(H) for a leaf of the decomposition tree in constant time.

If H is the series composition of two (series-parallel) graphs H1 and H2, then val(H) = min{val(H1), val(H2)}. Finally, if H is the parallel composition of H1 and H2, then val(H) = val(H1) + val(H2). This shows that for an internal node H of the decomposition tree we can also compute val(H) in constant time, given the values for its two children. Processing the tree bottom-up thus gives an algorithm with running time O(m) to compute the maximum flow value.
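The recursion for val(H) translates directly into a bottom-up evaluation of the parse tree. A minimal Python sketch, assuming the same hypothetical nested-tuple encoding of the parse tree (`("arc", capacity)` for leaves, `("S", left, right)` and `("P", left, right)` for compositions); the name `val` simply mirrors the notation of the text:

```python
def val(tree):
    """Maximum s-t flow value of the series-parallel graph given by `tree`."""
    if tree[0] == "arc":
        return tree[1]                      # val(H) = c(s, t) for a single arc
    op, left, right = tree
    if op == "S":
        return min(val(left), val(right))   # series: the bottleneck part
    return val(left) + val(right)           # parallel: the values add up
```

For instance, for the parallel composition of (arcs with capacities 3 and 2 in series) and (an arc with capacity 4 in series with a parallel pair of capacities 1 and 2), this gives min{3, 2} + min{4, 1 + 2} = 5.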

How can we obtain the flow on the arcs of G from the information computed so far? This requires a little more thought. Suppose we know a value z ≤ val(H) which we would like to route through a subgraph H in the decomposition tree (at the end, of course, we will be interested in the case z = val(G)). We would like to compute a feasible flow in H which achieves exactly the value z.

If H is the parallel composition of H1 and H2, then, as above, z ≤ val(H) = val(H1) + val(H2). We can now pick any values z1 ≥ 0 and z2 ≥ 0 such that z1 + z2 = z, z1 ≤ val(H1) and z2 ≤ val(H2); for instance, z1 := min{z, val(H1)} and z2 := z − z1. If we know (recursively) how to route these values through H1 and H2, we obtain a flow in H as desired. Similarly, if H is the series composition of H1 and H2, then z ≤ val(H) = min{val(H1), val(H2)}. Thus, if we know how to route z_i := z ≤ val(H_i) units of flow through H_i for i = 1, 2, we again obtain a flow in H as desired.

The above considerations show that, once the values val(H) have been computed bottom-up, we can process the decomposition tree top-down, starting at the root G with z = val(G). At any node which represents some subgraph H and some value z ≤ val(H), we write z1 and z2 as above on the two nodes representing the two subgraphs H1 and H2 from which H is composed, and then proceed to these subgraphs. The procedure stops when we reach a leaf, which represents an arc r; there we simply set f(r) := z. This gives a maximum flow. The time spent at any node of the decomposition tree is O(1), so this yields a linear-time algorithm. We have just proved the following result:

Theorem 8.5 A maximum flow in a series-parallel graph can be computed in time O(n + m).

□
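Combining the bottom-up computation of val(·) with the top-down distribution of z gives the linear-time procedure behind Theorem 8.5. The Python sketch below is an illustration under assumptions: leaves are encoded as hypothetical tuples `("arc", name, capacity)`, inner nodes as `("S", left, right)` or `("P", left, right)`, and at a parallel node it uses the particular split z1 := min{z, val(H1)}, z2 := z − z1 (any valid split would do). The name `max_flow_sp` is hypothetical.

```python
def max_flow_sp(tree):
    """Return (value, flow) for the SP graph given by its parse tree.

    Leaves are ("arc", name, capacity); inner nodes ("S" | "P", left, right).
    `flow` maps every arc name to the amount of flow on that arc.
    """
    vals = {}

    def compute(node):                  # bottom-up pass: val(H) for every node
        if node[0] == "arc":
            v = node[2]
        elif node[0] == "S":
            v = min(compute(node[1]), compute(node[2]))
        else:
            v = compute(node[1]) + compute(node[2])
        vals[id(node)] = v
        return v

    flow = {}

    def route(node, z):                 # top-down pass: route z <= val(node)
        if node[0] == "arc":
            flow[node[1]] = z           # leaf: f(r) := z
        elif node[0] == "S":
            route(node[1], z)           # the same z passes through both parts
            route(node[2], z)
        else:
            z1 = min(z, vals[id(node[1])])  # fill the left part first
            route(node[1], z1)
            route(node[2], z - z1)          # z - z1 <= val(right) holds here

    total = compute(tree)
    route(tree, total)
    return total, flow
```

On the example used for val above (with arcs named a to e), the left branch receives 2 units and the right branch 3 units, of which 1 unit goes through the arc of capacity 1 and 2 units through the arc of capacity 2.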

8.2

Minimum Cost Flows

Next, consider the minimum cost flow problem on two-terminal series-parallel graphs in a parametrized version: given G = (V, R) with two distinct vertices s, t ∈ V (source and sink), capacities c(r) ≥ 0 and costs k(r) ∈ ℝ for all r ∈ R, we aim to determine a minimum cost flow sending z units of flow from s to t. The problem can be formulated as follows:

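$$
\begin{aligned}
(P_z)\qquad \min\ & \sum_{r \in R} k(r) f(r) \\
\text{s.t.}\ & \operatorname{excess}_f(v) = 0 && \text{for all } v \neq s, t \\
& \operatorname{excess}_f(s) = -z \\
& \operatorname{excess}_f(t) = z \\
& 0 \le f(r) \le c(r) && \text{for all } r \in R
\end{aligned}
$$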

The value z_max denotes the maximum flow value, i.e., the largest z such that (P_z) has a feasible solution f. Throughout this section, we denote by P_xy some x-y-path in G.

The following lemma is due to Valdes et al. [VTL82] and gives a criterion to decide whether a graph is series-parallel.

Lemma 8.6 A directed acyclic graph G = (V, R) is two-terminal series-parallel if and only if there are no four distinct vertices s′, t′, u, v ∈ V such that there are paths P_s′u, P_s′v, P_uv, P_ut′ and P_vt′ which are pairwise node-disjoint except for their start and end vertices.

□

The method to determine a solution to (P_z) is a simple greedy-type augmenting path procedure: find a minimum cost s-t-path P in G and send as much flow along P as possible, i.e., as long as f(r) ≤ c(r) for all r ∈ P. If an arc r on the current path P becomes saturated, we remove r from G, decrease the capacity of all other arcs in P by the (residual) capacity c(r) of r, and repeat. Algorithm 8.1 shows the method more formally.

Algorithm 8.1 Augmenting Path Algorithm for Min Cost Flow in SP-graphs.

Greedy-SP-MinCost

1 j := 0; for all r ∈ R do
2   f(r) := 0
3 end for
4 while there is a path in G from s to t do
5   j := j + 1
6   find a min cost s-t-path P_j and set k_j := k(P_j)
7   l_j := min{c(r) : r ∈ P_j}
8   for all r ∈ P_j do
9     f(r) := f(r) + l_j; c(r) := c(r) − l_j
10    if c(r) = 0 then
11      R := R \ {r}
12    end if
13  end for
14 end while
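For experimentation, here is a minimal runnable Python sketch of Algorithm 8.1 for an arbitrary acyclic graph (the name `greedy_sp_min_cost` and the input format are hypothetical). As assumptions beyond the pseudocode, it finds the minimum cost path of Step 6 by dynamic programming over a topological order (via `graphlib`, Python 3.9+) rather than over the decomposition tree, treats arcs with capacity 0 as removed, and optionally stops once z units have been sent, so that it can be applied to (P_z) directly. It returns the flow together with the pairs (l_j, k_j).

```python
import math
from graphlib import TopologicalSorter  # Python 3.9+


def greedy_sp_min_cost(n, arcs, s, t, z=math.inf):
    """Greedy augmentation in the spirit of Algorithm 8.1 on a DAG.

    arcs: list of (tail, head, capacity, cost) on vertices 0..n-1.
    Returns (flow per arc, list of (l_j, k_j) for the augmenting paths P_j).
    """
    cap = [c for (_, _, c, _) in arcs]        # residual capacities
    flow = [0] * len(arcs)
    out = [[] for _ in range(n)]
    ts = TopologicalSorter({v: set() for v in range(n)})
    for r, (u, v, _, _) in enumerate(arcs):
        out[u].append(r)
        ts.add(v, u)                          # u must precede v
    order = list(ts.static_order())
    steps, sent = [], 0
    while sent < z:
        # Step 6: min cost s-t-path over arcs with capacity left (DP on the DAG)
        dist, pred = [math.inf] * n, [None] * n
        dist[s] = 0
        for u in order:
            if dist[u] == math.inf:
                continue
            for r in out[u]:
                v, k = arcs[r][1], arcs[r][3]
                if cap[r] > 0 and dist[u] + k < dist[v]:
                    dist[v], pred[v] = dist[u] + k, r
        if dist[t] == math.inf:
            break                             # Step 4: no s-t-path is left
        path, v = [], t
        while v != s:                         # walk back along predecessor arcs
            path.append(pred[v])
            v = arcs[pred[v]][0]
        bottleneck = min(min(cap[r] for r in path), z - sent)  # Step 7, capped at z
        for r in path:                        # Steps 8-13
            flow[r] += bottleneck
            cap[r] -= bottleneck              # capacity 0 means the arc is removed
        steps.append((bottleneck, dist[t]))
        sent += bottleneck
    return flow, steps
```

On a small series-parallel example with s = 0, t = 1 and w = 2 (an arc s → t of capacity 1 and cost 5, in parallel with an arc s → w of capacity 2 and cost 1 followed by two parallel arcs w → t with capacities 1, 3 and costs 1, 2), the sketch augments one unit each along paths of cost 2, 3 and 5.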

Observe that this method is an augmenting path algorithm which does not use any backward arcs, as none of these are taken into consideration when choosing the path of minimum cost. Furthermore, the method indicates that the optimal objective value of (P_z) is a piecewise linear function of z: as long as possible (i.e., on an interval of length l_j), flow is pushed along a path, increasing the objective value linearly by the cost of the respective path (i.e., with slope k_j). After that, the graph is updated and a new path is chosen. The validity of the method is also a necessary condition for a graph to be series-parallel, as Theorem 8.7 shows.
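The piecewise linear shape can be made explicit: given the pairs (l_j, k_j) recorded by the greedy method, the objective value for a given z is obtained by filling the intervals of length l_j in order, each with slope k_j. A small Python sketch with the hypothetical name `greedy_cost`; it assumes that the greedy method is optimal, which for series-parallel graphs is guaranteed by Theorem 8.7:

```python
def greedy_cost(steps, z):
    """Objective value of (P_z) read off the greedy steps [(l_1, k_1), ...].

    The j-th path carries flow on an interval of length l_j, on which the
    objective grows linearly with slope k_j.
    """
    total, remaining = 0, z
    for length, slope in steps:
        if remaining <= 0:
            break
        used = min(length, remaining)   # how much of this interval is needed
        total += used * slope
        remaining -= used
    if remaining > 0:
        raise ValueError("z exceeds z_max, the sum of all l_j")
    return total
```

With the steps [(1, 2), (1, 3), (1, 5)], for example, z = 1.5 yields 1 · 2 + 0.5 · 3 = 3.5.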


Theorem 8.7 Let G be a directed acyclic graph with a single source s and a single sink t. Then G is a two-terminal series-parallel graph if and only if the greedy method in Algorithm 8.1 solves the min cost flow problem (P_z) for all 0 ≤ z ≤ z_max, for arbitrary costs k and arbitrary capacities c ≥ 0.

Proof: First suppose that G is in fact series-parallel. We show the following property: for fixed z with 0 ≤ z ≤ z_max and a min cost path P_st from s to t, there is an optimal solution f* for (P_z) such that

$$
f^*(r) \ge \min\bigl\{z, \min\{c(r') : r' \in P_{st}\}\bigr\} \quad \text{for all } r \in P_{st}. \tag{8.1}
$$

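Hence, the choice in Algorithm 8.1 is valid (some optimal solution agrees with the first augmentation), and applying the same argument to the instance with updated capacities shows that Algorithm 8.1 gives an optimal solution.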

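We show the claim by induction on the number of edges in G. The claim is obviously true for a single-edge graph. So assume that G is obtained by the series composition of two (series-parallel) graphs G1 and G2, where s_i and t_i denote the source and sink of G_i, and P^(i)_st denotes the restriction of P_st to G_i, for i = 1, 2. Observe that in the series composition, the flow value through G1 and through G2 also equals z, so (P_z) on G decomposes into the problems (P_z) on G1 and on G2. Moreover, P^(i)_st is a min cost s_i-t_i-path in G_i, since otherwise P_st could be made cheaper. By the induction hypothesis, the problem (P_z) defined on G_i, i = 1, 2, has an optimum solution f*_(i) such that

$$
f^*_{(i)}(r) \ge \min\bigl\{z, \min\{c(r') : r' \in P^{(i)}_{st}\}\bigr\} \quad \text{for all } r \in P^{(i)}_{st}.
$$

Since the minimum capacity on the subpath P^(i)_st is at least the minimum capacity on P_st, the combination (f*_(1), f*_(2)) yields an optimum solution for (P_z) on G fulfilling (8.1).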

Next, assume that G is obtained by a parallel composition of G1 and G2. Without loss of generality, assume that P_st is completely contained in G1. If z1 units of flow go through G1 in an optimal solution to (P_z) on G, then the restriction of this solution to G1 is optimal for (P_z1) on G1, and by the induction hypothesis (applied to (P_z1) in G1) we can replace it by an optimal solution with

$$
f^*(r) \ge \min\bigl\{z_1, \min\{c(r') : r' \in P_{st}\}\bigr\} \quad \text{for all } r \in P_{st}.
$$

Obviously z1 ≤ z, so the claim follows directly if the above minimum is attained at some c(r′) with r′ ∈ P_st, as then also

$$
f^*(r) \ge \min\bigl\{z_1, \min\{c(r') : r' \in P_{st}\}\bigr\} = \min\bigl\{z, \min\{c(r') : r' \in P_{st}\}\bigr\}. \tag{8.2}
$$

Assume instead that z1 is strictly less than min{z, min{c(r′) : r′ ∈ P_st}}, i.e., in the optimum solution sending z units in G, the path P_st is not saturated. Then (8.2) is not guaranteed. But by choice, P_st is a path of minimum cost, and hence rerouting some flow of the optimum solution from G2 to P_st does not increase the cost, so we can assume, again without loss of generality, that in fact z1 ≥ min{z, min{c(r′) : r′ ∈ P_st}} and (8.2) holds.

To show the other implication, let G be a directed acyclic graph for which Algorithm 8.1 gives the optimum solution for all 0 ≤ z ≤ z_max (and all capacities and costs), and suppose that G is not series-parallel. We will construct capacities and costs yielding a contradiction.

By Lemma 8.6, there are four distinct vertices s′, t′, u, v and five paths P_s′u, P_s′v, P_uv, P_ut′ and P_vt′ in G which are node-disjoint except for their end vertices. Furthermore, if s ≠ s′, then there is also a path P_ss′ (s′ is reachable from the single source s) which does not share any vertex with the above five paths except for its end vertex s′, as G is acyclic: every vertex on the five paths is reachable from s′, so if P_ss′ used a vertex of one of these paths and then returned to s′, this would imply a cycle in G. By the same argument, there is also a disjoint path P_t′t (given t′ ≠ t). If s = s′ or t = t′, the respective paths are empty. Consider an instance on G with the following capacities and costs for all edges r ∈ R:

$$
c(r) := \begin{cases}
2 & \text{if } r \in P_{ss'} \text{ or } r \in P_{t't},\\
1 & \text{if } r \in P_{s'u},\ r \in P_{s'v},\ r \in P_{uv},\ r \in P_{ut'} \text{ or } r \in P_{vt'},\\
0 & \text{else,}
\end{cases}
\qquad
k(r) := \begin{cases}
0 & \text{if } r \in P_{s'u},\ r \in P_{uv} \text{ or } r \in P_{vt'},\\
1 & \text{else.}
\end{cases}
$$


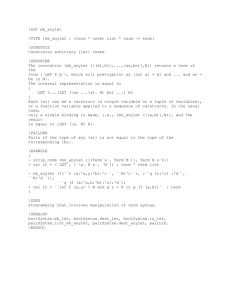

u

1

c=2

k=1

s0

c=

c=1

k=0

s

c= 0

k=

k= 1

1

v

c=

k= 1

1

t0

1

c= 0

k=

c=2

k=1

t

Figure 8.2: Subgraph contained in non-series-parallel G

The situation is illustrated in Figure 8.2. Observe that, given these values, no edge outside these paths can be used, as c(r) = 0, and the path P′ := P_ss′ P_s′u P_uv P_vt′ P_t′t is the unique minimum cost path. For z = 2, the optimum solution to (P_2) obviously does not use P′, as this would block any other path: after one unit is sent along P′, the arcs of P_s′u, P_uv and P_vt′ are saturated and no s-t-path with positive capacity remains. Algorithm 8.1, however, does use P′, and hence the algorithm does not solve (P_2) for the given instance.

□
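The counterexample can be replayed on the smallest possible instance, where s = s′ and t = t′ (so P_ss′ and P_t′t are empty) and each of the five paths is a single arc, with capacities and costs as in the proof. The Python sketch below uses hypothetical names, and, as a simplification, it finds the min cost path by brute-force enumeration of all s-t-paths, which is only sensible for tiny graphs. The greedy augmentation sends only one unit, although z_max = 2.

```python
def greedy_by_enumeration(arcs, s, t):
    """Greedy augmentation as in Algorithm 8.1 on a tiny DAG.

    arcs: dict name -> (tail, head, capacity, cost). The min cost path is found
    by enumerating all s-t-paths of arcs with remaining capacity.
    Returns the total amount of flow sent.
    """
    cap = {r: a[2] for r, a in arcs.items()}

    def paths(v, prefix):               # all v-t-paths of arcs with capacity left
        if v == t:
            yield prefix
            return
        for r, (tail, head, _, _) in arcs.items():
            if tail == v and cap[r] > 0:
                yield from paths(head, prefix + [r])

    sent = 0
    while True:
        candidates = list(paths(s, []))
        if not candidates:
            return sent
        best = min(candidates, key=lambda p: sum(arcs[r][3] for r in p))
        amount = min(cap[r] for r in best)
        for r in best:
            cap[r] -= amount            # saturated arcs drop out of `paths`
        sent += amount


# Wheatstone bridge with s = s' and t = t'; capacities and costs as in the proof.
bridge = {
    "su": ("s", "u", 1, 0),
    "uv": ("u", "v", 1, 0),
    "vt": ("v", "t", 1, 0),
    "sv": ("s", "v", 1, 1),
    "ut": ("u", "t", 1, 1),
}
```

Here the greedy method first picks s → u → v → t of cost 0 and then finds no further path, whereas s → u → t and s → v → t together carry 2 units.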

Let us briefly analyze the running time of the algorithm. In any iteration at least one arc becomes saturated (i.e., its residual capacity is reduced to zero), and any arc can become saturated at most once, since the algorithm does not use any backward arcs. Hence there are at most m iterations of the while-loop, and it remains to bound the effort for computing a minimum cost s-t-path in Step 6. A first naive approach uses dynamic programming: let H be a series-parallel graph and denote by k*(H) the length of a shortest s-t-path in H with respect to some edge weights k (arcs already removed from G are treated as having weight +∞). If H consists of a single edge, k*(H) is trivial to compute. If H is the series composition of H1 and H2, then k*(H) = k*(H1) + k*(H2). Finally, if H is the parallel composition, we have k*(H) = min{k*(H1), k*(H2)}. Thus, we can compute k*(G) bottom-up from the leaves of the decomposition tree in time O(m). This gives an overall running time of O(m²).
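The dynamic program for k*(H) can be sketched as a recursive pass over the parse tree that also returns a path attaining the minimum. This Python sketch assumes leaves encoded as hypothetical tuples `("arc", name, cost)` and a dictionary `cap` of remaining capacities, in which arcs with capacity 0 count as removed (cost +∞); the name `shortest_sp_path` is hypothetical. For brevity it concatenates path lists, which can cost more than O(m) in the worst case; a strictly linear version would record the choices and extract the path in a second pass.

```python
import math


def shortest_sp_path(tree, cap):
    """Return (k*(H), arc names of a min cost s-t-path) for the SP graph of `tree`.

    Leaves are ("arc", name, cost), inner nodes ("S" | "P", left, right).
    Arcs with cap[name] == 0 count as removed, i.e. they have cost +inf.
    """
    if tree[0] == "arc":
        _, name, cost = tree
        return (cost, [name]) if cap[name] > 0 else (math.inf, [])
    op, left, right = tree
    k1, p1 = shortest_sp_path(left, cap)
    k2, p2 = shortest_sp_path(right, cap)
    if op == "S":                       # k*(H) = k*(H1) + k*(H2)
        if math.inf in (k1, k2):
            return math.inf, []
        return k1 + k2, p1 + p2
    return (k1, p1) if k1 <= k2 else (k2, p2)  # k*(H) = min{k*(H1), k*(H2)}
```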

We will now show how this running time can be reduced to O(nm + m log m).

First, we represent each bundle of parallel edges by a binary heap which is min-ordered with respect to the costs. Thus, we can find the minimum cost element of the bundle in O(1) time. We replace each subtree representing such a bundle in the decomposition tree by a single vertex which corresponds to the heap. We are then in the same situation as in Theorem 8.4, where we had a series-parallel graph without parallel arcs. Thus, by Lemma 8.3, at most 3n − 6 edges are left, and a shortest path computation takes time O(n). When an edge becomes saturated, we delete the corresponding minimum cost element from the heap in time O(log m). Since there are at most m iterations, we obtain:

Theorem 8.8 A minimum cost flow in a series-parallel graph can be found in time O(nm + m log m).

□
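The heap-based bundles can be sketched with Python's `heapq` module. In this illustration (the class `Bundle` and its methods are hypothetical), a bundle exposes its cheapest remaining arc in O(1). Since a minimum cost path may be assumed to use the cheapest arc of each bundle it passes, the bottleneck computation only needs that arc's capacity, and the arc is popped in O(log m) once it is saturated.

```python
import heapq


class Bundle:
    """A bundle of parallel arcs, min-ordered by cost in a binary heap."""

    def __init__(self, arcs):
        arcs = list(arcs)                       # entries: (cost, name, capacity)
        self.heap = [(cost, name) for cost, name, _ in arcs]
        heapq.heapify(self.heap)                # linear in the bundle size
        self.cap = {name: c for _, name, c in arcs}

    def cheapest(self):
        """Return (cost, name) of the cheapest remaining arc in O(1), or None."""
        return self.heap[0] if self.heap else None

    def capacity(self):
        """Remaining capacity of the cheapest arc, the bundle's representative."""
        return self.cap[self.heap[0][1]]

    def push_flow(self, amount):
        """Send `amount` units over the cheapest arc; pop it once saturated."""
        _, name = self.heap[0]
        self.cap[name] -= amount
        if self.cap[name] == 0:
            heapq.heappop(self.heap)            # O(log m) deletion
        return name
```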
