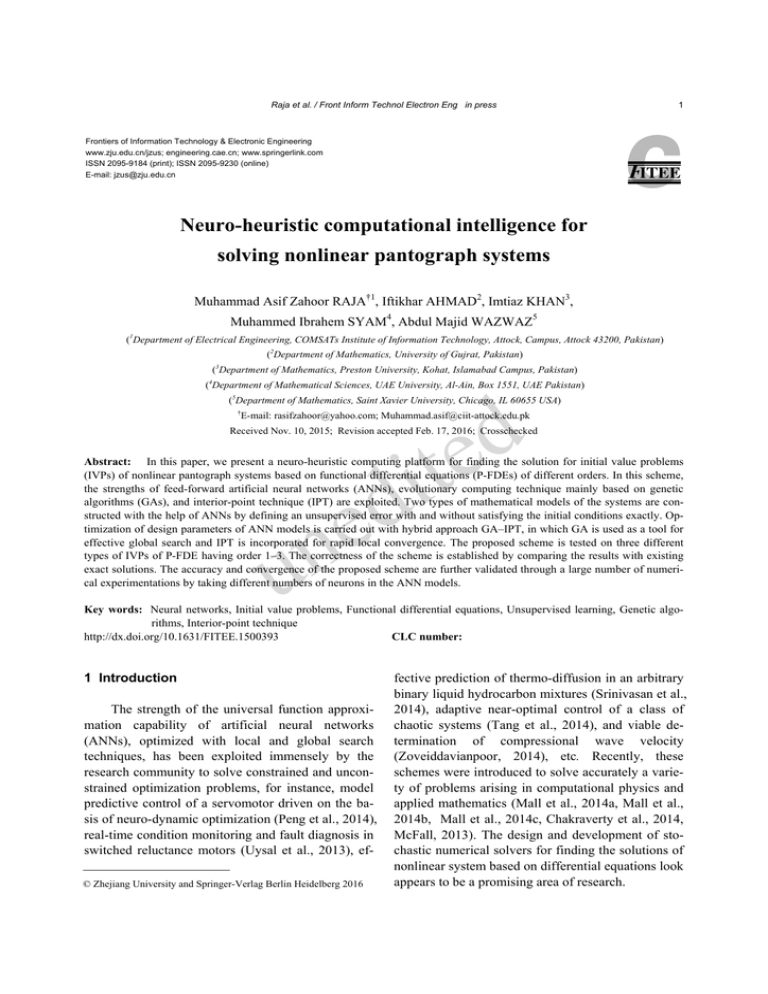

Raja et al. / Front Inform Technol Electron Eng in press

Frontiers of Information Technology & Electronic Engineering
www.zju.edu.cn/jzus; engineering.cae.cn; www.springerlink.com
ISSN 2095-9184 (print); ISSN 2095-9230 (online)
E-mail: jzus@zju.edu.cn

Neuro-heuristic computational intelligence for solving nonlinear pantograph systems

Muhammad Asif Zahoor RAJA†1, Iftikhar AHMAD2, Imtiaz KHAN3, Muhammed Ibrahem SYAM4, Abdul Majid WAZWAZ5

(1Department of Electrical Engineering, COMSATs Institute of Information Technology, Attock Campus, Attock 43200, Pakistan)
(2Department of Mathematics, University of Gujrat, Pakistan)
(3Department of Mathematics, Preston University, Kohat, Islamabad Campus, Pakistan)
(4Department of Mathematical Sciences, UAE University, Al-Ain, Box 1551, UAE)
(5Department of Mathematics, Saint Xavier University, Chicago, IL 60655 USA)
† E-mail: rasifzahoor@yahoo.com; Muhammad.asif@ciit-attock.edu.pk
Received Nov. 10, 2015; Revision accepted Feb. 17, 2016; Crosschecked

Abstract: In this paper, we present a neuro-heuristic computing platform for solving initial value problems (IVPs) of nonlinear pantograph systems based on functional differential equations (P-FDEs) of different orders. The scheme exploits the strengths of feed-forward artificial neural networks (ANNs), an evolutionary computing technique based mainly on genetic algorithms (GAs), and the interior-point technique (IPT). Two types of mathematical models of the systems are constructed with the help of ANNs by defining an unsupervised error with and without satisfying the initial conditions exactly. The design parameters of the ANN models are optimized with the hybrid approach GA–IPT, in which GA is used as a tool for effective global search and IPT is incorporated for rapid local convergence. The proposed scheme is tested on three different types of IVPs of P-FDEs of orders 1–3.
The correctness of the scheme is established by comparing the results with existing exact solutions. The accuracy and convergence of the proposed scheme are further validated through a large number of numerical experiments with different numbers of neurons in the ANN models.

Key words: Neural networks, Initial value problems, Functional differential equations, Unsupervised learning, Genetic algorithms, Interior-point technique
http://dx.doi.org/10.1631/FITEE.1500393
CLC number:
© Zhejiang University and Springer-Verlag Berlin Heidelberg 2016

1 Introduction

The universal function approximation capability of artificial neural networks (ANNs), optimized with local and global search techniques, has been exploited extensively by the research community to solve constrained and unconstrained optimization problems, for instance, model predictive control of a servomotor driven on the basis of neuro-dynamic optimization (Peng et al., 2014), real-time condition monitoring and fault diagnosis in switched reluctance motors (Uysal et al., 2013), effective prediction of thermo-diffusion in arbitrary binary liquid hydrocarbon mixtures (Srinivasan et al., 2014), adaptive near-optimal control of a class of chaotic systems (Tang et al., 2014), and viable determination of compressional wave velocity (Zoveiddavianpoor, 2014). Recently, these schemes were introduced to solve accurately a variety of problems arising in computational physics and applied mathematics (Mall et al., 2014a, Mall et al., 2014b, Mall et al., 2014c, Chakraverty et al., 2014, McFall, 2013). The design and development of stochastic numerical solvers for nonlinear systems based on differential equations appears to be a promising area of research.
Stochastic platforms based on ANNs, supported by global search methods such as genetic algorithms (GAs), particle swarm optimization (PSO), simulated annealing (SA), and pattern search (PS), and by local search methods including interior-point, sequential quadratic programming, Nelder–Mead simplex direct search, and active-set algorithms, are used to solve the governing nonlinear systems associated with initial value problems and boundary value problems of ordinary/partial/fractional/functional differential equations. A few recent applications solved by these models can be seen in the literature, such as the nonlinear Troesch's problem (Raja, 2014a, Raja, 2014b), singular nonlinear transformed two-dimensional Bratu's problems (Raja et al., 2013c, Raja et al., 2013d, Raja et al., 2014e), the nonlinear first Painlevé initial value problem (IVP) (Raja et al., 2015f, Raja et al., 2012g, Raja et al., 2015h), nonlinear Van der Pol oscillatory problems (Khan et al. 2011a, Khan et al. 2015b), the nonlinear boundary value problem (BVP) for the fuel ignition model in combustion theory (Raja et al., 2014i, Raja, 2014j), nonlinear algebraic and transcendental equations (Raja et al., 2015k), nonlinear magnetohydrodynamic (MHD) Jeffery–Hamel flow problems (Raja et al., 2014l, Raja et al., 2014m), nonlinear BVPs of pantograph functional differential equations (Raja, 2014n), linear and nonlinear fractional differential equations (FDEs) (Raja et al., 2010o, Raja et al., 2010p), IVPs of nonlinear Riccati FDEs (Zahoor et al., 2009, Raja et al., 2010q, Raja et al., 2015r), Bagley–Torvik FDEs (Raja et al., 2015s, Raja et al., 2015t), thin film flow (Raja et al., 2015u), and others. Other techniques such as the exponential functions method, collocation method, and the Adomian decomposition method (Evans et al. 2005, Yusufoğlu, 2010, Yüzbaşı et al. 2013a, Bhrawy et al. 2013, Yüzbaşı et al. 2013b, Shakeri et al. 2010, Dehghan et al.
2010a, Dehghan et al. 2010b, Dehghan et al. 2010c, Shakourofar et al. 2008, Barro et al., 2008, Pandit et al., 2014) are used efficiently in the literature. All these contributions motivated us to explore this domain and to design a meta-heuristic computing procedure for solving IVPs of pantograph functional differential equations (P-FDEs).

In this paper, we develop a novel computational intelligence algorithm for approximating the solution of IVPs for functional differential equations of pantograph type using feed-forward artificial neural networks, an evolutionary computing technique based on GAs, the interior-point technique (IPT), and their hybrid combinations. Salient features of the proposed method are as follows:

- The scheme comprises approximate neural network mathematical models formulated in an unsupervised manner.
- Optimal design parameters of the models are trained using heuristic computational intelligence methods based on effective and efficient global and local search techniques.
- Strengths and weaknesses of the proposed methods are analyzed on six different linear and nonlinear initial value problems of the first, second, and third order.
- The validity of the obtained results is established against available standard solutions, i.e., the exact, numerical, and analytical solutions.
- The accuracy and convergence of the proposed scheme are also investigated through a large number of numerical experiments in which the number of neurons in the neural network models is varied.

The rest of the paper is organized as follows: Section 2 gives a brief description of the IVPs of P-FDEs taken for the study. In Section 3, the proposed design methodology is provided in detail along with the neural network architecture and the flow diagram of the hybrid approach GA–IPT. Numerical experimentation based on three problems is presented in Section 4. Conclusions along with future research directions are provided in the last section.
2 System model: IVPs of P-FDEs

A P-FDE is a specific type of functional differential equation that possesses proportional delays. These equations are of fundamental importance because of the characteristics of their functional arguments. Moreover, they play a significant role in the description of various phenomena, in particular in problems where ODE models fail. Systems based on these equations occur in many applications in diverse fields, including adaptive control, number theory, electrodynamics, astrophysics, nonlinear dynamical systems, probability theory on algebraic structures, quantum mechanics, cell growth, engineering, and economics (Ockendon et al. 1971, Iserles, 1993, Zhang et al., 2008, Derfel et al., 1997, Azbelev et al., 2007). The study of this field has been flourishing because of the significant role played by these equations, which has encouraged researchers to invest considerable time and effort in making further progress in this domain. Many researchers have shown great interest in solving P-FDEs, and many algorithms, including analytical and numerical solvers, have been developed, some quite recently (Agarwal et al. 1986, Sedaghat et al., 2012, Saadatmandi et al., 2009).

In this study, a stochastic numerical treatment is presented for solving IVPs of P-FDEs of different orders with prescribed conditions. The generic form of P-FDEs of the first order is given as

$$
\frac{df}{dt} = z\big(f(t),\, f(g(t)),\, t\big), \quad 0 \le t \le 1, \qquad f(0) = c_1, \tag{1}
$$

while for the second-order P-FDE it is given as

$$
\frac{d^2 f}{dt^2} = z\Big(f(t),\, \frac{df}{dt},\, f(g(t)),\, t\Big), \qquad f(0) = c_1, \quad \frac{d}{dt} f(0) = c_2. \tag{2}
$$

Accordingly, the generic form of the P-FDE of the third order is given as

$$
\frac{d^3 f}{dt^3} = z\Big(f(t),\, \frac{df}{dt},\, \frac{d^2 f}{dt^2},\, f(g(t)),\, t\Big), \qquad f(0) = c_1, \quad \frac{d}{dt} f(0) = c_2, \quad \frac{d^2}{dt^2} f(0) = c_3, \tag{3}
$$

where g(t) is a known function, smooth or not, of the inputs, and $c_1$, $c_2$, and $c_3$ are the constants that represent the initial conditions.
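As an illustration of how a proportional delay enters the first-order form in Eq. (1), consider the classical linear pantograph equation. It is used here only as an example and is not one of the test problems of this study; the constants $a$, $b$, and $q$ are assumed symbols introduced for the illustration.

$$
\frac{df}{dt} = a\,f(t) + b\,f(qt), \quad 0 < q < 1, \qquad f(0) = c_1 .
$$

In the notation of Eq. (1), this corresponds to $g(t) = qt$ and $z\big(f(t), f(g(t)), t\big) = a\,f(t) + b\,f(g(t))$, so the right-hand side at time $t$ depends on the solution at the earlier, proportionally scaled time $qt$.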
The system model for the study consists of three IVPs of P-FDEs as represented in Eqs. (1)–(3).

3 Design of unsupervised ANN models

A concise description of the design of unsupervised ANN models for solving IVPs of nonlinear P-FDEs is presented here. Two types of feed-forward ANN models are developed for the solution and derivative terms of the equation. The construction of a fitness function using these networks in an unsupervised manner is also given.

3.1 Artificial neural network modeling: Type 1

In this case, the mathematical model of P-FDEs is developed by exploiting the strength of feed-forward ANNs in the form of the following continuous mappings for the solution f(t), its first derivative df/dt, second derivative d²f/dt², and nth-order derivative dⁿf/dtⁿ:

$$
\begin{aligned}
\hat f(t) &= \sum_{i=1}^{k} \alpha_i\, y(w_i t + \beta_i),\\
\frac{d\hat f}{dt} &= \sum_{i=1}^{k} \alpha_i \frac{d}{dt}\, y(w_i t + \beta_i),\\
\frac{d^2\hat f}{dt^2} &= \sum_{i=1}^{k} \alpha_i \frac{d^2}{dt^2}\, y(w_i t + \beta_i),\\
\frac{d^n\hat f}{dt^n} &= \sum_{i=1}^{k} \alpha_i \frac{d^n}{dt^n}\, y(w_i t + \beta_i),
\end{aligned}
\tag{4}
$$

where $\alpha_i$, $w_i$, and $\beta_i$ are the adaptive weights of the ith neuron and k is the number of neurons. For the composite functional terms in functional differential equations, the following neural network mappings are incorporated:

$$
\begin{aligned}
\hat f(g(t)) &= \sum_{i=1}^{k} \alpha_i\, y(w_i g(t) + \beta_i),\\
\frac{d\hat f(g(t))}{dt} &= \sum_{i=1}^{k} \alpha_i \frac{d}{dt}\, y(w_i g(t) + \beta_i),\\
\frac{d^2\hat f(g(t))}{dt^2} &= \sum_{i=1}^{k} \alpha_i \frac{d^2}{dt^2}\, y(w_i g(t) + \beta_i),\\
\frac{d^n\hat f(g(t))}{dt^n} &= \sum_{i=1}^{k} \alpha_i \frac{d^n}{dt^n}\, y(w_i g(t) + \beta_i).
\end{aligned}
\tag{5}
$$

The networks in Eqs. (4) and (5) normally use the log-sigmoid $y(x) = 1/(1 + e^{-x})$ and its derivatives as activation functions. The first few terms of the networks in (4) can therefore be written as

$$
\begin{aligned}
\hat f(t) &= \sum_{i=1}^{k} \frac{\alpha_i}{1 + e^{-(w_i t + \beta_i)}},\\
\frac{d\hat f}{dt} &= \sum_{i=1}^{k} \alpha_i w_i \frac{e^{-(w_i t + \beta_i)}}{\big(1 + e^{-(w_i t + \beta_i)}\big)^2},\\
\frac{d^2\hat f}{dt^2} &= \sum_{i=1}^{k} \alpha_i w_i^2 \left( \frac{2 e^{-2(w_i t + \beta_i)}}{\big(1 + e^{-(w_i t + \beta_i)}\big)^3} - \frac{e^{-(w_i t + \beta_i)}}{\big(1 + e^{-(w_i t + \beta_i)}\big)^2} \right).
\end{aligned}
\tag{6}
$$

Similarly, the first few terms of the networks in (5) can be written as

$$
\begin{aligned}
\hat f(g(t)) &= \sum_{i=1}^{k} \frac{\alpha_i}{1 + e^{-(w_i g(t) + \beta_i)}},\\
\frac{d\hat f(g(t))}{dt} &= \sum_{i=1}^{k} \alpha_i w_i \frac{e^{-(w_i g(t) + \beta_i)}}{\big(1 + e^{-(w_i g(t) + \beta_i)}\big)^2}\, \frac{dg}{dt},\\
\frac{d^2\hat f(g(t))}{dt^2} &= \sum_{i=1}^{k} \alpha_i w_i^2 \left( \frac{2 e^{-2(w_i g(t) + \beta_i)}}{\big(1 + e^{-(w_i g(t) + \beta_i)}\big)^3} - \frac{e^{-(w_i g(t) + \beta_i)}}{\big(1 + e^{-(w_i g(t) + \beta_i)}\big)^2} \right) \left(\frac{dg}{dt}\right)^2\\
&\quad + \sum_{i=1}^{k} \alpha_i w_i \frac{e^{-(w_i g(t) + \beta_i)}}{\big(1 + e^{-(w_i g(t) + \beta_i)}\big)^2}\, \frac{d^2 g}{dt^2}.
\end{aligned}
\tag{7}
$$

A suitable combination of the equations in sets (4) and (5) can be used to model the P-FDEs given in Eqs. (1)–(3).

Fitness Function Formulation

An objective function, or fitness function, is developed by defining an unsupervised error, given by the sum of two mean-squared errors as

$$
e = e_1 + e_2, \tag{8}
$$

where $e_1$ and $e_2$ are error functions associated with the P-FDE of the given order and its initial conditions, respectively. In the case of the first-order P-FDE (1), the error functions $e_1^{FO}$ for the equation and $e_2^{FO}$ for its initial condition are constructed, respectively, as

$$
\begin{aligned}
e_1^{FO} &= \frac{1}{N} \sum_{m=1}^{N} \left( \frac{d\hat f_m}{dt} - z\big(\hat f_m,\, \hat f(g_m),\, t_m\big) \right)^2, \quad N = \frac{1}{h}, \quad \hat f_m = \hat f(t_m), \quad g_m = g(t_m), \quad t_m = mh,\\
e_2^{FO} &= \big(\hat f_0 - c_1\big)^2.
\end{aligned}
\tag{9}
$$

For the second-order P-FDE given in (2), the error functions $e_1^{SO}$ and $e_2^{SO}$ are constructed as follows:

$$
\begin{aligned}
e_1^{SO} &= \frac{1}{N} \sum_{m=1}^{N} \left( \frac{d^2\hat f_m}{dt^2} - z\Big(\hat f_m,\, \frac{d\hat f_m}{dt},\, \hat f(g_m),\, t_m\Big) \right)^2,\\
e_2^{SO} &= \frac{1}{2} \left( \big(\hat f_0 - c_1\big)^2 + \Big(\frac{d\hat f_0}{dt} - c_2\Big)^2 \right).
\end{aligned}
\tag{10}
$$

Accordingly, in the case of the third-order P-FDE given in (3), the unsupervised error functions $e_1^{TO}$ and $e_2^{TO}$ are formulated, respectively, as

$$
\begin{aligned}
e_1^{TO} &= \frac{1}{N} \sum_{m=1}^{N} \left( \frac{d^3\hat f_m}{dt^3} - z\Big(\hat f_m,\, \frac{d\hat f_m}{dt},\, \frac{d^2\hat f_m}{dt^2},\, \hat f(g_m),\, t_m\Big) \right)^2,\\
e_2^{TO} &= \frac{1}{3} \left( \big(\hat f_0 - c_1\big)^2 + \Big(\frac{d\hat f_0}{dt} - c_2\Big)^2 + \Big(\frac{d^2\hat f_0}{dt^2} - c_3\Big)^2 \right).
\end{aligned}
\tag{11}
$$

3.2 Artificial neural network modeling: Type 2

An alternative ANN model that satisfies the initial conditions exactly is also developed to solve IVPs of P-FDEs. The governing equations of the updated neural network model for the solution f(t), its first derivative df/dt, second derivative d²f/dt², and nth-order derivative dⁿf/dtⁿ are written as

$$
\begin{aligned}
\tilde f(t) &= P(t) + Q(t)\, \hat f(g(t)),\\
\frac{d\tilde f}{dt} &= \frac{dP}{dt} + \frac{dQ}{dt}\, \hat f(g(t)) + Q(t)\, \hat f'(g(t))\, \frac{dg}{dt},\\
\frac{d^2\tilde f}{dt^2} &= \frac{d^2P}{dt^2} + \frac{d^2Q}{dt^2}\, \hat f(g(t)) + 2\, \frac{dQ}{dt}\, \hat f'(g(t))\, \frac{dg}{dt} + Q(t)\, \hat f''(g(t)) \left(\frac{dg}{dt}\right)^2 + Q(t)\, \hat f'(g(t))\, \frac{d^2 g}{dt^2},\\
\frac{d^n\tilde f}{dt^n} &= \frac{d^nP}{dt^n} + \frac{d^nQ}{dt^n}\, \hat f(g(t)) + \cdots + Q(t)\, \frac{d^n}{dt^n} \hat f(g(t)),
\end{aligned}
\tag{12}
$$

where primes on $\hat f$ denote derivatives with respect to its argument, and the network $\hat f$ and its derivatives are those given in the sets of equations (4) and (5). The updated neural network used for solving Eqs. (1)–(3) satisfies the initial conditions exactly through an appropriate choice of the functions P(t) and Q(t). A suitable combination of the equations in set (12) can be used to model the P-FDEs given in Eqs. (1)–(3).

Fitness Function Formulation

An objective function, or fitness function, $\varepsilon_{FO}$ is developed in an unsupervised manner for Eq. (1) in the mean-squared sense as follows:

$$
\varepsilon_{FO} = \frac{1}{N} \sum_{m=1}^{N} \left( \frac{d\tilde f_m}{dt} - z\big(\tilde f_m,\, \tilde f(g_m),\, t_m\big) \right)^2, \quad N = \frac{1}{h}, \quad \tilde f_m = \tilde f(t_m), \quad g_m = g(t_m), \quad t_m = mh, \tag{13}
$$

where $\tilde f$ and its derivatives are given by the set of equations (12). In the case of the second-order P-FDE given in (2), the fitness function $\varepsilon_{SO}$ is constructed as

$$
\varepsilon_{SO} = \frac{1}{N} \sum_{m=1}^{N} \left( \frac{d^2\tilde f_m}{dt^2} - z\Big(\tilde f_m,\, \frac{d\tilde f_m}{dt},\, \tilde f(g_m),\, t_m\Big) \right)^2. \tag{14}
$$

Consequently, in the case of the third-order P-FDE given in (3), the fitness function $\varepsilon_{TO}$ is written as

$$
\varepsilon_{TO} = \frac{1}{N} \sum_{m=1}^{N} \left( \frac{d^3\tilde f_m}{dt^3} - z\Big(\tilde f_m,\, \frac{d\tilde f_m}{dt},\, \frac{d^2\tilde f_m}{dt^2},\, \tilde f(g_m),\, t_m\Big) \right)^2. \tag{15}
$$
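The type 1 model with log-sigmoid activation and the first-order unsupervised error e = e1 + e2 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`ann`, `ann_dt`, `fitness_first_order`) are hypothetical, and `alpha`, `w`, and `beta` are assumed labels for the three weights of each neuron.

```python
import math


def sigmoid(x):
    """Log-sigmoid activation y(x) = 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def ann(t, alpha, w, beta):
    """Network output: sum over neurons of alpha_i * y(w_i * t + beta_i)."""
    return sum(a * sigmoid(wi * t + b) for a, wi, b in zip(alpha, w, beta))


def ann_dt(t, alpha, w, beta):
    """First derivative of the network in t, using y'(x) = y(x) * (1 - y(x))."""
    total = 0.0
    for a, wi, b in zip(alpha, w, beta):
        y = sigmoid(wi * t + b)
        total += a * wi * y * (1.0 - y)
    return total


def fitness_first_order(alpha, w, beta, z, g, c1, n_points=10):
    """Unsupervised error e = e1 + e2 for df/dt = z(f(t), f(g(t)), t), f(0) = c1.

    z and g are user-supplied callables (assumed interface);
    the grid is t_m = m * h with h = 1 / n_points.
    """
    h = 1.0 / n_points
    e1 = 0.0
    for m in range(1, n_points + 1):
        t = m * h
        f_t = ann(t, alpha, w, beta)
        f_g = ann(g(t), alpha, w, beta)
        residual = ann_dt(t, alpha, w, beta) - z(f_t, f_g, t)
        e1 += residual ** 2
    e1 /= n_points                                # mean-squared equation residual
    e2 = (ann(0.0, alpha, w, beta) - c1) ** 2     # initial-condition error
    return e1 + e2
```

A weight vector that drives this value towards zero makes the network satisfy both the equation on the grid and the initial condition, which is the sense in which the training is unsupervised: no reference solution enters the error.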
Once weights of the neural networks are found for which the objective functions given in Eqs. (8)–(15) approach zero, the proposed solution approximates the exact solution of Eqs. (1)–(3).

4 Learning process

4.1 Genetic algorithm

GAs, inspired by the natural evolution of genes, were introduced by Holland (Holland, 1975). GAs use natural evolution as an optimization mechanism for solving problems in diverse areas of computational mathematics, engineering, and science. The performance of a GA depends upon factors including the diversity of the initial population, appropriate selection of the fittest chromosomes for the next generation, survival of high-quality genes in the recombination operation, seeding of new genetic material through mutation, and the number of generations. The GA is well known as an efficient and effective global search technique that is less likely to get stuck in a local minimum, avoids divergence, and is stable and robust compared to other mathematical solvers. The fundamental operations of the GA involve crossover (single point, multiple points, heuristic, etc.), mutation via various functions (linear, logarithmic, adaptive feasible, etc.), and selection functions based on the uniform method, tournament method, etc. Recently reported applications addressed with GAs can be seen in (Zhang et al., 2014, Troiono et al., 2014, Arqub et al., 2014, Xu et al., 2013).

Two types of parameter settings are involved in GAs: general and specific. The general settings involve the population size, number of generations, size of the chromosome, and lower and upper bounds of the adaptive parameters, whereas the specific settings include the scaling function, selection function, crossover function, mutation function, elite count, migration direction, etc.
The parameter settings fixed in this work are provided in Table 1; they were obtained by performing a number of runs, depending on the complexity and accuracy of the problems.

4.2 Interior-point technique

IPT is an efficient local search method for tuning the weights or unknowns of a problem. It is a derivative-based technique built on Lagrange multipliers and the Karush–Kuhn–Tucker (KKT) equations (Potra et al., 2000, Wright et al., 1997). Both general and specific parameter settings are involved in IPT; the specific parameters involve the type of derivative, the scaling function, the maximum perturbation, the type of finite difference, etc. The general settings involve the Hessian function, minimum perturbation, nonlinear constraint tolerance, fitness limit, and upper and lower bounds. The parameter settings fixed in this work are shown in Table 2.

4.3 Hybrid approach: GA–IPT

It is well known that global search techniques are good at finding an approximate solution, but only at a lower level of accuracy. Local search techniques, on the other hand, converge rapidly, but they can get stuck in local minima or converge prematurely. The hybrid approach exploits the capabilities of both the global and the local optimizer to obtain an accurate result. As the number of GA generations increases, the ability of the GA to improve the solution decreases significantly, so that even a very large number of iterations rarely yields optimal and reliable results. It is therefore necessary to combine the GA with an efficient local search technique to obtain better results. Hence, IPT is employed in this work to provide rapid convergence, taking the best global individual of the GA as the start point in the training of the neural network model of the IVP of pantograph functional DEs. The generic workflow diagram of the proposed methodology is given in Fig.
1, while necessary details for the procedural steps are provided in Table 3.

Table 1 Parameter setting for GAs

| Parameter | Setting | Parameter | Setting |
|---|---|---|---|
| Population creation | Constraint-dependent | Population size | 300 |
| Scaling function | Rank | Chromosome size | 30/45/60 |
| Selection function | Uniform | Generation | 700 |
| Crossover fraction | 1.25 | Function tolerance | $10^{-20}$ |
| Crossover function | Heuristic | StallGenLimit | 100 |
| Mutation function | Adaptive feasible | Bounds (lower, upper) | $(-20, 20)^{1\times 30}$ |
| Elite count | 4 | Migration direction | Forward |
| Initial penalty | 10 | Migration interval | 20 |
| Penalty factor | 100 | Nonlinear constraint tolerance | $10^{-20}$ |
| Migration fraction | 0.2 | Fitness limit | $10^{-20}$ |
| Subpopulations | 10 | Others | Defaults |

Table 2 Parameter setting for interior-point technique

| Parameter | Setting | Parameter | Setting |
|---|---|---|---|
| Start point | Randomly from (–3.5, 3.5) | Hessian | BFGS |
| Derivative | Solver approximate | Minimum perturbation | $10^{-8}$ |
| Subproblem algorithm | LDL factorization | X-tolerance | $10^{-18}$ |
| Scaling | Objective and constraints | Nonlinear constraint tolerance | $10^{-15}$ |
| Maximum perturbation | 0.1 | Fitness limit | $10^{-15}$ |
| Finite difference types | Central differences | Bounds (lower, upper) | (–35, 35) |

Fig. 1 Generic flowchart of hybrid evolutionary algorithm based on GA–IPT

Table 3 Process of training of weights of ANN models with integrated approach GA–IPT

Procedure of GA–IPT in steps

Step 1: Initialization: The initial population is randomly generated as real-valued chromosomes (individuals), each with as many elements as there are unknown weights in the model. Initialize the GA parameters as given in Table 1.

Step 2: Calculate Fitness: The fitness value for each individual of the population is evaluated first by using Eq. (3) for the first neural network model and then Eq. (8) for the second neural network model.
Step 3: Ranking: Each individual of the population is ranked on the basis of the minimum value of the respective fitness function of the model.

Step 4: Termination Criteria: The algorithm is terminated when any of the following criteria is matched:

- The predefined fitness value is achieved.
- The number of generations is completed.
- The stoppage criteria given in Table 1 are fulfilled.

If any of the above-mentioned termination criteria is met, then go to Step 6.

Step 5: Reproduction: Create the next generation at each cycle by using Crossover: call for heuristic function; Mutation: call for Gaussian function; Selection: call for stochastic uniform function; and Elitism count 05, etc. Repeat the process from Step 2 to Step 5 with the new population.

Step 6: Hybridization: The IPT is used to refine the results, using the best individual of the GA as initial weights. The parameter settings used for IPT are given in Table 2.

Step 7: Store: The final weight vector and fitness values achieved for this run of the algorithm are stored.

5 Results and discussion

In this section, the results of the design methodology are presented for linear and nonlinear IVPs of P-FDEs. Three types of problems, based on different orders, are taken for numerical experimentation. Proposed solutions of the equations are determined for both types of ANN models. The effect of changing the number of neurons on the accuracy and convergence is also presented in a number of graphical and numerical illustrations, along with comparative studies against reference exact solutions.

5.1 Problem I: IVPs of first-order P-FDEs

Four different problems of first-order P-FDEs [35–37] are solved by the methodology presented in the last section.

a) Example 1

In this case, the IVP of the first-order P-FDE [43, 44] of type (1) is taken as

$$
\frac{df}{dt} = \frac{1}{2} e^{t/2} f\!\left(\frac{t}{2}\right) + \frac{1}{2} f(t), \qquad f(0) = 1. \tag{16}
$$
Equation (16) is derived from (1) by taking $z\big(f, f(g), t\big) = 0.5\, e^{t/2} f(g(t)) + 0.5 f(t)$ and $g(t) = t/2$. The exact solution of Eq. (16) is known and is given by

$$
f(t) = e^{t}. \tag{17}
$$

The proposed design methodology is applied to solve the IVP with the two types of ANN models to obtain the approximate solution. The unsupervised errors in the form of fitness functions are developed for this equation using N = 10 and step size h = 0.1, and these are written for the two models as

$$
\varepsilon_{FO} = \frac{1}{10} \sum_{m=1}^{10} \left( \frac{d\tilde f_m}{dt} - \frac{1}{2} e^{t_m/2} \tilde f(t_m/2) - \frac{1}{2} \tilde f_m \right)^2, \tag{18}
$$

$$
e_{FO} = \frac{1}{10} \sum_{m=1}^{10} \left( \frac{d\hat f_m}{dt} - \frac{1}{2} e^{t_m/2} \hat f(t_m/2) - \frac{1}{2} \hat f_m \right)^2 + \big(\hat f_0 - 1\big)^2. \tag{19}
$$

The requirement now is to search for trained weights for the fitness functions given in Eqs. (18) and (19). The proposed methods based on GA, IPT, and the hybrid approach GA–IPT are applied to train the unknown weights using the parameter settings listed in Tables 1 and 2. One set of weights optimized by GA–IPT for k = 10 neurons, with fitness of around $10^{-9}$ for both models, is used to derive the approximate solutions, which take the form

$$
\tilde f(t) = e^{t} + t^2 \sum_{i=1}^{10} \frac{\alpha_i}{1 + e^{-(w_i t + \beta_i)}}, \tag{20}
$$

$$
\hat f(t) = \sum_{i=1}^{10} \frac{\alpha_i}{1 + e^{-(w_i t + \beta_i)}}, \tag{21}
$$

with the trained values of the weights $\alpha_i$, $w_i$, and $\beta_i$. The solutions presented in Eqs. (20) and (21) are provided in extended form in the Appendix in Eqs. (48) and (49), respectively. The proposed solutions are obtained with weights trained by GA–IPT for k = 10, 20, and 30 neurons, using Eqs.
(20) and (21) along with the first equation of sets (7) and (12). Results are given in Table 4 for inputs t ∈ [0, 1] with step size 0.1. The exact solution is determined using Eq. (17) and is also given in Table 4. The proposed solutions are also calculated with the optimized weights of GA, IPT, and GA–IPT, and the results in terms of absolute error (AE) from the reference exact solution are shown graphically in Fig. 2. It can be inferred from the results presented in Fig. 2(a) that for k = 10, 15, and 20 the values of AE for GA lie around $10^{-6}$, $10^{-6}$–$10^{-7}$, and $10^{-6}$, respectively, for the mathematical model based on (18), while for (19) the values of AE are around $10^{-3}$–$10^{-4}$ for each of k = 10, 15, and 20. Similarly, one can see from Fig. 2(b) that for k = 10, 15, and 20 the values of AE for IPT lie around $10^{-6}$, $10^{-6}$–$10^{-7}$, and $10^{-6}$, respectively, for the mathematical model based on (18), while for (19) the values of AE are around $10^{-3}$–$10^{-4}$ for each of k = 10, 15, and 20. The results of GA–IPT shown in Fig. 2(c) show that for k = 10, 15, and 20 the values of AE lie around $10^{-6}$, $10^{-6}$–$10^{-7}$, and $10^{-6}$, respectively, for the mathematical model based on (18), while for (19) the values of AE are around $10^{-3}$–$10^{-4}$ for each of k = 10, 15, and 20.

b) Example 2

Consider another first-order nonlinear IVP of P-FDE [35, 36]:

$$
\frac{df}{dt} = 1 - 2 f^2(g(t)), \qquad f(0) = 0. \tag{22}
$$

Equation (22) is derived from Eq. (1) by taking $z\big(f, f(g), t\big) = 1 - 2 f^2(g(t))$ and $g(t) = t/2$. The exact solution of Eq. (22) is

$$
f(t) = \sin t. \tag{23}
$$
The proposed design methodology is applied with the same procedure as in the last example; the fitness functions using N = 10 and step size h = 0.1 are written in this case for the two types of neural network models as

$$
\varepsilon_{FO} = \frac{1}{10} \sum_{m=1}^{10} \left( \frac{d\tilde f_m}{dt} - 1 + 2 \tilde f^2(t_m/2) \right)^2, \tag{24}
$$

$$
e_{FO} = \frac{1}{10} \sum_{m=1}^{10} \left( \frac{d\hat f_m}{dt} - 1 + 2 \hat f^2(t_m/2) \right)^2 + \big(\hat f_0\big)^2. \tag{25}
$$

One set of weights trained by GA–IPT in the case of ten neurons, i.e., k = 10, with fitness values in the range of $10^{-8}$ for both ANN models, is used to obtain the derived solutions, which take the form

$$
\tilde f(t) = \sin t + t^2 \sum_{i=1}^{10} \frac{\alpha_i}{1 + e^{-(w_i t + \beta_i)}}, \tag{26}
$$

$$
\hat f(t) = \sum_{i=1}^{10} \frac{\alpha_i}{1 + e^{-(w_i t + \beta_i)}}, \tag{27}
$$

with the trained values of the weights $\alpha_i$, $w_i$, and $\beta_i$. The solutions presented in Eqs. (26) and (27) are provided in extended form in the Appendix in Eqs. (50) and (51), respectively. Solutions are calculated using the trained weights of GA–IPT, and the results are given in Table 5 for inputs t ∈ [0, 1] with step size 0.1 for different numbers of neurons. The exact solution for the problem is also given in Table 5 for the same inputs. The results in terms of AE are plotted graphically in Fig. 3. It is seen from the results that for k = 10, 15, and 20 both methodologies give small values of AE, but the ANN model that satisfies the initial condition exactly provides better accuracy.
Table 4 Comparison of proposed results by GA–IPT with the exact solution for example 1 of problem 1

| t | f(t) Exact | $\tilde f(t)$, k = 30 | $\tilde f(t)$, k = 45 | $\tilde f(t)$, k = 60 | $\hat f(t)$, k = 30 | $\hat f(t)$, k = 45 | $\hat f(t)$, k = 60 |
|---|---|---|---|---|---|---|---|
| 0 | 1 | 1 | 1 | 1 | 1.000002 | 1 | 1 |
| 0.1 | 1.105171 | 1.105171 | 1.105168 | 1.105171 | 1.105214 | 1.105093 | 1.105093 |
| 0.2 | 1.221403 | 1.221403 | 1.221399 | 1.221403 | 1.22144 | 1.221327 | 1.221327 |
| 0.3 | 1.349859 | 1.349859 | 1.349857 | 1.349859 | 1.349883 | 1.349776 | 1.349776 |
| 0.4 | 1.491825 | 1.491825 | 1.491823 | 1.491825 | 1.491858 | 1.491723 | 1.491723 |
| 0.5 | 1.648721 | 1.648721 | 1.648717 | 1.648722 | 1.648782 | 1.648609 | 1.648609 |
| 0.6 | 1.822119 | 1.822118 | 1.822114 | 1.822119 | 1.822196 | 1.822005 | 1.822005 |
| 0.7 | 2.013753 | 2.013752 | 2.013748 | 2.013753 | 2.013816 | 2.013629 | 2.013629 |
| 0.8 | 2.225541 | 2.225541 | 2.225538 | 2.225541 | 2.225582 | 2.225395 | 2.225395 |
| 0.9 | 2.459603 | 2.459603 | 2.459599 | 2.459604 | 2.459665 | 2.459439 | 2.459439 |
| 1 | 2.718282 | 2.718281 | 2.718275 | 2.718282 | 2.718391 | 2.718114 | 2.718114 |

Fig. 2. Comparison of proposed results with the reference exact solution for example 1 of problem 1. Panels: (a) ANN model of type 1 for GA–IPT; (b) ANN model of type 2 for GA–IPT; (c) ANN models optimized with GAs; (d) ANN models optimized with IPT; (e) ANN models optimized with GA–IPT. In (a) and (b), results are plotted in terms of solution versus inputs, while in (c), (d), and (e), the values of the corresponding absolute errors are shown with solid lines for the ANN model of type 1 and dashed lines for the ANN model of type 2 for all three algorithms.

Fig. 3 panels: (a) ANN model of type 1 for GA–IPT; (b) ANN model of type 2 for GA–IPT; (c) ANN models optimized with GAs; (d) ANN models optimized with IPT.

Fig. 3. Comparison of proposed results with the reference exact solution for example 2 of problem 1.
In (a) and (b), results are plotted in terms of solution versus inputs, while in (c), (d), and (e), the values of the corresponding absolute errors are shown with solid lines for the ANN model of type 1 and dashed lines for the ANN model of type 2 for all three algorithms. Panel (e): ANN models optimized with GA–IPT.

Table 5 Comparison of proposed results by GA–IPT with the exact solution for example 2 of problem 1

| t | f(t) Exact | $\tilde f(t)$, k = 30 | $\tilde f(t)$, k = 45 | $\tilde f(t)$, k = 60 | $\hat f(t)$, k = 30 | $\hat f(t)$, k = 45 | $\hat f(t)$, k = 60 |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | –1.62E–07 | –7.20E–09 | –1.62E–09 |
| 0.1 | 0.099833 | 0.099833 | 0.099833 | 0.099833 | 0.099833 | 0.099819 | 0.099809 |
| 0.2 | 0.198669 | 0.198669 | 0.198669 | 0.198669 | 0.19867 | 0.198659 | 0.19865 |
| 0.3 | 0.29552 | 0.29552 | 0.29552 | 0.29552 | 0.295523 | 0.295515 | 0.295507 |
| 0.4 | 0.389418 | 0.389418 | 0.389418 | 0.389418 | 0.389421 | 0.38941 | 0.389404 |
| 0.5 | 0.479426 | 0.479425 | 0.479426 | 0.479425 | 0.479424 | 0.479412 | 0.479406 |
| 0.6 | 0.564642 | 0.564642 | 0.564642 | 0.564642 | 0.564639 | 0.56463 | 0.564623 |
| 0.7 | 0.644218 | 0.644218 | 0.644218 | 0.644218 | 0.644217 | 0.644213 | 0.644206 |
| 0.8 | 0.717356 | 0.717356 | 0.717356 | 0.717356 | 0.71736 | 0.717358 | 0.717352 |
| 0.9 | 0.783327 | 0.783327 | 0.783327 | 0.783326 | 0.783327 | 0.783323 | 0.78332 |
| 1 | 0.841471 | 0.841471 | 0.841471 | 0.841471 | 0.841464 | 0.841461 | 0.841461 |

c) Example 3

Consider another form of first-order IVP of P-FDEs with proportional delay in the forcing term [37–44]:

$$
\frac{df}{dt} = -f(t) + \frac{1}{4} f(g(t)) - \frac{1}{4} e^{-t/2}, \qquad f(0) = 1. \tag{28}
$$

Equation (28) is derived from Eq. (1) by taking $z\big(f, f(g), t\big) = -f(t) + 0.25 f(g(t)) - 0.25\, e^{-t/2}$ and $g(t) = t/2$. The exact solution of the problem is given by

$$
f(t) = e^{-t}. \tag{29}
$$

In this case, the fitness functions based on the unsupervised error are formed using N = 10 and step size h = 0.1 and are given for the two kinds of ANN models as

$$
\varepsilon_{FO} = \frac{1}{10} \sum_{m=1}^{10} \left( \frac{d\tilde f_m}{dt} + \tilde f_m - \frac{1}{4} \tilde f(t_m/2) + \frac{1}{4} e^{-t_m/2} \right)^2, \tag{30}
$$

$$
e_{FO} = \frac{1}{10} \sum_{m=1}^{10} \left( \frac{d\hat f_m}{dt} + \hat f_m - \frac{1}{4} \hat f(t_m/2) + \frac{1}{4} e^{-t_m/2} \right)^2 + \big(\hat f_0 - 1\big)^2. \tag{31}
$$

Optimization of the fitness functions given in (30) and (31) is carried out with GA, IPT, and the hybrid approach GA–IPT. The solutions derived using one set of weights by GA–IPT for k = 10, with fitness values of order $10^{-11}$ and $10^{-8}$ for ANN model types 1 and 2, respectively, take the form

$$
\tilde f(t) = e^{-t} + t^2 \sum_{i=1}^{10} \frac{\alpha_i}{1 + e^{-(w_i t + \beta_i)}}, \tag{32}
$$

$$
\hat f(t) = \sum_{i=1}^{10} \frac{\alpha_i}{1 + e^{-(w_i t + \beta_i)}}, \tag{33}
$$

with the trained values of the weights $\alpha_i$, $w_i$, and $\beta_i$. The solutions presented in Eqs. (32) and (33) are provided in extended form in the Appendix in Eqs. (52) and (53), respectively. The approximate solutions are also calculated with the weights of the GA–IPT algorithm for different numbers of neurons, i.e., k = 10, 20, and 30; the results are listed in Table 6 for inputs in the interval [0, 1] with a step size of 0.1, and in Fig. 4 in terms of AEs. The exact solution is also given in the table for comparison. The proposed results overlap the standard solution, and small values of AE are achieved for this example as well.
Table 6 Comparison of the proposed results by GA–IPT with the exact solution for example 3 of problem 1

| t | Exact $f(t)$ | $\tilde{f}(t)$, k = 30 | $\tilde{f}(t)$, k = 45 | $\tilde{f}(t)$, k = 60 | $\hat{f}(t)$, k = 30 | $\hat{f}(t)$, k = 45 | $\hat{f}(t)$, k = 60 |
|---|---|---|---|---|---|---|---|
| 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 0.1 | 0.904837 | 0.904837 | 0.904839 | 0.904836 | 0.904863 | 0.904863 | 0.904907 |
| 0.2 | 0.818731 | 0.81873 | 0.818732 | 0.818730 | 0.818752 | 0.818752 | 0.818789 |
| 0.3 | 0.740818 | 0.740818 | 0.740819 | 0.740818 | 0.740839 | 0.740839 | 0.740874 |
| 0.4 | 0.67032 | 0.67032 | 0.670321 | 0.670320 | 0.67034 | 0.67034 | 0.670375 |
| 0.5 | 0.606531 | 0.60653 | 0.606532 | 0.606530 | 0.606548 | 0.606549 | 0.606579 |
| 0.6 | 0.548812 | 0.548811 | 0.548813 | 0.548811 | 0.548828 | 0.548828 | 0.548855 |
| 0.7 | 0.496585 | 0.496585 | 0.496585 | 0.496585 | 0.496601 | 0.496601 | 0.496627 |
| 0.8 | 0.449329 | 0.449329 | 0.449329 | 0.449329 | 0.449345 | 0.449345 | 0.44937 |
| 0.9 | 0.40657 | 0.40657 | 0.406570 | 0.406569 | 0.406583 | 0.406584 | 0.406607 |
| 1 | 0.367879 | 0.367879 | 0.367880 | 0.367880 | 0.367892 | 0.367892 | 0.367913 |

5.2 Problem II: Second-Order P-FDE

In this case, we evaluate the performance of the proposed scheme on the second-order IVP of P-FDE [36, 37]:

$$\frac{d^2 f}{dt^2} = \frac{3}{4} f(t) + f(g(t)) - t^2 + 2, \qquad f(0) = 0, \quad \frac{df}{dt}(0) = 0, \quad g(t) = t/2. \tag{34}$$

Equation (34) is derived from Eq. (2) for $g(t) = t/2$ and the function $z$ given by

$$z(f, f \circ g, t) = \frac{3}{4} f(t) + f(g(t)) - t^2 + 2. \tag{35}$$

The exact solution of Eq. (34) is given by

$$f(t) = t^2. \tag{36}$$

For solving this problem, two fitness functions are formulated by taking N = 10 and step size h = 0.1:

$$\tilde{\varepsilon}_{SO} = \frac{1}{10}\sum_{m=1}^{10}\left(\frac{d^2\tilde{f}_m}{dt^2} - \frac{3}{4}\tilde{f}_m - \tilde{f}(t_m/2) + t_m^2 - 2\right)^2, \tag{37}$$

$$\hat{\varepsilon}_{SO} = \frac{1}{10}\sum_{m=1}^{10}\left(\frac{d^2\hat{f}_m}{dt^2} - \frac{3}{4}\hat{f}_m - \hat{f}(t_m/2) + t_m^2 - 2\right)^2 + \frac{1}{2}\left(\left(\hat{f}_0\right)^2 + \left(\frac{d\hat{f}_0}{dt}\right)^2\right). \tag{38}$$

Fig. 4. Comparison of the proposed results with the reference exact solution for example 3 of problem 1: (a) ANN model of type 1 for GA–IPT; (b) ANN model of type 2 for GA–IPT; (c) ANN models optimized with GAs; (d) ANN models optimized with IPT; (e) ANN models optimized with GA–IPT. In panels (a) and (b), the solutions are plotted against the inputs, while panels (c), (d), and (e) show the corresponding absolute errors, with solid lines for the type 1 ANN model and dashed lines for the type 2 ANN model, for all three algorithms.

Fig. 5. Comparison of the proposed results with the reference exact solution for problem 2: (a) ANN model of type 1 for GA–IPT; (b) ANN model of type 2 for GA–IPT; (c) ANN models optimized with GAs; (d) ANN models optimized with IPT; (e) ANN models optimized with GA–IPT. In panels (a) and (b), the solutions are plotted against the inputs, while panels (c), (d), and (e) show the corresponding absolute errors, with solid lines for the type 1 ANN model and dashed lines for the type 2 ANN model, for all three algorithms.

To obtain the unknown parameters of fitness functions (37) and (38), GA, IPT, and the hybrid approach GA–IPT are applied. The approximate solutions obtained with one set of weights by GA–IPT for k = 10, with fitness values of the order $10^{-07}$ and $10^{-06}$, are expressed mathematically as follows:

[Eqs. (39) and (40): ten-neuron expressions for $\tilde{f}(t)$ and $\hat{f}(t)$, with weights quoted to four decimal places]

The solutions presented in Eqs. (39) and (40) are provided in extended form in the Appendix in Eqs. (54) and (55), respectively.
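The global-then-local idea behind the hybrid optimization of fitness function (38) can be illustrated with the following sketch. It is a simplified stand-in, not the authors' implementation: a small real-coded GA supplies the starting point, and a derivative-free pattern search replaces the interior-point step purely to keep the example dependency-free. The log-sigmoid network form, the choice of k = 3 neurons, all hyperparameters, and the names `model`, `fitness_so`, `ga`, and `pattern_search` are assumptions.

```python
import math
import random

def logsig(x):
    """Numerically safe log-sigmoid 1 / (1 + e^{-x})."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def model(t, p):
    """Return (f, f', f'') of a k-neuron log-sigmoid network at t.
    p is a flat list [alpha_1..k, w_1..k, beta_1..k]."""
    k = len(p) // 3
    f = d1 = d2 = 0.0
    for i in range(k):
        a, w, b = p[i], p[k + i], p[2 * k + i]
        s = logsig(w * t + b)
        f += a * s
        d1 += a * w * s * (1.0 - s)
        d2 += a * w * w * s * (1.0 - s) * (1.0 - 2.0 * s)
    return f, d1, d2

def fitness_so(p, n=10, h=0.1):
    """Eq. (38)-style error for f'' = (3/4) f(t) + f(t/2) - t^2 + 2,
    with penalty (1/2) * (f(0)^2 + f'(0)^2) for the initial conditions."""
    total = 0.0
    for m in range(1, n + 1):
        t = m * h
        f, _, d2 = model(t, p)
        f_half = model(t / 2.0, p)[0]
        r = d2 - 0.75 * f - f_half + t * t - 2.0
        total += r * r
    f0, d0, _ = model(0.0, p)
    return total / n + 0.5 * (f0 * f0 + d0 * d0)

def ga(fit, dim, rng, pop_size=30, gens=40, sigma=0.3):
    """Small real-coded GA: elitism, tournament selection, blend crossover,
    and Gaussian mutation (all settings are illustrative)."""
    pop = [[rng.uniform(-2.0, 2.0) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(gens):
        pop.sort(key=fit)
        nxt = pop[:2]  # keep the two best individuals unchanged
        while len(nxt) < pop_size:
            a = min(rng.sample(pop, 3), key=fit)
            b = min(rng.sample(pop, 3), key=fit)
            u = rng.random()
            child = [u * x + (1.0 - u) * y for x, y in zip(a, b)]
            child = [g + rng.gauss(0.0, sigma) if rng.random() < 0.2 else g
                     for g in child]
            nxt.append(child)
        pop = nxt
    return min(pop, key=fit)

def pattern_search(fit, x, step=0.1, tol=1e-6, max_iter=500):
    """Derivative-free local refinement (stand-in for the interior-point step).
    Only improving moves are accepted, so the result is never worse than x."""
    x = list(x)
    best = fit(x)
    it = 0
    while step > tol and it < max_iter:
        improved = False
        for i in range(len(x)):
            for d in (step, -step):
                trial = x[:]
                trial[i] += d
                v = fit(trial)
                if v < best:
                    x, best, improved = trial, v, True
                    break
        if not improved:
            step *= 0.5  # shrink the stencil when no coordinate move helps
        it += 1
    return x, best

rng = random.Random(0)                      # fixed seed for repeatability
p_ga = ga(fitness_so, dim=9, rng=rng)       # k = 3 neurons -> 9 parameters
p_hyb, v_hyb = pattern_search(fitness_so, p_ga)
print(fitness_so(p_ga), v_hyb)              # GA-only vs. refined fitness
```

Because the local step starts from the GA result and accepts only improvements, the refined fitness can never exceed the GA-only fitness, which mirrors the role the text assigns to the local search: rapid convergence from a good global starting point.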

The proposed solutions are determined with the weights trained by GA–IPT for k = 10, 20, and 30 neurons, and the results, along with the exact solutions, are given in Table 7 for inputs t ∈ [0, 1] with a step size of 0.1. The results based on AEs are shown graphically in Fig. 5 for both ANN models. It can be seen that the proposed solutions overlap the exact solutions. The results in Fig. 5(c) show that for k = 10, 15, and 20 the values of AE for GA are around $10^{-04}$, $10^{-05}$ to $10^{-06}$, and $10^{-06}$ to $10^{-07}$, respectively, when optimizing (37), while optimizing (38) gives values of AE of $10^{-03}$ to $10^{-04}$, $10^{-03}$, and $10^{-03}$ for k = 10, 15, and 20, respectively. Further, one can deduce from Fig. 5(d) that for k = 10, 15, and 20 the values of AE for IPT lie between $10^{-06}$ and $10^{-07}$ for the first kind of model (37), while for the other model (38) the values of AE are also around $10^{-06}$ to $10^{-07}$ for k = 10, 15, and 20. Accordingly, the results of GA–IPT plotted in Fig. 5(e) show that for k = 10, 15, and 20 the values of AE lie in the ranges $10^{-04}$ to $10^{-05}$, $10^{-05}$ to $10^{-06}$, and around $10^{-07}$ to $10^{-08}$, respectively, for the first type of ANN modeling (37), while for the second type of ANN modeling (38) the values of AE are $10^{-04}$ to $10^{-08}$, $10^{-04}$ to $10^{-09}$, and $10^{-04}$ to $10^{-09}$ for k = 10, 15, and 20, respectively.

5.3 Problem III: Third-Order P-FDE

In this case, we consider a relatively difficult IVP of P-FDE, which is based on the third-order ODE [35, 36]:

$$\frac{d^3 f}{dt^3} = -1 + 2 f^2(g(t)), \qquad f(0) = 0, \quad \frac{df}{dt}(0) = 1, \quad \frac{d^2 f}{dt^2}(0) = 0, \quad g(t) = t/2. \tag{41}$$

Equation (41) is derived from Eq. (3) for $g(t) = t/2$, and the function $z$ is given as

$$z(f, f \circ g, t) = -1 + 2 f^2(g(t)). \tag{42}$$

The exact solution of problem (41) is given by

$$f(t) = \sin t. \tag{43}$$

The proposed design methodology is applied to find the approximate solution of this IVP as well; the merit (fitness) functions for this equation are constructed using N = 10 and step size h = 0.1 as

$$\tilde{\varepsilon}_{TO} = \frac{1}{10}\sum_{m=1}^{10}\left(\frac{d^3\tilde{f}_m}{dt^3} + 1 - 2\tilde{f}^2(t_m/2)\right)^2, \tag{44}$$

$$\hat{\varepsilon}_{TO} = \frac{1}{10}\sum_{m=1}^{10}\left(\frac{d^3\hat{f}_m}{dt^3} + 1 - 2\hat{f}^2(t_m/2)\right)^2 + \frac{1}{3}\left(\left(\hat{f}_0\right)^2 + \left(\frac{d\hat{f}_0}{dt} - 1\right)^2 + \left(\frac{d^2\hat{f}_0}{dt^2}\right)^2\right). \tag{45}$$

To obtain the weights that optimize the fitness functions given in Eqs. (44) and (45), the strength of GA, IPT, and the hybrid approach GA–IPT is exploited, and the solutions for one set of weights learned by GA–IPT for k = 10 for both ANN models are written as

[Eqs. (46) and (47): ten-neuron expressions for $\tilde{f}(t)$ and $\hat{f}(t)$, with weights quoted to four decimal places]

The solutions presented in Eqs. (46) and (47) are provided in extended form in the Appendix in Eqs. (56) and (57), respectively. The approximate solutions are found with the adapted weights of GA–IPT for different numbers of neurons, i.e., k = 10, 20, and 30, and the results are given in Table 8 for inputs t ∈ [0, 1] with a step size of 0.1. The exact solutions are also shown in the same table. The estimated solutions are also calculated with the weights given by GA, IPT, and GA–IPT, and the results based on AE are shown graphically in Fig. 6. The results presented in the table show a close match between the proposed and exact solutions.
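As a short consistency check on Eqs. (41)–(43), one can verify by direct substitution that $f(t) = \sin t$ satisfies both the differential equation and the initial conditions, using the half-angle identity $\cos t = 1 - 2\sin^2(t/2)$:

$$
\begin{aligned}
\frac{d^3}{dt^3}\sin t &= -\cos t, \\
-1 + 2 f^2(t/2) &= -1 + 2\sin^2(t/2) = -\left(1 - 2\sin^2(t/2)\right) = -\cos t, \\
f(0) &= \sin 0 = 0, \qquad \frac{df}{dt}(0) = \cos 0 = 1, \qquad \frac{d^2 f}{dt^2}(0) = -\sin 0 = 0.
\end{aligned}
$$

Both sides of Eq. (41) therefore agree for all $t$, so the residual inside the sums of Eqs. (44) and (45) vanishes for the exact solution, as does the initial-condition penalty in Eq. (45).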
From the results of GA–IPT shown in Fig. 6(e), one can infer that for k = 10, 15, and 20 the values of AE lie in the ranges $10^{-05}$ to $10^{-06}$, $10^{-05}$ to $10^{-06}$, and $10^{-05}$ to $10^{-07}$, respectively, for ANN model (44), while for model (45) the values of AE are around $10^{-04}$ to $10^{-06}$, $10^{-04}$ to $10^{-05}$, and $10^{-04}$ to $10^{-06}$ for k = 10, 15, and 20, respectively. Interested readers are referred to [36–38] for further details of the proposed scheme.

Table 7 Comparison of the proposed results by GA–IPT with the exact solution for problem 2

| t | Exact $f(t)$ | $\tilde{f}(t)$, k = 30 | $\tilde{f}(t)$, k = 45 | $\tilde{f}(t)$, k = 60 | $\hat{f}(t)$, k = 30 | $\hat{f}(t)$, k = 45 | $\hat{f}(t)$, k = 60 |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | -3.46E-08 | -7.55E-10 | -1.56E-09 |
| 0.1 | 0.01 | 0.01 | 0.01 | 0.01 | 0.009995 | 0.009996 | 0.010005 |
| 0.2 | 0.04 | 0.040001 | 0.039999 | 0.039999 | 0.039989 | 0.03999 | 0.040012 |
| 0.3 | 0.09 | 0.090001 | 0.089999 | 0.089999 | 0.089984 | 0.089984 | 0.090019 |
| 0.4 | 0.16 | 0.160001 | 0.159998 | 0.159999 | 0.159977 | 0.159978 | 0.160027 |
| 0.5 | 0.25 | 0.250002 | 0.249998 | 0.249998 | 0.24997 | 0.249971 | 0.250034 |
| 0.6 | 0.36 | 0.360002 | 0.359997 | 0.359998 | 0.359963 | 0.359965 | 0.360042 |
| 0.7 | 0.49 | 0.490002 | 0.489997 | 0.489998 | 0.489957 | 0.489958 | 0.490051 |
| 0.8 | 0.64 | 0.640003 | 0.639996 | 0.639997 | 0.639949 | 0.63995 | 0.64006 |
| 0.9 | 0.81 | 0.810003 | 0.809996 | 0.809997 | 0.80994 | 0.809942 | 0.810069 |
| 1 | 1 | 1.000004 | 0.999995 | 0.999996 | 0.999931 | 0.999933 | 1.00008 |

Fig. 6. Comparison of the proposed results with the reference exact solution for problem 3: (a) ANN model of type 1 for GA–IPT; (b) ANN model of type 2 for GA–IPT; (c) ANN models optimized with GAs; (d) ANN models optimized with IPT; (e) ANN models optimized with GA–IPT. In panels (a) and (b), the solutions are plotted against the inputs, while panels (c), (d), and (e) show the corresponding absolute errors, with solid lines for the type 1 ANN model and dashed lines for the type 2 ANN model, for all three algorithms.

Table 8 Comparison of the proposed results by GA–IPT with the exact solution for problem 3

| t | Exact $f(t)$ | $\tilde{f}(t)$, k = 30 | $\tilde{f}(t)$, k = 45 | $\tilde{f}(t)$, k = 60 | $\hat{f}(t)$, k = 30 | $\hat{f}(t)$, k = 45 | $\hat{f}(t)$, k = 60 |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 4.88E-09 | 1.89E-08 | 1.38E-07 |
| 0.1 | 0.099833 | 0.099833 | 0.099833 | 0.099833 | 0.099833 | 0.099834 | 0.099833 |
| 0.2 | 0.198669 | 0.198669 | 0.19867 | 0.198669 | 0.198669 | 0.19867 | 0.198666 |
| 0.3 | 0.29552 | 0.29552 | 0.295521 | 0.295519 | 0.29552 | 0.295521 | 0.295511 |
| 0.4 | 0.389418 | 0.389418 | 0.38942 | 0.389417 | 0.389418 | 0.38942 | 0.389401 |
| 0.5 | 0.479426 | 0.479425 | 0.479429 | 0.479423 | 0.479425 | 0.479428 | 0.479397 |
| 0.6 | 0.564642 | 0.564642 | 0.564647 | 0.564639 | 0.564642 | 0.564646 | 0.564601 |
| 0.7 | 0.644218 | 0.644218 | 0.644224 | 0.644212 | 0.644218 | 0.644223 | 0.644161 |
| 0.8 | 0.717356 | 0.717356 | 0.717364 | 0.717349 | 0.717356 | 0.717363 | 0.717281 |
| 0.9 | 0.783327 | 0.783327 | 0.783337 | 0.783318 | 0.783327 | 0.783336 | 0.783231 |
| 1 | 0.841471 | 0.841471 | 0.841483 | 0.84146 | 0.841471 | 0.841482 | 0.841352 |

6 Conclusions with future research directions

The following conclusions are drawn on the basis of the numerical experimentation performed in this study. A new heuristic computational intelligence technique is developed for effectively solving initial value problems of functional differential equations of pantograph type by exploiting the strength of ANN modeling, GA, IPT, and their hybrid approach GA–IPT. A comparison of the proposed approximate solutions with the existing exact solutions shows that, for all three problems, the results of the hybrid GA–IPT approach are far more accurate than the results obtained through GA or IPT alone. The values of AE for problem 1 by GA–IPT lie in the ranges $10^{-03}$ to $10^{-07}$, $10^{-05}$ to $10^{-07}$, and $10^{-04}$ to $10^{-10}$ for examples 1, 2, and 3, respectively, while for problems 2 and 3 the values of AE lie in the ranges $10^{-04}$ to $10^{-08}$ and $10^{-04}$ to $10^{-06}$, respectively.
The behavior of the proposed scheme for neural network models with different numbers of neurons shows a slight gain in the precision of the results as the number of neurons increases; however, no drastic change in accuracy is observed. On the other hand, the computation time increases exponentially with the number of neurons in the model. Besides the consistent accuracy and convergence of the proposed scheme, other valuable advantages are the simplicity of the concept, ease of implementation, provision of results on the entire continuous grid of inputs, and easy extension of the methodology to different applications. All these features establish the intrinsic worth of the scheme as a good alternative, accurate, reliable, and robust computing platform for stiff nonlinear systems such as the pantograph.

On the basis of the presented study, the following research directions are suggested for those interested in this domain:

Improvement of the results is possible by investigating ANN modeling, either by introducing new transfer functions or by changing the type of neural network. Activation functions such as the radial basis function and tan-sigmoid, and modern models based on Mexican hat wavelet neural networks, should be tried in this regard.

One can incorporate modern optimization algorithms and their hybrid combinations with efficient local search methods to obtain the design parameters of neural network models with better capabilities to improve the results. In this regard, fractional variants of the PSO algorithm, chaos optimization algorithms, evolutionary strategies, genetic programming, differential evolution, etc. can be good alternatives.

The availability of better hardware platforms and modern software packages can also play a role in exploring the capability of the algorithm in a much wider search space, easily and efficiently.
References

Agarwal R. P., Chow Y. M., 1986. Finite difference methods for boundary-value problems of differential equations with deviating arguments. Comput. Math. Appl., 12(11):1143-1153.
Arqub O. A., Zaer A-H., 2014. Numerical solution of systems of second-order boundary value problems using continuous genetic algorithm. Information Sciences, 279:396-415.
Azbelev N. V., Maksimov V. P., Rakhmatullina L. F., 2007. Introduction to the Theory of Functional Differential Equations: Methods and Applications. Hindawi Publishing Corporation, New York.
Barro G., So O., Ntaganda J. M., Mampassi B., Some B., 2008. A numerical method for some nonlinear differential equation models in biology. Applied Mathematics and Computation, 200(1):28-33.
Chakraverty S., Mall S., 2014. Regression-based weight generation algorithm in neural network for solution of initial and boundary value problems. Neural Computing and Applications, 25:585-594.
Dehghan M., Salehi R., 2010a. Solution of a nonlinear time-delay model in biology via semi-analytical approaches. Computer Physics Communications, 181:1255-1265.
Dehghan M., Nourian M., Menhaj M., 2009b. Numerical solution of Helmholtz equation by the modified Hopfield finite difference techniques. Numerical Methods for Partial Differential Equations, 25:637-656.
Dehghan M., Shakeri F., 2008c. The use of the decomposition procedure of Adomian for solving a delay differential equation arising in electrodynamics. Physica Scripta, 78:065004.
Derfel G., Iserles A., 1997. The pantograph equation in the complex plane. J. Mathematical Analysis Applications, 213(1):117-132.
Evans D. J., Raslan K. R., 2005. The Adomian decomposition method for solving delay differential equation. International Journal of Computer Mathematics, 82(1):49-54.
Holland J. H., 1975. Adaptation in Natural and Artificial Systems. The University of Michigan Press, Ann Arbor, MI.
Iserles A., 1993. On the generalized pantograph functional-differential equation. European J. Appl. Math., 4:1-38.
Khan J. A., Raja M. A. Z., Qureshi I. M., 2011a. Novel approach for van der Pol oscillator on the continuous time domain. Chin. Phys. Lett., 28:110205. [DOI: 10.1088/0256-307X/28/11/110205].
Khan J. A., Raja M. A. Z., Syam M. A., Tanoli S. A. K., Awan S. E., 2015b. Design and application of nature inspired computing approach for non-linear stiff oscillatory problems. Neural Computing and Applications, in press. [DOI: 10.1007/s00521-015-1841-z].
Mall S., Chakraverty S., 2014a. Chebyshev neural network based model for solving Lane–Emden type equations. Applied Mathematics and Computation, 247:100-114.
Mall S., Chakraverty S., 2014b. Numerical solution of nonlinear singular initial value problems of Emden-Fowler type using Chebyshev neural network method. Neurocomputing, 149(B):975-982.
Mall S., Chakraverty S., 2013c. Comparison of artificial neural network architecture in solving ordinary differential equations. Advances in Artificial Neural Systems, 2013:1:12.
McFall K. S., 2013. Automated design parameter selection for neural networks solving coupled partial differential equations with discontinuities. Journal of the Franklin Institute, 350(2):300-317.
Ockendon J. R., Tayler A. B., 1971. The dynamics of a current collection system for an electric locomotive. Proc. Roy. Soc. London A, 322:447-468.
Pandit S., Kumar M., 2014. Haar wavelet approach for numerical solution of two parameters singularly perturbed boundary value problems. Appl. Math, 8(6):2965-2974.
Peng Y., Jun W., Wei W., 2014. Model predictive control of servo motor driven constant pump hydraulic system in injection molding process based on neurodynamic optimization. Journal of Zhejiang University SCIENCE C, 15(2):139-146.
Potra F. A., Wright S. J., 2000. Interior-point methods. Journal of Computational and Applied Mathematics, 124(1):281-302.
Raja M. A. Z., 2014a. Stochastic numerical techniques for solving Troesch's problem. Information Sciences, 279:860-873. [DOI: 10.1016/j.ins.2014.04.036].
Raja M. A. Z., 2014b. Unsupervised neural networks for solving Troesch's problem. Chin. Phys. B, 23(1):018903.
Raja M. A. Z., Ahmad S. I., Samar R., 2013c. Neural network optimized with evolutionary computing technique for solving the 2-dimensional Bratu problem. Neural Computing and Applications, 23(7-8):2199-2210. [DOI: 10.1007/s00521-012-1170-4].
Raja M. A. Z., Samar R., Rashidi M. M., 2014d. Application of three unsupervised neural network models to singular nonlinear BVP of transformed 2D Bratu equation. Neural Computing and Applications, 25:1585-1601. [DOI: 10.1007/s00521-014-1641-x].
Raja M. A. Z., Ahmad S. I., Samar R., 2014e. Solution of the 2-dimensional Bratu problem using neural network, swarm intelligence and sequential quadratic programming. Neural Computing and Applications, 25:1723-1739. [DOI: 10.1007/s00521-014-1664-3].
Raja M. A. Z., Khan J. A., Shah S. M., Behloul D., Samar R., 2015f. Comparison of three unsupervised neural network models for first Painlevé transcendent. Neural Computing and Applications, 26(5):1055-1071. [DOI: 10.1007/s00521-014-1774-y].
Raja M. A. Z., Khan J. A., Ahmad S. I., Qureshi I. M., 2012g. Solution of the Painlevé equation-I using neural network optimized with swarm intelligence. Computational Intelligence and Neuroscience, ID 721867, 2012:1-10.
Raja M. A. Z., Khan J. A., Behloul D., Haroon T., Siddiqui A. M., Samar R., 2015h. Exactly satisfying initial conditions neural network models for numerical treatment of first Painlevé equation. Applied Soft Computing, 26:244-256. [DOI: 10.1016/j.asoc.2014.10.009].
Raja M. A. Z., Ahmad S. I., 2014i. Numerical treatment for solving one-dimensional Bratu problem using neural networks. Neural Computing and Applications, 24(3-4):549-561. [DOI: 10.1007/s00521-012-1261-2].
Raja M. A. Z., 2014j. Solution of the one-dimensional Bratu equation arising in the fuel ignition model using ANN optimised with PSO and SQP. Connection Science, 26(3):195-214. [DOI: 10.1080/09540091.2014.907555].
Raja M. A. Z., Sabir Z., Mahmood, Alaidarous E. S., Khan J. A., 2015k. Stochastic solvers based on variants of genetic algorithms for solving nonlinear equations. Neural Computing and Applications, 26(1):1-23. [DOI: 10.1007/s00521-014-1676-z].
Raja M. A. Z., Samar R., 2014l. Numerical treatment for nonlinear MHD Jeffery-Hamel problem using neural networks optimized with interior point algorithm. Neurocomputing, 124:178-193. [DOI: 10.1016/j.neucom.2013.07.013].
Raja M. A. Z., Samar R., 2014m. Numerical treatment of nonlinear MHD Jeffery-Hamel problems using stochastic algorithms. Computers and Fluids, 91:28-46.
Raja M. A. Z., 2014n. Numerical treatment for boundary value problems of pantograph functional differential equation using computational intelligence algorithms. Applied Soft Computing, 24:806-821. [DOI: 10.1016/j.asoc.2014.08.055].
Raja M. A. Z., Khan J. A., Qureshi I. M., 2010o. Evolutionary computational intelligence in solving the fractional differential equations. Lecture Notes in Computer Science, 5990(1):231-240, Springer-Verlag, ACIIDS, Hue City, Vietnam.
Raja M. A. Z., Khan J. A., Qureshi I. M., 2010p. Heuristic computational approach using swarm intelligence in solving fractional differential equations. GECCO (Companion) 2010:2023-2026.
Raja M. A. Z., Khan J. A., Qureshi I. M., 2010q. A new stochastic approach for solution of Riccati differential equation of fractional order. Ann. Math. Artif. Intell., 60(3-4):229-250.
Raja M. A. Z., Manzar M. A., Samar R., 2015r. An efficient computational intelligence approach for solving fractional order Riccati equations using ANN and SQP. Applied Mathematical Modelling, 39:3075-3093. [DOI: 10.1016/j.apm.2014.11.024].
Raja M. A. Z., Khan J. A., Qureshi I. M., 2011s. Solution of fractional order system of Bagley-Torvik equation using evolutionary computational intelligence. Mathematical Problems in Engineering, 2011:1-18, Article ID 765075.
Raja M. A. Z., Khan J. A., Qureshi I. M., 2011t. Swarm intelligence optimized neural network for solving fractional order systems of Bagley-Torvik equation. Engineering Intelligent Systems, 19(1):41-51.
Raja M. A. Z., Khan J. A., Haroon T., 2015u. Numerical treatment for thin film flow of third grade fluid using unsupervised neural networks. Journal of the Taiwan Institute of Chemical Engineers, 48:26-39. [DOI: 10.1016/j.jtice.2014.10.018].
Saadatmandi A., Dehghan M., 2009. Variational iteration method for solving a generalized pantograph equation. Computers & Mathematics with Applications, 58(11):2190-2196.
Sedaghat S., Ordokhani Y., Dehghan M., 2012. Numerical solution of the delay differential equations of pantograph type via Chebyshev polynomials. Communications in Nonlinear Science and Numerical Simulation, 17(12):4815-4830.
Shakeri F., Dehghan M., 2010. Application of the decomposition method of Adomian for solving the pantograph equation of order m. Z. Naturforsch. A, 65(a):453-460.
Shakourifar M., Dehghan M., 2008. On the numerical solution of nonlinear systems of Volterra integro-differential equations with delay arguments. Computing, 82:241-260.
Srinivasan S., Saghir M. Z., 2014. Predicting thermodiffusion in an arbitrary binary liquid hydrocarbon mixtures using artificial neural networks. Neural Computing and Applications, 25(05):1193-1203.
Tang L., Ying G., Liu Y.-J., 2014. Adaptive near optimal neural control for a class of discrete-time chaotic system. Neural Computing and Applications, 25(05):1111-1117.
Tohidi E., Bhrawy A. H., Erfani K. A., 2013. Collocation method based on Bernoulli operational matrix for numerical solution of generalized pantograph equation. Appl. Math. Model., 37:4283-4294.
Troiano L., Cosimo B., 2014. Genetic algorithms supporting generative design of user interfaces: Examples. Information Sciences, 259:433-451.
Uysal A., Raif B., 2013. Real-time condition monitoring and fault diagnosis in switched reluctance motors with Kohonen neural network. Journal of Zhejiang University SCIENCE C, 14(12):941-952.
Wright S. J., 1997. Primal-Dual Interior-Point Methods. Vol. 54. SIAM.
Xu D. Y., Yang S. L., Liu R. P., 2013. A mixture of HMM, GA, and Elman network for load prediction in cloud-oriented data centers. Journal of Zhejiang University SCIENCE C, 14(11):845-858.
Yusufoğlu E., 2010. An efficient algorithm for solving generalized pantograph equations with linear functional argument. Applied Mathematics and Computation, 217(7):3591-3595.
Yüzbaşı Ş., Mehmet S., 2013. An exponential approximation for solutions of generalized pantograph-delay differential equations. Applied Mathematical Modelling, 37(22):9160-9173.
Yuzbası S., Sahin N., Sezer M., 2011. A Bessel collocation method for numerical solution of generalized pantograph equations. Numer. Method Partial Diff. Eq., 28(4):1105-1123.
Zahoor R. M. A., Khan J. A., Qureshi I. M., 2009. Evolutionary computation technique for solution of Riccati differential equation of arbitrary order. World Academy Science Engineering Technology, 58:303-309.
Zhang H., Wang Z., Liu D., 2008. Global asymptotic stability of recurrent neural networks with multiple time-varying delays. IEEE Trans. Neural Networks, 19(5).
Zhang Y. T., Liu C. Y., Wei S. S., Wei C. Z., Liu F. F., 2014. ECG quality assessment based on a kernel support vector machine and genetic algorithm with a feature matrix. Journal of Zhejiang University SCIENCE C, 15(7):564-573.
Zoveidavianpoor M., 2014. A comparative study of artificial neural network and adaptive neurofuzzy inference system for prediction of compressional wave velocity. Neural Computing and Applications, 509:379-386.
d e t i d Appendix The proposed solution determined by GA–IPT for examples 1, 2, and 3 of problem 1 are given in Eqs. (48), (50), (52) for type 1 ANN model, respectively, while in case of type 2 ANN model, these solutions are given in Eqs. (49), (51), and (53), respectively. In all these solutions, the values of weights are given up to 15 decimal places to reproduce the results without rounding-off error. Similarly, the proposed solution obtained by GA–IPT for type 1 and 2 of ANN model are given in Eqs. (54) and (55), respectively, in case of problem 2, while the respective derived solutions in case of problem 3 are given in Eqs. (56) and (57). u e n Raja et al. / Front Inform Technol Electron Eng in press 29 0.08047048725693 0.31432056627273 0.18940183413017 0.15868695656243t 1.33478313793967 0.41293246659441t 1.01022962552465 0.93331578125332t 0.43039738795550 1 e 1 e 1 e 0.33855749600711 0.18674538959152 0.20816472295073 0.00242933381986t 0.26540787244610 1 e 0.72809115882354t 0.48270544940064 1 e 0.08068614516604t 0.15753101096643 f t et t 2 1 e , 0.28995022570587 0.01078994692573 0.08019342423114 (48) 1 e 0.74258349638942t 0.32968989770064 1 e 0.06548603292043t 0.28711817842405 1 e 0.90540925204271t 0.44872098525044 0.30104605312484 0.19439724515215t 0.14442038812894 1 e 0.08047048725693 0.31432056627273 0.18940183413017 0.15868695656243t 1.33478313793967 0.41293246659441t 1.01022962552465 0.93331578125332t 0.43039738795550 1 e 1 e 1 e 0.33855749600711 0.18674538959152 0.20816472295073 1 e 0.00242933381986t 0.26540787244610 1 e 0.72809115882354t 0.48270544940064 1 e 0.08068614516604t 0.15753101096643 fˆ t (49) 0.28995022570587 0.01078994692573 0.08019342423114 1 e 0.74258349638942t 0.32968989770064 1 e 0.06548603292043t 0.28711817842405 1 e 0.90540925204271t 0.44872098525044 0.30104605312484 0.19439724515215t 0.14442038812894 1 e . 
d e t i d 0.94968636589038 1.34707016173989 1.50376102881720 0.36598661744660t0.91032578393597 1.02414067551136t0.75154331984783 0.70858162424501t1.62400066730724 1e 1e 1e 0.03557070571277 0.47001507033427 0.23305837311508 1.08898877761457t0.20606659426161 0.38441110076635t0.64422797117518 1.37451636535473t0.18473926476503 e e 1 e 1 1 f t sint t2 , (50) 0.15108271818877 1.09149434892607 0.09523920613157 1e 1.48913241335406t0.57657833567507 1e 1.76070453396823t0.92998486321521 1e 0.60362891562988t0.20502695693704 2.03442822797601 0.39108223347625t0.84866377435531 e 1 u e n 0.38881966892190 1.31918961805391 1.22449687272902 1.11418841809915t 2.25563957260796 1.46211656154129t 2.07524253971787 1.62639052922476t 0.31151922035731 1 e 1 e 1 e 1.23108599422796 1.12118246406554 0.95063660319925 1 e 1.03897554016441t 1.25990267464770 1 e1.96689259927169t 1.94532284119518 1 e 0.88822325672342t 3.25929146277014 fˆ t 0.97847216019113 0.00314856657258 0.18309250352326 1 e 0.77652713362583t 0.98914623881902 1 e1.91697295273083t 0.45913654785170 1 e 2.54427699903716t 0.81534618655148 1.24237534404657 1.67139816416238t 0.00940566554469 1 e . (51) 30 Raja et al. 
/ Front Inform Technol Electron Eng in press 1.12541054542090 1.42107085050758 0.72020986751055 0.86414389504577t1.93509834423992 1.19037759895068t0.31596886185575 0.27729469981955t0.12140778691139 1 e 1 e 1 e 1.77141883091385 0.75342250919741 0.53916152945804 1 e 0.98702611183554t1.13020042280804 1 e 0.89355313779096t0.66014546632640 1 e 0.00598133525911t0.06560791801234 t 2 f t e t , 0.83035257991172 1.24877492661986 0.47996223413418 1 e 0.55664874440902t1.63756764034424 1 e 0.02523047344736t1.63378518104399 1 e 1.54025298381168t1.43915532279329 1.69965383251226 0.79583818855866t0.49219676522824 1 e 0.03938932428191 3.61927373249391 0.58675032378341 3.51213575571960t 1.05347679553264 0.03343635415317t 2.45317399445864 0.95181815640963t 0.60427491764310 1 e 1 e 1 e 0.98854688825296 1.73384540758718 2.71769200543045 1 e 2.19111560763314t 1.71099928318430 1 e 0.35286191867645t 0.48001553168288 1 e 0.81035153163577t 4.72308058050530 fˆ t 1.78864066897855 0.10418056767381 0.46575448050756 1 e 0.89251461316538t 0.25143355072444 1 e1.58417815388160t 1.09463805983697 1 e 0.09015603268787t 2.53496052734188 0.19603309379616 1.89237204970674t 1.08023058491683 1 e . 
d e t i d 0.67481402890471 0.54495444340597 0.49041078692904 0.72428677248073t 0.01736399032803 0.40611612147944t 0.36636461914877 0.27729469981955t 0.53859825453361 1 e 1 e 1 e 0.03729188121912 0.23139332702103 0.18614473212929 0.74696359619139t 0.84728654014916 0.72428677248073t 0.04809384917708 0.72428677248073t 1.19414810994135 1 e 1 e 1 e f t t2 t 2 , 0.91023978492493 0.04667127849473 0.98871950708149 1 e 0.67729619869392t0.23891963246400 1 e 0.68880970586025t0.35972581646471 1 e 0.08275019593919t0.22273020736427 1.04368600896385 0.33594060443084t 0.43755975854033 1 e 2.35765022822569 2.01525121308703 1.73918129519222 1.42042570927303t 1.52045677392612 1.03763384998739t 1.21968443114654 2.79488686756248t 3.32690992303054 e e e 1 1 1 3.45312354920132 1.42747529801592 1.58426773576773 1 e1.50797590767581t 0.06061594666766 1 e 0.26162812103018t 1.26506268835496 1 e1.45362735496134t 3.67600007204353 fˆ t 4.95852188476576 0.44094421894441 1.43255775523394 1 e 2.18849377496478t 1.51550227341482 1 e 0.89494454041285t 3.92088053259279 1 e1.87238913995881t 4.19479511492098 0.75231371273461 0.19933372356409t 2.25519223606087 . 1 e u e n (52) (53) (54) (55) Raja et al. 
/ Front Inform Technol Electron Eng in press 31 0.38587328403368 0.61786030104397 0.49502139469426 0.92427819499371t1.00473001962324 0.22375880639950t0.15885857074322 0.36360552145478t0.62318676878473 1 e 1 e 1 e 0.00607301947495 0.63601560586288 0.12111238121108 0.55846114919198t0.00368740172280 0.17454660414062t0.30593508351101 0.72953651865590t 0.62318676878473 1 e 1 e 1 e f t sin(t) t2 , (56) 0.36903144988625 0.13130744773902 0.31314632220313 0.90566658946709t0.92620879355016 0.80406276217057t0.04617396770553 81 0.23117893524621 t 0.174701756685 1 e 1 e 1 e 0.03950515977014 0.94704210714465t0.13814360100838 1 e 0.38587328403368 0.61786030104397 0.49502139469426 0.92427819499371t 1.00473001962324 0.22375880639950t 0.15885857074322 0.36360552145478t 0.62318676878473 1 e 1 e 1 e 0.00607301947495 0.63601560586288 0.12111238121108 1 e 0.72953651865590t0.62318676878473 1 e 0.55846114919198t 0.00368740172280 1 e 0.17454660414062t 0.30593508351101 fˆ t 0.31314632220313 0.36903144988625 0.13130744773902 1 e 0.23117893524621t 0.17470175668581 1 e 0.90566658946709t 0.92620879355016 1 e 0.80406276217057t0.04617396770553 0.03950515977014 0.94704210714465t 0.13814360100838 1 e . u e n d e t i d (57)