A GENERALIZED SURFACE APPEARANCE REPRESENTATION

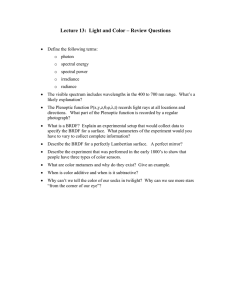
