What happens when the data gets really, really big?


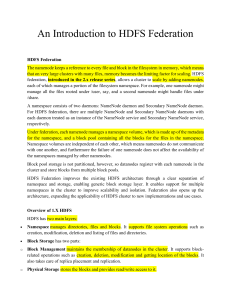

HDFS

I do not want to duplicate what is covered in the cloud computing course. Cloud computing is about computation. Databases is about how data is stored and accessed.

What happens when the data gets really, really big?

Computers have physical limitations:

• RAM
• Processors
• Disk

RAM is getting cheaper, processors are gaining cores, and disks are getting bigger and cheaper. But data is winning the race.

We can put data on supercomputers with millions of cores and exabytes of RAM and disk. But a supercomputer costs much more than the same number of cores, memory and disk space bought at Best Buy.

Computing on supercomputers is called "supercomputing". Supercomputing on Best Buy hardware is called "big data".

So the question is: how do we turn 100 Best Buy computers into a supercomputer?

When data gets really, really big, it does not fit on a single HDD. Or if it did, a single machine's processors could not process it.

History

Data warehousing, OLAP, OLTP -> streaming -> big data

Store only the important columns -> sample rows -> keep everything

HDFS

HDFS is the Hadoop Distributed File System. Its design follows the Google File System, which Google described in a published paper. Much of Hadoop's development took place at Yahoo.

Hadoop

Hadoop was started by Michael Cafarella (Nutch) and Doug Cutting (Lucene), based on Google's papers.

Hadoop includes MapReduce and HDFS:

• MapReduce is the computation layer
  o JobTracker
  o TaskTracker
• HDFS is a filesystem just like ext3 or NTFS, but it is a distributed filesystem that can store exabytes of data on Best Buy machines
  o NameNode
  o DataNode

Each machine runs one or more of these. We will focus on HDFS and on databases that run on HDFS.

As you add more machines, capacity increases (almost) linearly.

JobTracker

Accepts jobs, splits them into tasks, and monitors the tasks (scheduling and rescheduling them as needed).

NameNode

Splits a file into blocks and replicates them. Data never flows through the NameNode; the NameNode only points to where the data blocks are stored.
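To make the NameNode's role concrete, here is a minimal toy sketch; it is not Hadoop's actual code. The class `ToyNameNode`, the round-robin placement, the DataNode names and the 128 MiB block size are all assumptions made for illustration. The point it shows is that the NameNode holds only metadata: which blocks make up a file, and which DataNodes hold each block.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size (128 MiB), chosen for illustration


class ToyNameNode:
    """Hypothetical metadata-only model: stores block locations, never block data."""

    def __init__(self, datanodes, replication=3):
        if replication > len(datanodes):
            raise ValueError("need at least as many DataNodes as replicas")
        self.datanodes = list(datanodes)
        self.replication = replication
        self.block_map = {}  # path -> list of (block_id, [DataNode names])
        self._cursor = 0     # round-robin cursor (a simplification of real placement)

    def add_file(self, path, size_bytes):
        """Split a file into blocks and record where each replica lives."""
        n_blocks = max(1, math.ceil(size_bytes / BLOCK_SIZE))
        blocks = []
        for i in range(n_blocks):
            holders = [
                self.datanodes[(self._cursor + r) % len(self.datanodes)]
                for r in range(self.replication)
            ]
            self._cursor += 1
            blocks.append((f"{path}#blk{i}", holders))
        self.block_map[path] = blocks
        return blocks

    def locate(self, path):
        """Return pointers only; a client would fetch the bytes from the DataNodes."""
        return self.block_map[path]


namenode = ToyNameNode(["dn1", "dn2", "dn3", "dn4"], replication=3)
namenode.add_file("/logs/day1", 300 * 1024 * 1024)  # 300 MiB -> 3 blocks
for block_id, holders in namenode.locate("/logs/day1"):
    print(block_id, holders)
```

In this sketch `locate` returns names, not bytes, which mirrors the statement that data never flows through the NameNode.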
Properties of HDFS

• Reliable: data is held on multiple DataNodes (replication of 2 or 3), and if a DataNode crashes the NameNode reshuffles things.
• Scalable: the same code scales from 1 machine to 100 machines to 10,000 machines.
• Simple: the APIs are really simple.
• Powerful: can process huge data efficiently.

Other projects that we will talk about

Pig: a high-level language that translates data processing into a MapReduce job, much as Java gets compiled into bytecode. Half of the jobs at Yahoo are run with Pig.

Hive: lets you write SQL that gets translated into MapReduce jobs. About 90% of Facebook queries use Hive.

But these are all batch processes: they take a long time to fire up and execute.

HBase: provides a simple API on top of HDFS that allows incremental, real-time data access.

• Can be accessed by Pig, Hive and MapReduce.
• Stores its information in HDFS, so data can scale, is replicated, etc.
• HBase is used in Facebook Messages: each message is an object in an HBase table.

ZooKeeper: provides coordination and stores some HBase metadata.

Others: Mahout (machine learning), Ganglia (monitoring), Sqoop (talks to MySQL), Oozie (workflow management, like cron), Flume (streaming loads into HDFS), and the serialization tools Protobuf (Google), Avro and Thrift.

HDFS

• Supports read, write, rename and append. It does not support random write operations.
• Optimized for streaming reads/writes of large files.
• Bandwidth scales linearly with nodes and disks.
• Built-in redundancy, as discussed above.
• Automatic addition and removal of nodes: one person can support an entire data center.
• Usually one NameNode and many DataNodes on a single rack.
• Files are split into blocks, typically replicated 3 times; replication can be set on a file-by-file basis.
• The NameNode manages directories and maps files to blocks. It checkpoints periodically.
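The cost of splitting files into replicated blocks can be sketched with simple arithmetic. The function name `hdfs_footprint` and the 128 MiB block size are assumptions for illustration. The replication factor is passed per call, mirroring the file-by-file setting mentioned above.

```python
import math

MIB = 1024 * 1024


def hdfs_footprint(size_bytes, replication=3, block_size=128 * MIB):
    """Return (number of blocks, number of block replicas, raw bytes stored).

    Assumes a partial last block occupies only its actual size on disk.
    """
    n_blocks = math.ceil(size_bytes / block_size)
    return n_blocks, n_blocks * replication, size_bytes * replication


# A 1 GiB file with the typical replication of 3
print(hdfs_footprint(1024 * MIB))
# The same file stored with replication 2
print(hdfs_footprint(1024 * MIB, replication=2))
```

Under these assumptions a 1 GiB file becomes 8 blocks, and replication 3 triples the raw disk space it consumes across the cluster.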
Large clusters

Typically:

• 40 nodes to a rack
• Lots of racks to a cluster
• 1 Gb/s between nodes
• 8-100 Gb/s between racks (higher-level switch)

Rack aware

Files are written first to the local disk of the node where the writer runs (MapReduce) and then to rack-local disks.

Replica monitoring makes sure that disk failures or corruptions are fixed immediately by re-replicating the affected replicas. Typically 12 TB can be recovered (re-replicated) in 3-5 minutes. Thus failed disks do not need to be replaced immediately, as they do in RAID systems. Failed nodes do not need to be replaced immediately either.

Failure

Happens frequently (Best Buy hardware), so get used to it.

If you have 3K nodes with a 3-year amortized lifespan, how many nodes die per day? 3 * 365 = approx. 1000 days, and 3000 / 1000 means about 3 nodes will die per day on average (including weekends, fall break, etc.).

Data-centric computing

For the past 50 years, data moved to the computation. Now computation moves to the data; HDFS and MapReduce enable this.
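The failure estimate above rounds 3 * 365 to 1000. Here is a short sketch of the same arithmetic without the rounding. It assumes every node lasts exactly its amortized lifespan and that failures are spread evenly over time; the function name is hypothetical.

```python
def expected_failures_per_day(nodes, lifespan_years):
    """Average node deaths per day, assuming each node lasts exactly its
    lifespan and failures are spread uniformly over time."""
    lifespan_days = lifespan_years * 365
    return nodes / lifespan_days


rate = expected_failures_per_day(3000, 3)
print(f"{rate:.2f} nodes per day")  # 3000 / 1095, prints "2.74 nodes per day"
```

The unrounded figure is about 2.7 nodes per day, consistent with the rule-of-thumb answer of 3.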