

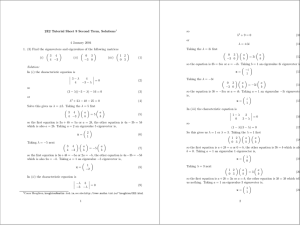

Handout 12 29/08/02

Lecture 10: Numerical solution of characteristic-value problems

A characteristic-value problem

In lecture 9 we covered two methods for solving boundary value problems, that is, ODEs with conditions imposed at different values of the independent variable. Now we will cover the numerical solution of characteristic-value problems. Characteristic-value problems are a subset of boundary value problems: the governing ODE is still solved subject to boundary conditions, but we must also find the characteristic value, or eigenvalue, that governs the behavior of the solution. Consider the boundary value problem

$$\frac{d^2 y}{dx^2} + k^2 y = 0 , \tag{1}$$

with boundary conditions $y(0) = 0$ and $y(1) = 0$. The value of $k$ is not known a priori, and it represents the characteristic value, or eigenvalue, of the problem. This characteristic-value problem has an analytical solution, which is given by

$$y(x) = a \cos(kx) + b \sin(kx) . \tag{2}$$

Substituting in the boundary conditions, we have

$$y(0) = a = 0 , \qquad y(1) = a \cos(k) + b \sin(k) = 0 .$$

Since $a = 0$, the second condition reduces to $b \sin(k) = 0$. The trivial solution is $a = b = 0$, but this is not a very useful result. A more useful result is to set

$$\sin(k) = 0 , \tag{3}$$

which is satisfied when

$$k = \pm n\pi , \qquad n = 1, 2, \ldots . \tag{4}$$

The general solution to the problem is then given by

$$y(x) = b \sin(n\pi x) , \qquad n = 1, 2, \ldots . \tag{5}$$

These solutions are known as the eigenfunctions of the boundary value problem and $k$ is known as the eigenvalue. It is common to write the solution as

$$y_n(x) = b \sin(k_n x) , \qquad k_n = n\pi , \qquad n = 1, 2, \ldots , \tag{6}$$

where $y_n(x)$ is referred to as the $n$th eigenfunction with corresponding eigenvalue $k_n$. In this lecture we will be concerned with solution methods for finding the eigenvalues of the characteristic-value problem.
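
As a quick sanity check of the analytical result, the following minimal Python sketch evaluates the eigenfunctions of equation (6) with $b = 1$ and confirms numerically that they satisfy both boundary conditions and equation (1). The function names `y` and `residual`, the sample point $x = 0.3$, and the step size `h` are illustrative choices, not part of the handout.

```python
import math

def y(n, x, b=1.0):
    """n-th eigenfunction y_n(x) = b sin(n pi x) from equation (6)."""
    return b * math.sin(n * math.pi * x)

def residual(n, x, h=1e-4):
    """Approximate y'' + k^2 y at x, using a central difference for y''."""
    k = n * math.pi
    d2y = (y(n, x - h) - 2.0 * y(n, x) + y(n, x + h)) / h**2
    return d2y + k**2 * y(n, x)

for n in (1, 2, 3):
    # Both boundary values and the residual should be close to zero.
    print(n, y(n, 0.0), y(n, 1.0), residual(n, 0.3))
```

The residual is not exactly zero because the second derivative is itself approximated by a finite difference; it shrinks as `h` is reduced, until round-off error takes over.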

The characteristic-value problem in matrix form

Just as we did in lecture 9, we discretize the characteristic-value problem using the second-order finite difference representation of the second derivative to yield

$$\frac{y_{i-1} - 2y_i + y_{i+1}}{\Delta x^2} + k^2 y_i = 0 , \tag{7}$$

with $y_1 = 0$ and $y_N = 0$ as the boundary conditions. If we discretize the domain with $N = 5$ points, then $\Delta x = 1/(N - 1) = 0.25$, so that $1/\Delta x^2 = 16$, and the equations for $y_i$, $i = 2, \ldots, 4$, are given by

$$\begin{aligned}
16y_1 - 32y_2 + 16y_3 + k^2 y_2 &= 0 , \\
16y_2 - 32y_3 + 16y_4 + k^2 y_3 &= 0 , \\
16y_3 - 32y_4 + 16y_5 + k^2 y_4 &= 0 ,
\end{aligned}$$

but since we know that $y_1 = 0$ and $y_5 = 0$, we have

$$\begin{aligned}
-32y_2 + 16y_3 + k^2 y_2 &= 0 , \\
16y_2 - 32y_3 + 16y_4 + k^2 y_3 &= 0 , \\
16y_3 - 32y_4 + k^2 y_4 &= 0 .
\end{aligned}$$

After multiplying each equation by $-1$, this can be written in matrix-vector form as

$$\begin{bmatrix} 32 & -16 & 0 \\ -16 & 32 & -16 \\ 0 & -16 & 32 \end{bmatrix}
\begin{bmatrix} y_2 \\ y_3 \\ y_4 \end{bmatrix}
- \lambda \begin{bmatrix} y_2 \\ y_3 \\ y_4 \end{bmatrix}
= \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} , \tag{8}$$

where $\lambda = k^2$. If we let

$$A = \begin{bmatrix} 32 & -16 & 0 \\ -16 & 32 & -16 \\ 0 & -16 & 32 \end{bmatrix} , \tag{9}$$

then the problem can be written in the form

$$(A - \lambda I)\,y = 0 , \tag{10}$$

where

$$y = \begin{bmatrix} y_2 \\ y_3 \\ y_4 \end{bmatrix} , \tag{11}$$

and the identity matrix is given by

$$I = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} . \tag{12}$$

Equation (10) is known as an eigenvalue problem, in which we must find the values of $\lambda$ for which a nontrivial solution $y \neq 0$ exists. These values are the eigenvalues $\lambda$ of $A$, which are given by a solution of

$$\det(A - \lambda I) = 0 . \tag{13}$$

The eigenvalues are then given by a solution of the third-order polynomial

$$(32 - \lambda)\left[ (32 - \lambda)^2 - 512 \right] = 0 , \tag{14}$$

whose roots are given by

$$\lambda_1 = 9.37 , \qquad \lambda_2 = 32.00 , \qquad \lambda_3 = 54.63 ,$$

or, in terms of $k = \pm\lambda^{1/2}$,

$$k_1 = \pm 3.06 , \qquad k_2 = \pm 5.66 , \qquad k_3 = \pm 7.39 .$$

These are close to the analytical values of

$$k_1 = \pm\pi = \pm 3.14 , \qquad k_2 = \pm 2\pi = \pm 6.28 , \qquad k_3 = \pm 3\pi = \pm 9.42 ,$$

but because we only discretized the problem with $N = 5$ points, the errors are considerable, and they grow for the higher eigenvalues. The errors can be reduced by using more points, but as $N$ gets very large, solving for the eigenvalues by finding the roots of the characteristic polynomial becomes impractical.
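
The discretization above can be reproduced with a short Python sketch. It builds the finite-difference matrix for a given number of points, checks that the roots of equation (14) make $\det(A - \lambda I)$ vanish, and compares the resulting $k$ with the analytical values $n\pi$. The helper names `fd_matrix`, `shifted`, and `det3` are hypothetical and introduced only for this illustration; the determinant routine is hard-wired to the 3x3 case that arises for $N = 5$.

```python
import math

def fd_matrix(N):
    """Matrix A of equation (9) for N grid points on [0, 1], with y = 0 at both ends."""
    c = 1.0 / (1.0 / (N - 1)) ** 2  # 1 / dx^2
    n = N - 2                       # number of interior unknowns
    return [[2.0 * c if i == j else (-c if abs(i - j) == 1 else 0.0)
             for j in range(n)] for i in range(n)]

def shifted(A, lam):
    """Return A - lam * I."""
    return [[a - (lam if i == j else 0.0) for j, a in enumerate(row)]
            for i, row in enumerate(A)]

def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

A = fd_matrix(5)
# Roots of (32 - lam) * ((32 - lam)^2 - 512) = 0, equation (14).
lams = [32.0 - math.sqrt(512.0), 32.0, 32.0 + math.sqrt(512.0)]
ks = [math.sqrt(lam) for lam in lams]

for n, (lam, k) in enumerate(zip(lams, ks), start=1):
    exact = n * math.pi
    print(n, lam, k, exact, abs(k - exact) / exact, det3(shifted(A, lam)))
```

Printing the relative error for each mode makes the loss of accuracy for the higher eigenvalues explicit.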
Because solving the characteristic polynomial directly becomes impractical for large $N$, we need other, more efficient methods to compute the eigenvalues and eigenvectors of $A$.

The power method

The power method computes the eigenvalue of largest magnitude and its corresponding eigenvector in the following manner:

Pseudocode for the power method

1. Choose a starting vector $x = [1, 1, 1]^T$. Set the starting eigenvalue as $\lambda = 1$.
2. Approximate the eigenvector with $x \leftarrow Ax$. The approximate eigenvalue is $\lambda^* = \max(x)$.
3. Normalize with $x \leftarrow x/\lambda^*$.
4. If $|\lambda^* - \lambda| < \epsilon$, where $\epsilon$ is a chosen tolerance, then finished: $x$ is the eigenvector and $\lambda^*$ is its corresponding eigenvalue. Otherwise set $\lambda = \lambda^*$ and return to step 2.

As an example, consider the matrix

$$A = \begin{bmatrix} 32 & -16 & 0 \\ -16 & 32 & -16 \\ 0 & -16 & 32 \end{bmatrix} . \tag{15}$$

If we start with $x = [1, 1, 1]^T$, then the first iteration will give us

$$x = Ax = \begin{bmatrix} 32 & -16 & 0 \\ -16 & 32 & -16 \\ 0 & -16 & 32 \end{bmatrix}
\begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}
= \begin{bmatrix} 16 \\ 0 \\ 16 \end{bmatrix} , \tag{16}$$

which gives us our first approximate eigenvalue as $\lambda^* = 16$, and normalizes the eigenvector as $x = [1, 0, 1]^T$. Iterating again with this eigenvector, the second guess for the eigenvector is given by

$$x = Ax = \begin{bmatrix} 32 & -16 & 0 \\ -16 & 32 & -16 \\ 0 & -16 & 32 \end{bmatrix}
\begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix}
= \begin{bmatrix} 32 \\ -32 \\ 32 \end{bmatrix} , \tag{17}$$

which gives us our second guess of the eigenvalue as $\lambda^* = 32$, and normalizes the eigenvector as $x = [1, -1, 1]^T$. Subsequent iterations yield the unnormalized vectors

$$x = \begin{bmatrix} 48 \\ -64 \\ 48 \end{bmatrix} , \;
\begin{bmatrix} 53.33 \\ -74.67 \\ 53.33 \end{bmatrix} , \;
\begin{bmatrix} 54.40 \\ -76.80 \\ 54.40 \end{bmatrix} , \; \ldots , \tag{18}$$

and we see that we are converging upon the correct eigenvalue of $\lambda = 54.63$ and the corresponding eigenvector $x = [1, -1.4142, 1]^T$.

We can also find the smallest eigenvalue of $A$ by using the power method with the inverse $A^{-1}$: its largest eigenvalue is the reciprocal of the smallest eigenvalue of $A$.

The advantage of the power method is that it is very simple and easy to program. However, it converges slowly when the two largest eigenvalues are close in magnitude, and it does not behave well for matrices with repeated eigenvalues. There are a host of other methods available to compute the eigenvalues of a matrix, but they are beyond the scope of this course.
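
The power method pseudocode can be sketched in Python as follows. This is a minimal illustration rather than a robust implementation: it scales by `max(x)` exactly as in step 2 of the pseudocode (a general-purpose code would typically scale by the component of largest magnitude), and it assumes that `max(x)` never becomes zero. The names `mat_vec`, `solve`, and `power_method`, the tolerance, and the iteration cap are assumptions made for this example. The inverse iteration applies $A^{-1}$ by solving a linear system with Gaussian elimination instead of forming the inverse explicitly.

```python
def mat_vec(A, x):
    """Return the product A x for a matrix stored as a list of rows."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][j] * z[j] for j in range(r + 1, n))
        z[r] = (M[r][n] - s) / M[r][r]
    return z

def power_method(step, x0, tol=1e-10, max_iter=1000):
    """Iterate x <- step(x), scaling by max(x) as in the pseudocode."""
    x = list(x0)
    lam = 1.0                            # step 1: starting eigenvalue
    for _ in range(max_iter):
        x = step(x)                      # step 2: x <- A x (or A^{-1} x)
        lam_new = max(x)                 # step 2: approximate eigenvalue
        x = [xi / lam_new for xi in x]   # step 3: normalize
        if abs(lam_new - lam) < tol:     # step 4: convergence test
            return lam_new, x
        lam = lam_new
    raise RuntimeError("power method did not converge")

A = [[32.0, -16.0, 0.0], [-16.0, 32.0, -16.0], [0.0, -16.0, 32.0]]

# Largest eigenvalue of A.
lam_max, x_max = power_method(lambda x: mat_vec(A, x), [1.0, 1.0, 1.0])

# Smallest eigenvalue of A: power method on A^{-1}, then invert the result.
mu, x_min = power_method(lambda x: solve(A, x), [1.0, 1.0, 1.0])
lam_min = 1.0 / mu

print(lam_max, x_max)
print(lam_min, x_min)
```

For this matrix the two results should approach the values 54.63 and 9.37 quoted in the handout. Passing the update as a function `step` is a design choice that lets the same loop serve both the direct and the inverse iteration.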

Both Octave and Matlab can be used to compute the eigenvalues of a matrix with

    >> [v,d] = eig(A)

where d is a diagonal matrix containing the eigenvalues of A and v is a matrix whose columns are the eigenvectors of A.