ILMDA: An Intelligent Learning Materials Delivery Agent and Simulation

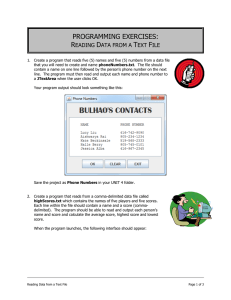



Leen-Kiat Soh, Todd Blank, L. D. Miller, Suzette Person

Department of Computer Science and Engineering

University of Nebraska, Lincoln, NE

{lksoh, tblank, lmille, sperson}@cse.unl.edu

Introduction

Traditional Instruction

Image credits: http://battellemedia.com/archives/old%20book%206.gif, ourworld.compuserve.com/homepages/g_knott/lecturer.gif

Introduction

Intelligent Tutoring Systems

– Interact with students

– Model student behavior

– Decide which materials to deliver

– All ITSs are adaptive, but only some learn

Related Work

Intelligent Tutoring Systems

– PACT, ANDES, AutoTutor, SAM

These lack machine learning capabilities

– They generally do not adapt to new circumstances

– They do not self-evaluate and self-configure their own strategies

– They do not monitor the usage history of content presented to students

Project Framework

Learning material components

– A tutorial

– A set of related examples

– A set of exercise problems

Project Framework

Underlying agent assumptions

– A student’s behavior is a good indicator of how well the student understands the topic in question

– It is possible to determine the extent to which a student understands the topic by presenting different examples

Methodology

ILMDA System

– Graphical user interface front-end

– MySQL database backend

– ILMDA reasoning in between

Methodology

Overall methodology

Methodology

Flow of operations

Under the hood

– Case-based reasoning

– Machine Learning

– Fuzzy Logic Retrieval

– Outcome Function

Learner Model

Student Profiling

– Student background

  – Relatively static

  – First and last name, major, GPA, interests, etc.

– Student activity

  – Real-time behavior and patterns

  – Average number of mouse clicks, time spent in tutorial, number of quits after tutorial, number of successes, etc.
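The two halves of the learner model map naturally onto a small data structure. The sketch below is a minimal Python illustration and does not reproduce ILMDA's actual schema. The class and method names (`StudentBackground`, `StudentActivity`, `StudentProfile`, `record_session`) are hypothetical. Summarizing real-time behavior as running averages and counters is also an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class StudentBackground:
    """Relatively static data (hypothetical field set based on the slide)."""
    first_name: str
    last_name: str
    major: str
    gpa: float
    interests: list[str] = field(default_factory=list)


@dataclass
class StudentActivity:
    """Real-time behavior, summarized as running averages and counters."""
    sessions: int = 0
    avg_clicks: float = 0.0
    avg_tutorial_time_ms: float = 0.0
    quits_after_tutorial: int = 0
    successes: int = 0

    def record_session(self, clicks: int, tutorial_time_ms: float,
                       quit_after_tutorial: bool, success: bool) -> None:
        """Fold one session into the profile using incremental means."""
        self.sessions += 1
        n = self.sessions
        # new_avg = old_avg + (x - old_avg) / n
        self.avg_clicks += (clicks - self.avg_clicks) / n
        self.avg_tutorial_time_ms += (tutorial_time_ms - self.avg_tutorial_time_ms) / n
        self.quits_after_tutorial += int(quit_after_tutorial)
        self.successes += int(success)


@dataclass
class StudentProfile:
    background: StudentBackground
    activity: StudentActivity = field(default_factory=StudentActivity)
```

Keeping the static and dynamic parts in separate records mirrors the slide's split: the background rarely changes, while the activity record is updated after every session.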

Case-based reasoning

Each case contains a problem description and solution parameters

The casebase is maintained separately from the examples and problems

Chooses the example or problem with the most similar solution parameters for the student
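The retrieve-then-select cycle can be sketched as follows. This is a minimal Python illustration under several assumptions. Cases and materials are plain dictionaries of numeric features. Similarity is a range-normalized Manhattan distance, which is a crisp stand-in: the slides mention fuzzy logic retrieval but do not give ILMDA's measure. All names (`Case`, `Material`, `retrieve_case`, `choose_material`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """A casebase entry: a problem description plus solution parameters."""
    description: dict[str, float]   # e.g. features of the student profile
    solution: dict[str, float]      # e.g. {"DiffLevel": 4.0}


@dataclass
class Material:
    """An example or exercise problem, stored apart from the casebase."""
    name: str
    params: dict[str, float]


def distance(a: dict[str, float], b: dict[str, float],
             ranges: dict[str, float]) -> float:
    """Range-normalized Manhattan distance over the keys listed in `ranges`."""
    return sum(abs(a[k] - b[k]) / ranges[k] for k in ranges)


def retrieve_case(casebase: list[Case], new_description: dict[str, float],
                  ranges: dict[str, float]) -> Case:
    """Return the old case whose problem description is closest to the new one."""
    return min(casebase, key=lambda c: distance(c.description, new_description, ranges))


def choose_material(materials: list[Material], solution: dict[str, float],
                    ranges: dict[str, float]) -> Material:
    """Pick the example or problem whose parameters best match the solution."""
    return min(materials, key=lambda m: distance(m.params, solution, ranges))
```

For example, a student with a low GPA and many clicks is matched to the old case built for a similar student. That case's `DiffLevel` then selects the closest example.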

Solution Parameters

Parameter     Description
TimesViewed   The number of times the case has been viewed
DiffLevel     The difficulty level of the case, between 0 and 10
MinUseTime    The shortest time, in milliseconds, a single student has viewed the case
MaxUseTime    The longest time, in milliseconds, a single student has viewed the case
AveUseTime    The average time, in milliseconds, a single student has viewed the case
Bloom         Bloom’s Taxonomy number
AveClick      The average number of clicks the interface has recorded for this case
Length        The number of characters in the course content for this case
Content       The stored list of interests for this case
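The solution-parameter table maps naturally onto a record type. Below is a hedged Python sketch with several assumptions. Field names are snake_case versions of the table's. The Bloom number is taken to run from 1 to 6. The timing and click statistics are assumed to be updated incrementally each time a student views the case. `SolutionParameters` and `record_view` are hypothetical names, not ILMDA's code.

```python
from dataclasses import dataclass, field


@dataclass
class SolutionParameters:
    """Solution parameters of one case (names adapted from the table)."""
    times_viewed: int = 0
    diff_level: float = 5.0        # DiffLevel, 0..10 (default is arbitrary)
    min_use_time_ms: int = 0
    max_use_time_ms: int = 0
    ave_use_time_ms: float = 0.0
    bloom: int = 1                 # Bloom's taxonomy number (assumed 1..6)
    ave_click: float = 0.0
    length: int = 0                # characters of course content
    content: list[str] = field(default_factory=list)  # stored interests

    def __post_init__(self) -> None:
        if not 0 <= self.diff_level <= 10:
            raise ValueError("DiffLevel must lie between 0 and 10")
        if self.times_viewed > 0 and not (
            self.min_use_time_ms <= self.ave_use_time_ms <= self.max_use_time_ms
        ):
            raise ValueError("expected MinUseTime <= AveUseTime <= MaxUseTime")

    def record_view(self, use_time_ms: int, clicks: int) -> None:
        """Fold one student's viewing of the case into the statistics."""
        n = self.times_viewed + 1
        if n == 1:
            self.min_use_time_ms = self.max_use_time_ms = use_time_ms
        else:
            self.min_use_time_ms = min(self.min_use_time_ms, use_time_ms)
            self.max_use_time_ms = max(self.max_use_time_ms, use_time_ms)
        # Incremental means for AveUseTime and AveClick.
        self.ave_use_time_ms += (use_time_ms - self.ave_use_time_ms) / n
        self.ave_click += (clicks - self.ave_click) / n
        self.times_viewed = n
```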

Adaptation Heuristics

Adapt the solution parameters of the old case

– Based on the difference between the problem descriptions of the old and new cases

– Each heuristic is weighted and responsible for one solution parameter

– Heuristics are implemented in a rulebase, which adds flexibility to our design
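One reading of "each heuristic is weighted and responsible for one solution parameter" is a list of rules. Under that reading, each rule proposes a change to a single parameter based on how the new problem description differs from the old one. The Python sketch below assumes this interpretation. `Heuristic`, `adapt`, and the GPA-based example rule are hypothetical and are not taken from ILMDA's rulebase.

```python
from dataclasses import dataclass
from typing import Callable

Description = dict[str, float]
Solution = dict[str, float]


@dataclass
class Heuristic:
    """One rule in the rulebase; it owns a single solution parameter."""
    target: str                                          # parameter it adjusts
    weight: float                                        # how strongly it applies
    rule: Callable[[Description, Description], float]    # proposed change


def adapt(old_solution: Solution, old_desc: Description, new_desc: Description,
          rulebase: list[Heuristic]) -> Solution:
    """Adapt an old case's solution parameters to a new problem description."""
    adapted = dict(old_solution)          # leave the stored case untouched
    for h in rulebase:
        adapted[h.target] += h.weight * h.rule(old_desc, new_desc)
    return adapted


# Hypothetical rule: a student with a higher GPA gets a harder example.
raise_difficulty = Heuristic(
    target="DiffLevel",
    weight=0.5,
    rule=lambda old, new: 2.0 * (new["gpa"] - old["gpa"]),
)
```

Because rules are plain data, new heuristics can be added or reweighted without touching `adapt`. This is one way a rulebase adds flexibility.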

Simulated Annealing

Used when the adaptation process selects an old case that has repeatedly led to unsuccessful outcomes

Rather than removing the old case, SA is used to refresh its solution parameters
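A generic simulated annealing loop for refreshing a failing case's parameters might look like the sketch below. It assumes a caller-supplied `cost` function (lower is better) and per-parameter bounds, neither of which the slides specify. The perturbation size, starting temperature, and geometric cooling rate are illustrative choices, and `anneal` is a hypothetical name.

```python
import math
import random
from typing import Callable, Optional

Params = dict[str, float]


def anneal(params: Params, cost: Callable[[Params], float],
           bounds: dict[str, tuple[float, float]], t0: float = 1.0,
           cooling: float = 0.95, steps: int = 200,
           seed: Optional[int] = None) -> Params:
    """Search for better solution parameters by simulated annealing.

    `cost` is a hypothetical estimate of how poorly a parameter set would
    serve students (lower is better); `bounds` keeps each parameter in range.
    """
    rng = random.Random(seed)
    current, current_cost = dict(params), cost(params)
    best, best_cost = dict(current), current_cost
    t = t0
    for _ in range(steps):
        # Perturb one randomly chosen parameter and clamp it to its bounds.
        key = rng.choice(list(bounds))
        lo, hi = bounds[key]
        candidate = dict(current)
        step = rng.gauss(0.0, 0.1 * (hi - lo))
        candidate[key] = min(hi, max(lo, current[key] + step))
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with prob. exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = dict(current), current_cost
        t *= cooling  # geometric cooling schedule
    return best
```

The function returns the best parameters seen rather than the final state, so a late uphill move cannot leave the case worse than the best point already found.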

Implementation

End-to-end ILMDA

– Applet-based GUI front-end

– CBR-powered agent

– Backend database system

ILMDA simulator

Simulator

Consists of two distinct modules

– Student Generator

  – Creates virtual students

  – Nine different student types based on aptitude and speed

– Outcome Generator

  – Simulates student interactions and outcomes

Student generator

Creates virtual students

– Generates all student background values, such as names, GPAs, and interests

– Generates the activity profile, such as average time spent per session and average number of mouse clicks, using a Gaussian distribution
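The student generator can be sketched as follows, with several assumptions to note. The nine types are taken to be three aptitude levels crossed with three speeds. Every mean, standard deviation, GPA range, and interest list is invented for illustration. GPA is drawn uniformly; only the activity profile uses Gaussian draws, as the slide states. All names are hypothetical.

```python
import itertools
import random
from dataclasses import dataclass

# Assumption: nine types = 3 aptitude levels x 3 speed levels.
APTITUDES = ("low", "average", "high")
SPEEDS = ("slow", "average", "fast")
STUDENT_TYPES = list(itertools.product(APTITUDES, SPEEDS))

# Hypothetical (mean, std dev) values; none of these numbers come from ILMDA.
TIME_BY_SPEED = {"slow": (300_000, 60_000), "average": (180_000, 40_000),
                 "fast": (90_000, 20_000)}          # session time in ms
CLICKS_BY_APTITUDE = {"low": (40, 10), "average": (25, 6), "high": (15, 4)}
GPA_BY_APTITUDE = {"low": (1.5, 2.5), "average": (2.5, 3.3), "high": (3.3, 4.0)}
INTERESTS = ["sports", "music", "games", "science", "art"]


@dataclass
class VirtualStudent:
    name: str
    aptitude: str
    speed: str
    gpa: float
    interests: list[str]
    avg_session_time_ms: float
    avg_clicks: float


def generate_student(idx: int, aptitude: str, speed: str,
                     rng: random.Random) -> VirtualStudent:
    """Create one virtual student of the given type."""
    t_mean, t_sd = TIME_BY_SPEED[speed]
    c_mean, c_sd = CLICKS_BY_APTITUDE[aptitude]
    gpa_lo, gpa_hi = GPA_BY_APTITUDE[aptitude]
    return VirtualStudent(
        name=f"student{idx:04d}",
        aptitude=aptitude,
        speed=speed,
        gpa=round(rng.uniform(gpa_lo, gpa_hi), 2),   # background: uniform draw
        interests=rng.sample(INTERESTS, k=2),
        # Activity profile: Gaussian draws, clipped to stay positive.
        avg_session_time_ms=max(1.0, rng.gauss(t_mean, t_sd)),
        avg_clicks=max(1.0, rng.gauss(c_mean, c_sd)),
    )


def generate_population(per_type: int = 100, seed: int = 0) -> list[VirtualStudent]:
    """Create `per_type` students for each of the nine types."""
    rng = random.Random(seed)
    return [generate_student(i * per_type + j, apt, spd, rng)
            for i, (apt, spd) in enumerate(STUDENT_TYPES)
            for j in range(per_type)]
```

With the default of 100 students per type, `generate_population()` yields a 900-student population.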

Outcome Generator

Simulates student interactions and outcomes

– Determines the time spent and the number of clicks for one learning material

– Also determines whether a virtual student quits the learning material and whether the student answers it successfully
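A toy outcome generator is sketched below. The slides do not say how time, clicks, quitting, or success are decided. This Python sketch therefore assumes a hypothetical aptitude score on the same 0..10 scale as DiffLevel. It uses logistic curves in the gap between material difficulty and aptitude. `APTITUDE_SCORE`, `Interaction`, `simulate_interaction`, and all constants are illustrative.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical aptitude scores on the same 0..10 scale as DiffLevel.
APTITUDE_SCORE = {"low": 3.0, "average": 5.5, "high": 8.0}


@dataclass
class Interaction:
    time_ms: float
    clicks: int
    quit: bool
    success: bool


def simulate_interaction(aptitude: str, avg_time_ms: float, avg_clicks: float,
                         diff_level: float, rng: random.Random) -> Interaction:
    """Simulate one virtual student working through one learning material."""
    gap = diff_level - APTITUDE_SCORE[aptitude]   # > 0 means material is too hard
    # Material harder than the student's aptitude takes longer and needs more clicks.
    scale = 1.0 + 0.1 * max(0.0, gap)
    time_ms = max(1.0, rng.gauss(avg_time_ms * scale, 0.2 * avg_time_ms))
    clicks = max(0, round(rng.gauss(avg_clicks * scale, 0.2 * avg_clicks)))
    # Logistic curves: quitting becomes likelier and success less likely as gap grows.
    quits = rng.random() < 1.0 / (1.0 + math.exp(-(gap - 3.0)))
    success = not quits and rng.random() < 1.0 / (1.0 + math.exp(gap))
    return Interaction(time_ms, clicks, quits, success)
```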

Simulation

900 students, 100 from each type

– Step 1: 1000 iterations with no learning

– Step 2: 100 iterations with learning

– Step 3: 1000 iterations again with no learning
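The three-step protocol amounts to measuring, learning, and measuring again. In the Python sketch below, the `Agent` protocol and its `serve` method are hypothetical. One iteration is assumed to be one tutoring session with a randomly chosen virtual student, since the slides do not define an iteration more precisely.

```python
import random
from typing import Protocol


class Agent(Protocol):
    """Hypothetical interface for the tutoring agent under test."""
    learning: bool

    def serve(self, student: int, rng: random.Random) -> float:
        """Run one session for `student` and return its problem score (0..1)."""
        ...


def run_phase(agent: Agent, students: list[int], iterations: int,
              learning: bool, rng: random.Random) -> float:
    """Run `iterations` sessions and return the average problem score."""
    agent.learning = learning          # switch casebase updates on or off
    scores = [agent.serve(rng.choice(students), rng) for _ in range(iterations)]
    return sum(scores) / len(scores)


def run_experiment(agent: Agent, students: list[int],
                   seed: int = 0) -> tuple[float, float]:
    """Steps 1-3: baseline, learning, then re-measure without learning."""
    rng = random.Random(seed)
    before = run_phase(agent, students, 1000, learning=False, rng=rng)  # Step 1
    run_phase(agent, students, 100, learning=True, rng=rng)             # Step 2
    after = run_phase(agent, students, 1000, learning=False, rng=rng)   # Step 3
    return before, after
```

Comparing `before` with `after` corresponds to the Step 1 versus Step 3 comparison reported in the results.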

Results

– Between Steps 1 and 3, average problem scores increased from 0.407 to 0.568

– Between Steps 1 and 3, the number of examples given increased twofold
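For scale, the two reported average problem scores correspond to the following absolute and relative gains. This is plain arithmetic on the reported figures:

```latex
\Delta = 0.568 - 0.407 = 0.161,
\qquad
\frac{\Delta}{0.407} = \frac{0.161}{0.407} \approx 0.396
```

That is, the Step 3 average is roughly 40% higher than the Step 1 baseline.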

Future Work

Deploy the ILMDA system to the introductory CS core course

– Fall 2004 (done)

– Spring 2005 (done)

– Fall 2005

Add fault determination capability

– Students, agent reasoning, or content at fault

Questions

Responses I

Bloom’s Taxonomy (Cognitive)

– Knowledge: Recall of data.

– Comprehension: Understand the meaning, translation, interpolation, and interpretation of instructions and problems. State a problem in one's own words.

– Application: Use a concept in a new situation or unprompted use of an abstraction. Applies what was learned in the classroom to novel situations in the workplace.

– Analysis: Separates material or concepts into component parts so that its organizational structure may be understood. Distinguishes between facts and inferences.

– Synthesis: Builds a structure or pattern from diverse elements. Put parts together to form a whole, with emphasis on creating a new meaning or structure.

– Evaluation: Make judgments about the value of ideas or materials.

http://www.nwlink.com/~donclark/hrd/bloom.html
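The Bloom solution parameter stores a number, so a mapping from that number to the levels above is convenient. The Python sketch assumes the numbering runs from 1 (Knowledge) to 6 (Evaluation) in the listed order. ILMDA's actual encoding is not given here, and `Bloom` and `bloom_label` are hypothetical names.

```python
from enum import IntEnum


class Bloom(IntEnum):
    """Bloom's cognitive levels, lowest to highest (assumed numbering 1..6)."""
    KNOWLEDGE = 1
    COMPREHENSION = 2
    APPLICATION = 3
    ANALYSIS = 4
    SYNTHESIS = 5
    EVALUATION = 6


def bloom_label(number: int) -> str:
    """Map a stored Bloom number to a readable level name."""
    return Bloom(number).name.capitalize()
```

Using an `IntEnum` keeps the levels ordered, so a comparison such as `Bloom.ANALYSIS > Bloom.APPLICATION` holds directly.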

Responses II

Outcome function (example or problem)

– Ranges from 0 to 1

– Quitting at the tutorial or example results in an outcome of 0

– Otherwise, compare the student's average clicks and time with those for the example or problem
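A minimal outcome function consistent with these three rules is sketched below. The exact comparison is not specified. This Python version therefore assumes equal weights on clicks and time, plus a closeness score of 1 - |x - y| / max(x, y) for each. The function name `outcome` and its parameters are hypothetical.

```python
from typing import Optional


def outcome(quit_stage: Optional[str], student_avg_clicks: float,
            student_avg_time_ms: float, material_ave_click: float,
            material_ave_use_time_ms: float) -> float:
    """Score one example or problem session on a 0..1 scale."""
    # Quitting at the tutorial or example stage scores 0.
    if quit_stage in ("tutorial", "example"):
        return 0.0

    def closeness(x: float, y: float) -> float:
        # 1 when equal, falling toward 0 as the relative difference grows.
        denom = max(x, y)
        return 1.0 if denom == 0 else 1.0 - abs(x - y) / denom

    score = (0.5 * closeness(student_avg_clicks, material_ave_click)
             + 0.5 * closeness(student_avg_time_ms, material_ave_use_time_ms))
    return min(1.0, max(0.0, score))   # keep the result within 0..1
```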