S2.11 Samples of AFI reports from AIMS

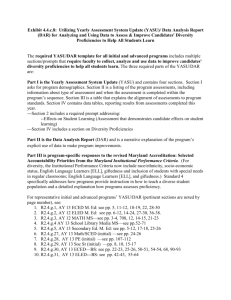

2009 Report

The Unit has developed and implemented an annual, systematic process for collecting, aggregating and analyzing advanced program-specific data (the Yearly Assessment System Update). While each program collects and aggregates its program-specific data (according to its assessment plan), the Data Analysis Report requires each program to respond to common questions regarding the consistent use of data to drive program improvement decisions. Program directors submit these instruments to the NCATE Co-Coordinators. The Unit-level individuals responsible for Assessment and Accreditation meet regularly with individual program directors to confirm the Unit-wide systematic use of data for advanced program improvement decision making. These efforts document the systematic aggregation, analysis and consistent use of data for graduate program improvement.

A number of substantial programmatic improvements have been confirmed this year using the described processes. Two program examples follow: one indicates a data-based improvement, and the other details a data-based decision to seek program improvement.

The Elementary Education M.Ed program made several improvements based on data collected in the 2006-2007 academic year. A midpoint assessment and students' capstone projects both indicated that students needed better descriptions of course projects as they relate to course objectives, and a better understanding of the alignment of course objectives to program goals. As a result of the modifications made in response, ELED M.Ed completers performed better on each of the key assessments. Within the program, completers did a clearly better job of addressing learner diversity (which had previously been defined too narrowly), and indicated an improved perception of competence for all program goals.
Capstone course projects have also identified an increased likelihood of using key projects within an actual school setting, and of applying course-based research to improve classroom student learning.

In the 2008-2009 academic year, the School Library Media concentration of the Instructional Technology MS program made revisions within two courses to better align program goals with AASL standards. In the required instructional development course, student assessments and faculty feedback showed that the final project rubric did not adequately align to AASL standards relating to collaborative planning and instruction. The assessment and course were modified accordingly to align the final project and its rubric with the AASL standards that promote collaborative instruction. The final course in the School Library Media program was previously a three-credit practicum and portfolio development course. The AASL has modified its portfolio process standards to increase program-wide reflection, with specific emphasis on practicum experiences. Candidates completing the portfolio in 2007-2008 found it very difficult to complete the portfolio and practicum simultaneously. Based on this feedback, program faculty determined that the candidates would be best served by a six-credit practicum and portfolio experience.

2010 Report

The Unit continues to refine its annual, systematic process for collecting, aggregating and analyzing advanced program-specific data through a two-part report:

• Part I is the Yearly Assessment System Update (YASU), which requires each program to report the data collected from its required assessments.
• Part II is the Data Analysis Report (DAR), wherein programs account for the success of program initiatives introduced in the prior year.

The YASU and DAR cycle helps to identify areas for program improvement, or to otherwise optimize the program’s course of study.
A generic sequence of the YASU/DAR includes an analysis of raw data (YASU), and a set of prescriptions for activating targeted programming initiatives (DAR). The following year, the YASU data helps reveal the outcomes of the prior year’s changes, and the DAR captures the progress that each program makes toward its programming goals. Program directors submit these instruments to the NCATE Coordinators, the Unit-level individuals responsible for Assessment and Accreditation, who meet regularly with individual program directors to confirm the Unit-wide systematic use of data for advanced program improvement decision-making. These efforts document the systematic aggregation, analysis and consistent use of data for graduate program improvement.

A number of substantial programmatic improvements have been confirmed this year using the described processes. Two program examples follow.

The MS in Reading Education (REED-MS) program completed a series of meetings during the 2008-2009 academic year to address “lower scores” in the required REED 726 course. REED 726 expects students to analyze case studies of PreK-12 students’ reading performances and behaviors. The proficiencies measured in REED 726 are introduced and built upon in three prior courses. REED 726 is thus a culminating activity for a prospective Reading Specialist’s proficiencies in diagnosing and addressing reading difficulties. Past offerings had not been consistent in the manner and assessment of the case study analysis performed by master’s candidates, which led to comments about these inconsistencies and concerns about the differences in instructor expectations for this culminating project. The program assembled its teaching faculty and discussed the various case study approaches in use, ultimately deciding on a small set of consistent approaches for completing the key assignment for REED 726.
The result of this coordination was an immediate increase in the grades earned on the key assignment, and a commensurate reduction in the number of complaints about variances in teacher expectations across sections. As a result of this year’s data analysis, the program’s professional development project in REED 745 has been targeted for improvement during the present academic year.

The M.Ed in Early Childhood Education continues its data-centric decision-making processes. Since the program itself is routinely held up as a “best practice” in the realm of data-based decision making, we highlight here two examples from the program’s detailed annual report.

A small number of courses in the required program of study are also required for students enrolled in other graduate programs in the College of Education. As a result of this sharing of courses among programs, some of the ECED areas of focus were not consistently represented in each section that contained ECED master’s candidates. Among these areas of focus is an emphasis on the diverse roles and settings of Early Childhood professionals beyond the classroom. The program director worked with the course instructors for these “cross-program” requirements to make them aware of these roles for master’s candidates enrolled in the Early Childhood program. Because of these conversations, instructor evaluations in the “cross-program” courses improved, candidate portfolios improved regarding Curriculum Theory and Development projects, and student comments on the Graduate survey noted that all candidates were better informed on these measures.

Another focus area was student understanding of the connections between the NAEYC Code of Ethics for Professionals and a local set of Essential Dispositions for Educators. There was a disconnection in student understanding between these two sets of codes, which became especially evident in early program courses.
The focus of those early courses was on pre-assessing candidate dispositions, and on completing formative assessments of candidate content knowledge. To address these disconnections, the topic of candidate dispositions was added to two early courses, and was reinforced in the portfolio presentation review near the end of the program. These efforts have resulted in a mean score of 3 (Target level) on the three areas of the essential dispositions scale (caring, collaboration and commitment).

2011 Report

As has been reported previously, Unit graduate programs have adopted the YASU/DAR process as a mechanism for collecting, analyzing and reporting program change decisions. This year, we will highlight the Special Education (SPED) program (generally) and the MEd in Special Education (particularly). The MEd has adopted a very systematic process for digesting and responding to the Unit's Annual Data Set. All Unit programs receive their Data Sets in the late summer. Each Data Set is an 8-part summary of data related to program evaluation, student teaching evaluation (INTASC and SPA; by mentors, supervisors and candidates), dispositions, first- and third-year alumni, and employers. These reports are disaggregated by program level and location.

The SPED program completes three data analysis retreats during the academic year (September, January and May). During these retreats, the whole department identifies goals based on the outcomes data summarized in the Data Set. Each specialization group within the department then chooses the goals that it can best address, and uses that information to draw up a specific action plan. During the balance of the academic year, each specialization engages in activities to effect the necessary changes to the program. The SPED program and its MEd are exemplars of the way that the annual assessment system update was supposed to work, and their attention to detail has led to University-wide accolades based on assessment practices.
2012 Report

This year, we will highlight the SPED MEd and the ILPD MS programs, and their use of data-based decision-making principles.

The ILPD MS program is undergoing a transition in ELCC standards, after being Nationally Recognized under the former standards. The most significant finding for the department last year was the need to examine the comprehensive exams and the internship experience. The prior 100% pass rate is now at 76%. This is slightly lower than the 83% pass rate for the State of Maryland, which also declined from its former pass rate of 98%. The faculty recognizes the need to work more strenuously on ELCC Standards 1.1, 1.2, 1.3, and 1.4, and plans to address this in our course and internship revision processes.

The SPED MEd program reports that the 2011-2012 data show improvement in all five of the areas identified last year. It should also be noted that during this same period (2011-12) in the Special Education Department, total Student Credit Hour production increased by 7%, with total program enrollments also increasing. No significant difference in data was evident between courses taught on the TU campus and those taught at the off-site locations, nor was there a difference between data from courses taught by part-time versus full-time faculty. The faculty used a retreat to review the 2011-12 strategic plan, created from the 2010-11 data. Following the retreat, Individual Practice Plans identified actions that would support program and department plans.