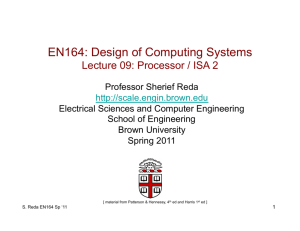

Jie Liu: It's a great pleasure to welcome Sherief...
