23475 >> Dengyong Zhou: Today we have Alekh Agarwal from... our machine learning series. Alekh is a fifth-year Ph.D....

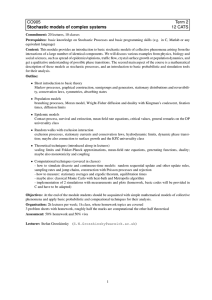

advertisement

23475 >> Dengyong Zhou: Today we have Alekh Agarwal from UC Berkeley to talk at our machine learning series. Alekh is a fifth-year Ph.D. student advised by Peter Bartlett and Martin Wainwright. He has been a recipient of the MSR Fellowship and also a Google Fellowship. He works on machine learning, convex optimization, and statistics. And today he's going to talk about learning with non-i.i.d. data. >> Alekh Agarwal: Thanks for the introduction. Good to be back here, although I think I prefer the summer more. Okay. So, yeah, this is joint work with John Duchi, Mikael Johansson and Mike Jordan, mostly trying to understand when learning algorithms continue to do well even with non-i.i.d. data samples. But before I go into that, I just want to very quickly recap the basic setting that's typically assumed in statistics and in a whole lot of machine learning, where you think of having N data samples, say (X1, Y1) through (XN, YN): X is the data, and Y can be a binary label or a regression value or something. So here I just have a cartoon of, for instance, a binary classification task. The usual underlying assumption is that all these N samples were sampled independently and identically from a certain fixed distribution. What we would do then is train some sort of model that fits the data well, in this case just this linear separator that seems to be classifying my training data. And what a whole lot of theory in statistics focuses on is showing that this model will actually continue to work well even on unseen samples generated from the same distribution. So if I see a red point or another red point, the test points, as long as they're from the same distribution, we continue to do well on them. But the whole story relies very critically on the fact that everything was sampled in an i.i.d. fashion. So what happens when things are not i.i.d.? This is not a new question. People have thought about it. 
And in particular, one very nice framework that takes the other extreme is what's often called online adversarial learning, where we now view the same set of examples, (X1, Y1) through (XN, YN), as coming at us in an online stream. Quite remarkably, we do not assume any statistical structure on the data at all. Of course, that means the performance criterion we use also changes, and in particular what people measure here is a notion of regret with respect to a fixed predictor. So what goes on is that I'm receiving these samples, which I think of as an online data stream, and I train a sequence of models. In particular, the model at time I has been trained on samples up to I minus 1 and is then used to make a prediction on the sample at time I. I measure the loss of that prediction on this new sample point under some loss function, and the loss incurred by the learner is accumulated over time. So that's the first term. The second term says: suppose I took the entire data and trained the best model from my model class on it; how small would that cumulative loss be? The rationale is that the second quantity is exactly what you were computing in the offline setup. And now we are trying to argue how close our online computation is to this offline computation. But while this is an interesting performance measure, it's a bit hard to interpret in terms of what it buys us statistically, or what it says about what's going to happen in the future based on the samples I've seen. A very nice answer to that was provided by Cesa-Bianchi [indiscernible] in '04, who said: I have this class of algorithms that work with arbitrary data samples and give me guarantees on this regret. But now let me ask what happens when the data samples are indeed drawn in an i.i.d.
fashion. What they showed was that any algorithm with small regret will also generalize well to unseen examples drawn from the same distribution. That can be thought of almost as a justification for regret as a performance measure, because it implies good generalization, at least when your data is i.i.d. But again, this connection relied very critically on the samples indeed being drawn in an i.i.d. fashion. And the problem with this whole approach is that, of course, the world is not always i.i.d. There are many, many scenarios where dependence between samples is very naturally built into our sampling procedures. If you've got time series data, then by its very definition there is some temporal structure in your samples that governs their dependence. This arises a lot in financial data, for instance. In a randomly taken snapshot from Google Finance, quite clearly the stock prices at two different instants of time are not i.i.d. with respect to each other. The same goes for more general autoregressive processes, and lots of situations in robotics or reinforcement learning. If you've got a little robot going around the room measuring quantities, its positions at time T and T plus 1 are not going to be independent of each other; they are going to be very heavily dependent. The same situations arise in a lot of other control and dynamical systems, and in lots of scenarios where we do want to do some sort of learning but our samples are non-i.i.d. There are results about this, but the existing theory is nowhere near as comprehensive as what we understand for i.i.d. data processes. Before I go into more details of the work, I want to give a very high-level picture of what our contribution is going to be in this talk. So of course if I want to learn with non-i.i.d.
data and the data has no statistical structure, then talking about generalization to future test samples is a very strange thing to do. So we do need to assume something. What we assume is that the data satisfies certain mixing conditions. What this means is: suppose I have a training sample from today and I look at a training sample far, far out in the future. Then these two would be more or less independent. So there is dependence, but the dependence weakens as you go further out in time: two samples ten days apart are more dependent than two samples 100 days apart. That's the notion of mixing; I'll be more concrete about it later. The second thing we want to understand is the right notion of generalization when talking about dependent data. There's actually some confusion on this, and different definitions exist in the literature. The one that makes most sense to us, and the one we end up picking, is depicted in this cartoon. The idea is that you've got, say, a time series that's running, and you sample a whole bunch of training data, denoted in blue here. Then you stop receiving training data and train a model on it. The time series continues to run, and you continue to draw more samples from it. These red squares are going to form the test sample. So the test samples are just future samples from the same sample path your training data came from, which means the red test samples are going to be dependent on the blue training samples; they are not statistically independent of each other. The rationale behind this definition is that you're observing one random realization of a process that's going on in the world, and you want to make future predictions on that same random realization.
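
As a hedged illustration of this one-sample-path protocol, the sketch below assumes a scalar AR(1) process x_t = a·x_(t-1) + z_t as the data source, a label model y = w_true·x + noise, and a one-dimensional least-squares fit; all of these, and the specific numbers, are illustrative assumptions rather than the talk's setup. The point it shows is only the split: training uses a prefix of one realization, and error is measured on the continuation of that same realization, not on an independent draw.

```python
import numpy as np

def ar1_path(n, a, noise_sd, rng):
    """One realization x_1..x_n of x_t = a * x_{t-1} + z_t, z_t ~ N(0, noise_sd**2), x_0 = 0."""
    x = np.empty(n)
    prev = 0.0
    for t in range(n):
        prev = a * prev + noise_sd * rng.standard_normal()
        x[t] = prev
    return x

rng = np.random.default_rng(1)
n_train, n_test = 5_000, 1_000
a, w_true, label_sd = 0.9, 2.0, 0.1   # illustrative values, not from the talk

# A single realization: the test block is the continuation of the training block.
x = ar1_path(n_train + n_test, a, 1.0, rng)
y = w_true * x + label_sd * rng.standard_normal(x.shape)

x_tr, y_tr = x[:n_train], y[:n_train]  # "blue" training samples
x_te, y_te = x[n_train:], y[n_train:]  # "red" future samples from the same path

w_hat = (x_tr @ y_tr) / (x_tr @ x_tr)  # one-dimensional least squares
test_mse = np.mean((w_hat * x_te - y_te) ** 2)
print(f"w_hat = {w_hat:.3f}, MSE on future samples = {test_mse:.4f}")
```

The test samples here are correlated with the last training samples, which is exactly the dependence this definition of generalization allows.
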
It's not like you're going to go into an alternate universe and make predictions on a different random realization of the process. >>: [inaudible] multiple realizations of test samples for a given context? >> Alekh Agarwal: So, no, we only let the process run into the future; the same process runs into the future, and the test samples are all drawn from the same realization. >>: [inaudible]. >> Alekh Agarwal: Yes. Only one forward realization. Some people have considered test samples coming from a realization independent of the training sample, which personally does not make that much sense to me, which is why we ended up picking this definition. Okay. Finally, we're going to make a claim about what sorts of algorithms can learn well in these non-i.i.d. data scenarios. We're not going to make a claim for just one algorithm; we're going to make a claim for a whole class of algorithms. These are algorithms that have been designed for online learning in an adversarial scenario but satisfy a certain added stability property. This covers several interesting examples like online gradient descent, mirror descent, regularized dual averaging and several others. For all these algorithms, we are going to prove that as long as your loss function, your performance criterion, is suitably convex, you can generalize from this non-i.i.d. training data to test samples, under the definition of generalization I talked about here. And one thing that people have discovered a lot in optimization is that when your loss function has more than convexity, if it has some non-trivial curvature, then optimization can gain from it. One aspect of our work is showing that these gains are also preserved in learning, in the error bounds you can guarantee on the test sample.
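
To make regret and stability concrete, here is a minimal sketch of online gradient descent (unconstrained, no projection step) on the illustrative per-sample loss F(w; z) = 0.5·||w − z||², which is strongly convex with modulus 1. The loss, the box the samples come from, and the i.i.d. draws are assumptions of this sketch; the regret definition itself makes no statistical assumption, so i.i.d. samples are used only for convenience. It compares the 1/√i step size used for convex losses with the 1/(λi) step size used for strongly convex ones. Regret is the online cumulative loss minus the loss of the best fixed w in hindsight (here, the sample mean), and the per-round movement ||w_(i+1) − w_i|| is the stability quantity, which is bounded by the step size times a gradient bound.

```python
import numpy as np

def ogd(zs, step_size):
    """Online gradient descent on F(w; z) = 0.5 * ||w - z||^2, starting from w_1 = 0.

    Returns the per-round losses F(w_i; z_i) (w_i is chosen before z_i is seen)
    and the per-round movements ||w_{i+1} - w_i||.
    """
    w = np.zeros(zs.shape[1])
    losses, moves = [], []
    for i, z in enumerate(zs, start=1):
        losses.append(0.5 * np.sum((w - z) ** 2))
        grad = w - z                       # gradient of F(., z) at w
        w_next = w - step_size(i) * grad   # unconstrained gradient step
        moves.append(np.linalg.norm(w_next - w))
        w = w_next
    return np.array(losses), np.array(moves)

def regret(zs, losses):
    """Cumulative online loss minus the loss of the best fixed w in hindsight."""
    w_best = zs.mean(axis=0)               # minimizer of sum_i 0.5 * ||w - z_i||^2
    return losses.sum() - 0.5 * np.sum((zs - w_best) ** 2)

def convex_step(i):
    return 1.0 / np.sqrt(i)                # generic convex schedule

def strongly_convex_step(i):
    return 1.0 / i                         # 1 / (lambda * i) with lambda = 1

rng = np.random.default_rng(0)
N, d = 10_000, 2
mu = np.array([1.0, -1.0])
zs = mu + rng.uniform(-1.0, 1.0, size=(N, d))  # bounded samples in a box containing 0

loss_c, moves_c = ogd(zs, convex_step)
loss_sc, moves_sc = ogd(zs, strongly_convex_step)
print(f"regret with 1/sqrt(i) steps: {regret(zs, loss_c):.1f}")
print(f"regret with 1/i steps:       {regret(zs, loss_sc):.1f}")
```

On this loss the 1/i schedule reduces to a running mean of the samples, and its regret should come out much smaller than with 1/√i steps, in line with the log N versus square root N regret scalings discussed in the talk. Because every iterate and sample stays in the same box, each movement is at most the step size times the box diagonal.
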
In particular, this has some nice consequences for a variety of linear prediction problems, things like least-squares regression, least-squares SVMs, boosting and so on: you can learn at a much faster rate than you would expect in the worst case using just convexity. Okay. So that's what we do on the learning side. Now, in the last several years, people have realized that learning and stochastic optimization are essentially two sides of the same coin, which allows a very nice exchange of technology between the two fields. We use this intuition to also interpret and apply our results in the broader context of stochastic optimization. In particular, what we are able to argue is that all these stable online algorithms that our theory applies to can be used for stochastic optimization where the source of noise in your gradients or function value estimates is non-i.i.d.: a stochastic source that again satisfies these mixing conditions. While this is an interesting theoretical result, it turns out to have many applications in stochastic optimization, and there are many scenarios where it is quite relevant. There is a very simple and elegant distributed optimization algorithm that we can directly analyze as an application of our results; there are also applications to things like learning ranking functions from partially ordered data observations, and a somewhat gnarly but cool application to pseudorandom number generators, which I will briefly sketch at the end. Okay. So let me -- I'm going to try to keep the notation to a bare minimum, but there are a few things I am going to need, and most of the notation is on this slide.
So you can assume that there's some stochastic process P governing the evolution of my samples, so P_I is the distribution of the process at time I, and the mixing assumption I'm going to make will guarantee, as I said before, that this distribution P_I converges to some stationary distribution pi. For instance, I'm sure most of you are familiar with the convergence of random walks: if you let a random walk run for long enough, it settles into a stationary distribution. We need that sort of phenomenon in broader generality here. We're going to receive N training samples according to the stochastic process P. Yes? >>: I'm confused about the I here and the stationarity condition. >> Alekh Agarwal: Uh-huh. >>: Are you saying that the stochastic process at time I has a stationary distribution, or that -- >> Alekh Agarwal: No. You start the process from an arbitrary state and then look at the distribution induced at time I. Then, as I goes to infinity, the distribution at time I approaches the stationary distribution. So, say, you let your Markov chain run for long enough; then the distribution induced over the samples should start looking like the stationary distribution. >>: [inaudible] stationary. So the problem I have is connecting the stationary process P_I. >> Alekh Agarwal: No, P_I is not a stationary process. >>: Sorry. The stochastic process P_I with the stationary distribution that you just described. >> Alekh Agarwal: No, no. So, P_I would be the distribution of the Markov chain, for instance, at time I. And this -- >>: The distribution of the state. >> Alekh Agarwal: Yes, the distribution of the states. >>: The marginal distribution of the states of the Markov chain, as an example. >> Alekh Agarwal: Exactly. And we want this to converge to a stationary distribution.
So it could happen that you have a non-ergodic Markov chain that does not converge to a stationary distribution, and if that happens, my results aren't going to apply. All I'm saying is that I need this convergence to a stationary distribution, which happens, for instance, for all finite-state ergodic Markov chains, to happen for the process P. So P is from that nice class of processes where you actually do converge to a stationary distribution. Yeah, P_I itself is not a process; it's just a fixed distribution. >>: So P_I is at time I? >> Alekh Agarwal: Yes. >>: And what generates X1 through XN, the process P? >> Alekh Agarwal: Yes. So P here is the process run from time 0 through N, or, sorry, 1 through N. >>: Okay. >> Alekh Agarwal: Okay. So, for instance, in the very simple scenario of i.i.d. data, P_I will just be pi at each I; it will just be the stationary distribution. Okay. The other thing I'll need, because I'm talking about learning, is a loss function to measure the quality of my estimator, and that's going to be denoted by capital F. Capital F takes a predictor W and a sample X_I and measures the fit of the predictor to the sample. A quantity that's going to turn out to be important is the expectation of this loss applied to a sample drawn from the stationary distribution, which I'll call little f(W). Intuitively, it's clear why this is important in an i.i.d. scenario: there, this is a fresh example drawn according to the same distribution as my training data, and we measure the fit on that sample. As we'll see in the sequel, this function will be important for non-i.i.d. data too, but I'll point that out when it comes. Let's see some examples of the setup to get a better understanding of the various quantities involved. Again, the simplest non-i.i.d. process you can think of is a finite-state Markov chain. So here I've shown a simple example. You've got a graph.
Your process is just the uniform random walk on the undirected graph. And the data observation process is the following: each node in this graph has some distribution associated with it. It could just be the empirical distribution of some local data, or the node may just have a way of sampling from some distribution; whatever it is, each node has a certain distribution associated with it. Now I have a token that's randomly walking around on this graph. The token starts at the first node and draws a sample from that node. Then it moves according to the random walk: it will land on one of these two neighbors, with equal probability in this case, and sample from whichever node it lands on. Then we take another step of the random walk; now it can be at any one of these three places, and again it samples from that node. So P_I in this case evolves as the marginal distribution over the states of the Markov chain. By well-known Markov chain theory, this is going to converge, and in this case, because the graph is regular, it converges to the uniform distribution over all the nodes. That's the distribution I get in the limit, and that's what these distributions can look like in a simple example. A slightly more realistic example, which can start capturing data-generating processes in machine learning applications, is data generated by an autoregressive process. In an autoregressive process, the sample at time I is obtained by taking the sample at time I minus 1, hitting it with a fixed matrix A, and then adding some new random noise to it: X_I = A X_(I-1) + Z_I. So A governs how much of the past signal you retain, and Z_I is the fresh randomness you're getting. For instance, this is what the process can look like. Of course, the X_I's are only one-dimensional here, and A is just a scalar in this example.
But in general the X_I's will be higher-dimensional vectors, and you can even have dependence on the past K samples and so on. So this is a fairly general class of processes, often used to model time series data, control system dynamics and so on. One way this can actually arise in machine learning problems is that your data X_I is sampled according to an autoregressive process, and you've got some regression output associated with each X_I that you're trying to fit by minimizing something like a squared loss. So this is one form of problem that we might be interested in. As long as the matrix A satisfies some nice conditions, this autoregressive process will again mix to a stationary distribution, so this sort of thing fits into our theory. A third and even more realistic example is portfolio optimization. Here your data X is the price vector of the stocks you might be interested in investing in, W is the fraction of your wealth that you want to invest in each stock, and a commonly used loss function in these portfolio optimization problems is the log loss: the negative log of W transpose X is the loss function we use. In this case P_I is the stochastic process that governs the prices of stocks at time I, and I'm not going to make any claims about what that converges to. A lot of people seem to expect that over long enough periods of time it does converge to stationarity, maybe under models like geometric Brownian motion, but that's a bit more uncertain, and I cannot make formal claims that it converges to stationarity here. But you could at least expect some sort of mixing to happen over long enough periods of time. Now I just want to make precise the notion of mixing that I will need.
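
The random-walk example above can be checked numerically. This sketch rests on assumptions of its own: a hypothetical 6-node cycle (a regular graph) and a lazy walk that stays put with probability 1/2, since the plain walk on an even cycle is periodic and its marginals never settle. It tracks the marginal P_I of the token's position, measures its total variation distance to the uniform stationary distribution, and computes the worst case over starting nodes after k steps. For a finite, time-homogeneous chain, that worst case coincides with the phi-mixing coefficient the talk describes next.

```python
import numpy as np

# Hypothetical 6-node cycle: every node has degree 2, so the graph is regular.
n = 6
adj = np.zeros((n, n))
for v in range(n):
    adj[v, (v + 1) % n] = adj[v, (v - 1) % n] = 1.0

# Lazy uniform random walk: stay w.p. 1/2, else move to a uniformly chosen neighbour.
P = 0.5 * np.eye(n) + 0.5 * adj / adj.sum(axis=1, keepdims=True)
pi = np.full(n, 1.0 / n)  # stationary distribution of a walk on a regular graph

def tv(p, q):
    """Total variation distance: half the L1 distance between two distributions."""
    return 0.5 * np.abs(p - q).sum()

def phi(k):
    """Worst case over starting nodes of TV(distribution after k steps, pi)."""
    Pk = np.linalg.matrix_power(P, k)
    return max(tv(Pk[s], pi) for s in range(n))

# Marginal P_I of the token's position when it starts at node 0.
p = np.eye(n)[0]
for i in range(1, 31):
    p = p @ P
    if i % 10 == 0:
        print(f"I={i:2d}  TV(P_I, pi) = {tv(p, pi):.2e}")

# The second-largest eigenvalue modulus rho sets the geometric rate (spectral gap 1 - rho).
eigs = np.sort(np.abs(np.linalg.eigvalsh(P)))[::-1]
rho = eigs[1]
for k in (5, 10, 20):
    print(f"k={k:2d}  phi(k) = {phi(k):.2e}   rho**k = {rho ** k:.2e}")
```

Here rho is 0.75 (a spectral gap of 0.25), and the printed phi(k) should shrink geometrically alongside rho**k. For reversible chains like this one, a standard bound from a start state s is TV ≤ ½·sqrt((1 − pi(s))/pi(s))·rho**k, which is one way to make the spectral-gap statement precise.
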
The notion of mixing I use is called phi-mixing. It measures the closeness of two distributions in total variation distance, which is just like looking at the L1 norm between two distributions. What it says is: if you condition on the data up to time T and look K steps into the future, how close is the distribution induced at time T plus K to the stationary distribution pi? So I condition on a certain time and look K steps into the future; I want this distribution to be close to the stationary distribution, and I take a uniform upper bound over all time steps T. That's the phi-mixing coefficient, phi(K). Quite often, actually, the bound you get for any fixed T will be independent of T, so the maximization over T doesn't really bother us. So let's see what this can look like. The simplest example is, again, i.i.d. samples, where, as we talked about earlier, the distribution is just pi at every time. So if I condition on the previous round and look one step ahead, I'm already at the stationary distribution, because the distribution is just pi, independently of the past. So phi(1) is already 0 for i.i.d. samples. For a random walk, the kind of Markov chain sampler we saw earlier, existing results show that phi(K) is bounded by a quantity that goes to 0 geometrically as a function of K, and the contraction factor is governed by the spectral gap of the random walk. That's a very standard result, and just another example of what these coefficients might look like. >>: So your phi(K), it's a sup over T? >> Alekh Agarwal: Yeah. >>: So does P_T converge to pi? If it converges to pi, then as T goes to infinity, shouldn't that be a 0 quantity? >> Alekh Agarwal: Yes. But you take the sup over T. Right?
So you will take the smallest -- you will take the largest value of phi(K), and the largest value will be when you initially start and look K steps ahead. So what you're saying is correct, but... >>: Okay. So that's interesting. >> Alekh Agarwal: Can you gain from it? >>: So for the stochastic [inaudible] you consider, the state does converge to a [inaudible]. >> Alekh Agarwal: Right. >>: What's the relationship with regenerative processes? I'm just confused. >> Alekh Agarwal: I would have to think about that. >>: Okay. >> Alekh Agarwal: Okay. So now I want to quickly define the class of algorithms that we're going to work with. As I said, we will prove a result about all online algorithms that satisfy two conditions. First, when we run them on a sequence of convex functions, they should have bounded regret. That's all this is saying: I've got my losses indexed by the samples X_I, the online algorithm generates a sequence of predictors W_I, and they had better have bounded regret. The part where stability comes in is that I assume the predictor does not change too much from round to round: there's a bound on how much it can move. The reason I don't think this is a restrictive assumption is that for a lot of algorithms, the regret proof already establishes this as an intermediate step, either implicitly or explicitly. So for a lot of online algorithms this stability requirement is just a by-product of the proof technique, but for our proof it's an essential assumption to make explicitly. Some examples that satisfy it are the online gradient descent algorithm of Zinkevich from '03, which runs over the data in an online fashion, taking a gradient step at every time and just ignoring the fact that it's a changing sequence of functions rather than a fixed function that you're doing gradient descent on.
That has a bound on the regret and satisfies stability bounded by the step size of the algorithm. The same holds for the regularized dual averaging algorithm of Lin: it has both of these quantities bounded in the same way. And one final ingredient I need, which will allow me to state my results in the simplest possible way, is pointing out how the expected function little f that I mentioned before matters for learning in non-i.i.d. data scenarios as well. Recall that we want to measure generalization on test samples drawn from the same sample path as the training data. So I condition on my first N samples, which are the training samples, and ask what expected error I would incur on the test samples, and I want that average expected error, conditioned on the training data, to be small. What we can show is that this average error is bounded by the error under the function little f plus a couple of terms that will not be significant in the analysis, so in the sequel we will just focus on bounding this quantity, the gap under f, with the understanding that it gives us bounds on generalization as well. One quick thing to note is that if you put tau equal to 1 in this result, then this term is 0 and this term is phi(1). Since phi(1) is 0 for i.i.d. processes, this recovers the intuition that, for i.i.d. processes, this is the expected function and it fully captures the generalization of the algorithm. >>: [inaudible]. >> Alekh Agarwal: Tau is just a free variable. The bound is true for any natural number tau, anything between 1 and infinity. >>: [inaudible]. >> Alekh Agarwal: Exactly. So you can minimize the bound over all values of tau: based on what the mixing coefficients look like, you can pick the one that gives you the best bound. The algorithm does not use the knowledge of tau. >>: Okay.
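
Since the bound holds for every natural number tau, it can be minimized numerically once the mixing coefficients are known. The sketch below uses a hypothetical simplified stand-in, tau/sqrt(N) + phi(tau), which keeps only the shape of the trade-off (a penalty that grows with tau against a mixing term phi(tau) that shrinks with it). It is not the exact bound from this work: constants, the regret term, and the log(1/delta) term are all dropped, and the geometric phi(k) = 0.5**k is an assumed mixing rate.

```python
import math

def proxy_bound(tau, n, phi):
    """Hypothetical simplified bound: tau / sqrt(n) + phi(tau), all constants dropped."""
    return tau / math.sqrt(n) + phi(tau)

def best_tau(n, phi, tau_max=1_000):
    """Minimise the proxy bound over the natural numbers 1..tau_max."""
    return min(range(1, tau_max + 1), key=lambda t: proxy_bound(t, n, phi))

def geometric(k):
    """Assumed geometric mixing: phi(k) = rho**k with rho = 1/2."""
    return 0.5 ** k

def iid(k):
    """i.i.d. data: phi(k) = 0 for every k >= 1."""
    return 0.0

for n in (10**4, 10**6, 10**8):
    t = best_tau(n, geometric)
    b = proxy_bound(t, n, geometric)
    ratio = b * math.sqrt(n) / math.log(n)
    print(f"N={n:>9}  tau*={t:2d}  bound={b:.2e}  bound*sqrt(N)/log(N)={ratio:.3f}")

print("i.i.d. case: tau* =", best_tau(10**6, iid))
```

Under these assumptions the best tau grows roughly like log N while bound·sqrt(N)/log(N) stays nearly constant, the log N over root N behavior the talk describes for exponentially fast mixing; with phi identically 0 the minimizer is tau = 1 and the proxy reduces to 1/sqrt(N).
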
>> Alekh Agarwal: Although later on, if you want to optimize, for instance, the learning rate, you can incorporate tau into the learning rate to get the best possible bound; that's where the algorithm can exploit it. >>: On the right side [inaudible] but sometimes [inaudible]. >> Alekh Agarwal: Well, actually, phi(tau) is decreasing as tau increases, right? So the question is how small you can keep tau while keeping phi(tau) small. >>: [inaudible] before -- >> Alekh Agarwal: And that's what we're going to see in the next results. I was a bit short on slide space here, so I didn't actually put that in. But, yes, that's precisely what's going to happen. Okay. So with all this, I can give my first main result. We assume we've got iterates W1 through WN from a stable online algorithm. We take their average and measure the generalization of that average under the function f, and this is bounded by the sum of three main quantities. The first quantity is the average regret of the online algorithm. The second is a deviation term: this is a probabilistic result that holds with probability 1 minus delta, and this term depends on how high a probability you want the result to hold with; it has to do with how far things can deviate from their expectation. Analogs of these first two terms also arise in similar results of [inaudible] for i.i.d. data. But the last term is really the main new term for non-i.i.d. data: it depends very critically on the average stability of the online algorithm, which is why we need a stable online algorithm, and on the mixing rate of the data source. So we can again perform the same sanity check: put tau equal to 1 and recall that phi(1) equals 0 for i.i.d. samples.
So this term becomes 0, this term becomes 0, and we are left with just the 1 over N, log 1 over delta term here, which is exactly the result you get in an i.i.d. setting. So it's backwards compatible. But to understand this a bit better, we would like to fix a stable online algorithm, so that we have a handle on these two quantities, and maybe fix a mixing rate for the process, so that we have a handle on this quantity; then we can see more concretely what the rates look like. So first we fix an online algorithm. This applies to both online gradient descent and regularized dual averaging with a step size proportional to 1 over square root of I at time I: both of them satisfy a regret guarantee of order square root N and a stability of 1 over root I at time I, because the stability is just proportional to the step size in this case. So for these algorithms, with high probability, the error is essentially of order 1 over root N, which is the optimal error even in i.i.d. settings, except that you incur a penalty based on tau, and there is this second term, root N phi(tau). Now you can minimize the bound over tau, and as long as the minimum is suitably small, everything's okay. One thing we show, which is not completely obvious from looking at the expression but follows with a few lines of work, is that as long as phi(K) goes to 0 as K goes to infinity, even arbitrarily slowly, this bound will actually go to 0. We get consistent learning as long as phi(K) eventually goes to 0, so in the limit of infinite data you will actually learn the right model; that's one thing this does guarantee. In particular, suppose we put ourselves in a really, really nice setting, where our process converges extremely fast.
If we have exponentially fast convergence, which the Markov chain example we saw has, for instance, then the rate you get, up to constants and logarithmic factors, is the same as the i.i.d. rate: the i.i.d. result was 1 over root N, and the non-i.i.d. result is log N over root N. So there isn't much of a penalty at all in this case, despite the fact that you were learning with non-i.i.d. data. In general, if the process mixes slowly, the rate can be worse than 1 over root N, even much slower, but it will go to 0 as long as the process eventually mixes. Okay. I didn't have the chance to put many experimental slides in this talk. One example I've included here is for a 25-dimensional autoregressive process, on which we run the online gradient descent algorithm. This is basically the error under the function f as a function of the number of training samples; if you are optimistic, you could see this curve as roughly 1 over square root N. I don't know, the shape looks similar, but we are still doing a more careful investigation of the results. At least this points to the basic [inaudible] consistency claim being correct when you're testing on future samples. Sorry, yeah, I said this was being measured under the function f; that was wrong. This is actually the generalization error measured on a fixed test set with a fixed number of test samples, so it is the true generalization error, averaged over 20 trials. Okay. The next thing I want to briefly discuss is what happens when you have more information about the structure of the loss function. As I remarked, in optimization there is this strong convexity assumption that we make, which often helps us.
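
In symbols, one standard way to write the strong convexity assumption just mentioned, for a differentiable loss F and a modulus lambda > 0, is the first inequality below; the identity after it shows that the squared L2 norm meets it with lambda = 1 and equality. Adding any convex function keeps the inequality with the same lambda, which is why strongly convex regularizers make the whole objective strongly convex.

$$
F(w'; x) \;\ge\; F(w; x) + \langle \nabla F(w; x),\, w' - w \rangle + \frac{\lambda}{2}\,\lVert w' - w \rVert_2^2 \qquad \text{for all } w, w',
$$

$$
\tfrac12\lVert w'\rVert_2^2 - \tfrac12\lVert w\rVert_2^2 - \langle w,\, w' - w\rangle \;=\; \tfrac12\lVert w' - w\rVert_2^2 .
$$
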
What the strong convexity assumption means is this: if you take a function's first-order Taylor approximation, then, since the function is convex, it lies above that approximation, and strong convexity means the gap is lower bounded by a constant times the squared distance between the two points under consideration. So your loss curves up; it is not flat anywhere. The canonical example is just the squared L2 norm. A very nice property is that if you take a convex function and add a strongly convex function to it, the result is strongly convex. That gives us license to declare all kinds of regularized objectives strongly convex: things like SVMs, ridge regression, regularized logistic regression, and entropy-based regularized methods like MaxEnt end up being strongly convex because they incorporate strongly convex regularizers. And we would like to ask whether the strong convexity of the regularizers in these problems helps the learning process somehow. Okay. The exact assumption we're going to make is that the function F is strongly convex for every sample point X. The reason we want that assumption is that under it, algorithms like online gradient descent and regularized dual averaging, with a slight modification, obtain a regret that scales as log N instead of the square root N regret we saw for the convex case. So the regret is much, much smaller as a function of N, and again this directly translates into learning guarantees: our learning rate is now 1 over N, again times factors that depend on tau and on the mixing rate phi(tau). First, this is faster than the 1 over root N bound we get for convex losses. Setting tau equal to 1 and phi(1) equal to 0 recovers the i.i.d. results for strongly convex losses that were proven by [inaudible]. And again you can do the same thing.
You can look at what happens for geometric mixing, and the result is almost as good as i.i.d. Given that the regret is small, this seems like it simply has to happen; conceptually it is very natural, but technically it takes a fair bit of work and is more involved than it seems at first sight. The one main interesting application of these results, which I will not have much time to describe because it gets even more involved, is the pretty common case of linear prediction that arises in machine learning problems. What I mean by linear prediction is that you have some covariate X and a linear predictor W, and you apply a loss function to the prediction W transpose X. This captures things like logistic regression, boosting, least squares regression and so on. You can have a label Y as well; that's perfectly fine. What matters is this linear structure, and it is a fairly common structure in statistics and learning. The problem is that with this structure, the sample function F of W, X can never be strongly convex. There is only one direction, the direction of the sample X, in which it has any curvature: in every other direction you can move the parameter and the function is just flat, because the direction of the sample X is the only direction in which the loss function acts. So here you can never have strong convexity, even though, quite frustratingly, the loss function L itself is often strongly convex in the scalar value on which it acts; it's just not strongly convex in the vector W that we are interested in. At the same time, people in statistics have actually used this observation to show faster rates of learning with i.i.d. data for several of these problems.
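The flatness argument can be checked numerically. The sketch below assumes logistic loss with labels in {-1, +1} as the concrete instance of L; the function names `logistic_hessian` and `average_hessian` are hypothetical. A single sample's Hessian in w is the scalar curvature L'' times x xᵀ, so it has rank one, while averaging over samples pointing in different directions gives a positive definite matrix, illustrating how curvature can reappear in expectation even though no single sample provides it.

```python
import numpy as np


def logistic_hessian(w, x, y):
    """Hessian in w of the linear-prediction loss F(w; x, y) = log(1 + exp(-y * w.x)).

    With L(z) = log(1 + exp(-z)) and z = y * w.x (y in {-1, +1}),
    the Hessian is L''(z) * x x^T, a rank-one matrix.
    """
    z = y * (w @ x)
    s = 1.0 / (1.0 + np.exp(-z))      # sigmoid(z)
    curvature = s * (1.0 - s)          # L''(z): curvature of the scalar loss
    return curvature * np.outer(x, x)


def average_hessian(w, xs, ys):
    """Average of the per-sample Hessians over a batch of samples."""
    return np.mean([logistic_hessian(w, x, y) for x, y in zip(xs, ys)], axis=0)


rng = np.random.default_rng(0)
d = 5
w = rng.standard_normal(d)
x = rng.standard_normal(d)

H_single = logistic_hessian(w, x, 1.0)
# One sample: a single nonzero eigenvalue, flat in the other d - 1 directions.
print(np.linalg.matrix_rank(H_single), np.linalg.eigvalsh(H_single).min())

xs = rng.standard_normal((200, d))
ys = rng.choice([-1.0, 1.0], size=200)
H_avg = average_hessian(w, xs, ys)
# Many samples in different directions: the averaged curvature is positive definite.
print(np.linalg.eigvalsh(H_avg).min())
```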
And we wanted to see if these gains actually translate to non-i.i.d. scenarios as well. The good news is yes, they do. The bad news is that they don't quite do that for the canonical off-the-shelf algorithms that the previous results apply to, because those algorithms are not stable on these problems in the right way. If you directly apply those algorithms with the parameter settings we want, they are no longer stable. So you have to tweak the algorithms a bit. It's a minor correction, so implementation-wise it doesn't change much, but it gives us this upshot of fast learning. The details had to be left to the paper because introducing the algorithm would have taken a while. Okay. So in the remaining time I want to talk just a tiny bit about this connection between learning and optimization and what it allows us to conclude for the broader setup of stochastic optimization. What we've seen so far could alternatively be described as follows: we observe a sequence of random functions indexed by samples X1 through XN. These samples are non-i.i.d. Each function is unbiased in a certain sense: if instead of taking the function based on sample X1, I were to take a sample drawn according to the stationary distribution pi, then it would give me the right function. Of course, you're not sampling from pi; you're sampling from the dependent process. So I really should have said "unbiased" in quotes. The results that we proved were on the quantity F of W hat N, the value of the function at my predicted point, minus the minimum value of the function. So we are trying to minimize the expected function, little f, and we are trying to do that with these non-i.i.d. random realizations of the function.
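The setup just described can be written compactly. This is a sketch in our own notation: `P_t` is the distribution of the t-th sample, `𝒲` is an assumed feasible set, and taking `ŵ_N` to be the average of the online iterates is one common choice rather than the only one.

```latex
% Target objective: expectation of the sample loss under the stationary distribution pi
f(w) = \mathbb{E}_{X \sim \pi}\big[ F(w; X) \big]

% Observed: random functions F(.; X_1), ..., F(.; X_N) with X_t ~ P_t and P_t -> pi.
% "Unbiased" only in quotes: E_{X ~ pi}[F(w; X)] = f(w), but in general
\mathbb{E}\big[ F(w; X_t) \mid X_1, \dots, X_{t-1} \big] \neq f(w)

% Quantity that the results bound: optimization error of the predictor
f(\hat{w}_N) - \min_{w \in \mathcal{W}} f(w),
\qquad \text{e.g. } \hat{w}_N = \frac{1}{N} \sum_{t=1}^{N} w_t
```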
And this is exactly the setup of what's called in optimization the problem of stochastic approximation, except that people typically study the problem with i.i.d. data samples, and we are now studying it with non-i.i.d. data samples. Again, we're trying to minimize this function f, and we are receiving noisy estimates that converge to the function because our process P_t is converging to pi. So this sequence eventually starts to look like the function f. And our results can then be interpreted as showing convergence of stable online algorithms for stochastic optimization with non-i.i.d. noise in the gradients. For instance, when you think of running stochastic gradient descent, typically at every step we draw a sample uniformly at random and then evaluate the gradient on it. Now we don't necessarily have to draw it uniformly at random: the sampling at time t could depend on what you did at time t minus 1, and that would be fine as long as the sampling procedure mixes. Okay. So a very interesting application of this arises in distributed optimization. This is an algorithm called Markov incremental gradient descent, by Mikael Johansson and co-authors, and it's a very simple idea. You've got a distributed network of nodes, and each node has an associated convex function F_i. You are trying to minimize the average of these functions F_i, and this is how we're going to do it: we're going to have a token randomly walking on this graph. You run the random walk, and at each time your token is at some node. You take the local function of the node that your random walk is at, evaluate the gradient of that particular function, and update your weight vector.
So the token carries the current point W_t. You take a step based on the gradient of the local function, and then the updated W_{t+1} travels with the random walk to the next node, where you take another gradient step based on that node's local function, and so on. This is a completely local operation, because at a node you can evaluate the gradient of that node's local function. As for applications, you might think of this as a sensor network, where each sensor measures some local quantity and can evaluate the gradient of that local quantity but cannot evaluate the gradient of a global quantity. I wouldn't necessarily apply this in a cluster setting, but one way to think of it is that the network is your cluster, at each step only one computer in the cluster does the processing, and that computer has its own local dataset and evaluates a stochastic gradient based on it. So those are the kinds of applications for this algorithm. Now in this case P_t is just the distribution of the Markov chain after t steps. If you design the Markov chain correctly (this is not going to be the simple random walk, but you can define a Markov chain on the graph so that it converges to the uniform distribution over nodes), then the expectation under the stationary distribution is exactly the average of the local functions. That is the quantity that we are trying to minimize. And because Markov incremental gradient descent uses an online gradient algorithm as its backbone, it is a stable algorithm.
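The token-passing scheme described above can be sketched in a few lines. This is our own minimal sketch, not the authors' code: the Metropolis-style edge weights are one standard way (our choice) to build a chain on the graph whose stationary distribution is uniform, the local functions are hypothetical quadratics f_i(w) = ½‖w − c_i‖², the step size `eta0 / sqrt(t)` without projection is an assumption, and all function names are illustrative. How fast such a chain approaches the uniform distribution is governed by its spectral gap 1 − σ₂(P), with σ₂ the second largest singular value of P, so the sketch also computes that gap, comparing a cycle against the complete graph as an easy well-connected example (not an expander construction).

```python
import numpy as np


def metropolis_chain(adj):
    """Transition matrix of a random walk on a graph with uniform stationary law.

    Edge weights 1 / (1 + max(deg_i, deg_j)) make P symmetric, hence doubly
    stochastic (uniform is stationary); leftover mass stays on the diagonal,
    which also makes the chain aperiodic.
    """
    n = len(adj)
    deg = [len(nbrs) for nbrs in adj]
    P = np.zeros((n, n))
    for i in range(n):
        for j in adj[i]:
            P[i, j] = 1.0 / (1 + max(deg[i], deg[j]))
        P[i, i] = 1.0 - P[i].sum()
    return P


def spectral_gap(P):
    """1 - sigma_2(P), where sigma_2 is the second largest singular value of P."""
    sigma = np.linalg.svd(P, compute_uv=False)  # returned in descending order
    return 1.0 - sigma[1]


def markov_incremental_gd(P, local_grads, dim, n_steps, eta0=0.5, seed=0):
    """Token-passing incremental gradient descent; returns the averaged iterate."""
    rng = np.random.default_rng(seed)
    node = 0                      # node currently holding the token (and w)
    w = np.zeros(dim)
    w_sum = np.zeros(dim)
    for t in range(1, n_steps + 1):
        # Purely local step: only the current node's gradient is evaluated.
        w = w - (eta0 / np.sqrt(t)) * local_grads[node](w)
        w_sum += w
        # The token, carrying w, moves to a neighbor according to the chain P.
        node = rng.choice(len(P), p=P[node])
    return w_sum / n_steps


def cycle_graph(n):
    return [[(i - 1) % n, (i + 1) % n] for i in range(n)]


def complete_graph(n):
    return [[j for j in range(n) if j != i] for i in range(n)]


# Usage: 10 nodes, node i holds the hypothetical f_i(w) = 0.5 * ||w - c_i||^2,
# so the average of the f_i is minimized at the mean of the c_i.
n, dim = 10, 2
centers = np.random.default_rng(1).standard_normal((n, dim))
local_grads = [lambda w, c=c: w - c for c in centers]  # gradient of each f_i
P_cycle = metropolis_chain(cycle_graph(n))
w_bar = markov_incremental_gd(P_cycle, local_grads, dim, n_steps=20000)
print("averaged iterate:", w_bar, "target:", centers.mean(axis=0))
print("spectral gap, cycle:", spectral_gap(P_cycle))
print("spectral gap, complete graph:", spectral_gap(metropolis_chain(complete_graph(n))))
```

For this particular chain on a cycle of n nodes the gap works out to (2/3)(1 − cos(2π/n)), which shrinks roughly like 1/n², whereas on the complete graph it is 1; that is the slow-versus-fast mixing contrast between cycles and well-connected graphs.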
So our results directly imply that the stationary function f, which is now just the average of the local functions, gets optimized by simply taking the average of all the iterates we've generated. In particular, we show that the error goes to 0 as 1 over square root T if these F_i are convex. The constant depends on the mixing of the Markov chain on this graph: 1 minus sigma_2 of P is the spectral gap of the random walk, where sigma_2 of P is the second largest singular value of the transition matrix P. And this is basically the most low-powered distributed optimization algorithm you can think of, because it communicates very little; it's ideal for things like wireless sensor networks. Just by a simple application of our theory, you are able to derive a sharper rate than what was known before for this algorithm. And the graph-structure-based dependence is actually reflected if you run this algorithm. If we run the algorithm on different kinds of graphs, the spectral gap depends on the graph topology. In particular, random walks on expander graphs mix really fast, and random walks on cycles mix really slowly. That's reflected here: for the cycle, the error goes to 0 really slowly, and for the expander it goes to 0 really fast. Again, we are working on more simulations to get a more quantitative experimental verification of the exact scaling with the number of nodes that we predict, but that's something we're still verifying. Okay. So to wrap up, what we saw today was how a [inaudible] of stable algorithms have the nice property that they generalize well to future test samples, even when the training samples are drawn in a non-i.i.d. fashion. Our analysis applies to a large class of mixing processes. And one nice aspect is that if the mixing is fast enough, we get almost the same rates as i.i.d.
learning, and we're also able to show better rates of convergence when the losses have more structure, such as strong convexity. There are several extensions that we discuss in our paper. First of all, in the paper we also provide results under weaker mixing assumptions: phi-mixing is often considered the strongest mixing condition you can assume, and we give results under weaker mixing conditions as well. We also give other applications in the paper. One is when you're trying to optimize a ranking function and your observations are just partial orders over subsets of samples: how do you solve optimization problems of this nature? The problem here is that sampling permutations or rankings is hard if you want to do stochastic optimization, but it's easy to write down Markov chains that sample from such combinatorial spaces and converge to some nice stationary distribution. More generally, the idea extends to optimization with Markov chain Monte Carlo samples from combinatorial spaces, and it applies to problems like optimizing the parameters of a queueing system, such as a routing network where your loads might be governed by some sort of queueing process. And coming back to the application to pseudorandom number generators, this is just something I came across while looking at the literature. What they point out is that we often run simulations on our computers with pseudorandom number generators and treat the output as a sequence of i.i.d. random numbers. Of course, pseudorandom numbers are not i.i.d.: since they are generated deterministically starting from some random seed, they depend on each other.
And people have actually thought about this carefully and shown that even though these numbers are non-i.i.d., the process actually mixes to stationarity. So the next time you're testing your new stochastic optimization algorithm, don't worry about the fact that pseudorandom numbers are not i.i.d.; things are going to be fine. So that's all I have to say. The papers that contain these results are available on arXiv, and I'm around for the rest of the day if you want to talk more. Thanks. [applause]