>> Amar Phanishayee: Our speaker today is Vivek Seshadri... and he works there with Onur Mutlu and Todd Mowry. ...

advertisement

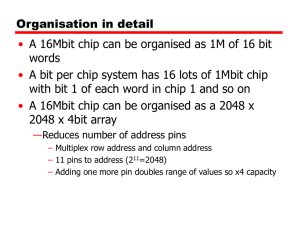

>> Amar Phanishayee: Our speaker today is Vivek Seshadri from Carnegie Mellon University, and he works there with Onur Mutlu and Todd Mowry. Vivek's done a lot of work at the intersection of computer architecture and systems, and he's super-creative, as will be evidenced by his talk today. And he somehow figured out a way to consistently publish at the top architecture conferences. I've crossed paths with Vivek very briefly at CMU. He's a pretty intense squash player, so those of you meeting him later today, if you want him to really think on his feet, take him to Pro Club. Welcome, Vivek. >> Vivek Seshadri: Yes, thank you, Amar, for the introduction. I like the way he said "found a way to consistently publish at top conferences." I hope it's an intersection of good ideas and good writing and not some kind of gaming the system. Anyway, in this talk, I'll be trying to answer this question: can DRAM do more than just store data? Before I get into the meat of the talk, let me quickly give you some background. I did my undergrad at IIT Madras, where I got a Bachelor of Technology in computer science. At IIT, I had been looking at problems in VLSI CAD, such as placement and routing, so more algorithms than architecture, if you will. I started my PhD at CMU in 2009, so I've been there for close to seven years. At CMU, I have been looking at problems in computer architecture, mostly memory systems. I've done some work on DRAM architecture, virtual memory and caches, and in collaboration, I've also looked at problems related to compression and quality of service. In this particular talk, I'll be focusing on my work related to DRAM architecture. So let me quickly get into the problem.
So this slide shows the two components of a typical system: a processor connected to off-chip memory, which today is predominantly built using DRAM, and a channel connecting the two. There are two trends that are going to affect this system. The first is that we are adding more and more compute to the processor: more cores on the chip, a GPU on the processor, and possibly more accelerators on the same chip. On the other hand, because applications are accessing increasingly more data, we are adding more and more memory modules. So compute power is increasing on one side and memory capacity is increasing on the other, and when it comes to extracting better performance from the system, the bottleneck is the memory channel, right? The only ways we know to increase the bandwidth of the memory channel are to increase its frequency or to add more channels. Both of these approaches are very expensive and not very scalable, due to pin-count limitations on the processor and electrical load limitations on the memory channel itself. So clearly, the story is not good for performance; we need to find some way of extracting more bandwidth from the memory channel. But it turns out the story is not good in terms of energy, either. This is a slide from Bill Dally's keynote at HiPEAC 2015, where he shows the energy that different operations in the system consume, and I would like you to focus on two of them. At the top left, we have the energy consumed by a single 64-bit double-precision operation: around 20 picojoules. At the other extreme, we have the energy consumed in transferring a single cache line from DRAM all the way into the processor.
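The energy gap on this slide is simple arithmetic on its two figures. A minimal sketch (the constant and function names are ours, purely illustrative) shows that 16 nJ against 20 pJ is an 800x ratio, which is what the "roughly 1,000-fold" in the talk rounds to:

```python
# Figures quoted from the slide (Bill Dally, HiPEAC 2015 keynote).
DP_OP_PJ = 20.0          # one 64-bit double-precision operation, in picojoules
DRAM_LINE_PJ = 16_000.0  # one cache line from DRAM to the processor: 16 nJ

def movement_to_compute_ratio(move_pj, op_pj):
    """Number of arithmetic operations that cost as much energy as one line transfer."""
    return move_pj / op_pj

ratio = movement_to_compute_ratio(DRAM_LINE_PJ, DP_OP_PJ)
print(f"{ratio:.0f}x")  # 800x
```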
It's around 16 nanojoules, so we are looking at roughly a 1,000-fold difference between the energy to move data from a DRAM chip to the processor and the energy to actually perform a computation on the processor. So clearly, data movement is a bottleneck for both performance and energy. So what is the community doing? One recent research trend that has taken off is an idea called processing in memory. This idea has been looked at in the past by several works, but more recently it's gaining traction because of the bottlenecks introduced by the memory channel. The idea behind processing in memory is to put a small amount of processing logic closer to memory, where it has much higher bandwidth to the memory modules, do some simple searching or filtering operations in that logic, and then send a much smaller amount of data over the memory channel to the main processor, which can do the heavier computation. The question that this line of research asks is: what computation should we move to the processing-in-memory logic? >>: So why do we get a higher-bandwidth channel to the memory just because the logic is closer? Is it the distance that matters, or is it something else? >> Vivek Seshadri: It really depends on the architecture, so let me give you an example. One architecture that is coming up these days is 3D-stacked memory; there is a technology called the Hybrid Memory Cube that is coming out of a collaboration between Intel and Micron. If you look at that architecture, it essentially has a logic layer stacked with multiple layers of memory, and the connection between the logic layer and the DRAM stack uses a technology called through-silicon vias, not traditional pins connected to the motherboard. You can have many more through-silicon vias than pins, which increases bandwidth.
So it's essentially the architecture and not the distance. >>: Is there any breakdown, when you talk about the cost of accessing memory, of how much of it is what has to happen on the chip, with all of the data being read out and then written back again, and maybe reading a much wider set of data than you actually need, versus moving the data over that channel, versus signaling the address bits? Getting closer is not going to help you with the energy you need to read the DRAM and write the data back in. >> Vivek Seshadri: Extremely good point. So essentially, you're saying that accessing data involves a step where I have to take the data from the DRAM cells into some structure, and then a step where I read the data out on the memory channel, and you're asking which of these two components consumes the most energy. I would say both of them are significant in some sense, and what I am presenting in this work are techniques that exploit the bandwidth closer to the DRAM cells. I'm hoping the rest of the talk will answer your question, but if it doesn't, we can take it after the talk. But this is not what we are looking at in our work. In our work, the starting point is that today we use DRAM just as a storage device: all we can do is write some value into DRAM and read it back at a later point in time. If you notice, I have annotated the reads and writes with 64 bytes, because commodity DRAM today is optimized to read and write wide cache lines. The question that we ask is, can DRAM do more? And the answer is yes. We have developed some techniques, and we are hoping to increase the size of the [indiscernible] as we identify more techniques. Right? So what can we do with DRAM?
It all started off with a technique where we showed that DRAM can be used to perform bulk copy operations. More recently, we have developed techniques that use the DRAM architecture to accelerate strided gather-scatter access patterns, and our most recent technique is a mechanism to perform bitwise operations using DRAM technology. In this talk, I'll be focusing mostly on the two recent works: gather-scatter DRAM, which is a mechanism to perform strided access patterns within DRAM, and Buddy RAM, which is a mechanism to perform bitwise operations completely inside DRAM. Finally, I'll end the talk with an overview of my other projects and future work. So let me jump into the first part of the talk. In this slide, I am using an in-memory database table to describe what a strided access pattern in a modern system may look like. On the right, we have a picture of a database table with multiple records and multiple fields. I have highlighted the third field in yellow. Let's say that's the field of interest for a particular query; depending on how the database is stored in main memory, that query can result in a strided access pattern. If the database is stored as a row store, where the values of the same record are stored together, accessing the third field, the yellow blocks marked here, results in a strided access pattern: I'm not accessing values contiguously. Now, what is the problem with strided access patterns in existing systems? Like I mentioned, existing DRAM modules are optimized to access cache lines. I am annotating the values with the cache line boundaries here, and what happens in existing systems is that whenever you access a particular value within a cache line, the entire cache line is brought into the system.
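To make the waste concrete, here is a small sketch that enumerates the byte addresses a query on the third field touches in a row store and counts the 64-byte cache lines that must be fetched. It assumes 8-byte values and 4-field records (a stride of 4, matching the later stride-4 example); the names are hypothetical.

```python
VALUE_BYTES = 8        # assumed size of one field value
LINE_BYTES = 64        # cache line size used in the talk
FIELDS_PER_RECORD = 4  # hypothetical: 4 fields per record, i.e. a stride of 4 values

def field_addresses(num_records, field):
    """Byte addresses of one field across the first `num_records` rows of a row store."""
    record_bytes = FIELDS_PER_RECORD * VALUE_BYTES
    return [r * record_bytes + field * VALUE_BYTES for r in range(num_records)]

def lines_touched(addresses):
    """Distinct cache lines the hardware must fetch to serve these addresses."""
    return sorted({a // LINE_BYTES for a in addresses})

addrs = field_addresses(8, field=2)  # third field -> index 2
lines = lines_touched(addrs)
useful = len(addrs) * VALUE_BYTES
fetched = len(lines) * LINE_BYTES
print(addrs)             # [16, 48, 80, 112, 144, 176, 208, 240]
print(lines)             # [0, 1, 2, 3]
print(useful / fetched)  # 0.25
```

Only a quarter of the fetched bytes are yellow values; the other three quarters are the gray values that cost bandwidth, cache space and energy.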
So if I access one of those yellow values, the system is actually going to bring in all the gray values as well, through the memory channel into the processor caches. As a result, this increases the latency of completing the operation, because I am fetching more data than I actually need. It also wastes memory bandwidth and cache space. And like I mentioned, since data movement consumes a lot of energy, this also results in high energy consumption. The question that we ask is: is it possible to retrieve a cache line that contains only useful values? Can I retrieve a cache line that contains only yellow values from existing systems without adding significant cost? The answer is yes. That's our proposal, gather-scatter DRAM, and we specifically focus on power-of-2 strided access patterns. To understand the architecture that our system exploits, here is a DRAM module. In most modern systems, a DRAM module contains multiple chips. I'm not sure if you are able to see those black boxes there, but those black boxes are individual DRAM chips, and all the DRAM chips within a module are connected to the same command and address bus, whereas each chip has an independent data bus. The result is that whenever a read command is sent to the module, the command and the address are sent to all the DRAM chips, and each chip independently responds with some piece of data. The memory controller then puts all these values together to form a cache line. In a typical system, a single DRAM chip sends out 8 bytes, so with eight chips the cache line is 64 bytes, and the memory controller then sends the cache line on to the processor.
Like I mentioned before, the question we are asking is: is it possible for the memory controller to issue a read command with an address to the module so that the module responds with the yellow values? There are two challenges we have to address to achieve this. The first challenge is what we call chip conflicts, and this is very specific to power-of-2 strides. Like I mentioned before, the data of each cache line is spread across all the chips, so let's look at the first cache line. A simple scheme that the memory controller may use to split these values would be to map the first 8 bytes to the first chip, the second 8 bytes to the second chip, and the last 8 bytes to the last chip. Unfortunately, because we have a power-of-2 number of chips and are looking at a power-of-2 strided access pattern, if the second cache line is mapped in exactly the same manner, the data is going to be mapped like this, and clearly, all the useful values are mapped to just two chips. So in some sense, the memory controller has no hope of retrieving any useful value from, let's say, the first chip here, right? The bandwidth is essentially limited by the number of chips that hold any useful value. The second problem is that even if the useful values are spread across multiple chips, all the chips share the same address bus, which means the memory controller has no flexibility in specifying different addresses to different chips. One way to address this problem would be to give each chip its own address bus instead of sharing one address bus across all chips, but that increases the pin count on the processor, which is expensive. So in this work, we propose two schemes, one to address each of these two challenges. The first scheme is a simple shuffling mechanism, which we call cache-line-ID-based shuffling. The idea is fairly straightforward.
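The chip-conflict problem can be reproduced with a toy model of the module: 8 chips, each supplying one 8-byte value of every 64-byte line, with the baseline "value i of a line goes to chip i" mapping. This is an illustrative sketch with hypothetical names, not the controller's actual logic; with a stride of 4, the third field lands on only two chips.

```python
CHIPS = 8  # 8 chips x 8 bytes each = one 64-byte cache line

def baseline_chip(cache_line_id, position):
    """Baseline mapping: the i-th 8-byte value of every cache line goes to chip i."""
    return position

def chips_with_useful_values(mapping, stride, offset, num_lines):
    """Chips holding at least one value whose global index == offset (mod stride)."""
    return {mapping(line, pos)
            for line in range(num_lines)
            for pos in range(CHIPS)
            if (line * CHIPS + pos) % stride == offset}

# Third field (offset 2) of 4-field records over the first four cache lines.
print(sorted(chips_with_useful_values(baseline_chip, stride=4, offset=2, num_lines=4)))  # [2, 6]
```

Since only chips 2 and 6 ever hold a yellow value, at most two of the eight chips can contribute useful data to any single read.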
We take the cache line, pass it through a set of shuffling stages, and then map the data to different chips. Here, there are three shuffling stages: the first stage swaps adjacent values, the second stage swaps adjacent pairs of values, and the third stage swaps adjacent groups of four values. But if you do this shuffling in the same way for all the cache lines, it's not going to eliminate the problem, so somehow we have to control which stages are active for different cache lines. For this purpose, we use the cache line ID: some bits of the cache line ID determine which stages are active. In this particular case, the least significant bits are 1, 0 and 1, so stage 1 will be active, stage 2 will not be active, and stage 3 will be active. Essentially, stage N is active if the Nth least significant bit of the cache line ID is set. To see what this shuffling mechanism achieves, here are the first four cache lines of the table before shuffling, and as you can see, there are a lot of chip conflicts: the useful values are mapped to just two chips. After employing the shuffling scheme I described on the previous slide, this is how the data is going to look. Cache line 0 goes through no shuffling, because its three least significant bits are all zeroes, so its mapping to the chips is exactly the same as in the baseline. However, the other three cache lines have undergone some shuffling, and the result is that the yellow values are now uniformly distributed across all of the chips. In this particular case we are looking at a stride of 4, but you can show that for any power-of-2 strided access pattern, the scheme distributes the useful values uniformly across all the chips.
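The shuffling can be sketched as below. We assume, reading the slide description, that stage n swaps adjacent groups of 2^(n-1) values; under that assumption the three stages together amount to XOR-ing each value's position with the low three bits of the cache line ID, and the final check confirms that the stride-4 field now touches all eight chips. Function names are ours.

```python
CHIPS = 8
STAGES = 3  # log2(CHIPS)

def shuffle(values, cache_line_id):
    """Return the line as stored: result[chip] is the value placed on that chip.

    Assumption: stage n (n = 1..3) swaps adjacent groups of 2**(n-1) values and
    is active only when bit n-1 of the cache line ID is set.
    """
    out = list(values)
    for n in range(STAGES):
        if (cache_line_id >> n) & 1:
            size = 1 << n
            for start in range(0, CHIPS, 2 * size):
                left = out[start:start + size]
                right = out[start + size:start + 2 * size]
                out[start:start + 2 * size] = right + left
    return out

def chip_of(cache_line_id, position):
    """Closed form of the three stages: position i lands on chip i XOR (ID mod 8)."""
    return position ^ (cache_line_id & (CHIPS - 1))

# The staged shuffle and the XOR closed form agree for all 8 low-order line IDs.
for line in range(CHIPS):
    values = [line * CHIPS + p for p in range(CHIPS)]
    stored = shuffle(values, line)
    assert all(stored[chip_of(line, p)] == values[p] for p in range(CHIPS))

# Third field of 4-field records (global index == 2 mod 4) over the first four lines.
useful_chips = {chip_of(line, p) for line in range(4) for p in range(CHIPS)
                if (line * CHIPS + p) % 4 == 2}
print(sorted(useful_chips))  # [0, 1, 2, 3, 4, 5, 6, 7]
```

The XOR form is handy because each stage is just a conditional swap, which is cheap to build in hardware, while the closed form makes the chip assignment easy to reason about.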
>>: Do you know the strided access pattern before you decide how you're going to do the shuffling? >> Vivek Seshadri: That's a great question: is the shuffling scheme dependent on the strided access pattern? It turns out the maximum stride that you can exploit depends on what shuffling you employ. For example, with this scheme, you can do strides of 8, 4, 2 and 1, and also a few other patterns, as I'll show in a few slides, but let's say you want to do a stride of 64 or 128. Then you may have to use more bits of your address to do the shuffling. That's a good point. >>: But you probably need to know at the time you write it. >> Vivek Seshadri: Yes. >>: Oh, okay. >> Vivek Seshadri: Question, yes. >>: So in moving the data into the caches, what actually happens in the caches? Are there empty slots in there? Because from the software view, right, it expects values to be contiguous. So what's actually happening in the cache is what's ->> Vivek Seshadri: That's a good question. Can you hold that question for a moment? I'll show you how the mechanism works with the on-chip caches. >>: And are there access patterns for which shuffling does not help or actually hurts, going back to your shuffling scenario? >> Vivek Seshadri: This particular mechanism would work for any power-of-2 strided access pattern, but let's just take an odd stride. An odd stride would not have the chip conflict problem at all, because you typically have a power-of-2 number of chips, and since an odd stride and a power of 2 are co-prime, values with an odd stride are uniformly distributed across all the chips. So there, the problem will be the next component of the mechanism, how you design the ->>: How you shuffle ->> Vivek Seshadri: How you retrieve the data, which I'll be showing. >>: A question here.
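The co-prime argument for odd strides is easy to check numerically. In this sketch (baseline, unshuffled mapping; hypothetical helper names) value v sits on chip v mod 8, so any odd stride cycles through every chip, while a stride of 4 keeps hitting the same two:

```python
from math import gcd

CHIPS = 8

def chips_hit_by_stride(stride, count):
    """Chips holding the first `count` values of a strided walk, baseline mapping v -> v % CHIPS."""
    return {(k * stride) % CHIPS for k in range(count)}

print(sorted(chips_hit_by_stride(3, 8)))  # [0, 1, 2, 3, 4, 5, 6, 7]
print(sorted(chips_hit_by_stride(4, 8)))  # [0, 4]

# Every odd stride is co-prime with 8, so 8 consecutive strided values hit 8 distinct chips.
for s in range(1, 16, 2):
    assert gcd(s, CHIPS) == 1
    assert len(chips_hit_by_stride(s, CHIPS)) == CHIPS
```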
So clearly, this is how the data has to be laid out in memory beforehand. Anybody who's writing the data to memory has to know this is how it will be accessed, and therefore what the shuffling pattern should be, right? So what's the difference between that and, for your example, simply using a [indiscernible] layout, which would have also given you ->> Vivek Seshadri: That's a great question. It'll be clear by the end of this section of the talk, but to quickly give you the answer: with a columnar layout, you can achieve good throughput for a query that goes down a particular field. But let's say I have a system running both transactions and analytics, where the transactions want data in row-major order and the analytics queries want data in column-major order. A columnar layout is not going to give you the best performance. What this mechanism does is allow you to access data in both row-major order and column-major order simultaneously. If you look at this particular picture, the yellow values are mapped to different chips, so they can be retrieved using a single command. We still don't know how the memory controller is going to do it, but the memory controller can potentially retrieve them using a single command. But if you look at it row-wise, at just cache line 0, cache line 0 is still mapped to different chips, which means the memory controller can still retrieve data in row-major order with a single command and in column-major order with a single command. That's the benefit this mechanism provides. To quickly jump into the second part of the mechanism: like I mentioned before, these values can now be retrieved using a single command, but the memory controller has to somehow provide different addresses to different chips.
For chip 0, it has to provide address 2, but for chip 1, it has to provide address 3, so how is the memory controller going to do it? Like I mentioned before, one way is to have a separate address bus for each chip, but that's going to be costly. What we show is that the math behind the shuffling allows us to use a small piece of logic in front of each chip to compute that chip's address locally, and that's why we introduce the notion of a pattern ID. The idea is to put a small piece of logic on the address bus in front of each chip, and that piece of logic computes which address the chip is going to access. As before, the memory controller issues a read command with an address, but in addition to the address, it provides a pattern ID. The pattern ID indicates which strided access pattern the memory controller is expecting. Each chip takes the address and the pattern, computes a new address to access, and returns the data corresponding to the new address. Zooming in on the column translation logic that each chip uses: I don't want to get into the details here, but it's extremely simple. It uses just one bitwise XOR and one bitwise AND operation, along with the chip ID, to compute the new address. Let me jump straight to the effect of putting these two components together. There is only one layout of data in memory after the shuffling scheme; in this slide, I'm showing the first 32 values stored contiguously in DRAM, starting from address 0. If the memory controller issues a read with address 0 and pattern 0, it gets a cache line that contains the first eight values. This is what happens in existing systems: you get contiguous cache lines.
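Putting shuffling and per-chip address translation together gives a toy end-to-end model. The talk only says the column translation logic uses one XOR, one AND and the chip ID; the specific formula `address ^ (chip_id & pattern)` below is our assumption, chosen because it reproduces every stride quoted on the slides. We also sort the gathered values rather than model how the controller puts them back in order. All names are hypothetical.

```python
CHIPS = 8

def chip_of(line, pos):
    """Cache-line-ID-based shuffling in closed form: position pos -> chip pos ^ (line mod 8)."""
    return pos ^ (line & (CHIPS - 1))

def build_dram(num_values):
    """dram[chip][column] holds global value index v; the column is the cache line ID."""
    num_lines = num_values // CHIPS
    dram = [[None] * num_lines for _ in range(CHIPS)]
    for v in range(num_values):
        line, pos = divmod(v, CHIPS)
        dram[chip_of(line, pos)][line] = v
    return dram

def column_translation(address, pattern, chip_id):
    """Assumed per-chip logic: one AND with the chip ID, one XOR with the address."""
    return address ^ (chip_id & pattern)

def read(dram, address, pattern):
    """One READ: the same (address, pattern) is broadcast; each chip translates locally.
    Sorting stands in for the controller reordering the returned values."""
    return sorted(dram[chip][column_translation(address, pattern, chip)]
                  for chip in range(CHIPS))

dram = build_dram(64)
print(read(dram, 0, 0))  # [0, 1, 2, 3, 4, 5, 6, 7]         contiguous line
print(read(dram, 0, 1))  # [0, 2, 4, 6, 8, 10, 12, 14]      every other value
print(read(dram, 0, 3))  # [0, 4, 8, 12, 16, 20, 24, 28]    stride 4
print(read(dram, 3, 3))  # [3, 7, 11, 15, 19, 23, 27, 31]   stride 4, starting at value 3
print(read(dram, 0, 7))  # [0, 8, 16, 24, 32, 40, 48, 56]   stride 8
```

The layout in `dram` is built once; only the `(address, pattern)` pair on the broadcast command changes between the five reads.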
You access address 0, you get the first eight values; you access address 1, you get the next eight values. But if the memory controller issues a read with address 0 and pattern 1, it retrieves a cache line that contains every other value, without changing the layout in memory. If it uses pattern 3, it gets a stride of 4. This is exactly what our query wants: if, instead of address 0, you use address 3, you get every fourth value starting from this value, which is what the query in our example wanted. And if you issue a read with address 0 and pattern 7, it turns out you get a stride of 8. I am skipping a lot of patterns in between, and if you use those patterns, you extract values with different strides. All right. So that's the benefit of the mechanism: with a single layout in memory, just by changing the pattern ID, you can access values with different strides from DRAM. Now, to answer [Tunjay's] question on how this plays with the rest of the system stack: we have a DRAM substrate that can support different strided access patterns, but now we have to change the rest of the system stack to exploit it. What I'm going to show on this slide is one way of exploiting it; there are many different ways one can imagine. After the memory controller extracts a non-contiguous cache line from DRAM, it stores the data in a single physical cache line in the data store, instead of splitting it across multiple physical cache lines, and there are reasons why we do that. To identify these non-contiguous cache lines in the data store, we augment the tag store with the pattern ID. So each tag entry in the cache records the pattern ID with which that cache line was fetched from DRAM, and we show that this uniquely identifies each non-contiguous cache line.
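Tagging by pattern ID can be pictured as a cache keyed on the pair (line address, pattern ID), so the same address fetched with two patterns occupies two distinct entries. This is a deliberately tiny sketch with a hypothetical class name; it ignores sets, ways, replacement and coherence.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

@dataclass
class PatternTaggedCache:
    """Toy cache whose tag is (line address, pattern ID) rather than the address alone."""
    lines: Dict[Tuple[int, int], List[int]] = field(default_factory=dict)

    def lookup(self, address: int, pattern: int) -> Optional[List[int]]:
        # A hit requires both the address and the pattern ID to match.
        return self.lines.get((address, pattern))

    def fill(self, address: int, pattern: int, data: List[int]) -> None:
        # The whole gathered line goes into ONE physical cache line (one entry).
        self.lines[(address, pattern)] = data

cache = PatternTaggedCache()
cache.fill(0, 0, list(range(8)))         # contiguous line: values 0..7
print(cache.lookup(0, 3))                # None: same address, different pattern
cache.fill(0, 3, list(range(0, 32, 4)))  # strided line: every 4th value
print(cache.lookup(0, 3))                # [0, 4, 8, 12, 16, 20, 24, 28]
```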
This also means that when you have two cache lines, one with non-contiguous values and one with contiguous values, there can be a partial overlap between the two, and that requires some support from the cache to maintain coherence. We show in our paper that maintaining coherence is fairly straightforward given the math behind the mechanism. On the CPU side, we add two new instructions called pattload and pattstore, which are a way for the application to specify a strided access pattern to the processor. For example, the pattload instruction is similar to a load instruction: in addition to the register and the address, it also specifies the pattern ID with which the cache line has to be fetched. The CPU checks the on-chip cache to see if this particular cache line with that pattern is present. If it is not present, the request is sent to the memory controller, which uses the pattern to fetch the data from DRAM and sends the result back to the processor. [Tunjay]? >>: So if I understand correctly, if I have two threads accessing the same piece of data using different pattern IDs, does that mean the data I fetch will be duplicated in different cache lines? >> Vivek Seshadri: Absolutely, that's exactly what will happen. As long as both threads are only reading, it's not going to cause any problem. There will be a problem only when there is a write, and we show that on a write, each cache controller can locally invalidate the other overlapping cache lines. Now, there's a tradeoff here. Let's say one thread is accessing values with pattern 0 and the other thread is accessing values with pattern 3. I may be duplicating data in my cache, so I may not be using my caches very efficiently.
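The coherence rule described here (on a write, invalidate other cached lines that overlap) needs a way to tell whether two (address, pattern) lines share any DRAM cells. Under the assumed translation `address ^ (chip_id & pattern)` (our assumption; the talk only says one XOR and one AND), each line maps to one (chip, column) cell per chip, and two lines overlap exactly when those cell sets intersect. A hypothetical sketch:

```python
CHIPS = 8

def cells(address, pattern):
    """(chip, column) cells read by one command, assuming column = address ^ (chip & pattern)."""
    return {(chip, address ^ (chip & pattern)) for chip in range(CHIPS)}

def overlapping(cached_tags, address, pattern):
    """Other cached (address, pattern) lines sharing at least one cell with this one.
    These are the lines a write to (address, pattern) would have to invalidate."""
    mine = cells(address, pattern)
    return [tag for tag in cached_tags
            if tag != (address, pattern) and cells(*tag) & mine]

cached = [(0, 0), (1, 0), (0, 3), (4, 0)]
print(overlapping(cached, 0, 3))  # [(0, 0), (1, 0)]
```

The strided line (0, 3) shares cells with contiguous lines 0 and 1, but not with line 4, so only the first two would be invalidated on a write to it.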
That's a problem that we did not stress in our applications, but the benefits of having the data in the same physical cache line outweigh the cost. >>: Can I assume that all of the data belonging to one strided cache line is always going to be on the same page? >> Vivek Seshadri: Yes, yes. It depends on what stride you're looking at. We didn't look at really large strides. In our examples, we looked at strides less than 64, so the data will be within the same operating system page. >>: Because there are other CPU issues there, relating to protection mechanisms. >> Vivek Seshadri: Absolutely, absolutely. >>: Pages throughout the segments is still a [indiscernible]. >> Vivek Seshadri: No, absolutely. But yes, in our examples, we did not encounter it. If we look at really large strides, our mechanism does not support super-large strides, but they would also have problems like the ones you are mentioning with respect to protection and ->>: Even with a modest stride, if you're accessing something that's right at the end of the page, a single cache line might cross a page boundary, right? With a traditional one, it doesn't, because you specify a load of one word, you get whatever cache line that word is in, and cache lines divide evenly into pages. But if I have a stride of even 2, and happen to pick the last thing on the page, that will cross, right? >> Vivek Seshadri: It's a good question, but we are looking at power-of-2 strides, in a system where a cache line is a power of 2. >>: So it's the same. >> Vivek Seshadri: Yes, a cache line is a power of 2, and the operating system page is a power of 2. >>: I get it. Never mind. >>: This is a question, but you can tell me if you will answer it now or later, whatever. So you assume [indiscernible] that each value is stored in the memory only once. If it's replicated, it's replicated in your cache.
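The page-boundary answer can be checked under stated assumptions: 64-byte lines, 4 KB pages, 8 chips, patterns below 8, and the assumed translation `address ^ (chip & pattern)`. That translation only flips the low three bits of the line address, so every cell of a gathered line stays within one aligned 8-line (512-byte) block, which never straddles a page. Names are illustrative.

```python
LINE_BYTES = 64    # assumed cache line size
PAGE_BYTES = 4096  # assumed OS page size
CHIPS = 8

def page_of_line(line):
    return (line * LINE_BYTES) // PAGE_BYTES

def pages_touched(address, pattern):
    """OS pages spanned by one gathered line, assuming column = address ^ (chip & pattern)."""
    return {page_of_line(address ^ (chip & pattern)) for chip in range(CHIPS)}

# Exhaustive check over four pages' worth of line addresses and all patterns 0..7.
lines_to_check = 4 * PAGE_BYTES // LINE_BYTES
assert all(len(pages_touched(a, p)) == 1
           for a in range(lines_to_check) for p in range(CHIPS))
print(pages_touched(63, 7), pages_touched(64, 7))  # {0} {1}
```

Line 63 is the last line of page 0, yet its stride-8 gather stays on page 0, which is the power-of-2 alignment argument from the answer.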
You could take a completely different track, like take [indiscernible], for example. I could have two regions of memory, because you said memory is limited only by bandwidth: store the data row-wise, store it column-wise, and use your memory controller, whenever there are updates, to figure out how to update both locations simultaneously. And when you're reading, read whichever layout gives you the most useful data per cache line. So why not? >> Vivek Seshadri: I'll give you a one-sentence answer. The on-chip cache holds much less data, right? So to maintain consistency, we use the cache [code] I mentioned, and there may be cases where you're writing to a value and the replicated version is not even in the cache. But keeping two versions in memory consistent is a problem we actually looked at in a completely different setting, and the write amplification kills any performance that you can extract from the system. We'll take one last question on this and then move on. >>: Yes, you're saving energy by doing this. Are you also saving clocks? How long does it take for it to return, because these values are coming from different cache lines in the DRAM? Do you actually have to do individual cycles to get them together and then send it back, or does it take the same amount of time as a regular DRAM read? >> Vivek Seshadri: That's a good question. We actually save a lot on clocks, because when the memory controller issues a command, each chip does its operation simultaneously, so we get the strided cache line at almost the same latency as fetching any other cache line. So we're actually saving energy and gaining performance.
So let me quickly go into the evaluation and a summary of the results. We used a simulator to measure performance, the gem5 simulator, which is widely used by the architecture community, with a standard memory hierarchy, and for energy, we used tools that architects commonly use to evaluate system energy. For workloads, we used the in-memory database workload that I mentioned. There are two popular layouts for in-memory databases. One is a row store, where, like I mentioned, the values of the same record are stored together. Then we have a column store, where the values of the same column are stored together. And finally, we have gather-scatter DRAM, where we use an underlying row-store layout but can fetch data in both row-major order and column-major order. I'm assuming that the table fits the power-of-2 restriction that our mechanism has. For the workloads, we have transactions, which read or update many values within one or a few records, and we have analytics, which look at the data within a field but across many, many rows. And more recently, there is a hybrid workload, where both transactions and analytics run on the same version of the database. It's well known that row stores are good for transactions and column stores are good for analytics, but neither one is good for both. I am going to show you that gather-scatter DRAM can potentially obtain the best of both worlds. So here is a description of the workloads. We use a single database, and we are just looking at its storage engine: not at concurrency control and all those aspects, just the throughput of the storage engine. We use a single table with a million records for the transactions. Each transaction operates on a randomly chosen record, with a varying number of read-only, write-only and read-write fields.
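Why row stores suit transactions and column stores suit analytics comes down to how many cache lines each access pattern pulls in. The idealized count below assumes a hypothetical record of eight 8-byte fields (one 64-byte line per record) and ignores caching and prefetching; it is not the talk's evaluation, only an illustration of why GS-DRAM can match the better layout in each case.

```python
LINE_VALUES = 8  # 8-byte values per 64-byte cache line

def lines_for_transaction(layout, fields_touched):
    """Cache lines fetched to access `fields_touched` fields of ONE record.

    Hypothetical setup: 8 fields of 8 bytes per record, so a record is one cache line.
    """
    if layout in ("row", "gs-dram"):
        return 1               # the whole record sits in one cache line
    return fields_touched      # column store: each field lives in its own column array

def lines_for_analytics(layout, num_records):
    """Cache lines fetched to scan ONE field across `num_records` records."""
    if layout == "row":
        return num_records     # one line per record, only 1/8 of it useful
    # Column store, or GS-DRAM gathering a stride-8 pattern: 8 useful values per line.
    return -(-num_records // LINE_VALUES)  # ceiling division

for layout in ("row", "column", "gs-dram"):
    print(layout, lines_for_transaction(layout, 4), lines_for_analytics(layout, 1_000_000))
# row 1 1000000
# column 4 125000
# gs-dram 1 125000
```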
For analytics, we use a simple arithmetic sum of all the values within a column, and the hybrid workload has two threads running on the same system: one doing transactions and the other performing analytics. >>: Have you mapped any real-world workloads to this type of workload? How would this map to real workloads? >> Vivek Seshadri: If you look at any traditional database engine, this is a problem they are dealing with today through various other means, but we believe this approach can be better. We didn't run a real database engine on top of our mechanism, because just setting it up and running it would have taken me much longer. So this is just to demonstrate the benefits of our scheme. Okay, so on this slide, I'm showing the results for the transaction workload. On the left, we have throughput in millions of transactions per second, and on the right, we have the energy consumed by the different mechanisms to run 10,000 transactions, and there are three mechanisms. As is clear, for transactions, the row store is significantly better than the column store: around a 3X improvement in throughput, and GS-DRAM achieves almost the same throughput as the row store. The results for energy are similar: a 3X improvement in energy compared to the column store. On this slide, I show the results for the analytics query. On the left, we have the execution time, the time it took the system to complete the analytical query, and on the right, we again have the energy consumed by the query itself, end to end. Again, the story is very similar to the previous slide. The column store is much better than the row store here, and GS-DRAM achieves almost the performance of the column store. That's a 2X reduction in execution time compared to the row store, and the story is very similar for energy. Question?
>>: Just when you're saying energy -- is that just the memory system, or energy across the whole system? >> Vivek Seshadri: Energy, including the CPU, caches, the memory channel and DRAM. Having said that, I must tell you, there are two components where we gain the energy benefits. One is that we are transmitting far fewer cache lines than, in this case, the row store. And the second is that we are reducing execution time, which means the amount of energy that we consume in the process is also lower. >>: Okay, so -- but can I -- I don't know how accurate energy simulations are, but if that simulation is accurate, how does that play into my [indiscernible]. Am I really looking at a 2X power reduction? >> Vivek Seshadri: So you wouldn't. Power is altogether a different story, because we don't change anything to reduce peak power or average power consumption. The power consumption will be almost the same. It's simply ->>: Because you've got a little bit of extra logic that's running on the ->> Vivek Seshadri: You could actually argue that the power [indiscernible] because we are stalling less on memory. So you could actually argue that ->>: And you're smaller in this ->> Vivek Seshadri: The power consumption actually increases. >>: Well, for a given workload -- for a given workload, are you telling me that my overall server energy budget will be halved? >> Vivek Seshadri: If this is the only workload you're running on the system, that is, if you are doing this all the time. And having said that, I must also warn you, we are just looking at the storage engine here. There is a lot of other logic surrounding the database which we have not looked at. So I will ->>: You have more power on the separates. >>: I know, I know. >>: It's just memory. >>: You won't really save 2X of the energy on the entire system, right?
This isn't the Ethernet control where we're in ->>: I guess what I'm saying is, we don't even know how much this is [indiscernible]. >> Vivek Seshadri: Yes. Yes. Okay, so this is the hybrid workload. Again, like I said, it's running two threads, one transaction thread and one analytics thread. On the left, I am showing the throughput for the transactions, and on the right, I am showing the execution time for the analytics thread. It turns out that here, the row store is actually not performing as well as GS-DRAM. In fact, GS-DRAM outperforms both the row store and the column store. I'm not going to get into the details of why this is happening; it's related to memory system interference. For analytics, the results are as expected: GS-DRAM is able to perform as well as the column store and much better than the row store. So really, the summary is, with this mechanism for the database storage engine, we can potentially obtain the best of both the row store and column store layouts. >>: In that case, your coherence is actually going to have to shoot down cache lines, right? >> Vivek Seshadri: Yes. >>: Which was not true in either of the other ones. >> Vivek Seshadri: Yes. >>: Okay. And even with that, it still doesn't ->> Vivek Seshadri: Yes. >>: Cool. >> Vivek Seshadri: So the simulator actually models the ->>: Yes, I assumed that you did. Otherwise, it would be bogus. >>: Sorry, one more question on that. Are you simulating a multi-core, multi-socket system? >> Vivek Seshadri: So it's a single-socket but multi-core system. >>: But you have a unified cache. >> Vivek Seshadri: Yes, we have a unified cache. The last level ->>: If you have a big multi-socket server, and now you're going to see more coherence misses simply because these strided sets are contending when they previously wouldn't contend, have you thought about the impact that would have on scalability? >> Vivek Seshadri: So we have thought about it. So here is the question we have to ask, right?
In the baseline system, we are sending more requests to DRAM to fetch the data. And in our system, we may potentially be invalidating more lines and causing more coherence misses. So now, it's a question of which is more costly: is a potential coherence miss more costly, or is an extra DRAM access more costly? The tradeoff may be different for different systems. In this particular case, we found that coherence misses are almost nonexistent, because my analytics thread is running on some piece of data. How likely is it that a transaction is going to update the exact piece of data that I am operating on right now, right? So we actually didn't see many coherence misses in this particular case. Okay. So just to summarize this part of the talk, there are many data structures, such as those in databases, whose data accesses exhibit multiple access patterns. And depending on how the data is laid out, only one of them experiences good spatial locality. We propose gather-scatter DRAM, where the memory controller gathers or scatters values with different strided access patterns, and we show that it can achieve near-ideal bandwidth and cache utilization for power-of-2 strides. I showed an example of an in-memory database, where GS-DRAM provided the best of both the row store layout and the column store layout. This paper was published at MICRO 2015, so if you want, you can look at the paper for more details. Okay, now, before I jump into the second part of the talk, how much more time do I have? >> Amar Phanishayee: I think you should just dive in right now. >> Vivek Seshadri: So for this part of the talk, we are going to dive into the details of how DRAM actually operates. So here is a DRAM module. Now, let's zoom into a DRAM chip, sufficiently far, because a DRAM chip itself is a hierarchy of structures.
So if you go to the lowest level, this is what each DRAM chip contains: a 2D array of DRAM cells connected to components called sense amplifiers. Now, the DRAM cells themselves are extremely small, so we need some other component to actually extract the state of a DRAM cell, and that's what the sense amplifier does. So what I'm going to do in the next slide is look at a single cell and sense amplifier combination and see how it operates. And then we will see how we can exploit that operation to perform more complex things using DRAM. So this is the connection. A DRAM cell contains two components. One is the capacitor, which stores the data, and the other is an access transistor, which determines whether the cell is currently being accessed or not. The access transistor itself is controlled by a signal called the wordline, and like I mentioned before, we have this sense amplifier component. I'll describe how the sense amplifier operates in a minute. The sense amplifier has an enable signal which determines whether it's currently active or not, and the cell and the sense amplifier are connected by a wire called the bitline. So in the initial state, both the wordline and the sense amplifier are disabled, and both ends of the sense amplifier, the bitline and the other end, are maintained at a voltage level of half VDD, which is midway between the lowest and the highest voltages, and we are going to assume the capacitor is fully charged. So to access data from this capacitor, we first raise the wordline, which connects the capacitor to the bitline. The capacitor is fully charged, which means it's at the voltage level of VDD, and just like water, which flows from higher levels to lower levels, charge will flow from a higher voltage level to a lower voltage level.
So in this case, the charge is going to flow from the capacitor to the bitline, and it's going to increase the voltage level on the bitline slightly. In this state, if we enable the sense amplifier, it is going to detect the deviation on the bitline. Essentially, it compares the top and the bottom, sees that the top is slightly higher than the bottom, and amplifies the deviation until it reaches the extremes, where the top becomes VDD and the bottom becomes zero. And since the cell is still connected to the bitline, it also replenishes the charge on the cell. So this is the scheme that modern DRAMs use to access data. Now, let's look at what happens with two cells connected to the same sense amplifier. Let's say we want to copy data from the top cell to the bottom cell. Initially, we activate the top cell, which we call the source, and it goes through the operation that I just described in the previous slide; the cell is fully charged at the end of it, and the bitline is now at VDD. Now, in this state, what DRAMs typically do today is perform an operation called precharge, which takes the bitline back to half VDD. But instead of doing that, we are going to activate the destination directly. This lowers the wordline on the source, locking the charge into the source cell, and connects the destination cell to the sense amplifier. But since the sense amplifier is already in a stable state and the cell is so small, the cell cannot switch the state of the sense amplifier. Instead, the data on the sense amplifier gets written into the cell, so the cell becomes fully charged. You can convince yourself that for any possible initial state, this results in the data being copied from the source to the destination, and imagine this happening across an entire row of DRAM cells.
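The activate, sense and restore sequence, and the copy trick of activating the destination without an intervening precharge, can be captured in a toy Python model. This is a sketch under stated assumptions: VDD = 1.0 and a bitline with six times a cell's capacitance are illustrative values, not from the talk, and `Subarray` and `row_copy` are hypothetical names. The model tracks voltages only, not timing.

```python
# Toy model of one DRAM subarray: rows of cells sharing a row of sense
# amplifiers. Assumed values: VDD = 1.0 and a bitline with 6x the cell's
# capacitance (illustrative; real ratios vary).
VDD = 1.0
CELL_CAP = 1.0
BITLINE_CAP = 6.0


class Subarray:
    def __init__(self, rows):
        self.rows = [[float(v) for v in row] for row in rows]  # cell voltages
        self.width = len(self.rows[0])
        self.precharge()

    def precharge(self):
        # Lower all wordlines, disable the sense amps, bitlines back to VDD/2.
        self.bitlines = [VDD / 2] * self.width
        self.sensed = False

    def activate(self, r):
        row = self.rows[r]
        if not self.sensed:
            for i, v in enumerate(row):
                # Charge sharing: cell and bitline settle to a common voltage.
                shared = (CELL_CAP * v + BITLINE_CAP * self.bitlines[i]) / (
                    CELL_CAP + BITLINE_CAP)
                # Sense amp amplifies the small deviation to a full 0 or VDD.
                self.bitlines[i] = VDD if shared > VDD / 2 else 0.0
            self.sensed = True
        # The connected cell follows the bitline: restored if it was just read,
        # overwritten if the sense amps were already latched (the copy case).
        for i in range(self.width):
            row[i] = self.bitlines[i]


def row_copy(sub, src, dst):
    # ACTIVATE src, then ACTIVATE dst with no PRECHARGE in between.
    sub.precharge()
    sub.activate(src)
    sub.activate(dst)
    sub.precharge()


sub = Subarray([[1, 0, 1, 1], [0, 1, 0, 0]])
row_copy(sub, 0, 1)
print(sub.rows[1])  # [1.0, 0.0, 1.0, 1.0]
```

The source row is restored by its own activation, and the destination simply takes on whatever the latched sense amplifiers hold.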
That's what happens in today's systems, right? So we can actually do a multi-kilobyte-wide copy operation using this simple scheme. Now, let's look at three cells. So we started with one, then two. Now, let's look at three cells connected to the same sense amplifier. What we are going to do is connect all three cells simultaneously to the sense amplifier, right? And here, two cells are fully charged and one cell is fully empty, so the effective voltage level on the three cells is two-thirds VDD, which is still higher than half VDD, the voltage we have on the bitline, right? So when we make this connection, at the end of the charge sharing phase, where the charge flows from the cells to the bitline, there is still going to be a positive deviation on the bitline, and if we enable the sense amplifier in that state, it's going to push the bitline to VDD and also all three cells to the fully charged state. Now, if you look at this particular operation, let's call the final state of the bitline the output, and let's use A, B and C to denote the initial states of the three cells; we can derive the final state as AB + BC + CA. This is essentially the majority function, right? If at least two cells are fully charged, the final state will be one. Otherwise, the final state will be zero. It turns out that using Boolean algebra, we can rewrite this expression as C(A + B) + (NOT C)(AB). What this expression tells us is that if I can control the initial value of cell C, then we can use this majority operation to perform a bitwise AND or a bitwise OR of the remaining two cells: setting C to zero gives A AND B, and setting C to one gives A OR B. Again, imagine this operation happening across an entire row of DRAM cells, so essentially we have a mechanism to perform a multi-kilobyte-wide bitwise AND or bitwise OR operation. Now, to quickly complete the picture, let's look at how we can perform negation using the sense amplifier.
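The majority identity and the role of the control cell can be checked exhaustively. This minimal sketch assumes equal cell capacitances and ideal sensing; `triple_row_activate`, `bulk_and` and `bulk_or` are hypothetical names used only for illustration.

```python
from itertools import product

VDD = 1.0


def triple_row_activate(a, b, c):
    """Toy model: bits a, b, c share charge with a bitline precharged to VDD/2.
    With equal cell capacitances, the deviation is positive exactly when the
    cells' average voltage exceeds VDD/2, i.e. when at least two cells hold 1,
    regardless of the bitline capacitance."""
    average = (a + b + c) * VDD / 3
    return 1 if average > VDD / 2 else 0


# Check: triple-row activation computes AB + BC + CA, which equals
# C(A + B) + (NOT C)(AB).
for a, b, c in product((0, 1), repeat=3):
    majority = (a & b) | (b & c) | (c & a)
    rewritten = (c & (a | b)) | ((1 - c) & (a & b))
    assert triple_row_activate(a, b, c) == majority == rewritten


def bulk_and(row_a, row_b):
    # Control row C initialized to all zeros selects AND.
    return [triple_row_activate(a, b, 0) for a, b in zip(row_a, row_b)]


def bulk_or(row_a, row_b):
    # Control row C initialized to all ones selects OR.
    return [triple_row_activate(a, b, 1) for a, b in zip(row_a, row_b)]


print(bulk_and([1, 1, 0, 0], [1, 0, 1, 0]))  # [1, 0, 0, 0]
print(bulk_or([1, 1, 0, 0], [1, 0, 1, 0]))   # [1, 1, 1, 0]
```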
Like I told you before, the sense amplifier has two parts. In the stable state, the bottom part is always the negation of the top part. So if we had a mechanism to somehow connect a cell both to the top and to the bottom, that would be great, which is why we use the notion of a dual-contact cell. A dual-contact cell is essentially a two-transistor, one-capacitor cell. It's used in many other schemes as well. One transistor connects the cell to the bitline. The other transistor connects the cell to the other side of the sense amplifier. We call the wordline that controls the transistor connecting the cell to the bitline the regular wordline, and the other wordline the negation wordline. Now, let's look at how we can take a source cell and store the negation of the source cell in the dual-contact cell. Initially, we activate the source cell, which goes through the series of steps that I described a couple of slides before, and at the end of the whole operation, the source cell will be fully charged. The bitline will be at VDD. But the other side of the sense amplifier will be at zero, which represents the negation of the data that was in the source cell. In this state, we activate the negation wordline, which connects the dual-contact cell to the other side of the sense amplifier, and this drains out all the charge in the dual-contact cell, so we have the negation stored in the dual-contact cell. So really, what we have is these 2D arrays of DRAM cells, and we can perform copy operations between rows. We can do bitwise AND and bitwise OR, and we can do NOT, right? So if you put all these operations together, we essentially have a scheme where we can do any bitwise logical operation at a large granularity within DRAM.
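Putting the primitives together, here is a minimal sketch that models whole rows as Python integers: AND and OR come from bitwise majority with an all-zeros or all-ones control row, and NOT models the dual-contact cell capturing the complementary side of each sense amplifier. The 8-bit row width and all function names are assumptions for illustration; real rows are kilobytes wide.

```python
# Rows modeled as Python ints; assumed 8-bit rows (real rows are kilobytes).
WIDTH = 8
ONES = (1 << WIDTH) - 1


def row_maj(a, b, c):
    # Bitwise majority: what triple-row activation produces in every column.
    return (a & b) | (b & c) | (c & a)


def row_and(a, b):
    return row_maj(a, b, 0)      # control row of all zeros


def row_or(a, b):
    return row_maj(a, b, ONES)   # control row of all ones


def row_not(a):
    # Activate the source, then the negation wordline: the dual-contact
    # cells capture the other side of each sense amp, i.e. the complement.
    return ~a & ONES


def row_nand(a, b):
    # AND, OR and NOT together are functionally complete, so any other
    # bitwise operation can be composed from them (NAND shown here).
    return row_not(row_and(a, b))


print(format(row_nand(0b11001100, 0b10101010), "08b"))  # 01110111
```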
>>: For the first three operations, you don't need any changes to the architecture of the DRAM cells, but for NOT, you do need some process change. Is it some transistor that's already present at the sense amp that you can reuse? >> Vivek Seshadri: That's a great question. So what we actually show is that for the dual-contact cell, we need two transistors. So what we can do is just not use one row of capacitors to store data, and instead repurpose that row of transistors to act as the negation transistors for the dual-contact cells. That's a good question. But you are right. For the first three mechanisms, we only need changes to [indiscernible] the row decoder that sits in the periphery of the DRAM. Okay, so just to look at how the scheme can perform, in this slide, I am going to compare the throughput of our mechanism with what we can achieve using existing systems. What we are doing is taking an Intel system and running a microbenchmark that performs a bitwise AND of two bit vectors, A and B, and stores the result in a third bit vector, C, and we repeat this operation and measure the throughput that we can achieve for this particular operation. On the X-axis here, we are increasing the size of the bit vector, starting from 32 kilobytes all the way up to 32 megabytes. So the working set of the application is roughly three times this size, because we have three such vectors. And the Y-axis shows the throughput of performing bitwise AND or OR for this benchmark in terms of gigabytes of result produced per second. So this is the throughput that you can achieve with one core. Before dissecting this result, let's look at what happens if we have two cores. With two cores, I'm getting much higher throughput on the left-hand side. With four cores, I'm getting even higher throughput.
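A rough Python analogue of the microbenchmark shows the shape of the experiment: repeatedly compute C = A AND B and report gigabytes of result produced per second as the vector size grows. This is only a sketch; Python's big-integer AND stands in for a vectorized C loop, interpreter overhead dominates small sizes, and the absolute numbers and cache steps will not match the slide. `bitwise_and_throughput` is a hypothetical name.

```python
import os
import time


def bitwise_and_throughput(vector_bytes, repeats=20):
    """Compute C = A & B `repeats` times; return (C, GB of result per second).
    A, B and C together form a working set of about 3 * vector_bytes."""
    a = int.from_bytes(os.urandom(vector_bytes), "little")
    b = int.from_bytes(os.urandom(vector_bytes), "little")
    c = 0
    start = time.perf_counter()
    for _ in range(repeats):
        c = a & b
    elapsed = time.perf_counter() - start
    gigabytes = vector_bytes * repeats / 1e9
    return c, (gigabytes / elapsed if elapsed > 0 else float("inf"))


# Sweep vector sizes from 32 KB to 32 MB, the range on the slide.
for kb in (32, 256, 2048, 32768):
    _, gbps = bitwise_and_throughput(kb * 1024)
    print(f"{kb:>6} KB vectors: {gbps:.2f} GB/s of result")
```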
So the step function is the result of the multiple levels of on-chip caches that you have. Essentially, if you look at the four cores, this is where the entire working set fits into the L1 caches of all four cores. This is when it fits into the L2 cache, and this is when it fits into the L3 cache. The key here is that the moment the working set stops fitting within any level of the on-chip cache, the throughput that you can achieve with any number of cores is almost the same. It doesn't matter whether you have one, two or four cores. You have to go to memory, which means you are memory-bandwidth-bound. With Buddy RAM, we can achieve close to 10 times the throughput of just going to memory, and this is just using a single bank, with the mechanism I showed. DRAMs typically have multiple banks that can be accessed simultaneously. If we employed more banks to perform the DRAM operations, we could get even higher throughput. Right. So in the previous slide, I showed the results for bitwise AND and OR. Here, I am summarizing the results for all bitwise operations. On the left, I have the same throughput result but for different bitwise operations, comparing the throughput of Buddy RAM with the throughput you can achieve when the working set does not fit in any level of the on-chip cache. As you can see, for NOT, you get the highest throughput benefit. When you have more complex operations, like XOR and XNOR, the improvement is not as large, but it's still close to 4X the throughput that you can achieve with [indiscernible] systems. And this is, again, using just one bank. And the bigger story is that we can achieve significant reductions in energy, because we are avoiding all the memory transfers on the data channel, so essentially it's a very, very efficient mechanism for performing bitwise operations. And we looked at some applications for this DRAM substrate. One is that we can simply use it to implement set operations.
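One plausible way to see why XOR and XNOR gain less than the simpler operations is that they must be composed from several primitives. The sketch below counts abstract in-DRAM primitives under that assumption. The talk does not give the actual command sequence, each real AND or OR also involves row copies that are not counted here, and all names are hypothetical.

```python
WIDTH = 8
ONES = (1 << WIDTH) - 1
op_count = 0  # number of abstract in-DRAM primitive operations issued


def _issue():
    global op_count
    op_count += 1


def row_and(a, b):
    _issue()
    return a & b


def row_or(a, b):
    _issue()
    return a | b


def row_not(a):
    _issue()
    return ~a & ONES


def row_xor(a, b):
    # XOR = (A OR B) AND NOT (A AND B)
    return row_and(row_or(a, b), row_not(row_and(a, b)))


def row_xnor(a, b):
    return row_not(row_xor(a, b))


op_count = 0
x = row_xor(0b1100, 0b1010)
xor_ops = op_count

op_count = 0
y = row_xnor(0b1100, 0b1010)
xnor_ops = op_count

print(format(x, "08b"), xor_ops)   # 00000110 4
print(format(y, "08b"), xnor_ops)  # 11111001 5
```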
So if we represent a set using bit vectors, and if the operations mostly involve insert, lookup, union, intersection and difference, then we can show that this approach may be much better than a traditional red-black-tree-style implementation of sets. And we actually looked at a real-world case where this may be useful: in-memory bitmap indices. You can think of these as a replacement for B-tree-based indices, and they are popularly used by databases. So if I am a website and I am tracking millions of users, then instead of having B-trees and other kinds of indices to track different attributes, I'll just use a bit vector to denote each attribute of the users. For example, is the user male? I can use a bit vector to represent that. Is the user under 18 years of age? I can use another bit vector to represent that. Did the user log in to the system on this particular day? Did the user upload a photo to my webpage? So you can use bit vectors to track all these pieces of information, and you can have interesting queries on the database, which essentially involve performing bitwise operations on these different bit vectors. And we show that Buddy RAM can improve the query performance for some of these queries by close to threefold. All right, so ->>: On query performance, because I've seen how you can -- so you have a great big bit vector. You do an AND. But the query then is going to say, find me all the set bits, which is going to end up sucking the cache lines out of memory anyway. >> Vivek Seshadri: That's a great point, right? We don't have a mechanism to do bit count, so what if my query involves bit count? So in fact, the query that we looked at -- so again, it really depends on how often you are performing these bit-count operations as opposed to bitwise operations.
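The bitmap-index query discussed here can be sketched with Python integers as bit vectors: one bitmap per attribute, a chain of bulk ANDs, then a single bit count. The user table and attribute names are hypothetical. In the Buddy RAM setting, the ANDs are the part that could run inside DRAM, and only the final bitmap (or its count) would need to cross the channel.

```python
def build_bitmap(users, predicate):
    """Bit i is set iff user i satisfies the predicate."""
    bits = 0
    for i, user in enumerate(users):
        if predicate(user):
            bits |= 1 << i
    return bits


# Hypothetical user table (attribute names are illustrative).
users = [
    {"male": True, "age": 16, "logged_in": True, "uploaded": True},
    {"male": False, "age": 15, "logged_in": True, "uploaded": True},
    {"male": True, "age": 30, "logged_in": True, "uploaded": True},
    {"male": True, "age": 17, "logged_in": True, "uploaded": False},
    {"male": True, "age": 12, "logged_in": True, "uploaded": True},
]

male = build_bitmap(users, lambda u: u["male"])
under_18 = build_bitmap(users, lambda u: u["age"] < 18)
logged_in = build_bitmap(users, lambda u: u["logged_in"])
uploaded = build_bitmap(users, lambda u: u["uploaded"])

# Bulk ANDs across all users, then one population count.
matches = male & under_18 & logged_in & uploaded
count = bin(matches).count("1")
print(format(matches, "05b"), count)  # 10001 2
```

Set operations on bit-vector sets map the same way: union is `|`, intersection is `&`, and difference is `a & ~b`.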
In fact, the query that we looked at is doing a bunch of -- so let's take a query where I'm looking at how many male users under the age of 18 logged in to my website in the past week and uploaded a photo. You're going to perform a bunch of bitwise operations on many, many bit vectors and then send the result to the processor. So the amount of data that you're transporting is actually much lower than what you would transfer in the baseline. It's a great point. So just to summarize, DRAM can be more than just a storage device. We have looked at [indiscernible] different techniques to perform different operations inside DRAM, and the bottom line is that we aim to achieve high performance at low cost: getting order-of-magnitude improvements in performance for the respective operations while incurring very few changes inside the DRAM chips. All right, do I have time? Okay. So are there any questions? >>: Keep going. Just keep going. >> Vivek Seshadri: So in the last part of the talk, I'll just present a quick overview. It's essentially a list of other work that I've done at CMU, and then I'll go over some of the future directions that I'm planning to look at next. So these are the works that are part of my PhD thesis. I essentially presented the first three mechanisms. I should probably have changed this "ISCA, under submission" label: we got the results back, and it didn't get in. So these are the other two components of my PhD thesis. One is called Page Overlays, which I presented at ISCA last year. The idea here is that existing virtual memory frameworks operate at page granularity, and we show that they are inefficient at performing certain fine-grained memory operations. Just take copy-on-write: copy-on-write happens at page granularity, so even if I modify a single byte within a page, it results in a full-blown page copy. So essentially, this framework looks at whether we can make such operations more efficient.
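To put numbers on the copy-on-write point, this sketch compares the bytes copied when the copy unit is a whole page versus a single cache line. It assumes 4 KB pages and 64-byte cache lines, and it only models copy granularity; it is not the Page Overlays mechanism itself. `cow_bytes_copied` is a hypothetical helper.

```python
# Assumed sizes: 4 KB pages and 64-byte cache lines.
PAGE_SIZE = 4096
LINE_SIZE = 64


def cow_bytes_copied(write_offsets, granularity):
    """Bytes copied if the first write to each `granularity`-sized unit of a
    shared page triggers a private copy of just that unit."""
    units = {offset // granularity for offset in write_offsets}
    return len(units) * granularity


writes = [0, 10, 100, 4000]  # byte offsets written within one shared page
print(cow_bytes_copied(writes, PAGE_SIZE))  # 4096
print(cow_bytes_copied(writes, LINE_SIZE))  # 192
print(cow_bytes_copied([7], PAGE_SIZE), cow_bytes_copied([7], LINE_SIZE))  # 4096 64
```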
Because the hardware inherently works at cache-line granularity, the processor can track which cache lines have been modified, and we can use that capability to enable a more efficient framework. That's what this particular idea is looking at. The Dirty-Block Index attacks another problem, which is present in the mechanisms that I presented. Let's say we are performing an in-memory operation: say I'm performing a bitwise operation on two source rows and then storing the result in a third row in DRAM. What if the on-chip cache has some dirty blocks from those source rows? Before performing the bitwise operation, we have to flush the dirty data of the source rows from the on-chip caches into DRAM. So essentially, the flushing is on the critical path of performing the bitwise operation in memory. What the Dirty-Block Index allows us to do is accelerate this flushing: it's a scheme where we can identify the dirty blocks of a particular DRAM row very efficiently and also flush them to DRAM very efficiently. In the early years of my PhD, I looked at some mechanisms to improve on-chip cache utilization; these are essentially algorithms to control cache insertion and algorithms to manage prefetched blocks in on-chip caches. I've also done a bunch of work in collaboration with other PhD students, looking at practical data compression for modern memory systems. This is in collaboration with Gennady Pekhimenko, who I believe interviewed here a few weeks ago. And I have also looked at problems with respect to slowdown estimation. Essentially, when multiple applications are running on the same system, applications get slowed down because there is interference at shared resources.
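The core idea of grouping dirty-bit information by DRAM row can be sketched as a per-row bit vector, so that finding every dirty line of a row is one lookup instead of a scan of the cache tags. This is a minimal sketch under stated assumptions: 64-byte lines and 8 KB rows are assumed sizes, and `DirtyBlockIndex` and its methods are hypothetical names, not the paper's hardware design.

```python
# Assumed sizes: 64-byte cache lines, 8 KB DRAM rows (128 lines per row).
LINE_SIZE = 64
ROW_SIZE = 8192


class DirtyBlockIndex:
    """Keeps one dirty bit vector per DRAM row, so the dirty lines of a row
    can be found without scanning every cache tag."""

    def __init__(self):
        self._dirty = {}  # row number -> int bit vector of dirty lines

    @staticmethod
    def _locate(addr):
        return addr // ROW_SIZE, (addr % ROW_SIZE) // LINE_SIZE

    def mark_dirty(self, addr):
        row, line = self._locate(addr)
        self._dirty[row] = self._dirty.get(row, 0) | (1 << line)

    def mark_clean(self, addr):
        row, line = self._locate(addr)
        bits = self._dirty.get(row, 0) & ~(1 << line)
        if bits:
            self._dirty[row] = bits
        else:
            self._dirty.pop(row, None)

    def flush_row(self, row, writeback):
        """Write back every dirty line of `row`, e.g. before an in-DRAM
        bitwise operation reads that row."""
        bits = self._dirty.pop(row, 0)
        line = 0
        while bits:
            if bits & 1:
                writeback(row * ROW_SIZE + line * LINE_SIZE)
            bits >>= 1
            line += 1


dbi = DirtyBlockIndex()
for addr in (8192 + 128, 8192 + 64, 0):
    dbi.mark_dirty(addr)
flushed = []
dbi.flush_row(1, flushed.append)
print(flushed)  # [8256, 8320]
```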
Now, it turns out that the slowdown actually depends on which applications you are running, so there is not just slowdown, but also some unpredictability in the slowdowns, depending on which applications are running. So can we enable hardware support to allow the operating system to understand what your slowdown is going to look like? Those are the mechanisms that we looked at in collaboration with Lavanya, who is currently an intern. And I've looked at some other low-latency DRAM architectures with other students, primarily Yoongu Kim and Donghyuk. Donghyuk was a researcher at Samsung for several years, and it's from him that we gained all the DRAM knowledge in the group. So for future directions: in the near future, there are definitely some works that have come out of my thesis that I would like to look at, essentially extending our mechanisms to the full system, but these are directions resulting from some imminent trends in architecture. The first one is new memory technologies. There are a bunch of different technologies that are mostly in research now, but that are also imminent. These technologies have two characteristics. One, they are non-volatile, so data is persistent, unlike in DRAM, and two, they have some challenges with respect to their read-write characteristics, both in terms of performance and energy. So essentially, these are non-volatile memory technologies that can be accessed using load/store primitives. But today, if you look at any level of persistent storage in the system, it is mostly block access: SSD [indiscernible] hard drives. So how will these technologies affect the way we think about applications, right? If you have a database, do you still have to write logs, or how does it change existing systems? The second direction is heterogeneity.
We already have a lot of heterogeneity in the system, like I mentioned before. We have CPUs and GPUs in the same system. Intel is probably going to put an FPGA on chip, and we are describing all of these processing-in-memory mechanisms, Buddy RAM and gather-scatter DRAM. If a system has all these different computing resources, how does the operating system determine how to schedule a particular task? And heterogeneity is also seeping into memory, right? Today, memory is mostly monolithic: we have one kind of DRAM sitting in the system, but in the future, you can expect fast, low-latency DRAM, high-bandwidth DRAM and low-power DRAM all put in the same system. With this amount of heterogeneity, how does, A, the programmer specify what the application should do? Because unlike heterogeneity in a NUMA system or a multi-socket system, where the architecture is still homogeneous, here we may have different compute units which require different pieces of code to run. So how does the programmer specify what the application should be doing, and how should the compiler compile a single piece of code to different architectures? And how does the operating system schedule different applications on these different architectures? I think there are many, many interesting questions to be answered in this space. And finally, something that has picked up in many places, including at Microsoft, is application-specific system design: if I know my application, and I know this is the most important workload that's going to be running on a large system, can I think about the system end to end and modify both hardware and software to extract the maximum efficiency out of the system, right? Microsoft Research here has done that for Bing search already, with the Catapult system, and now they are looking at expanding this to other areas.
So again, I would like to look at problems in this space and at how I can use my architecture knowledge to contribute ideas here. So that's it. Thank you for hearing me talk. Questions? >>: So have you had time to talk to hardware vendors, and can you comment on their reaction to GS-DRAM, particularly how expensive they think this would be for them, or whether it would be easy for them to add this new logic to their modules? What's their sense? What was the reaction of industry to this idea? >> Vivek Seshadri: I'll give you a two-part answer. So one, throughout the research, we have been in touch with some DRAM manufacturers, like designers of DRAM and DRAM research teams, and here is what we hear from them. From a feasibility perspective, there are no challenges here, so all the mechanisms are feasible to implement in DRAM, but they are going to increase cost. They are going to increase cost because there are some yield implications to our mechanisms. Let's say we have Buddy RAM, which means we have one more feature in the DRAM, which also means that there may be many more modules that fail because they don't support this feature. So really, the question here is, what is the killer application? That's the question that they ask us. And we go and tell them, hey, in-memory database bitmap indices. But that's not the vision for them. They want some bigger vendor, like Microsoft or Google, to actually tell them, hey, we really care about this. This is what I think research will show: there are some really important applications which can benefit significantly from these ideas, and that will push the DRAM manufacturers to actually go and implement some of these things. Having said that, there was a keynote talk at MICRO this year that I was present in the [indiscernible].
This was from Thomas Pawlowski from Micron; he was giving a keynote on where he thinks new memory technologies are going, and there he mentioned ideas like gather-scatter DRAM. So I think from that perspective, we really think these are easily implementable mechanisms in DRAM. We really need the killer applications to push the manufacturers to do it. >>: The [indiscernible] or did you ->> Vivek Seshadri: So I was ->>: I'm not going to ask you where I can buy it. >> Vivek Seshadri: Well, I was saying Intel is probably going to put FPGAs on chip. You may not get a chip today, but maybe in the near future. >>: So if that happens, that's sort of a more general mechanism, so then you don't need the kind of -- >> Vivek Seshadri: FPGA on chip? So I think this actually goes back to his point, his question initially, where at the end of the day, the real bottleneck is actually taking the data from the DRAM cells and pushing it over the channel. Now, you can increase the bandwidth on the channel and put in an FPGA or whatever piece of logic you want. That is going to be good for some operations, and definitely, the mechanisms that I have described here have limitations, in the sense that you cannot do every single computation using them. But at the end of the day, any system that separates memory and computation and has a channel in between will eventually become bandwidth-bottlenecked, because we always want more computation and more data. >>: Right, there are plenty of apps that when things get stuck on disks, things get stuck on -- someone will be. You may [indiscernible]. >> Vivek Seshadri: So ->>: You said any application will. >> Vivek Seshadri: I was being really strong, so point taken, point taken. >>: I'm just quibbling. >> Vivek Seshadri: Point taken. >>: In the first part of your work, you suggested you would augment the tag RAMs, and typically, cache accesses are on the critical path that sets a processor's frequency.
Does your technique impact frequency? Did you analyze that aspect? >> Vivek Seshadri: So just to repeat his question: we are adding this pattern ID to the on-chip tags. Does that have any effect on the cache access latency? Essentially, what our mechanism does is add a few additional bits to the tag itself, so if your tag was 32 bits, with three additional bits of pattern ID, it becomes 35 bits. We did some simulations using CACTI, which is a cache area and latency estimation tool, and we did not find it increasing the latency. >>: Is it as simple as that? I thought that because you're accessing words from different areas and putting them in the same cache line, you would probably need a way to have indirections, say, okay, this did all this. This is not supposedly this cache line, but actually is, or this is ->> Vivek Seshadri: So, again, there are many ways of exploiting our work, but the way we looked at it is that the application also specifies the pattern ID. So let's say the application accessed a cache line that contained contiguous values. And then the application wants to access a cache line which has strided values starting at the same address. So now it's accessing the first value. Even though that value is already present in the on-chip cache, the access results in a cache miss, because the application is looking for a different pattern, so the request goes all the way to the memory controller and the values are fetched from DRAM. So it's not looking at values at an individual granularity. The on-chip cache is still storing things at cache-line granularity. >>: [Indiscernible] write in here.
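The lookup behavior described in this answer can be sketched as a cache whose tag is the pair (line address, pattern ID), so the same start address with a different pattern misses and goes to memory. The 3-bit pattern ID matches the talk's 32-to-35-bit tag example; everything else, including `PatternTaggedCache` and the stand-in `fetch` callback, is a hypothetical illustration.

```python
PATTERN_BITS = 3  # from the talk's example: 3 extra tag bits, so 8 patterns


class PatternTaggedCache:
    """Toy fully associative cache whose tag is (line address, pattern ID)."""

    def __init__(self, fetch):
        self.fetch = fetch  # called on a miss: fetch(line_addr, pattern_id)
        self.lines = {}
        self.misses = 0

    def access(self, line_addr, pattern_id):
        if not 0 <= pattern_id < (1 << PATTERN_BITS):
            raise ValueError("pattern ID out of range")
        tag = (line_addr, pattern_id)
        if tag not in self.lines:
            self.misses += 1
            self.lines[tag] = self.fetch(line_addr, pattern_id)
        return self.lines[tag]


cache = PatternTaggedCache(fetch=lambda addr, pid: f"line {addr}, pattern {pid}")
cache.access(0, 0)   # miss: contiguous values
cache.access(0, 0)   # hit
cache.access(0, 3)   # miss: same start address, different pattern
print(cache.misses)  # 2
print(32 + PATTERN_BITS)  # tag width in the talk's example: 35 bits
```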
>> Vivek Seshadri: Yes, you need it for coherence. The question is, when I am writing to a particular cache line, what are all the other cache lines that can potentially overlap with it and have to be invalidated? I did not go into the details in the talk, but we have a very simple mechanism wherein the cache controller can list which cache lines have to be invalidated, and it invalidates those additional cache lines as well. So in terms of messages going between cores, it's still a single message saying, shoot down this particular cache line. The cache controller just does some additional invalidations locally before it sends an ack back to the original core that sent the invalidate. So I think there is a tradeoff between how often you are doing these additional invalidations and how often you are fetching additional cache lines from DRAM. Question. >>: In the second part of the work, it appeared that you would be activating multiple rows in the DRAM to perform your logical bitwise operations. Is that a standard technique, or did you -- do you have to do SPICE simulations to figure out whether it will actually work, because all of that is [indiscernible]. >> Vivek Seshadri: So we did simulations, and what I can tell you is that in simulations, it works. But when you go to real DRAM, there are other considerations, and studying them is clearly outside the scope of an academic institution. Having said that, like I mentioned, we have been in touch with some DRAM manufacturers, and they expect the schemes to work. >>: But they are upset about the yields, which means that they are expecting some impact from these techniques. >> Vivek Seshadri: No, definitely. So if you look at the existing DRAM market, the DRAM design itself: from whatever I just said to you, you probably already know 50% to 70% of how DRAM works.
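The coherence step from the answer above (one shoot-down message arrives, and the receiving cache controller locally invalidates every cached line that overlaps the written one before acking) can be sketched like this. The stride-based mapping in `covered_words` is a hypothetical stand-in chosen for illustration, not the mapping from the paper: here a line with base address `base` and pattern `p` is assumed to hold eight 8-byte words spaced `8 << p` bytes apart.

```python
"""Hypothetical sketch: local invalidation of overlapping pattern-tagged lines.

Assumption (not from the talk): pattern p selects every 2**p-th 8-byte word
starting at the line's base address, eight words per line.
"""

WORD_BYTES = 8
WORDS_PER_LINE = 8


def covered_words(base, pattern_id):
    """Word addresses held by the line (base, pattern_id) under the assumed mapping."""
    stride = WORD_BYTES << pattern_id
    return {base + i * stride for i in range(WORDS_PER_LINE)}


def handle_invalidate(cached_lines, target):
    """Invalidate `target` and every cached line that shares a word with it.

    cached_lines: set of (base, pattern_id) tuples held by this cache; it is
        modified in place.
    target: the (base, pattern_id) named in the single coherence message.
    Returns the sorted list of invalidated lines; the caller would then send
    one ack back to the requesting core.
    """
    target_words = covered_words(*target)
    victims = sorted(
        line for line in cached_lines
        if covered_words(*line) & target_words
    )
    cached_lines.difference_update(victims)
    return victims
```

With `cached = {(0x0, 1), (0x40, 0), (0x200, 0)}`, an invalidate for the contiguous line `(0x40, 0)` also removes the strided line `(0x0, 1)`, because both hold the word at address `0x40`, while `(0x200, 0)` survives. The extra work stays inside the receiving controller, which matches the point in the answer that the message count between cores does not grow.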
So the DRAM design itself is very, very simple, but the real cost, or where DRAM manufacturers have an edge over any other place that could actually manufacture DRAM, is the techniques they use to optimize DRAM for yield, and those are not public at all. We don't know all the techniques they employ to maximize yield, which is why I'm saying that this is a new feature, and it may affect yield, which will be a big concern for them. But if Microsoft says, okay, today I am paying $10 per gigabyte, but I would be willing to pay you $100 per gigabyte for a system that also allows me to do bitwise operations, maybe they will consider it. Maybe, all right? >>: Thank you. >> Vivek Seshadri: Thank you.