Processes CMSC-421 Operating Systems Principles Chapter 2

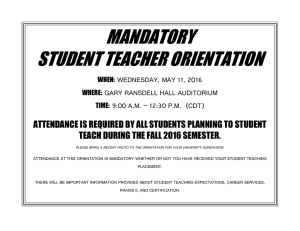


Processes

CMSC-421

Operating Systems Principles

Chapter 2

Gary Burt

7/26/2016


The Process Model

All the runnable software on the

computer, including the operating

system, is organized into a number of

sequential processes.

A process is just an executing program,

including the current values of the

program counter, registers, and

variables.


Multiprogramming of

Four Programs.

[Figure: one program counter; process switches move it among four programs A, B, C, and D.]

Multiprogramming of

Four Programs. (II)

[Figure: four program counters, one each for processes A, B, C, and D.]

Multiprogramming of

Four Programs. (III)

[Figure: processes A, B, C, and D plotted against time.]

Problem

With the CPU switching back and forth

among an unknown number of

processes, the rate at which a process

performs its computation will not be

uniform, and probably not even

reproducible.

This causes a problem for real-time

processes!


Process Hierarchies

Operating systems that support the

process concept must provide some

way to create all the processes needed.

Init starts all of the necessary processes

when the computer is booted.

All subsequent processes can create

new processes.


Process States

Possible states:

» Running

– Actually using the CPU at that instant.

» Ready

– Runnable; temporarily stopped to let another

process run.

» Blocked

– Unable to run until some external event

happens.


Transition State

Diagram

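[Figure: transitions among the Running, Ready, and Blocked states, numbered 1 to 4.]

1. Running to Blocked: process blocks for input

2. Running to Ready: scheduler picks another process

3. Ready to Running: scheduler picks this process

4. Blocked to Ready: input becomes available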
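The four numbered transitions can be sketched as a small state machine. This is only an illustration: the type and function names (`proc_state`, `transition`, `apply_transition`) are assumptions made for the example and are not taken from any real kernel.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* The three process states from the slide. */
typedef enum { RUNNING, READY, BLOCKED } proc_state;

/* The four numbered transitions (names are illustrative). */
typedef enum {
    BLOCK_FOR_INPUT = 1,   /* 1: Running -> Blocked */
    PREEMPTED       = 2,   /* 2: Running -> Ready   */
    DISPATCHED      = 3,   /* 3: Ready   -> Running */
    INPUT_AVAILABLE = 4    /* 4: Blocked -> Ready   */
} transition;

/* Apply a transition; returns false and leaves the state alone if illegal. */
bool apply_transition(proc_state *s, transition t)
{
    assert(s != NULL);
    if (t == BLOCK_FOR_INPUT && *s == RUNNING) { *s = BLOCKED; return true; }
    if (t == PREEMPTED       && *s == RUNNING) { *s = READY;   return true; }
    if (t == DISPATCHED      && *s == READY)   { *s = RUNNING; return true; }
    if (t == INPUT_AVAILABLE && *s == BLOCKED) { *s = READY;   return true; }
    return false;
}
```

Note that there is no direct Blocked to Running transition: a blocked process must pass through Ready and wait for the scheduler.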


Alternative View

[Figure: processes 0, 1, ..., n-1 sitting on top of the scheduler.]

Scheduler

According to this view, the scheduler does not only process scheduling, but also interrupt handling and all the interprocess communication.

Implementation of

Processes

OS maintains a process table with one

entry per process.

Contains process state, pc, stack

pointer, memory allocation, status of

open files, accounting and scheduling

information.


Sample Fields

Process mgmt

» Registers
» PC
» PSW
» Stack pointer
» Process state
» Time started
» Children CPU time
» Time next alarm
» Message queue
» Pending signal bits
» PID
» Various flag bits

Memory mgmt

» Pointer to text seg.
» Pointer to data seg.
» Pointer to bss seg.
» Exit status
» Signal status
» PID
» PPID
» Real UID
» Effective UID
» Real GID
» Bit maps for signals
» Various flag bits

File mgmt

» UMASK
» Root directory
» Working directory
» File descriptors
» Effective UID
» Effective GID
» System call Param
» Various flag bits

Threads

In a traditional process, there is a single

thread of control and a single pc in each

process.

In some modern OS, support is

provided for multiple threads of control

within a process.


Heavyweight Threads

[Figure: a computer containing processes, each with one thread and its own program counter.]

Lightweight Threads

[Figure: lightweight threads; only the label "Computer" survives.]

Threads II

One example of when to use threads is a server. The server is a single process, but each connection is a separate thread. This allows critical data to be shared in the process's global memory and used without special handling. When one connection is not sending data, only that thread is blocked.

Threads III

Another example of using threads is in

Netscape. One process can have a

section of code to manage the internet

connections, since there is one logical

connection for each image on the

screen.

There is one standard thread package,

POSIX Pthreads.

Design Issues

The two alternatives seem equivalent.

Difference is in performance. Switching

threads is much faster when thread

management is done in user space than

when a kernel call is needed.

With user-space threads, when one thread blocks for I/O, the kernel blocks the whole process, and with it all of its threads.

Design Issues (II)

When the parent (with threads) forks,

does the child have threads?

Problems with one thread closing a file

that another thread needs!

How is memory allocation coordinated?

How is error reporting handled?


Design Issues (III)

How are signals handled, are they

thread specific?

How are interrupts handled, are they

thread specific?

Which thread gets keyboard input?

How is stack management coordinated?


Good News, Bad News

These problems are not

insurmountable, but they do show that

just introducing threads into an existing

system is not going to work at all.

There is also more work currently for

the applications programmer!


InterProcess

Communications

Processes frequently need to

communicate with other processes.

One example is the shell pipeline.

There is a need for communications

between processes, preferably in a well-structured way, not using interrupts.

Three Issues

How one process can pass information

to another.

Making sure two or more processes do

not get in each other's way when

engaging in critical activities.

Proper sequencing when dependencies

are present.


Race Conditions

One way to share data is through a common resource (main memory, shared file).

One example is the print spooler

» one process enters the file name into a

special spooler directory.

» another process, the "printer daemon",

periodically checks to see if there are any

files to print.


Race Conditions (II)

The spooler directory has a large number of slots.

Two shared variables:

» out, which points to the next file to be printed

» in, which points to the next free slot in the directory


Race Conditions

Example

Slots 1-3 are empty

Slots 4-6 are full

Simultaneously, processes A and B

decide they want to queue a file for

printing.

Both processes think the next slot is 7.
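The unlucky interleaving can be replayed deterministically in a single thread. The sketch below is only an illustration: the `spooler` structure, the helper names, and the file names are assumptions made for the example, not MINIX code.

```c
#include <assert.h>
#include <stddef.h>

#define SLOTS 16

/* Hypothetical spooler directory with the two shared variables. */
typedef struct {
    const char *slot[SLOTS];
    int in;                  /* next free slot */
    int out;                 /* next file to be printed */
} spooler;

/* Step 1 of queuing a file: read the shared variable into a local copy. */
int read_next_free(const spooler *sp) { return sp->in; }

/* Step 2: store the name in the slot read earlier and advance `in`. */
void store_and_advance(spooler *sp, int slot, const char *name)
{
    assert(slot >= 0 && slot < SLOTS);
    sp->slot[slot] = name;
    sp->in = slot + 1;
}

/* Replays the race; returns how many of the two files actually got queued. */
int replay_race(spooler *sp)
{
    int a_slot = read_next_free(sp);         /* A reads in, then is switched out */
    int b_slot = read_next_free(sp);         /* B reads the same value */
    store_and_advance(sp, b_slot, "b.txt");  /* B queues its file */
    store_and_advance(sp, a_slot, "a.txt");  /* A resumes and overwrites B's entry */
    return sp->in - a_slot;
}
```

Starting from in = 7, both files land in slot 7, `in` ends at 8, and only one file is queued: B's file is silently lost.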


Race Conditions

Example (II)

[Figure: spooler directory slots 4 through 8, with out = 4 and in = 7; processes A and B both point at slot 7.]

Critical Sections

The key to preventing trouble here and in other situations involving shared resources is to find some way to prohibit more than one process from reading and writing the shared data at the same time.

Mutual Exclusion

Making sure that if one process is using

a shared resource, the other processes

will be excluded from doing the same

thing.

Choice of primitive operation is a major

design issue in any operating system.


Solution

Part of the time, a process is busy doing

internal computations and other things

that do not lead to race conditions.

The part of the program where the

shared memory is accessed is called

the critical region or critical section.

No two processes can be in their critical

region at the same time.


Four Conditions

1. No two processes may be simultaneously inside their critical regions. (Mutual exclusion)

2. No assumptions may be made about speeds or the number of CPUs. (Timing)

3. No process running outside its critical region may block other processes. (Progress)

4. No process should have to wait forever to enter its critical region. (Bounded waiting)

Mutual Exclusion with

Busy Waiting

Disable interrupts just after entering the critical region and re-enable them just before leaving.

» Simplest solution.

» No clock interrupts can occur, so the CPU cannot be switched to another process.

» Very vulnerable: a user process might never re-enable interrupts.

» Does not work if multiple processors are involved.

Lock Variables

Variable initially set to 0

First process to enter critical section

changes the lock variable to 1.

Any other process checks the lock

variable to see if it is 0. If it is not, it

must wait until the lock becomes 0

again.


Lock Variables Faults

This is like the spooler problem. If two

processes read the variable when the

variable is set to zero, both will enter the

critical region.

Violates Mutual Exclusion!


Strict Alternation

Process A:

    while (TRUE) {
        while (turn != 0)
            ;                       /* busy-wait until it is A's turn */
        critical_region();
        turn = 1;                   /* hand the turn to B */
        noncritical_region();
    }

Process B:

    while (TRUE) {
        while (turn != 1)
            ;                       /* busy-wait until it is B's turn */
        critical_region();
        turn = 0;                   /* hand the turn to A */
        noncritical_region();
    }

Strict Alternation

Faults

Continually testing a variable for some

state is called busy waiting. Should be

avoided, since it wastes CPU time.

A process can end up blocked by another process that is outside its critical region, which violates Progress.

Peterson's Solution

    #define FALSE 0
    #define TRUE  1
    #define N     2             /* number of processes */

    int turn;                   /* whose turn is it? */
    int interested[N];          /* all values initially 0 (FALSE) */

Peterson's Solution (II)

    void enter_region(int process)      /* process is 0 or 1 */
    {
        int other;

        other = 1 - process;            /* number of the other process */
        interested[process] = TRUE;     /* show that you are interested */
        turn = process;
        while (turn == process && interested[other] == TRUE)
            ;                           /* busy-wait */
    }

Peterson's Solution (III)

    void leave_region(int process)      /* process: who is leaving */
    {
        interested[process] = FALSE;    /* indicate departure from critical region */
    }

TSL Instruction

A hardware instruction that is especially important for computers with multiple processors is TSL (Test and Set Lock).

It solves the problem with lock variables: reading the lock and setting it happen as one indivisible operation, so there is no chance of two processes reading the variable and getting the same value.

TSL

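    enter_region:
        tsl register, lock      | copy lock to register and set lock to 1
        cmp register, #0        | was lock zero?
        jne enter_region        | if it was nonzero, lock was set, so loop
        ret                     | return to caller; critical region entered

    leave_region:
        move lock, #0           | store a 0 in lock
        ret                     | return to caller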
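For readers without access to a TSL instruction, roughly the same idea can be sketched in portable C11, whose `atomic_flag_test_and_set` atomically sets a flag and returns its previous value. This is an analogue under that assumption, not the slide's code; `region_lock` and `try_enter_region` are names invented for the example.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* The shared lock: clear means free, set means taken. */
static atomic_flag region_lock = ATOMIC_FLAG_INIT;

/* One test-and-set step: true if the lock was free and is now ours. */
bool try_enter_region(void)
{
    return !atomic_flag_test_and_set(&region_lock);
}

/* Busy-wait until the lock is acquired, like the tsl/cmp/jne loop. */
void enter_region(void)
{
    while (atomic_flag_test_and_set(&region_lock))
        ;   /* lock was already set: spin */
}

/* Release the lock, like "move lock, #0". */
void leave_region(void)
{
    atomic_flag_clear(&region_lock);
}
```

As with the assembly version, a caller wraps its critical region in `enter_region()` and `leave_region()`, and the waiting is still busy waiting.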


Peterson's Solution &

TSL Instruction Fault

Both are correct.

But require busy waiting.


Priority Inversion

Problem

Two processes:

» H, with high priority, which runs whenever it is in a ready state.

» L, with low priority.

If L is in its critical region and H becomes ready, H busy-waits while L never gets scheduled, so L can never leave its critical region and H can never enter.

SLEEP & WAKEUP

SLEEP is a system call that causes the

caller to be blocked until something

wakes it up.

WAKEUP has one parameter, the

process to wake up.

Producer-Consumer

Problem

Also known as the bounded buffer

problem.

One process puts data into a buffer.

Another takes it out.


Buffer Problems

Producer wants to put a new item into a full buffer.

» Producer goes to sleep until the consumer removes an item.

Consumer wants to remove an item when the buffer is empty.

» Consumer goes to sleep until the producer adds an item.

Fatal Race Condition

    #define N 100                /* number of slots in the buffer */

    int count = 0;               /* number of items in the buffer */

    void producer(void)
    {
        while (TRUE) {
            produce_item();
            if (count == N) sleep();            /* buffer full: go to sleep */
            enter_item();
            count = count + 1;
            if (count == 1) wakeup(consumer);   /* buffer was empty */
        }
    }

Fatal Race Condition

(II)

    void consumer(void)
    {
        while (TRUE) {
            if (count == 0) sleep();                /* buffer empty: go to sleep */
            remove_item();
            count = count - 1;
            if (count == N - 1) wakeup(producer);   /* buffer was full */
            consume_item();
        }
    }

Fatal Race Condition

(III)

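Because access to count is unconstrained, problems occur.

The consumer sees that count is zero, but is suspended before it can go to sleep.

The producer adds an item and sends a wakeup call.

The wakeup is lost because the consumer is not yet asleep. When the consumer is resumed, it goes to sleep.

Eventually the producer fills the buffer and goes to sleep too, so both sleep forever.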

Semaphores

In 1965, E. W. Dijkstra suggested using an

integer variable to count the number of

wakeups saved for future use.

This was called a "semaphore".

Proposed having two operations,

DOWN and UP.

DOWN checks to see if the value is greater than 0. If it is, DOWN decrements the value and continues; if it is 0, the process is put to sleep.

Semaphores (II)

Checking the value, changing it, and

going to sleep is done as a single,

indivisible atomic action.

The UP operation increments the value

of the semaphore. If there are

processes sleeping, then one is given a

WAKEUP.
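The bookkeeping behind DOWN and UP can be sketched without any real blocking by counting sleepers instead of suspending them. The structure and function names below (`semaphore_t`, `sem_down`, `sem_up`) are assumptions made for this example; a real implementation must also make each operation atomic, which this single-threaded sketch does not attempt.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical semaphore: a count of saved wakeups plus a sleeper count. */
typedef struct {
    int value;      /* wakeups saved for future use */
    int sleepers;   /* processes currently asleep in DOWN */
} semaphore_t;

/* DOWN: true if the caller may continue, false if it would go to sleep. */
bool sem_down(semaphore_t *s)
{
    assert(s != NULL);
    if (s->value > 0) {
        s->value--;             /* consume one saved wakeup */
        return true;
    }
    s->sleepers++;              /* value is 0: the caller sleeps */
    return false;
}

/* UP: true if a sleeper was woken, false if the wakeup was saved. */
bool sem_up(semaphore_t *s)
{
    assert(s != NULL);
    if (s->sleepers > 0) {
        s->sleepers--;          /* the woken process completes its DOWN, */
        return true;            /* so the value stays at 0               */
    }
    s->value++;                 /* nobody waiting: save the wakeup */
    return false;
}
```

Handing the wakeup straight to a sleeper is equivalent to incrementing the value and letting the woken process immediately decrement it again.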


Producer-Consumer

Problem Revisited

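    #define N 100                /* number of slots in the buffer */
    typedef int semaphore;
    semaphore mutex = 1;         /* controls access to the critical region */
    semaphore empty = N;         /* counts empty buffer slots */
    semaphore full = 0;          /* counts full buffer slots */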

Producer-Consumer

Problem Revisited (II)

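    void producer(void)
    {
        int item;

        while (TRUE) {
            produce_item(&item);     /* generate something to put in the buffer */
            down(&empty);            /* decrement count of empty slots */
            down(&mutex);            /* enter critical region */
            enter_item(item);        /* put the new item in the buffer */
            up(&mutex);              /* leave critical region */
            up(&full);               /* increment count of full slots */
        }
    }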

Producer-Consumer

Problem Revisited (III)

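    void consumer(void)
    {
        int item;

        while (TRUE) {
            down(&full);             /* decrement count of full slots */
            down(&mutex);            /* enter critical region */
            remove_item(&item);      /* take an item from the buffer */
            up(&mutex);              /* leave critical region */
            up(&empty);              /* increment count of empty slots */
            consume_item(item);      /* do something with the item */
        }
    }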

Monitors

Getting the order of the DOWN operations wrong in the Producer-Consumer problem can lead to a deadlock.

A monitor is a collection of procedures,

variables, and data structures that are

grouped together in a special kind of

module or package.


Monitors (II)

Monitors have the property that only one process can be active in a monitor at a time.

Compilers can handle calls to monitor procedures differently from other procedure calls. A calling process will be suspended until the other process has left the monitor.

The compiler implements the mutual exclusion on the monitor entries.

Monitors (III)

Blocking is controlled with condition

variables. If a monitor procedure

discovers it cannot continue, it WAITs

on a condition variable.

Waking up a waiting monitor procedure

is done with a SIGNAL on the condition

variable.

Condition variables are not counters.


Monitors (IV)

The advantage of the monitor with WAIT

and SIGNAL over SLEEP and WAKEUP

is that the monitor allows only one

active process. This avoids the fatal

race conditions.

By making mutual exclusion automatic,

monitors make parallel programming much

less error-prone.

Down Side

Monitors are not supported in C, Pascal,

and most other languages.

Semaphores are not supported either,

but can be added as library calls.

Monitors and semaphores are designed for systems that have common memory.

Message Passing

Message passing uses two primitives, SEND and RECEIVE. These are not language constructs but can be put into library procedures.

Message passing systems have many challenging problems and design issues that are not present for semaphores and monitors.

Message Passing (II)

Problems

» Messages can be lost on the network.

(Use acknowledgement messages)

» Messages can arrive out of order. (Use sequence numbers.)

» Have to deal with issues of how processes

are named.

» How can imposters be kept out?


Message Passing (III)

Problems (continued)

» performance is slower because of copying

messages between processes.


Examples

IPC between processes in MINIX and

UNIX is done via pipes. These are

effectively mailboxes (memory buffers).

However, pipes do not maintain

message boundaries.
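The loss of message boundaries is easy to observe with the POSIX `pipe`, `write`, and `read` calls. In the sketch below, `read_after_two_writes` is a hypothetical helper written for this example: it writes two separate 2-byte messages and then issues a single read.

```c
#include <assert.h>
#include <stddef.h>
#include <unistd.h>

/* Writes "ab" and "cd" as two separate messages into a pipe, then reads
   once. Returns the byte count of that single read, or -1 on error. */
ssize_t read_after_two_writes(char *buf, size_t buflen)
{
    int fd[2];
    ssize_t n;

    assert(buf != NULL && buflen >= 4);
    if (pipe(fd) != 0)
        return -1;
    if (write(fd[1], "ab", 2) != 2 || write(fd[1], "cd", 2) != 2) {
        close(fd[0]);
        close(fd[1]);
        return -1;
    }
    n = read(fd[0], buf, buflen);   /* the pipe is just a byte stream */
    close(fd[0]);
    close(fd[1]);
    return n;
}
```

With both writes already in the pipe, the single read returns all four bytes, "abcd", with nothing to show where the first message ended. A program that needs boundaries has to add its own framing, such as a length prefix.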


Classical IPC Problems

The Dining Philosophers Problem

The Readers and Writers Problem

The Sleeping Barber Problem


The Dining

Philosophers Problem

Five philosophers are seated around a

circular table. Each philosopher has a

plate of spaghetti. The spaghetti is so

slippery that a philosopher needs two

forks to eat it. Between each pair of

plates is one fork.

The life of a philosopher consists of

eating and thinking.


The Dining Philosophers

Problem (II)

When a philosopher gets hungry,

he/she tries to acquire the fork on each

side of the plate.

If successful, the philosopher eats for a while, then puts down the forks and continues to think.

If all philosophers simultaneously take their left fork, none of them can get a right fork: deadlock.

Readers and Writers

Problem

It is acceptable to have multiple

processes reading a database at the

same time.

But if one process is updating (writing)

the database, no other processes may

have access to the database.

The problem occurs if there are multiple

readers and the writer wants exclusive

use.


Sleeping Barber

Problem

The barber shop has one barber, one

barber chair, and some number of

chairs for waiting customers.

If there are no customers, the barber

goes to sleep.

When a customer arrives, the customer

has to wake up the barber.


Sleeping Barber

Problem (II)

If additional customers arrive, they have

to sit in one of the empty chairs, or

leave the shop.

The problem is to program the barber

and the customers without getting into

race conditions.


Sleeping Barber

Problem Code

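    #define CHAIRS 5             /* number of chairs for waiting customers */
    typedef int semaphore;
    semaphore barbers = 0;       /* number of barbers waiting for customers */
    semaphore customers = 0;     /* number of customers waiting for service */
    semaphore mutex = 1;         /* for mutual exclusion */
    int waiting = 0;             /* number of customers waiting */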

Sleeping Barber

Problem Code (II)

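    void barber(void)
    {
        while (TRUE) {
            down(&customers);        /* go to sleep if there are no customers */
            down(&mutex);            /* acquire access to waiting */
            waiting = waiting - 1;   /* one fewer customer waiting */
            up(&barbers);            /* the barber is ready to cut hair */
            up(&mutex);              /* release waiting */
            cut_hair();              /* outside the critical region */
        }
    }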

Sleeping Barber

Problem Code (III)

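    void customer(void)
    {
        down(&mutex);                /* enter critical region */
        if (waiting < CHAIRS) {      /* a chair is free */
            waiting = waiting + 1;
            up(&customers);          /* wake up the barber if necessary */
            up(&mutex);              /* release access to waiting */
            down(&barbers);          /* sleep until the barber is free */
            get_haircut();
        } else {
            up(&mutex);              /* shop is full: leave */
        }
    }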

Process Scheduling

When two or more processes are

runnable, the operating system must

decide which one to run first.

The part of the operating system that

makes this decision is called the

scheduler.

The algorithm used is called the

scheduling algorithm.


Scheduling Criteria

Fairness -- make sure each process gets its fair share of the CPU.

Efficiency -- keep the CPU busy 100 percent of the time.

Response time -- minimize response time for interactive users.

Turnaround -- minimize the time batch users must wait for output.

Throughput -- maximize the number of jobs processed per hour.

Scheduling Criteria (II)

The criteria are contradictory.

» interactive

» batch

Any scheduling algorithm that favors

some class of processes hurts another

class.

Every process is unique and

unpredictable.


Clocks

To make sure that no process runs too

long, nearly all computers have an

electronic clock built in, which causes

an interrupt periodically.

A frequency of 50 or 60 times a second (50 or 60 Hz [hertz]) is common.

At each interrupt, the OS gets to decide

whether the currently running process

should be allowed to continue.


Preemptive Scheduling

The strategy of allowing processes that are logically runnable to be temporarily suspended is called preemptive scheduling.

Run to completion (non-preemptive) was the method used by early batch systems.

Processes can be suspended at an arbitrary instant, without warning.

This can lead to race conditions.

Round Robin

[Figure: queue of runnable processes A, B, C, D, and E.]

Round Robin (II)

This is one of the oldest, simplest, fairest, and most widely used algorithms.

Each process is assigned a time interval called a quantum.

If the process is still running at the end of its quantum, the CPU is preempted and given to another process.

If a process finishes or blocks before the quantum is up, the CPU is switched at that point.

Round Robin (III)

The length of the quantum is an issue.

If the context switch takes 5 msec and the quantum is 20 msec, 20% of the CPU time is wasted on administrative overhead.

If the quantum is 500 msec, only 1% is wasted, but if 10 interactive users hit the Enter key at the same time, the last user will wait about 5 seconds.
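The arithmetic behind these figures can be checked with a short sketch. It assumes the overhead is the switch time divided by the length of one full cycle (quantum plus switch), and that the last of n users waits for the other n - 1 to use a full quantum each. The function names are made up for the example.

```c
#include <assert.h>

/* Percentage of CPU time spent on context switches. */
double switch_overhead_percent(int switch_ms, int quantum_ms)
{
    assert(switch_ms >= 0 && quantum_ms > 0);
    return 100.0 * switch_ms / (switch_ms + quantum_ms);
}

/* Wait for the last of `users` if everyone ahead uses a full quantum. */
int worst_case_wait_ms(int users, int switch_ms, int quantum_ms)
{
    assert(users >= 1);
    return (users - 1) * (quantum_ms + switch_ms);
}
```

Under these assumptions a 5 msec switch with a 20 msec quantum costs exactly 20%, a 500 msec quantum costs just under 1%, and the tenth user waits 4545 msec, which the slide rounds to 5 seconds.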


Priority Scheduling

Not all processes are equally important.

Some users should have a higher

priority.

Some processes should have a higher

priority.

» Processes that are extremely I/O bound might be given higher priority.

Processes can have statically or dynamically assigned priorities.

Priority Scheduling (II)

High priority processes can run

indefinitely.

» One solution is to lower the priority after each quantum.

» Another solution is to assign a maximum quantum.

Can combine priority and round-robin.

Priority Scheduling (III)

[Figure: four queues of runnable processes, from Priority 4 (highest) down to Priority 1.]

Low-priority processes may all starve to death!

Multiple Queues

High-priority classes have smaller quanta.

Processes that use up their quantum are moved down to the next priority class, but get a quantum twice as large.

Quanta: 1, 2, 4, 8, 16, 32, 64.

A process needing 100 quanta only gets swapped 7 times.
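The count of 7 follows from the doubling: 1 + 2 + 4 + 8 + 16 + 32 + 64 = 127, which is the first running total to reach 100. A minimal sketch of that calculation, with `runs_needed` as a name invented for the example:

```c
#include <assert.h>

/* Number of times a process is swapped in when each successive run is
   granted twice as many quanta as the previous one (1, 2, 4, ...). */
int runs_needed(int quanta_needed)
{
    int runs = 0;
    int granted = 0;    /* total quanta granted so far */
    int next = 1;       /* size of the next grant */

    assert(quanta_needed > 0);
    while (granted < quanta_needed) {
        granted += next;
        next *= 2;
        runs++;
    }
    return runs;
}
```

With plain round robin and one quantum per run, the same process would be swapped in 100 times.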


Shortest Job First

(Previous algorithms were designed for interactive systems.)

[Figure: four jobs with run times A = 8, B = 4, C = 4, D = 4. Original order: A, B, C, D. Shortest job first order: B, C, D, A.]
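The benefit of running the shortest job first shows up in the mean turnaround time for the four jobs above (run times 8, 4, 4, 4). The sketch below assumes all jobs arrive at time zero and run one after another to completion; the function names are invented for the example.

```c
#include <assert.h>
#include <stdlib.h>

/* Mean turnaround time when jobs run back to back in the given order. */
double mean_turnaround(const int *run_time, int n)
{
    long finish = 0;    /* completion time of the current job */
    long total = 0;     /* sum of all completion times */
    int i;

    assert(n > 0);
    for (i = 0; i < n; i++) {
        finish += run_time[i];
        total += finish;
    }
    return (double)total / n;
}

/* qsort comparison: ascending run time. */
static int cmp_int(const void *a, const void *b)
{
    int x = *(const int *)a;
    int y = *(const int *)b;
    return (x > y) - (x < y);
}

/* Reorder the jobs so that the shortest runs first. */
void sort_shortest_first(int *run_time, int n)
{
    qsort(run_time, (size_t)n, sizeof run_time[0], cmp_int);
}
```

In the order A, B, C, D the jobs finish at 8, 12, 16, and 20, a mean of 14. In shortest-first order they finish at 4, 8, 12, and 20, a mean of 11.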


Guaranteed Scheduling

If there are n users on the system, each will get 1/n of the CPU power.

The ratio of actual CPU time to entitled CPU time should be 1.0.

The process with the lowest ratio gets run.

Lottery Scheduling

Give processes lottery tickets for

resources.

Hold a random drawing and the winner

gets 20 msec of CPU time as a prize.

Important processes are given more

tickets.

If there are 100 tickets and one process

has 20 tickets, it will run 20 percent of

the time.
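The drawing itself can be sketched as a walk over cumulative ticket counts. To keep the example deterministic, the winning ticket number is passed in rather than drawn at random; `lottery_winner` and its parameters are assumptions made for this illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Returns the index of the process holding `winning_ticket`, where the
   tickets are numbered from 0 and handed out in process order.
   Returns -1 if the number is beyond the last ticket. */
int lottery_winner(const int *tickets, int nproc, int winning_ticket)
{
    int upper = 0;      /* one past the last ticket seen so far */
    int i;

    assert(tickets != NULL && winning_ticket >= 0);
    for (i = 0; i < nproc; i++) {
        upper += tickets[i];
        if (winning_ticket < upper)
            return i;
    }
    return -1;
}
```

With ticket counts of 20, 30, and 50, the first process holds tickets 0 through 19, so a uniformly random draw over 100 tickets picks it 20 percent of the time.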


Two-level Scheduling

Previous algorithms assumed that all

processes were in memory.

Two-level scheduling treats in-memory processes and processes swapped to disk as two different scheduling problems.

Schedule those in memory only.

Schedule those on disk only.


Two-level Scheduling

(II)

Criteria of higher-level schedule could

be:

» How long has it been since the process

was swapped in or out?

» How much CPU time has the process had

recently?

» How big is the process? (Small ones do

not get in the way.)

» How high is the priority of the process?


Policy versus

Mechanism

What if different processes do not

belong to different users?

A DBMS can have child processes to handle sub-functions.

Separate the scheduling mechanism from the scheduling policy.

Allow the kernel to modify the priority of child processes.

Real-Time Scheduling

When the computer must react

appropriately to external stimuli within a

fixed amount of time.

Types:

» hard real time: Must meet absolute

deadlines.

» soft real time: Missing an occasional

deadline is acceptable.


Real-Time

Scheduling (II)

Events can be:

» periodic

» aperiodic

A system may have to respond to multiple periodic event streams.

A real-time system that can meet the deadlines of all its periodic events is said to be schedulable.

Scheduling can be done dynamically or statically.

Real-Time Scheduler

Algorithms

rate monotonic algorithm -- assigns to

each process a priority based on the

frequency of occurrence.

earliest deadline first -- run the

process which must be done the

soonest.

least laxity -- run the processes on a critical path (those with the least slack before their deadline) ahead of the others.

Internal Structure of

MINIX

Structured in four layers

» Process management

» I/O tasks

» Server processes

» User processes


Internal Structure of

MINIX (II)

[Figure: the four layers of MINIX, top to bottom.]

Layer 4 (User processes):     Init, User process, User process, User process, ...

Layer 3 (Server processes):   Memory manager, File system, Network server, ...

Layer 2 (I/O tasks):          Disk task, TTY task, Clock task, System task, Ethernet task, ...

Layer 1:                      Process management

Internal Structure of

MINIX (III)

The bottom layer (Process management) catches all interrupts and traps, does scheduling, and provides higher layers with a model of independent sequential processes that communicate using messages.

Process Management

Functions:

» catching traps and interrupts

» saving and restoring registers

» scheduling

» Providing services to the next layer.


Process Management

(II)

» Handling mechanics of messages

» Checking for legal destinations

» locating sending and receiving buffers in

physical memory

» copying bytes from sender to receiver.


Process Management

(III)

The part of the layer dealing with the

lowest level interrupt handling is written

in assembly language.

Everything else is written in C.


I/O Tasks Layer

Contains the I/O processes, one per

device type.

Processes are called tasks or device

drivers.

A task is needed for each device type,

including disks, printers, terminals,

network interfaces, and clocks, plus any

others present on your system.

Also there is one system task.


Kernel

All of the tasks in layer 2 and all of the

code in layer 1 are linked together into

one single binary program called the

kernel.

Some of the tasks share common subroutines, but otherwise they are independent of one another, are scheduled independently, and communicate using messages.

Kernel (II)

When the true kernel and interrupt handlers are executing, they are accorded more privileges than tasks.

The true kernel can access any part of memory and any processor register, and can execute any instruction.

Tasks cannot execute all instructions, nor can they access all memory and registers.

System Task

Does no I/O in the normal sense.

Exists in order to provide services, such

as copying between different memory

regions, for processes which are not

allowed to do such things themselves.


Server Processes

Layer 3 contains processes that provide

useful services to the user processes.

These server processes run at a less-privileged level than the kernel and tasks and cannot access I/O ports directly.

They also cannot access memory outside the segments allocated to them.

Server Processes (II)

The memory manager (MM) carries

out all the MINIX system calls that

involve memory management such as

FORK, EXEC, and BRK.

The file system (FS) carries out all the file

system calls, such as READ, MOUNT,

and CHDIR.


User Processes

All the user processes are in Layer 4.

Includes shells, editors, compilers, and

user-written a.out programs.

Includes daemons -- processes that are

started when the system is booted and

run forever.


Process Management in

MINIX

MINIX follows the general process

model previously described.

All processes are a part of the tree

started at init.


Boot Process

At power on, the hardware reads the first sector of the first track of the boot disk into memory and executes the code it finds there.

On a diskette, it is a bootstrap program, which then loads the boot program.

On a hard disk, the first sector contains a small program and the disk's partition table. Together these are the Master Boot Record.

Boot Process (II)

boot loads the kernel, the memory

manager, the file system, and init, the

first user process.

The bottom three layers are all

initialized.

init is started.

init reads the file /etc/ttytab and forks off

a new process for each terminal that

can be used as a login device.


Boot Process (III)

Normally, each process is /usr/bin/getty,

which prints a message and waits for a

name to be typed.

Then /usr/bin/login is called with the

name as its argument.

After successful login, login executes

the shell that is specified in the

/etc/passwd file.


Boot Process (IV)

The shell waits for commands to be

typed and then forks off a new process

for each command.

This is how the process tree grows for

all processes.


Creating Processes

The two principal MINIX system calls for

process management are FORK and

EXEC.

FORK is the only way to create a new

process.

EXEC (and variants) allows a process

to execute a specific program.

When a new program is executed, it is

allocated a portion of memory.
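The usual pairing of the two calls can be sketched with their POSIX C library counterparts, `fork`, `execlp`, and `waitpid`. The helper `spawn_and_wait` is a name invented for this example, and using exit status 127 to signal a failed exec is a shell convention adopted here as an assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Forks a child that executes `program` (searched for on PATH), waits
   for it, and returns its exit status; returns -1 on other failures. */
int spawn_and_wait(const char *program)
{
    pid_t pid;
    int status;

    assert(program != NULL);
    pid = fork();                   /* FORK: create a copy of this process */
    if (pid < 0)
        return -1;
    if (pid == 0) {
        /* Child: EXEC replaces the copied image with the new program. */
        execlp(program, program, (char *)NULL);
        _exit(127);                 /* reached only if the exec failed */
    }
    /* Parent: wait for the child to terminate. */
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

This is essentially what a shell does for each command typed: fork a child, exec the command in the child, and wait in the parent.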


IPCs in MINIX

Three primitives for sending and

receiving messages are provided:

» send( dest, &message );

» receive( source, &message );

» send_rec( src_dst, &message );

When a message is sent to a process that is not currently waiting for a message, the sender blocks until the receiver is ready (rendezvous).

Process Scheduling

The interrupt system is the heart.

When processes block waiting for input,

other processes can run.

When input becomes available, the

current running process is interrupted

by the device.

Clock also generates interrupts.

The lowest layer hides these interrupts

by turning them into messages.


Process Scheduling (II)


The message gets sent to some process.

At each interrupt (and process termination)

the OS redetermines which process to run.

MINIX uses a multi-level queuing system with

three levels, corresponding to Layers 2, 3,

and 4.

Within task and server levels, processes run

until they block.


Process Scheduling (III)

User processes are scheduled using

round-robin.

Tasks have the highest priority.

Memory manager and File system are

next.

User processes are last.


Process Scheduling (IV)


When picking a process to run, the

scheduler checks to see if any tasks are

ready to run. If one or more are ready,

the one at the head of the queue is run.

Otherwise it goes down the priority

queue.


Process Scheduling (V)

At each clock tick, a check is made to

see if the current process is a user

process that has run more than 100

msec.

If it is and there is another runnable

process, the current process is moved

to the end of the scheduling queue, and

the process at the head of the queue is

run.
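The selection rule and the round-robin rotation can be sketched with three small arrays. This is an illustration only, not the MINIX source: the `ready_queue` structure, `MAXPROC`, and both function bodies are assumptions, with only the queue names TASK_Q, SERVER_Q, and USER_Q taken from the course material.

```c
#include <assert.h>
#include <stddef.h>

enum { TASK_Q = 0, SERVER_Q = 1, USER_Q = 2, NQ = 3 };
#define MAXPROC 8

/* Hypothetical ready queue: process numbers in order, head at index 0. */
typedef struct {
    int pid[MAXPROC];
    int len;
} ready_queue;

/* Pick the head of the highest-priority non-empty queue; -1 if all empty. */
int pick_proc(const ready_queue q[NQ])
{
    int level;

    for (level = TASK_Q; level < NQ; level++)
        if (q[level].len > 0)
            return q[level].pid[0];
    return -1;
}

/* Quantum expired: move the head of the user queue to the tail. */
void rotate_user_queue(ready_queue q[NQ])
{
    ready_queue *u = &q[USER_Q];
    int head, i;

    assert(u->len <= MAXPROC);
    if (u->len < 2)
        return;                     /* no other runnable user process */
    head = u->pid[0];
    for (i = 1; i < u->len; i++)
        u->pid[i - 1] = u->pid[i];
    u->pid[u->len - 1] = head;
}
```

Only the user queue is ever rotated, which matches the rule that tasks and servers run until they block.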


Process Scheduling (VI)


Tasks, the memory manager, and the

file system are never preempted by the

clock, no matter how long they have

been running.


Resource

CGI interface to browse the entire

LINUX kernel source:

http://sunsite.unc.edu/linux-source


Organization of source

code

/usr/include/

» sys/       POSIX headers

» minix/     headers for the operating system

» ibm/       IBM PC specific definitions

/usr/src/

» kernel/    layers 1 and 2 (processes, messages, drivers)

» mm/        memory manager

» fs/        code for the file system

Others

» src/lib         library procedures (read, open)

» src/tools       init program

» src/boot        booting and installing MINIX

» src/commands    utility programs

» src/inet        network support

Conventions

Normally all related code is in one

directory.

Some files have the same name (but are in different directories), for example const.h.

Memory Layout

[Figure: memory layout from address 0 up to 640K, listed top to bottom.]

640K

Memory available for user programs

129K, depending

Ethernet task, Printer task, Terminal task, Memory task, Clock task, Disk task, Kernel

2K

Unused

1K

Interrupt vectors

0

Memory Layout (II)

[Figure: memory layout above 640K. Regions from top to bottom: limit of memory; memory available for user programs; Init; Inet task; File System; Memory Manager; read-only memory and I/O. Boundary addresses shown: 2383K, 2372K, 1077K, 1024K, 640K.]

Some Code Items

#ifndef _ANSI_H

#endif

Types defined with typedef use "_t" as a suffix.

Size in bits, by machine word size:

Type     16-bit   32-bit
gid_t    8        8
dev_t    16       16
pid_t    16       32
ino_t    16       32

Some Code Items

Six message types (see page 110).

#if (CHIP == INTEL)

#endif

Bootstrapping MINIX

» Look at figure 2-31, page 120


Interrupt Processing

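[Figure: interrupt processing hardware. IRQ 0 through IRQ 7 feed the master interrupt controller and IRQ 8 through IRQ 15 feed the slave interrupt controller. INT lines run from the slave to the master and from the master to the CPU, with ACK and INTA signals in reply. All are attached to the system data bus.]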

Interrupt Handling

Details vary from system to system.

The task-switching mechanism of a 32-bit Intel processor is complex.

Much support is built into the hardware.

Only a tiny part of the MINIX kernel

actually sees the interrupt. This code is

in mpx386.s and there is an entry point

for each interrupt.


Interrupt Handling (II)

In addition to hardware and software

interrupts, various error conditions can

cause the initiation of an exception.

Exceptions are not always bad.

» They can be used to stimulate the operating system to provide a service, such as providing more memory.

» Swap in swapped-out pages.

Don't ignore them!

Interprocess

Communications

It is the kernel's job to translate either a

hardware or software interrupt into a

message.

MINIX uses the rendezvous principle.

» One process does a send and waits.

» The other process does a receive.

» Both processes are then marked as

runnable.


Queues

[Figure: the three ready queues and the processes on each.]

2: USER_Q       3, 5, 4

1: SERVER_Q     FS, MM

0: TASK_Q       Clock

Hardware-Dependent

Code

There are several C functions that are

very dependent upon the hardware.

To facilitate porting MINIX to other

systems, these functions are

segregated in the files exception.c,

i8259.c, protect.c.


Utilities and the Kernel

Library

_Monitor makes it possible to return to

the boot monitor.

_check_mem is used at startup time to

determine the size of a block of

memory.

_cp_mess is used for faster copying of

messages.

Env_parse is used at startup time.


Summary

To hide the effects of interrupts,

operating systems provide a conceptual

model consisting of sequential

processes, running in parallel.

Processes can communicate with each

other using interprocess communication

primitives.


Summary (II)

Primitives are used to ensure that no

two processes are ever in their critical

sections at the same time.

A process can be running, runnable, or

blocked and can change when it or

another process executes one of the

interprocess communications primitives.


Summary (III)

Many scheduling algorithms are known,

including round-robin, priority

scheduling, multi-level queues, and

policy-driven schedulers.

Messages are not buffered, so a SEND

succeeds only when the receiver is

waiting for it. Similarly, a RECEIVE only

succeeds when a message is available.


Summary (IV)

If either a SEND or RECEIVE does not

succeed, the caller is blocked.

When an interrupt occurs, the lowest

level of the kernel creates and sends a

message to the task associated with the

interrupting device.

Task switching can also occur when the

kernel itself is running.


Summary (V)

When all interrupts have been serviced, a process is restarted.

The MINIX scheduling algorithm uses three priority queues:

» The highest one for tasks,

» Next for the file system, memory manager,

and other servers,

» The lowest for user processes


Summary (VI)

User processes are run round robin for

one quantum at a time.

All others run until they block or are

preempted
