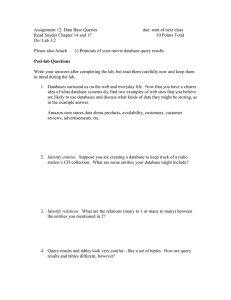



Adaptive Processing in Data Stream Systems
Shivnath Babu
Stanford University
stanfordstreamdatamanager

Data Streams
• New applications -- data as continuous, rapid, time-varying data streams
– Network monitoring and traffic engineering
– Sensor networks, RFID tags
– Financial applications
– Telecom call records
– Web logs and click-streams
– Manufacturing processes
• Traditional databases -- data stored in finite, persistent data sets

Using a Traditional Database
[Diagram: User/Application sends queries and receives results; a Loader feeds Table R and Table S]

New Approach for Data Streams
[Diagram: User/Application registers a Continuous Query (Standing Query) with the Stream Query Processor; input streams go in, a result stream comes out]

Example Continuous (Standing) Queries
• Web
– Amazon’s best sellers over last hour
• Network Intrusion Detection
– Track HTTP packets with destination address matching a prefix in given table and content matching “*\.ida”
• Finance
– Monitor NASDAQ stocks between $20 and $200 that have moved down more than 2% in the last 20 minutes

Data Stream Management System
[Diagram: Register Continuous Query; Input Streams; Data Stream Management System (DSMS); Streamed Result; Stored Result; Archive; Stored Tables]

DSMS System Components
• User/application interface
• Execution plan operators and synopsis data stores
• Query processor
• Operator scheduler
• Memory manager
• Metadata and statistics catalog
• and others

Primer on Database Query Processing
[Diagram: Declarative Query → Preprocessing → Canonical form → Query Optimization → Best query execution plan → Query Execution → Results; Query Execution reads Data in the Database System]

Traditional Query Optimization
• Statistics Manager: Periodically collects statistics, e.g., data sizes, histograms
• Optimizer: Finds “best” query plan to process this query
• Executor: Runs chosen plan to completion
[Diagram labels: Query; Which statistics are required; Estimated statistics; Chosen query plan; Data, auxiliary structures, statistics]

Optimizing Continuous Queries Poses New Challenges
• Continuous queries are long-running
• Stream properties can change while query runs
– Data properties: value distributions
– Arrival properties: bursts, delays
• System conditions can change
• Performance of a fixed plan can change significantly over time
Adaptive processing: use plan that is best for current conditions

Rest of this Talk
StreaMon: Adaptive Query Processing Engine (the Query Processor among the DSMS system components)
• Adaptive ordering of commutative filters
• Adaptive caching for multiway joins
• Adaptive use of input stream properties for resource mgmt.

Traditional Optimization → StreaMon
• Traditional Optimization
– Statistics Manager: Periodically collects statistics, e.g., table sizes, histograms
– Optimizer: Finds “best” query plan to process this query
– Executor: Runs chosen plan to completion
• StreaMon
– Profiler: Monitors current stream and system characteristics
– Re-optimizer: Ensures that plan is efficient for current characteristics
– Executor: Executes current plan on incoming stream tuples
[Diagram labels: Query; Which statistics are required; Estimated statistics; Combined in part for efficiency; Chosen query plan becomes Decisions to adapt]

Pipelined Filters
• Commutative filters over a stream
• Example: Track HTTP packets with destination address matching a prefix in given table and content matching “*\.ida”
• Simple to complex filters
– Boolean predicates
– Table lookups
– Pattern matching
– User-defined functions
[Diagram: Packets → Filter1 → Filter2 → Filter3 → Bad packets]

Pipelined Filters: Problem Definition
• Continuous Query: F1 ∧ F2 ∧ … ∧ Fn
• Plan: Tuples → F(1) → F(2) → … → F(n)
• Goal: Minimize expected cost to process a tuple

Pipelined Filters: Example
[Diagram: sample input tuples pass through filters F1, F2, F3, F4 to produce output tuples]
Informal Goal: If tuple will be dropped, then drop it as cheaply as possible

Why is Our Problem Hard?
• Filter drop-rates and costs can change over time
• Filters can be correlated
– E.g., Protocol = HTTP and DestPort = 80

Metrics for an Adaptive Algorithm
• Speed of adaptivity
– Detecting changes and finding new plan
• Run-time overhead
– Re-optimization, collecting statistics, plan switching
• Convergence properties
– Plan properties under stationary statistics
[Diagram: StreaMon with Profiler, Re-optimizer, Executor]

Pipelined Filters: Stationary Statistics
• Assume statistics are not changing
– Order filters by decreasing drop-rate/cost [MS79, IK84, KBZ86, H94]
– Correlations ⇒ NP-Hard
• Greedy algorithm: Use conditional statistics
– F(1) has maximum drop-rate/cost
– F(2) has maximum drop-rate/cost ratio for tuples not dropped by F(1)
– And so on

Adaptive Version of Greedy
• Greedy gives strong guarantees
– 4-approximation, best poly-time approx. possible assuming P ≠ NP [CFK03, FLT04, MBM+05]
– For arbitrary (correlated) characteristics
– Usually optimal in experiments
• Challenge:
– Online algorithm
– Fast adaptivity to Greedy ordering
– Low run-time overhead
A-Greedy: Adaptive Greedy

A-Greedy
• Profiler: Maintains conditional filter drop-rates and costs over recent tuples
• Re-optimizer: Ensures that filter ordering is Greedy for current statistics
• Executor: Processes tuples with current Greedy ordering
[Diagram labels: Which statistics are required; Estimated statistics; Combined in part for efficiency; Changes in filter ordering]

A-Greedy’s Profiler
• Responsible for maintaining current statistics
– Filter costs
– Conditional filter drop-rates -- exponential
• Profile Window: Sampled statistics from which required conditional drop-rates can be estimated

Profile Window
[Diagram: sample tuples evaluated against F1, F2, F3, F4, with one row of 0/1 entries per sampled tuple stored in the Profile Window]

Greedy Ordering Using Profile Window
[Diagram: Profile Window with columns F1 to F4, the corresponding Matrix View, and the resulting Greedy Ordering]

A-Greedy’s Re-optimizer
• Maintains Matrix View over Profile Window
– Easy to incorporate filter costs
– Efficient incremental update
– Fast detection & correction of changes in Greedy order
Details in [BMM+04]: “Adaptive Processing of Pipelined Stream Filters”, ACM SIGMOD 2004

Next
• Tradeoffs and variations of A-Greedy
• Experimental results for A-Greedy

Tradeoffs
• Suppose:
– Changes are infrequent
– Slower adaptivity is okay
– Want best plans at very low run-time overhead
• Three-way tradeoff among speed of adaptivity, run-time overhead, and convergence properties
• Spectrum of A-Greedy variants

Variants of A-Greedy

| Algorithm | Convergence Properties | Run-time Overhead | Adaptivity |
|---|---|---|---|
| A-Greedy | 4-approx. | High (relative to others) | Fast |
| Sweep | 4-approx. | Less work per sampling step | Slow |
| Local-Swaps | May get caught in local optima | Less work per sampling step | Slow |
| Independent | Misses correlations | Lower sampling rate | Fast |

[Diagram: Profile Window and Matrix View]

Experimental Setup
• Implemented A-Greedy, Sweep, Local-Swaps, and Independent in StreaMon
• Studied convergence properties, run-time overhead, and adaptivity
• Synthetic stream-generation testbed
– Can control & vary stream data and arrival properties
• DSMS server running on 700 MHz Linux machine, 1 MB L2 cache, 2 GB memory

Converged Processing Rate
[Figure: avg. processing rate (tuples/sec) vs. number of filters (3, 4, 6, 8, 10) for Optimal-Fixed, Sweep, A-Greedy, Local-Swaps, and Independent]

Effect of Correlation
[Figure: avg. processing rate (tuples/sec) vs. correlation factor (1 to 4) for Optimal-Fixed, Sweep, A-Greedy, Local-Swaps, and Independent]

Run-time Overhead
[Figure: average time/tuple (microsecs), split into tuple processing and profiling + reopt. overhead, for Optimal, A-Greedy, Sweep, Local-Swaps, and Independent]

Adaptivity
[Figure: #filters evaluated per 2000 tuples vs. progress of time (x1000 tuples processed) for Sweep, Local-Swaps, A-Greedy, and Independent; annotation: stream data properties changed here]

Remainder of Talk
• Adaptive caching for multiway joins
• Current and future research directions
• Related work

Stream Joins
[Diagram: Sensor R, Sensor S, and Sensor T feed the DSMS, which outputs join results over observations in the last minute]

MJoins (VNB04)
[Diagram: Window on R, Window on S, Window on T; each stream has its own pipeline of join operators over the other two windows]

Excessive Recomputation in MJoins
[Diagram: ⋈T and ⋈R operators over Window on R, Window on S, Window on T]

Materializing Join Subexpressions
[Diagram: fully-materialized join subexpression over Window on R, Window on S, Window on T]

Tree Joins: Trees of Binary Joins
[Diagram: tree of binary joins over Window on R, Window on S, Window on T with a fully-materialized join subexpression]

Hard State Hinders Adaptivity
[Diagram: plan switch between a join tree that materializes WR ⋈ WT and one that materializes WS ⋈ WT]

Can We Get Best of Both Worlds?
• MJoin
– Recomputation
• Tree Join
– Less adaptive
– Higher memory use

MJoins + Caches
[Diagram: an S tuple probes a cache of WR ⋈ WT to bypass the pipeline segment ⋈R, ⋈T; Window on R, Window on S, Window on T]

MJoins + Caches (contd.)
• Caches are soft state
– Enables plan switching with almost no overhead
– Flexible with respect to memory availability
• Captures whole spectrum from MJoins to Tree Joins, and plans in between
• Challenge: adaptive algorithm to choose join operator orders and caches in pipelines

Adaptive Caching (A-Caching)
• Adaptive join ordering with A-Greedy or variant
– Join operator orders ⇒ candidate caches
• Adaptive selection from candidate caches
– Based on profiled costs and benefits of caches
• Adaptive memory allocation to chosen caches
• Problems are individually NP-Hard
– Efficient approximation algorithms ⇒ scalable
• Details in [BMW+05]: “Adaptive Caching for Continuous Queries”, ICDE 2005

A-Caching (caching part only)
• Profiler: Estimates costs and benefits of candidate caches
• Re-optimizer: Ensures that maximum-benefit subset of candidate caches is used
• Executor: MJoins with caches
[Diagram labels: List of candidate caches; Estimated statistics; Combined in part for efficiency; Add/remove caches]

Performance of Stream-Join Plans (1)
[Figure: avg. processing rate (tuples/sec) for MJoin, TreeJoin, and A-Caching; inset: join tree over R, S, T, U]
Arrival rates of streams are in the ratio 1:1:1:10, other details of input are given in [BMW+05]

Performance of Stream-Join Plans (2)
[Figure: avg. processing rate (tuples/sec) for MJoin, TreeJoin, and A-Caching]
Arrival rates of streams are in the ratio 15:10:5:1, other details of input are given in [BMW+05]

Remainder of Talk
• Current and future research directions
• Related work

Research Directions
• System complexity is growing beyond control
• Up to 80% of IT budgets spent on maintenance [McKinsey]
– “People cost” dominates
• Data Management Systems must be self-managing
– Autonomic Systems
– Self-configuration, self-optimization, self-healing, and self-protection
• Adaptive processing is key
[Diagram: layered stack. Applications: P2P, Federation, Streams, PubSub, Sensors, IR, Privacy, Data Mining. Data Management System. Data Formats & Access: Records, Web pages, Partitions, XML, Views, Stored procedures, Web services, Indexes, Text. OS/Hardware: Windows, SAN, Linux, NAS, RAID, PDA, Local disks, Remote DB]

New Techniques for Autonomic Systems & Adaptive Processing
• Bringing more components under adaptive management
– Ex: Parallelism, overload management, memory allocation, sharing data & computation across queries
• Being proactive as well as reactive
– Rio prototype system for adaptive processing in conventional database systems [BBD04]
– Considering uncertainty in statistics to choose robust query execution plans [BBD04]
– Plan logging: A new overall approach to adaptive processing of continuous queries [BB05]

Future Work in DSMSs
• Expanding the declarative interface
– Event detection (e.g., regular expressions), data cubes, decision trees and other data mining models
• Scaling in data arrival rates
– Graphical Processing Units (GPUs) as stream coprocessors, Stanford’s streaming supercomputer
• Many others
– Disk usage based on query and stream properties
– Handling missing or imprecise data in streams
– Handling hybrid workloads of continuous & regular queries

Other Work
• More on StreaMon
– Using stream properties for resource optimization [TODS]
– Theory of pipelined processing [ICDT 2005]
– System demonstration [SIGMOD 2004]
• Stanford’s STREAM DSMS
– Overview papers, source code release, web demo
• Other aspects of a DSMS
– Operator scheduling [SIGMOD 2003, VLDB Journal]
– Continuous query language & semantics [VLDB Journal]
– Memory requirements for continuous queries [PODS 2002, TODS]

Other Work (contd.)
• Adaptive query processing architectures
– Taxonomy of past work, next steps [CIDR 2005]
– Adaptive processing for conventional database systems [Rio system, Technical report]
– Concurrent use of multiple plans for same query [Technical report]
• Summer internship
– Compressing relations using data mining [SIGMOD 2001]

Related Work (Brief)
• Adaptive processing of continuous queries
– E.g., Eddies [AH00], NiagaraCQ [CDT+00]
• Adaptive processing in conventional databases
– Inter-query adaptivity, e.g., Leo [SLM+01], [BC03]
– Intra-query adaptivity, e.g., Re-Opt [KD98], POP [MRS+04], Tukwila [IFF+99]
• New approaches to query optimization
– E.g., parametric [GW89, INS+92, HS03], expected-cost based [CHS99, CHG02], error-aware [VN03]
• Other DSMSs
– E.g., Aurora, Gigascope, Nile, TelegraphCQ

Summary
• New trends demand adaptive query processing
– New applications, e.g., continuous queries, data streams
– Increasing data sizes, query complexity, overall system complexity
• CS-wide push towards Autonomic Systems
• Our goal: Adaptive Data Management Systems
– StreaMon: Adaptive Data Stream Engine
– Rio: Adaptive Processing in Conventional Databases
• Google keywords: “shivnath”, “stanford stream”
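
Illustrative sketch: Greedy ordering from a profile window

The Greedy rule described on the pipelined-filters slides (pick the filter with the highest drop-rate/cost ratio, then repeat on the tuples it did not drop) can be illustrated with a small Python sketch. This is only a from-scratch recomputation under stated assumptions, not the incremental Matrix View maintenance of [BMM+04]. The function names, the 0/1 encoding of the profile window (1 means the filter would drop the sampled tuple), the sample window, and the unit costs are all hypothetical choices made for illustration.

```python
from typing import List, Sequence


def greedy_order(window: Sequence[Sequence[int]],
                 costs: Sequence[float]) -> List[int]:
    """Return filter indices in Greedy order.

    Assumed encoding: window[t][i] == 1 means filter i would drop
    sampled tuple t. Costs are assumed to be positive.
    """
    remaining = list(window)             # sampled tuples not yet dropped
    unplaced = list(range(len(costs)))   # filters not yet placed in the plan
    order: List[int] = []
    while unplaced:
        # Conditional drop count among surviving tuples, per unit cost.
        best = max(unplaced,
                   key=lambda i: sum(row[i] for row in remaining) / costs[i])
        order.append(best)
        unplaced.remove(best)
        # Keep only the tuples that the chosen filter lets through.
        remaining = [row for row in remaining if row[best] == 0]
    return order


def expected_cost(window: Sequence[Sequence[int]],
                  costs: Sequence[float],
                  order: Sequence[int]) -> float:
    """Average cost per sampled tuple when filters run in the given order."""
    total = 0.0
    for row in window:
        for i in order:
            total += costs[i]
            if row[i] == 1:   # tuple dropped here, later filters are skipped
                break
    return total / len(window)


# Hypothetical profile window over filters F1..F4 (columns 0..3).
WINDOW = [
    (1, 0, 1, 0),
    (0, 0, 0, 1),
    (1, 0, 1, 0),
    (0, 1, 0, 0),
    (0, 1, 0, 0),
    (0, 0, 1, 1),
]
COSTS = [1.0, 1.0, 1.0, 1.0]  # unit costs, assumed for simplicity

order = greedy_order(WINDOW, COSTS)
print(order)  # [2, 1, 3, 0], i.e. F3, F2, F4, F1
print(expected_cost(WINDOW, COSTS, order))
```

On this sample, the Greedy order evaluates fewer filters per tuple on average than the default order F1, F2, F3, F4, which is the informal goal stated earlier: if a tuple will be dropped, drop it as cheaply as possible.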