Course Assessment-team Report (CAR) Civil Engineering Undergraduate Program College of Engineering


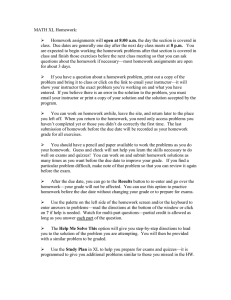

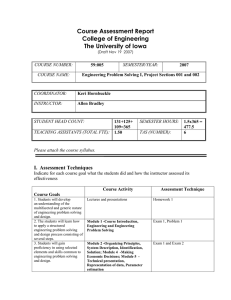

The University of Iowa

Instructor: K. Hornbuckle
Course number: 59:005 ABCD (lectures with some discussion of projects)
Semester/year: Fall 2006
Assessment Team Members: Keri Hornbuckle, Allen Bradley, Gene Parkin
Date of Assessment: 6/1/07

Please attach the course syllabus and the Course Outcomes Worksheet (COW) showing the course goals, their mapping to CE Program outcomes, and the assessment methods for each goal.

Course Goal Assessment

Please indicate which of the following assessment techniques were used in the course, and give your judgment of their usefulness in assessing accomplishment of the Course Learning Objectives (Not Used; Used, provided useful data; Used, not recommended).

- Homework: x
- Exams (FINAL only): x
- Quizzes: x
- Projects (Major): x
- Written Reports (other than projects): x*
- Oral Reports: x*
- Student Self-Evaluation: x*
- Student Peer-Evaluation: x*
- Course Portfolios (project based): x*
- Class Surveys (e.g., EASY): x
- Instructor Observation: x
- Other [SPIF Comments]: x
- Pre and Post Tests: x**

Note: x* = used in the project section of this course, not in lecture; x** = new assessment added in 2006.

Instructor Perception of Class Overall Performance in Meeting Course Goals (assessment includes both the lecture and the project components of the course)

| Course Learning Goal (from Course Description) | Program Outcome | Rating (1-6) |
|---|---|---|
| 1. Students will develop an understanding of the multifaceted and generic nature of engineering problem solving and design. | c(*), e(*), i(*) | 5 |
| 2. Students will learn how to apply a structured engineering problem-solving and design process consisting of several steps. | c(*), e(*) | 6 |
| 3. Students will gain proficiency in using selected elements and skills common to engineering problem solving and design. | a(*) | 6 |
| 4. Students will improve their communication skills through oral and written reports. | g(*) | 6 |
| 5. Students will solve open-ended problems working in teams. | d(*) | 6 |
| 6. Students will learn to use several engineering software "tools" useful in problem solving. | k(*) | 6 |
| 7. Students will be able to identify and describe selected engineering systems and subsystems, and apply the appropriate fundamentals and unifying concepts to solve problems. | | 5 |
| 8. Students will learn the basic elements of acceptable graphical presentation and analysis of data. | g(*), b(*) | 6 |
| 9. Students will recognize the importance of economic considerations in the design process and will be able to apply basic economic relationships in making decisions. | e(*) | 6 |
| 10. Students will become aware of the role of life-cycle assessment as part of a design process. | h(*) | 4 |

Notes:
1. Select Course Learning Goals that directly contribute to Program Outcomes.
2. Rating 1 is lowest and 6 is highest (as with the ACE form).
3. The rating is based on the instructor's overall impression of class performance in exams, homework, projects, etc.
4. (*) denotes a moderate contribution to the outcome; (**) denotes a substantial contribution to the outcome.

ABET Outcomes (a)-(k) (used for core-course assessments)

Engineering graduates will have the following attributes:
- (a) an ability to apply knowledge of mathematics, science, and engineering;
- (b) an ability to design and conduct experiments, as well as to analyze and interpret data;
- (c) an ability to design a system, component, or process to meet desired needs;
- (d) an ability to function on multidisciplinary teams;
- (e) an ability to identify, formulate, and solve engineering problems;
- (f) an understanding of professional and ethical responsibility;
- (g) an ability to communicate effectively in oral (o), written (w), and graphical (g) forms;
- (h) the broad education necessary to understand the impact of engineering solutions in a global and societal context;
- (i) a recognition of the need for, and an ability to engage in, lifelong learning;
- (j) a knowledge of contemporary issues;
- (k) an ability to use the techniques, skills, and modern engineering tools necessary for successful engineering practice.

Comments and Changes in the Course from Previous Years (course content is provided in the appendix associated with this course)

Students are more sophisticated with computer tools than in previous years. For 4 years we have offered a series of evening workshops on using Excel, and each year we have noticed that students' need for these workshops declines; this year only about half of the students completed the optional Excel workshop. Students' Excel skills are still not very high, however, and many students showed improvement in their Excel skills after completing the course. This was assessed through homework and the pre- and post-test.

The life-cycle/sustainability section was revised. The material is no longer presented as a stand-alone section; instead, more examples and problems use sustainability as their context.

We made renewed attempts to bring research into the classroom. This year we used ongoing work on fragrance compounds in the Great Lakes to illustrate the utility of mass balances. We also used rainfall data to illustrate strategies for plotting complex data and developing mathematical models.

We introduced MATLAB for the first time this year. We added it to the curriculum because students are becoming more competent with Excel and other software, and to link EPS1 with EPSII, where MATLAB is used extensively.

We also added a pre-course and post-course assessment this year. The test was administered online through our course web site.

Assessment Results and Consequent Actions Taken:

ACE/EASY comments indicate considerable diversity in how students perceived the course. Some students were comfortable with and pleased by the content. Others were dissatisfied with the lecture style, the time commitment required, and the difficulty of the exams.
Several students mentioned that the lecture and project parts of the course are not well integrated. Several students commented that the course was too easy; others said it was too hard and that there was too much homework. The ACE/EASY scores for the lecture portion of the course were good to very good (4-5 out of 6) for the instructors and average (3 out of 6) for the textbook. These scores are typical for large undergraduate courses. Other factors surveyed this year, including facilities, communication, and course organization, were similarly rated good to very good.

Table 1. Sample ACE/EASY scores (1 = poor, 6 = excellent)

| Selected EASY/ACE question | Mean, Section A | Median, Section A | Mean, Section B | Median, Section B | Mean, Section C | Median, Section C |
|---|---|---|---|---|---|---|
| Instructor was effective | 3.83 | 4.00 | 4.40 | 5.00 | 4.48 | 5.00 |
| Textbook was helpful | 2.88 | 3.00 | 2.82 | 2.50 | 2.93 | 3.00 |

This year we used an online pre- and post-test to assess course learning. Both tests were administered through ICON, the password-protected software package provided by the University of Iowa. The pre-test included 12 questions, two of which were eliminated from the post-test. The topics of the questions are summarized in Table 3, and the questions themselves are included in the appendix of this report. The questions covered the major topics of the course, except Questions 11 and 12. Because these two questions were not related to a particular topic or course outcome, they were eliminated from the post-test. Most questions were multiple choice, indicated by M-S in Table 3. Questions 9 and 11 required a calculated input, and Question 8 was a survey item that had no wrong answer (“Have you used Matlab before?”). All the questions were mapped to course outcomes on the accompanying Course Outcomes Worksheet (COW) for this course.

Table 3. Topics for each question on the pre-test. The post-test included the same questions but omitted Questions 11 and 12.
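The pre/post comparison rests on simple per-question arithmetic: the percentage of students who answered each question correctly on each test, restricted to questions that are graded and appear on both tests (Questions 11 and 12 were dropped from the post-test, and Question 8 was a survey item with no wrong answer). The Python below is a minimal sketch of that bookkeeping. It assumes a hypothetical data layout in which each student's responses are a dictionary mapping question number to correct/incorrect. It is not ICON's export format or the grading procedure actually used for this course, and the sample data are invented toy values, not the Fall 2006 results.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical layout (not ICON's export format): one dict per student,
# mapping question number -> True if answered correctly, False otherwise.
Responses = List[Dict[int, bool]]

# Question 8 ("Have you used Matlab before?") had no wrong answer, so it is not scored.
UNGRADED: Set[int] = {8}


def percent_correct(responses: Responses, question: int) -> float:
    """Percentage of students answering `question` correctly; students with no entry are skipped."""
    answers = [r[question] for r in responses if question in r]
    if not answers:
        raise ValueError(f"no responses recorded for question {question}")
    return 100.0 * sum(answers) / len(answers)


def compare_pre_post(pre: Responses, post: Responses) -> Dict[int, Tuple[float, float, float]]:
    """Map each graded question present on both tests to (pre %, post %, change in points)."""
    pre_questions = {q for r in pre for q in r}
    post_questions = {q for r in post for q in r}
    # Questions dropped from the post-test (e.g. 11 and 12) fall out of the intersection.
    shared = sorted((pre_questions & post_questions) - UNGRADED)
    results = {}
    for q in shared:
        before = percent_correct(pre, q)
        after = percent_correct(post, q)
        results[q] = (before, after, after - before)
    return results


if __name__ == "__main__":
    # Toy data for two students; NOT the Fall 2006 results.
    pre = [{4: False, 5: True, 11: True}, {4: False, 5: False, 11: False}]
    post = [{4: True, 5: True}, {4: False, 5: True}]
    for q, (before, after, change) in compare_pre_post(pre, post).items():
        print(f"Q{q}: {before:.0f}% -> {after:.0f}% ({change:+.0f} points)")
```

Reporting the change in percentage points, rather than a ratio, keeps the comparison meaningful even when a pre-test score is near zero, as it was for the energy-balance question.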
The pre- and post-tests do not substitute for the detailed evaluations completed for the two midterm exams given in class. These exams are not multiple choice and require a clear, problem-based approach. Examples of the midterm exams are in the appendix for this course.

The overall results of the pre- and post-test are shown below.

Figure 1. General results for EPS1 Pretest, Fall 2006.

Figure 2. General results for EPS1 Post-test, Fall 2006.

Students performed better on every question in the post-test than in the pre-test. The percent correct is color coded in the figures above: blue bars indicate questions that more than 90% of students answered correctly, green bars 80-90%, yellow bars 70-80%, and orange bars 60-70%.

The largest improvement was observed for Question 4, which was on the topic of energy balances (“Can there be two different but correct outcomes of an energy balance on the production of ethanol?”). We had included a lecture on energy balances with ethanol as the subject, using ethanol energy balances to demonstrate the importance of system boundaries, definitions, and assumptions. Students clearly learned that these factors strongly affect the outcome. In the pre-test, many students answered “Yes, it depends on political leanings,” and only 3% gave a correct answer. In the post-test, many more students (77%) answered “Yes, it depends on whether the energy tracked is foreign oil, fossil fuel, or total energy” or “Yes, it depends on whether the boundary of the system is defined by the property line of the ethanol plant or the state of Iowa.” Both of these answers were graded as correct, while the political-leanings answer was graded as incorrect.

Question 5 concerned engineering economics.
Students were asked to determine what data were needed to compare the costs of two projects with different capital, salvage, and operating costs. Students demonstrated learning in this area: 32% of students gave correct answers on the pre-test and 78% on the post-test.

Questions 9 and 10 required students to interpret growth data from a semi-log plot of bacteria versus time. Question 9 asked students to read a point on the graph; success on this question improved from 36% to 83%. Question 10 required students to classify the bacterial growth as linear, exponential, or following a power law. Before the course, only 44% of students answered correctly; after the course, 88% did.

Recommended Changes to the Course Goals and Assessment Methods:

Although it does not provide the level of detail that the two midterms and the homework provide, the pre- and post-test exercise gave the instructors very useful data. 2006 was the first time this strategy was used to assess course learning outcomes. We can see that learning improved in all areas. However, three questions were answered correctly by fewer than 70% of students. These areas included the difference between constraints and criteria in engineering problem solving and the importance of boundaries and assumptions in energy balances. The latter is of less concern because pre-test performance on it was very poor (3% correct). The definition of constraints and criteria should be reiterated throughout the course. In the past, it has been discussed only at the beginning of the course and apparently was not retained well.

There continues to be a challenge in integrating the lecture and project portions of the class. The most common area of integration is engineering economics, which is covered in all the projects and in detail in the lecture. Other topics are covered in some of the projects but not all.
The major challenge is finding time for the professors to meet.

Questions Administered in the Pre- and Post-Test for EPS1 (59:005, Fall 2006)