CS 345 Ch 4. Algorithmic Methods Spring, 2015


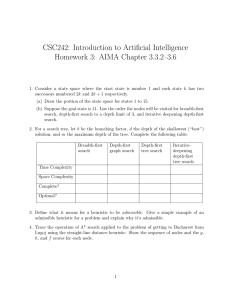

Misconceptions about CS

Misconception 1: Computer Science is the study of computers.
“Computer Science is no more about computers than astronomy is about telescopes, biology is about microscopes, or chemistry is about beakers and test tubes. Science is not about tools. It is about how we use them and what we find out when we do.” Michael Fellows and Ian Parberry in Computing Research News

Misconception 2: Computer Science is the study of the uses and applications of computers and software.
Learning to use a software package is no more a part of computer science than driver education is a branch of automotive engineering. A wide range of people use computer software, but it is the computer scientist who is responsible for specifying, designing, building, and testing software packages and the computer systems on which they run.

Misconception 3: Computer Science is the study of how to write computer programs.
In computer science, it is not only the construction of a high-quality program that is important but also the methods it embodies, the services it provides, and the results it produces. One can become so enmeshed in writing code and getting it to run that one forgets a program is only a means to an end, not an end in itself.

A Definition of CS

Computer Science is the study of algorithms, including their
1. Formal and mathematical properties
2. Hardware realizations
3. Linguistic realizations
4. Applications
Norman E. Gibbs and Allen B. Tucker, “A Model Curriculum for a Liberal Arts Degree in Computer Science,” Communications of the ACM, 29(3), (1986): 204.

Algorithm

• Derived from the name of a Persian mathematician: Mohammed ibn Musa al Khowarizmi (c. 825 A.D.).
• A procedure for solving a mathematical problem in a finite number of steps that frequently involves repetition of an operation; broadly: a step-by-step method for accomplishing some task.

Expressing Algorithm

• Pseudocode
  – Is there a “standard” pseudocode?
• Flowchart

Traversing a Data Structure

• Many times a problem can be represented by some data structure, and the solution to the problem is found by traversing the data structure.
• Think of finding the maximum or minimum of a list of values.

Graphs and Trees

• A common data structure for representing a problem is a graph. Recall that a tree is a special type of graph: a connected graph with no cycles. (A rooted tree with its edges directed away from the root is also a special case of a directed acyclic graph, or DAG.)
• Graphs have two basic forms of traversal: breadth-first and depth-first.

BFTs and DFTs

• When a general graph is traversed in a breadth-first or a depth-first manner, the nodes of the graph and the edges that were traversed form a tree.
• This tree is called either the Breadth-First Tree (BFT), sometimes the Breadth-First Search Tree, or the Depth-First Tree (DFT), also called the Depth-First Search Tree.

Depth-First Tree

DFT( Node S )
    mark S
    for each node X adjacent to S that is not marked do
        visit X
        DFT( X )

Breadth-First Tree

BFT( Node S )
    mark S
    put S in a queue
    while the queue is not empty do
        remove node W from the queue
        for each node X adjacent to W that is not marked do
            mark X
            visit X
            put X in the queue

[Figure: diagram with nodes A, B, C, D, E, F]

Tree Traversals

• Depth-First
  – Pre Order
  – In Order
  – Post Order
• Breadth-First
  – Level Order
• Data Structures and Space Requirements

Some Uses Of Traversals

• Binary Search Tree and In Order Traversal
• Expression Tree and:
  – Pre Order: Prefix notation
  – Post Order: Postfix notation
  – In Order: Infix notation
• State Space Search
  – Examples: Water-Jug problem, 16-Puzzle, and Stack Permutation.

Backtracking

• A very general technique that also traverses a tree. The traversal is a depth-first traversal.
• Used with problems that deal with a set of solutions or an optimal solution satisfying some constraints.
• Because it is depth-first, backtracking is often implemented recursively.
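Since backtracking rests on depth-first traversal, it may help to see the DFT and BFT pseudocode from the traversal slides in runnable form. The following is a minimal Python sketch, not the course's own code. The adjacency list `GRAPH` and the function names `dft` and `bft` are assumptions made for illustration; the edges of the original figure are not available, so the edges here are invented.

```python
from collections import deque

# Hypothetical undirected graph as an adjacency list (edges are invented).
GRAPH = {
    "A": ["B", "C"],
    "B": ["A", "D", "E"],
    "C": ["A", "F"],
    "D": ["B"],
    "E": ["B", "F"],
    "F": ["C", "E"],
}


def dft(graph, s, marked=None, order=None):
    """Recursive depth-first traversal; returns nodes in visit order."""
    if marked is None:
        marked, order = set(), [s]  # the start node counts as visited first
    marked.add(s)                   # mark S
    for x in graph[s]:
        if x not in marked:
            order.append(x)         # visit X
            dft(graph, x, marked, order)
    return order


def bft(graph, s):
    """Breadth-first traversal using a queue; returns nodes in visit order."""
    marked, order = {s}, [s]        # mark S
    queue = deque([s])              # put S in a queue
    while queue:
        w = queue.popleft()         # remove node W from the queue
        for x in graph[w]:
            if x not in marked:
                marked.add(x)       # mark X
                order.append(x)     # visit X
                queue.append(x)     # put X in the queue
    return order


if __name__ == "__main__":
    print(dft(GRAPH, "A"))  # ['A', 'B', 'D', 'E', 'F', 'C']
    print(bft(GRAPH, "A"))  # ['A', 'B', 'C', 'D', 'E', 'F']
```

The recursive version keeps its pending work on the call stack, while the breadth-first version keeps it in an explicit queue; that difference is what drives the space requirements of the two traversals.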
Backtracking II

• Solutions are often expressed as an n-tuple (x1, …, xn), where each xi is chosen from a finite set Si.
• When a node is reached that cannot be part of a solution, the remainder of the branch is pruned: the algorithm backs up to the parent node and continues with the next untried choice.
• A similar method that traverses the tree in a breadth-first manner is called Branch and Bound.

Examples of Backtracking

• N-Queens problem
• Sum of Subsets problem
• Knight’s Tour problem

Divide And Conquer

• Divide and Conquer means to break a problem into two or more parts, solve each part separately, and merge the results together.
• When can divide-and-conquer be used?

Divide and Conquer II

Divide and Conquer can be used when:
(1) There is a natural way of dividing the problem into parts.
(2) There is a natural way of merging the solutions to the divided problem.
(3) The run-time order of the solution to the problem is on the order of N^2 or higher.

Examples of Divide and Conquer

• Bubble Sort of 1,000 elements
• Merge Sort
• Maximum Subsequence Sum

Greedy Algorithms

• Often apply to situations in which the problem can be viewed as a sequence of decisions or choices.
• Develop an approach in which we choose, at each step, the “most attractive” choice.
• Advantages: Simple and natural algorithms.
• Disadvantage: Does not work in every situation.

Greedy Properties

• Greedy algorithms do not work in all cases. It can be very difficult to tell when a greedy approach works.
• There are two key properties a problem must exhibit:
  – Greedy-Choice Property
  – Optimal Substructure Property

Greedy Choice Property

A globally optimal solution can be arrived at by making a locally optimal choice.

Optimal Substructure Property

A problem exhibits optimal substructure if an optimal solution to the problem contains within it optimal solutions to subproblems.
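The warning that greedy algorithms do not work in all cases can be made concrete with coin change. The sketch below is a hedged Python illustration, not taken from the course materials. The function name `greedy_change` and both coin lists are assumptions; in particular `MADE_UP_COINS` is an invented coin system chosen so that the greedy-choice property fails.

```python
def greedy_change(amount, denominations):
    """Make change by always taking the largest coin that still fits."""
    coins = []
    for coin in sorted(denominations, reverse=True):
        while amount >= coin:       # locally "most attractive" choice
            amount -= coin
            coins.append(coin)
    if amount != 0:
        raise ValueError("amount cannot be made with these denominations")
    return coins


US_COINS = [1, 5, 10, 25]
MADE_UP_COINS = [1, 4, 5]  # hypothetical coin system, invented for this example

if __name__ == "__main__":
    print(greedy_change(30, US_COINS))      # [25, 5]
    print(greedy_change(8, MADE_UP_COINS))  # [5, 1, 1, 1]
```

With the US coins the greedy answer for 30 uses two coins, which is the minimum. With the invented system the greedy answer for 8 uses four coins, while 4 + 4 needs only two, so the locally optimal choice does not lead to a globally optimal solution there.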
Example of optimal substructure: Single Source Shortest Path

Examples of Greedy Method

• Minimum coin problem
  – US Coins
  – Slobovian Coins
• Knapsack problem
  – 0/1 (integer) version
  – fractional (continuous) version
• Lazy Contractor (pp. 87-89)
  – Prim’s algorithm

Dynamic Programming

• Overlapping Subproblems
  – As in Divide-and-Conquer, we want to divide the problem into subproblems.
  – Pascal’s Triangle and the Binomial Theorem
  – Divide and Conquer does more work than necessary to solve this problem.
• Weary Traveler Problem (p. 89)
  – Rather than a minimum spanning tree (lazy contractor), the weary traveler is looking for a minimum path.
  – A Greedy solution does not solve this problem because a Greedy method cannot “look ahead”.

Quote from page 91

“Dynamic planning can be thought of as divide-and-conquer taken to the limit: all subproblems are solved in an order of increasing magnitude, and the results are stored in some data structure to facilitate easy solutions to larger ones.”

Steps in a Dynamic Solution

1. View the problem in terms of subproblems.
2. Devise a recursive solution.
3. Replace the recursion with a table and compute an optimal solution in a bottom-up fashion.

Examples of Dynamic Programming

• Slobovian Coin Problem
• 0/1 Knapsack Problem
• Longest Common Subsequence Problem

Memoized Solution

• A memoized solution is a cross between a dynamic programming solution and a recursive solution.
• It uses the control structure of recursion, but records the result of each recursive call in an array. This way, each subproblem is calculated only once.
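The difference between a memoized solution and a bottom-up table can be sketched with binomial coefficients, using the Pascal's Triangle recurrence C(n, k) = C(n-1, k-1) + C(n-1, k). This is a minimal Python illustration under stated assumptions: the function names `binomial_memo` and `binomial_table` are made up for this example, a dictionary stands in for the array, and both functions assume 0 <= k <= n.

```python
def binomial_memo(n, k, table=None):
    """Top-down: recursive control structure, each result recorded once."""
    if table is None:
        table = {}                  # dictionary standing in for the array
    if k == 0 or k == n:
        return 1                    # edges of Pascal's Triangle
    if (n, k) not in table:         # compute each subproblem only once
        table[(n, k)] = (binomial_memo(n - 1, k - 1, table)
                         + binomial_memo(n - 1, k, table))
    return table[(n, k)]


def binomial_table(n, k):
    """Bottom-up: fill Pascal's Triangle row by row, smallest rows first."""
    rows = [[1] * (i + 1) for i in range(n + 1)]
    for i in range(2, n + 1):
        for j in range(1, i):
            rows[i][j] = rows[i - 1][j - 1] + rows[i - 1][j]
    return rows[n][k]


if __name__ == "__main__":
    print(binomial_memo(5, 2))    # 10
    print(binomial_table(10, 3))  # 120
```

A plain recursive version without the table recomputes the same entries many times, which is the extra work the Overlapping Subproblems slide attributes to Divide and Conquer. The memoized version fills only the entries the recursion actually reaches, while the bottom-up version fills every row up to n.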