Sampling Distribution of Means: Basic Theorems

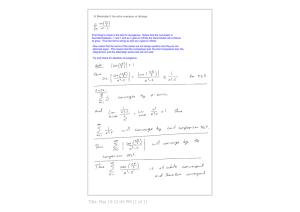

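1. $M_X$ is an unbiased estimate of $\mu_X$.

Consider $N$ samples, each consisting of 2 observations sampled at random from a single population with mean $\mu_X$ and variance $\sigma_X^2$. $X_{ij}$ is the $i$-th observation in the $j$-th sample. $T_{.j}$ is the sum (total) of the 2 observations in the $j$-th sample.

| Sample | Observation 1 | Observation 2 | $T$ |
|---|---|---|---|
| 1 | $X_{11}$ | $X_{21}$ | $T_{.1}$ |
| 2 | $X_{12}$ | $X_{22}$ | $T_{.2}$ |
| ... | ... | ... | ... |
| $j$ | $X_{1j}$ | $X_{2j}$ | $T_{.j}$ |
| ... | ... | ... | ... |
| $N$ | $X_{1N}$ | $X_{2N}$ | $T_{.N}$ |

$$\mu_{X_{1.}} = \sum_j X_{1j}/N = E(X_{1.}) \quad\text{and}\quad \mu_{X_{2.}} = \sum_j X_{2j}/N = E(X_{2.})$$

$$\mu_T = \sum_j T_{.j}/N = \sum_j (X_{1j} + X_{2j})/N = \sum_j X_{1j}/N + \sum_j X_{2j}/N = \mu_{X_{1.}} + \mu_{X_{2.}}$$

$$E(T_{.j}) = E(X_{1.}) + E(X_{2.})$$

Or, more generally, for samples of $n$ observations:

$$\mu_{(X_1 + X_2 + \dots + X_i + \dots + X_n)} = \mu_{X_{1.}} + \mu_{X_{2.}} + \dots + \mu_{X_{i.}} + \dots + \mu_{X_{n.}}$$

$$E(X_1 + X_2 + \dots + X_i + \dots + X_n) = E(X_1) + E(X_2) + \dots + E(X_i) + \dots + E(X_n)$$

As $N$ approaches infinity, the distribution of the $i$-th observation over repeated random samples approaches the distribution of the population from which the $i$-th observation was drawn. If all $X_i$ are sampled from the same population, then $\mu_{X_{i.}}$ is a constant for all $i$, namely $\mu_X$. Therefore

$$\mu_T = n\mu_X, \qquad \mu_{T/n} = \mu_T/n = n\mu_X/n = \mu_X.$$

Since $T_{.j}/n = M_{.j}$,

$$\mu_M = \mu_X, \quad\text{or}\quad E(M_{.j}) = E(X_{ij}) = \mu_X.$$

Q.E.D.

2. The variance of the sampling distribution of means for random and independent samples of size $n$ is given by the variance of the population from which the samples were drawn, divided by the sample size: $\sigma_M^2 = \sigma_X^2/n$.

Note that

$$\sigma_T^2 = \sum_j (T_{.j} - \mu_T)^2/N.$$

For samples of size 2:

$$T_{.j} - \mu_T = (X_{1j} + X_{2j}) - (\mu_{X_{1.}} + \mu_{X_{2.}}) = (X_{1j} - \mu_{X_{1.}}) + (X_{2j} - \mu_{X_{2.}}) = \hat{x}_{1j} + \hat{x}_{2j}$$

$$\sigma_T^2 = \sum_j (\hat{x}_{1j} + \hat{x}_{2j})^2/N = \sum_j (\hat{x}_{1j}^2 + 2\hat{x}_{1j}\hat{x}_{2j} + \hat{x}_{2j}^2)/N = \sigma_{X_{1.}}^2 + 2\sum_j \hat{x}_{1j}\hat{x}_{2j}/N + \sigma_{X_{2.}}^2$$

and

$$\rho_{12} = \frac{\sum_j \hat{x}_{1j}\hat{x}_{2j}}{\sqrt{\sum_j \hat{x}_{1j}^2 \, \sum_j \hat{x}_{2j}^2}}.$$

If $X_{1j}$ is independent of $X_{2j}$, then $\rho_{12} = 0$ and $\sum_j \hat{x}_{1j}\hat{x}_{2j} = 0$, so

$$\sigma_T^2 = \sigma_{X_{1.}}^2 + \sigma_{X_{2.}}^2.$$

Or, more generally, if all $X_{ij}$ are independent, for random samples of $n$ observations:

$$\sigma^2_{(X_{1.} + X_{2.} + \dots + X_{i.} + \dots + X_{n.})} = \sigma_{X_{1.}}^2 + \sigma_{X_{2.}}^2 + \dots + \sigma_{X_{i.}}^2 + \dots + \sigma_{X_{n.}}^2$$

As $N$ approaches infinity, the distribution of the $i$-th observation over repeated random samples approaches the distribution of the population from which the $i$-th observation was drawn. If all $X_{ij}$ are sampled from the same population, then $\sigma_{X_{i.}}^2$ is a constant for all $i$, namely $\sigma_X^2$. Therefore

$$\sigma_T^2 = n\sigma_X^2, \qquad \sigma_{T/n}^2 = \sigma_T^2/n^2 = n\sigma_X^2/n^2 = \sigma_X^2/n,$$

and, since $T_{.j}/n = M_{.j}$,

$$\sigma_M^2 = \sigma_X^2/n.$$

Q.E.D.

3. $\hat{s}_X^2 = \sum_i (X_i - M_X)^2/(n-1)$ is an unbiased estimate of $\sigma_X^2$.

Here $s_X^2$ denotes the sample variance computed with divisor $n$, $\hat{s}_X^2$ denotes the version with divisor $n-1$, and $x_i = X_i - M_X$ is the deviation of an observation from its sample mean.

Consider the following data matrix.

| Sample | Observation 1 | Observation 2 | ... | Observation $i$ | ... | Observation $n$ | $M$ |
|---|---|---|---|---|---|---|---|
| 1 | $X_{11}$ | $X_{21}$ | ... | $X_{i1}$ | ... | $X_{n1}$ | $M_{.1}$ |
| 2 | $X_{12}$ | $X_{22}$ | ... | $X_{i2}$ | ... | $X_{n2}$ | $M_{.2}$ |
| ... | ... | ... | ... | ... | ... | ... | ... |
| $j$ | $X_{1j}$ | $X_{2j}$ | ... | $X_{ij}$ | ... | $X_{nj}$ | $M_{.j}$ |
| ... | ... | ... | ... | ... | ... | ... | ... |
| $N$ | $X_{1N}$ | $X_{2N}$ | ... | $X_{iN}$ | ... | $X_{nN}$ | $M_{.N}$ |
| Mean | $\mu_{X_{1.}}$ | $\mu_{X_{2.}}$ | ... | $\mu_{X_{i.}}$ | ... | $\mu_{X_{n.}}$ | $\mu_{..}$ |

Note that

$$\mu_{..} = \sum_j \sum_i X_{ij}/nN = \sum_j M_{.j}/N = \sum_i \mu_{X_{i.}}/n = \mu_X.$$

To obtain the mean squared deviation of each observation in the data matrix about the grand mean of all observations, we may proceed as follows, starting with the first sample:

$$X_{i1} - \mu_{..} = X_{i1} - M_{.1} + M_{.1} - \mu_{..} = (X_{i1} - M_{.1}) + (M_{.1} - \mu_{..})$$

$$(X_{i1} - \mu_{..})^2 = (X_{i1} - M_{.1})^2 + 2(X_{i1} - M_{.1})(M_{.1} - \mu_{..}) + (M_{.1} - \mu_{..})^2$$

$$\sum_i (X_{i1} - \mu_{..})^2 = \sum_i (X_{i1} - M_{.1})^2 + 2(M_{.1} - \mu_{..})\sum_i (X_{i1} - M_{.1}) + n(M_{.1} - \mu_{..})^2$$

The middle term vanishes because the deviations of the observations about their own sample mean sum to zero. Dividing by $n$:

$$\sum_i (X_{i1} - \mu_{..})^2/n = s_{.1}^2 + (M_{.1} - \mu_{..})^2$$

Summing over all $N$ samples:

$$\sum_j \sum_i (X_{ij} - \mu_{..})^2/n = \sum_j s_{.j}^2 + \sum_j (M_{.j} - \mu_{..})^2$$

$$\sum_j \sum_i (X_{ij} - \mu_{..})^2/nN = \sum_j s_{.j}^2/N + \sum_j (M_{.j} - \mu_{..})^2/N$$

As $N$ approaches infinity, the distribution of all $nN$ observations in the data matrix approaches the distribution of the population from which each $X_{ij}$ was sampled, so

$$\sum_j \sum_i (X_{ij} - \mu_{..})^2/nN = \sigma_X^2$$

and

$$\sigma_X^2 = \sum_j s_{.j}^2/N + \sum_j (M_{.j} - \mu_{..})^2/N = \mu_{s^2} + \sigma_M^2.$$

Hence

$$E(s_X^2) = \mu_{s^2} = \sigma_X^2 - \sigma_M^2 \quad\text{and}\quad \sigma_M^2 = \sigma_X^2/n \ \text{(Theorem 2)},$$

$$E(s_X^2) = \sigma_X^2 - \sigma_X^2/n = \frac{n-1}{n}\,\sigma_X^2, \quad\text{or}\quad E\!\left[\left(\frac{n}{n-1}\right)s_X^2\right] = \sigma_X^2.$$

Since

$$s_X^2 = \sum_i (X_i - M_X)^2/n = \sum_i x_i^2/n,$$

we have

$$\left(\frac{n}{n-1}\right)s_X^2 = \left(\frac{n}{n-1}\right)\sum_i x_i^2/n = \sum_i x_i^2/(n-1) = \hat{s}_X^2$$

and

$$E(\hat{s}_X^2) = \sigma_X^2.$$

4. From Theorems 2 and 3 it follows that

$$\hat{s}_M^2 = \hat{s}_X^2/n$$

is an unbiased estimate of $\sigma_M^2$ (with $n-1$ degrees of freedom). Note that:

$$\hat{s}_M^2 = \hat{s}_X^2/n = \sum_i x_i^2/[n(n-1)]$$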
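The four theorems above can be checked numerically. The following is a minimal simulation sketch, not part of the original handout: it assumes a normal population (the theorems do not require normality), and the function name `simulate`, its parameters, and the default values are all illustrative choices. It draws N random samples of size n and compares the long-run averages with the quantities the theorems predict.

```python
import random
import statistics


def simulate(n=5, N=20000, mu=10.0, sigma=2.0, seed=0):
    """Draw N random samples of size n and summarize them.

    Assumption: the population is normal with mean mu and standard
    deviation sigma. All names here are hypothetical, chosen for
    illustration only.
    """
    rng = random.Random(seed)
    means = []          # M_.j for each sample
    biased_vars = []    # sum of squared deviations / n
    unbiased_vars = []  # sum of squared deviations / (n - 1)
    for _ in range(N):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        m = sum(sample) / n
        ss = sum((x - m) ** 2 for x in sample)
        means.append(m)
        biased_vars.append(ss / n)
        unbiased_vars.append(ss / (n - 1))
    return {
        # Theorem 1: should be close to mu
        "mean_of_means": statistics.fmean(means),
        # Theorem 2: should be close to sigma**2 / n
        "var_of_means": statistics.pvariance(means),
        # Theorem 3: divisor n gives about (n - 1) / n * sigma**2
        "mean_biased_var": statistics.fmean(biased_vars),
        # Theorem 3: divisor n - 1 gives about sigma**2
        "mean_unbiased_var": statistics.fmean(unbiased_vars),
        # Theorem 4: the average estimate is about sigma**2 / n
        "mean_est_var_of_mean": statistics.fmean(
            [v / n for v in unbiased_vars]
        ),
    }


if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name}: {value:.4f}")
```

With the defaults (population variance 4 and n = 5), the theorems predict values near 10, 0.8, 3.2, 4 and 0.8 respectively. The simulated figures fluctuate around these targets and get closer as N grows.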