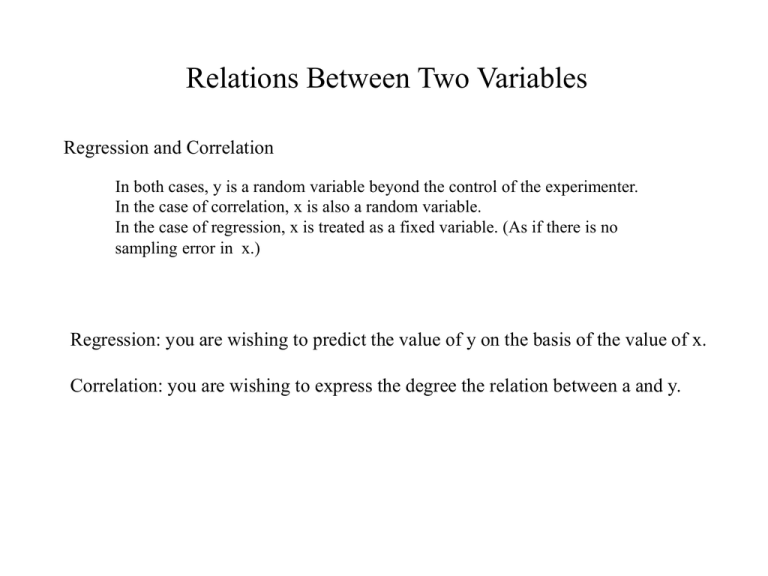

Relations Between Two Variables: Regression and Correlation

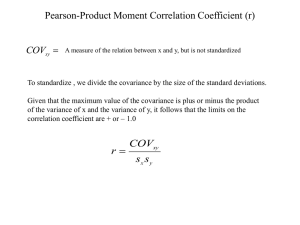

In both cases, y is a random variable beyond the control of the experimenter.

- In the case of correlation, x is also a random variable.
- In the case of regression, x is treated as a fixed variable (as if there were no sampling error in x).

Regression: you wish to predict the value of y on the basis of the value of x.

Correlation: you wish to express the degree of the relation between x and y.

Scatter Diagram or Scatter Plot

- X axis (abscissa) = predictor variable
- Y axis (ordinate) = criterion variable

[Figure: example scatter plots labeled Positive, Negative, Perfect, and None.]

Covariance

$COV_{xy}$ is a number reflecting the degree to which two variables vary or change in value together.

$$COV_{xy} = \frac{\sum (x - \bar{x})(y - \bar{y})}{n - 1}$$

where n = the number of xy pairs.

Consider an example of collecting RT (x) and error (y) scores. The d score is a score's deviation from its mean.

- If a subject is slow (high x) and accurate (low y), then the d score for x will be positive and the d score for y will be negative; their product will be negative.
- If a subject is slow (high x) and inaccurate (high y), then the d score for x will be positive and the d score for y will be positive; their product will be positive.
- If a subject is fast (low x) and accurate (low y), then the d score for x will be negative and the d score for y will be negative; their product will be positive.
- If a subject is fast (low x) and inaccurate (high y), then the d score for x will be negative and the d score for y will be positive; their product will be negative.

Illustrative Trends

| Set | Sub. | $x$ | $(x - \bar{x})$ | $y$ | $(y - \bar{y})$ | $(x - \bar{x})(y - \bar{y})$ |
|---|---|---|---|---|---|---|
| 1 | 1 | 100 | -200 | 20 | 10 | -2000 |
| 1 | 2 | 200 | -100 | 15 | 5 | -500 |
| 1 | 3 | 300 | 0 | 10 | 0 | 0 |
| 1 | 4 | 400 | 100 | 5 | -5 | -500 |
| 1 | 5 | 500 | 200 | 0 | -10 | -2000 |
| 2 | 1 | 100 | -200 | 0 | -10 | 2000 |
| 2 | 2 | 200 | -100 | 5 | -5 | 500 |
| 2 | 3 | 300 | 0 | 10 | 0 | 0 |
| 2 | 4 | 400 | 100 | 15 | 5 | 500 |
| 2 | 5 | 500 | 200 | 20 | 10 | 2000 |
| 3 | 1 | 100 | -200 | 10 | 0 | 0 |
| 3 | 2 | 200 | -100 | 5 | -5 | 500 |
| 3 | 3 | 300 | 0 | 20 | 10 | 0 |
| 3 | 4 | 400 | 100 | 5 | -5 | -500 |
| 3 | 5 | 500 | 200 | 10 | 0 | 0 |

- Set 1: Total = -5000. Those subjects who are fast make more errors.
- Set 2: Total = 5000. Those subjects who are fast make fewer errors.
- Set 3: Total = 0. There is no trend.
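The cross-product totals for the three illustrative RT and error data sets can be checked with a short Python sketch. The function and variable names (`cross_product_total`, `covariance`, `rt`, `error_sets`) are illustrative choices, not part of the original slides.

```python
def cross_product_total(xs, ys):
    """Sum of (x - mean_x) * (y - mean_y) over all xy pairs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))


def covariance(xs, ys):
    """Sample covariance: the cross-product total divided by n - 1."""
    return cross_product_total(xs, ys) / (len(xs) - 1)


# RT scores (x) shared by all three illustrative data sets.
rt = [100, 200, 300, 400, 500]

# Error scores (y) for each data set, keyed by the trend they illustrate.
error_sets = {
    "fast subjects make more errors": [20, 15, 10, 5, 0],
    "fast subjects make fewer errors": [0, 5, 10, 15, 20],
    "no trend": [10, 5, 20, 5, 10],
}

for label, errors in error_sets.items():
    total = cross_product_total(rt, errors)
    print(f"{label}: total = {total}, covariance = {covariance(rt, errors)}")
```

The sign of the total carries the direction of the relation; dividing by n - 1 turns the total into the covariance.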
The cross products of the third data set total 0.

[Figure: scatter plots of the data from the previous page; x axis from 100 to 500.]

We can see a trend after all.

Scale Issues

The same scores with y expressed in minutes and in seconds:

| $x$ | $(x - \bar{x})$ | $y$ (Min.) | $(y - \bar{y})$ | $(x - \bar{x})(y - \bar{y})$ | $y$ (Sec.) | $(y - \bar{y})$ | $(x - \bar{x})(y - \bar{y})$ |
|---|---|---|---|---|---|---|---|
| 1 | -4 | 5 | -8 | 32 | 300 | -480 | 1920 |
| 3 | -2 | 13 | 0 | 0 | 780 | 0 | 0 |
| 5 | 0 | 9 | -4 | 0 | 540 | -240 | 0 |
| 7 | 2 | 17 | 4 | 8 | 1020 | 240 | 480 |
| 9 | 4 | 21 | 8 | 32 | 1260 | 480 | 1920 |

Total = 72 (minutes); Total = 4320 (seconds).

| Sub | X | Y |
|---|---|---|
| 1 | 2 | 10 |
| 2 | 3 | 12 |
| 3 | 2 | 12 |
| 4 | 4 | 15 |
| 5 | 4 | 12 |

$$COV_{xy} = \frac{\sum (x - \bar{x})(y - \bar{y})}{n - 1}$$

What is the covariance?

The absolute value of the covariance is a function of the variance of x and the variance of y. Thus, a given covariance could reflect a strong relation when the two variances are small, but a weak relation when the variances are large.

Linear Relation

A linear relation is one in which the relation can be most accurately represented by a straight line.

Remember a linear transformation:

$$x_{new} = c_1 (x_{old}) + c_2$$

The general equation for a straight line:

$$y = bx + a$$

(a is the y intercept and b is the slope of the line.)

$$b = \frac{\Delta y}{\Delta x} = \frac{y_2 - y_1}{x_2 - x_1} = \frac{3 - 2}{3 - 1} = \frac{1}{2} = .5$$

$a = 1.5$

If x = 8, then y = .5(8) + 1.5 = 5.5.

When the relation is imperfect (not all points fall on a straight line):

Why are the points not on the line?

We draw the "best fit" line using what is called the "least-squares" criterion. Why squares?

See optional link on simultaneous equations for a closer look at the idea of least-squares.

Regression Line: Example

| Subject | Stat. Score (x) | GPA (y) |
|---|---|---|
| 1 | 110 | 1.0 |
| 2 | 112 | 1.6 |
| 3 | 118 | 1.2 |
| 4 | 119 | 2.1 |
| 5 | 122 | 2.6 |
| 6 | 125 | 1.8 |
| 7 | 127 | 2.6 |
| 8 | 130 | 2.0 |
| 9 | 132 | 3.2 |
| 10 | 134 | 2.6 |
| 11 | 136 | 3.0 |
| 12 | 138 | 3.6 |

[Figure: scatter plot of GPA (y axis, 1 to 4) against Statistics Score (x axis, 110 to 140).]

We wish to minimize $\sum (y - \hat{y})^2$.

The predicted value of y for a given value of x:

$$\hat{y} = b_y x + a_y$$

- $b_y$ = the slope minimizing the errors predicting y
- $a_y$ = the y-axis intercept minimizing the errors predicting y

$$b_y = \frac{COV_{xy}}{s_x^2} = \frac{\sum (x - \bar{x})(y - \bar{y}) / (n - 1)}{\sum (x - \bar{x})^2 / (n - 1)}$$

For our example: $b_y = 0.074$

What does this mean?
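As a rough check on the slope for the Statistics Score and GPA example, the following Python sketch computes the covariance divided by the variance of x. The helper names (`mean`, `covariance`, `variance`, `slope`) are hypothetical and chosen only for this illustration; the data are the twelve xy pairs from the example.

```python
# Statistics scores (x) and GPAs (y) for the 12 subjects in the example.
stat_scores = [110, 112, 118, 119, 122, 125, 127, 130, 132, 134, 136, 138]
gpas = [1.0, 1.6, 1.2, 2.1, 2.6, 1.8, 2.6, 2.0, 3.2, 2.6, 3.0, 3.6]


def mean(values):
    return sum(values) / len(values)


def covariance(xs, ys):
    """Sample covariance: sum of cross products divided by n - 1."""
    mean_x, mean_y = mean(xs), mean(ys)
    cross_products = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return cross_products / (len(xs) - 1)


def variance(xs):
    """Sample variance: sum of squared deviations divided by n - 1."""
    mean_x = mean(xs)
    return sum((x - mean_x) ** 2 for x in xs) / (len(xs) - 1)


def slope(xs, ys):
    """Least-squares slope for predicting y from x: COVxy / variance of x."""
    return covariance(xs, ys) / variance(xs)


b_y = slope(stat_scores, gpas)
print(f"slope b_y = {b_y:.3f}")
```

Because the (n - 1) terms cancel, the same slope results from dividing the sum of cross products by the sum of squared x deviations. Read as a rate, the slope is the predicted change in GPA for each one-point change in the statistics score.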
$$a_y = \frac{\sum y - b_y \sum x}{n} = \bar{y} - b_y \bar{x}$$

Our working example:

$$a_y = 2.275 - 0.074(125.25) = -7.006$$

(The value -7.006 is obtained with the unrounded slope, 0.0741.)

The regression line for our data:

$$\hat{y} = 0.074x - 7.006$$

Using the regression formula to predict, e.g., for x = 124:

$$\hat{y} = 0.074(124) - 7.006 = 2.17$$

Note: If the x value you are inserting is beyond the range of the values used to construct the formula, caution must be used.

Remember: To minimize the sum of the squared deviations about a point, the mean is best.

| GPA | $(y - \bar{y})^2$ |
|---|---|
| 1.0 | 1.69 |
| 1.6 | .49 |
| 1.2 | 1.21 |
| 2.1 | .04 |
| 2.6 | .09 |
| 1.8 | .25 |
| 2.6 | .09 |
| 2.0 | .09 |
| 3.2 | .81 |
| 2.6 | .09 |
| 3.0 | .49 |
| 3.6 | 1.69 |

$\sum y = 27.3$, $\bar{y} = 2.275 \approx 2.3$, $\sum (y - \bar{y})^2 = 7.03$

Note: Using our GPA and Statistics Score data,

$$s_y = \sqrt{\frac{\sum (y - \bar{y})^2}{n - 1}} = \sqrt{\frac{7.03}{11}} \approx .80$$

We could call this a type of "standard error" of y.

Using only the mean of y to predict y, all predicted y values would be the mean.

Using X, $s_{y \cdot x} = \ ?$

Which MODEL is superior? Why? Is there a reliable difference?

Standard Error of the Estimate: similar to a standard deviation

Where the relation is imperfect, there will be prediction error, whether one uses the mean or the regression line.

$$s_{y \cdot x} = \sqrt{\frac{\sum (y - \hat{y})^2}{n - 2}}$$

Transformed:

$$s_{y \cdot x} = s_y \sqrt{(1 - r^2)\frac{n - 1}{n - 2}}$$

What is r?

Residual Variance = $s_{y \cdot x}^2$

What might create residual variance?
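The two forms of the standard error of the estimate can be compared numerically on the GPA data. The sketch below is a minimal illustration under one stated assumption: r is taken to be the Pearson correlation between x and y, which the slides leave as an open question. All function names are hypothetical.

```python
import math

# Statistics scores (x) and GPAs (y) for the 12 subjects in the example.
stat_scores = [110, 112, 118, 119, 122, 125, 127, 130, 132, 134, 136, 138]
gpas = [1.0, 1.6, 1.2, 2.1, 2.6, 1.8, 2.6, 2.0, 3.2, 2.6, 3.0, 3.6]


def mean(values):
    return sum(values) / len(values)


def sums_of_squares(xs, ys):
    """Return (Sxx, Syy, Sxy): sums of squared deviations and cross products."""
    mean_x, mean_y = mean(xs), mean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxx, syy, sxy


def fit_line(xs, ys):
    """Least-squares slope and intercept for predicting y from x."""
    sxx, _, sxy = sums_of_squares(xs, ys)
    b = sxy / sxx
    a = mean(ys) - b * mean(xs)
    return b, a


def std_dev(ys):
    """Standard deviation of y: prediction error when using only the mean."""
    _, syy, _ = sums_of_squares(ys, ys)
    return math.sqrt(syy / (len(ys) - 1))


def std_error_of_estimate(xs, ys):
    """Direct form: sqrt of summed squared residuals over n - 2."""
    b, a = fit_line(xs, ys)
    ss_residual = sum((y - (b * x + a)) ** 2 for x, y in zip(xs, ys))
    return math.sqrt(ss_residual / (len(xs) - 2))


def std_error_from_r(xs, ys):
    """Transformed form, assuming r is the Pearson correlation."""
    n = len(xs)
    sxx, syy, sxy = sums_of_squares(xs, ys)
    r = sxy / math.sqrt(sxx * syy)
    return std_dev(ys) * math.sqrt((1 - r ** 2) * (n - 1) / (n - 2))


b_y, a_y = fit_line(stat_scores, gpas)
print(f"regression line: y_hat = {b_y:.3f} x + ({a_y:.3f})")
print(f"prediction for x = 124: {b_y * 124 + a_y:.2f}")
print(f"s_y   = {std_dev(gpas):.3f}")
print(f"s_y.x = {std_error_of_estimate(stat_scores, gpas):.3f} (direct)")
print(f"s_y.x = {std_error_from_r(stat_scores, gpas):.3f} (from r)")
```

Under that assumption the two forms agree, and the standard error of the estimate comes out smaller than the standard deviation of y, which is one way to compare the regression model with the mean-only model.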