A Comparison of some Iterative Methods in Scientific Computing Shawn Sickel


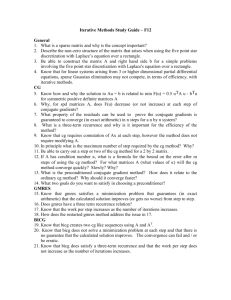

What is Gaussian Elimination?

• Gaussian Elimination (GE) is a method for solving linear systems that is taught in high school.

Original Linear System

After adding equations 1 and 2, the next step is to eliminate the variable “y”.

To eliminate “y”, line 3 was subtracted from line 2. Now “z” must be removed.

To remove “z”, the opposite of line 3 is added to line 4. This leaves 2*w = -2.

Backward Substitution

Once one variable is pinned down, backward substitution begins: the known values are plugged into the earlier equations, working backwards, until every variable is found.

Why is the Gaussian Elimination method considered outdated and inefficient?

• An operation-count equation tells us how many steps are required to solve a linear system with Gaussian Elimination.
• Each step creates a new matrix, so a whole new set of numbers is formed each time.
• If this method is used on a 10x10 matrix, 430 steps are required.

Gaussian Elimination is rarely practical for very large real applications. Why?

Gaussian Elimination:
• is too slow, and
• requires too much memory.

Real-life linear systems can have millions to billions of unknowns. Working through that many numbers with Gaussian Elimination is overwhelming even for the most powerful computers made today.

The goal of this research is to study some iterative methods and then compare them. They are:
• Jacobi Method
• Gauss-Seidel Method (GS)
• Conjugate Gradient Method (CG)
• BiConjugate Gradient Method (BiCG)
• BiConjugate Gradient Stabilized Method (BiCGSTAB)

Basic Iterative Methods

The Jacobi Method and the GS Method are considered basic iterative methods. For these methods, the spectral radius ρ(G) of the iteration matrix G must be < 1. That is, the eigenvalue of G with the largest magnitude must be smaller than 1 in magnitude; otherwise the iterations move away from the solution and never converge.

Alpha = 1

GS vs. Jacobi

Here, Alpha is set to 2.
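To make the two basic iterative methods concrete, here is a minimal sketch in plain Python. It is not taken from the slides: the function names (`jacobi_step`, `gauss_seidel_step`, `iterate`), the 3x3 test matrix, the right-hand side, and the stopping tolerance are all illustrative assumptions. The matrix is strictly diagonally dominant, a standard sufficient condition for both methods to converge.

```python
def jacobi_step(A, b, x):
    """One Jacobi sweep: every component is computed from the OLD vector x."""
    n = len(b)
    return [
        (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
        for i in range(n)
    ]


def gauss_seidel_step(A, b, x):
    """One Gauss-Seidel sweep: each component reuses values updated in this sweep."""
    n = len(b)
    x = list(x)  # copy, then overwrite entries in place as they are updated
    for i in range(n):
        s = sum(A[i][j] * x[j] for j in range(n) if j != i)
        x[i] = (b[i] - s) / A[i][i]
    return x


def iterate(step, A, b, tol=1e-10, max_iter=1000):
    """Repeat `step` from a zero start until successive iterates differ by < tol."""
    x = [0.0] * len(b)
    for k in range(1, max_iter + 1):
        x_new = step(A, b, x)
        if max(abs(u - v) for u, v in zip(x_new, x)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("did not converge within max_iter sweeps")


# Hypothetical test system (assumption): the exact solution is [1, 2, 3].
A = [[4.0, -1.0, 0.0],
     [-1.0, 4.0, -1.0],
     [0.0, -1.0, 4.0]]
b = [2.0, 4.0, 10.0]

x_jacobi, sweeps_jacobi = iterate(jacobi_step, A, b)
x_gs, sweeps_gs = iterate(gauss_seidel_step, A, b)
print("Jacobi sweeps:", sweeps_jacobi, "Gauss-Seidel sweeps:", sweeps_gs)
```

On this particular system Gauss-Seidel needs fewer sweeps than Jacobi to reach the same tolerance; the only difference between the two functions is whether a sweep reads the old vector or the partially updated one.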
The GS method converges faster than the Jacobi method because it uses the most recently updated values as soon as they are available. For many matrices that arise in practice, this gives the GS iteration matrix a smaller spectral radius than the Jacobi iteration matrix. Both methods require the spectral radius to be < 1, and the closer it is to 0, the faster the iterations converge.

Basic Iterative Methods have some Drawbacks

Basic iterative methods are extremely slow to converge when dealing with large matrices.

Krylov Subspace Methods

These methods search for the solution in the span of the vectors r, Ar, A^2 r, ... (where r is the initial residual), which is called the Krylov subspace.
• Conjugate Gradient Method (CG)
• BiConjugate Gradient Method (BiCG)
• BiConjugate Gradient Stabilized Method (BiCGSTAB)

Conditions and Properties of the Krylov Subspace Methods

• CG: This method was designed to solve systems with SPD (symmetric positive definite) matrices.
• BiCG: This method is similar to the CG method, but can also solve systems with non-SPD matrices. Not all real-life situations give perfect sets of data.
• BiCGSTAB: BiCG requires the A-Transpose, which is ~~~~ BiCG converges faster, but cannot converge at all without the

CG vs. GS

CG vs. BiCG

BiCGSTAB vs. BiCG

Conclusion

• Basic Iterative Methods
• Krylov Subspace Methods
• Advantages / Disadvantages of the separate algorithms
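As a concrete companion to the Krylov subspace methods summarized above, here is a minimal sketch of the textbook Conjugate Gradient iteration in plain Python. It is not the code used for the comparisons in this talk: the helper names (`matvec`, `dot`, `conjugate_gradient`), the small SPD test matrix, and the tolerance are illustrative assumptions. In exact arithmetic CG reaches the solution of an n x n SPD system in at most n iterations.

```python
def matvec(A, x):
    """Dense matrix-vector product A @ x for a list-of-rows matrix."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(u, v):
    """Euclidean inner product of two vectors."""
    return sum(a * c for a, c in zip(u, v))


def conjugate_gradient(A, b, tol=1e-10, max_iter=100):
    """Textbook CG for a symmetric positive definite matrix A, zero initial guess."""
    x = [0.0] * len(b)
    r = list(b)            # residual b - A x, with x = 0
    p = list(r)            # first search direction is the residual itself
    rs_old = dot(r, r)
    for k in range(1, max_iter + 1):
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                       # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]  # updated residual
        rs_new = dot(r, r)
        if rs_new ** 0.5 < tol:                           # residual norm small enough
            return x, k
        beta = rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]      # next A-conjugate direction
        rs_old = rs_new
    raise RuntimeError("did not converge within max_iter iterations")


# Hypothetical SPD test system (assumption): the exact solution is [1, 2, 3].
A_spd = [[4.0, -1.0, 0.0],
         [-1.0, 4.0, -1.0],
         [0.0, -1.0, 4.0]]
b_spd = [2.0, 4.0, 10.0]

x_cg, iters_cg = conjugate_gradient(A_spd, b_spd)
print("CG solution:", x_cg, "iterations:", iters_cg)
```

Each iteration needs only one matrix-vector product and a few vector updates, so the matrix is never modified or refactored, which is part of why Krylov methods suit very large systems.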