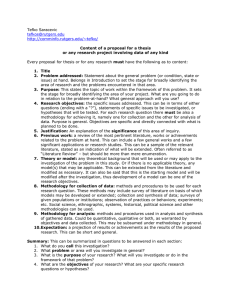

Lecture06 Search tactics.ppt


Search strategy & tactics
Governed by effectiveness & feedback
Tefko Saracevic
tefkos@rutgers.edu; http://comminfo.rutgers.edu/~tefko/

Central ideas
As a searcher you are striving toward doing effective searches
• Searches are all about being effective – finding what is needed, desired
• To measure effectiveness, the common measures of precision and recall are used
• Various tactics are used to affect effectiveness
• Applying tactics to get desired results is second nature to professional searchers
Knowing searching = also knowing tactics to reach toward desired effectiveness

ToC
1. Major concepts
2. Measuring effectiveness
3. Search tactics
4. Feedback types
can it be done?

1. Major concepts
Effectiveness, relevance

Some definitions
• Search statement (query):
  – set of search terms with logical connectors and attributes; file and system dependent
• Search strategy (big picture):
  – overall approach to searching of a question
    • selection of systems, files, search statements & tactics, sequence, output formats; cost, time aspects
• Search tactics (action choices):
  – choices & variations in search statements
    • terms, connectors, attributes

Some definitions (cont.)
• Move:
  – modification of search strategies or tactics aimed at improving the results
    • e.g. from searching for digital AND libraries to digital(w)libraries (Dialog) or “digital libraries” (Scopus)
• Cycle (particularly applicable to systems such as Dialog):
  – set of commands from start (begin) to viewing (type) results, or from a viewing to a viewing command

Search strategy – big picture
all kinds of actions from start to end
• The entire approach to a search – selection of
  – files and sources to use
  – approaches in proceeding to search & combining
    • search terms
    • operators to use
    • fields to search
  – formats for viewing results
  – alternative actions if search yields
    • too much
    • too little
  – problem-solving heuristics

Search tactics – specific actions
connected with a given search as it progresses
• A query – command line entered into a system in order to retrieve relevant information
  – terms, operators & attributes as allowed by a given system
  – vocabulary & syntax used in conjunction with connectors &/or limiters to search a system
• Again: how it is done depends on the system
  – for example, a search statement might be:
    • in Dialog: b 47; ss (garbanzo? or chickpeas) and (hum?us or humus)
    • in Scopus you can enter: (garbanzo? or chickpeas) and (hum?us or humus)
    • how would you do that in ?

Search performance is expressed in terms of:
• Effectiveness:
  – performance as to objectives
    • to what degree did a search accomplish what was desired?
    • how well was it done in terms of relevance?
• Efficiency:
  – performance as to costs
    • at what cost and/or effort, time?
Both are KEY concepts & criteria for selection of strategy, tactics & evaluation
• But here we concentrate on effectiveness

Effectiveness criteria
• Search tactics are chosen & changed following some criteria of accomplishment, such as:
  – none – no thought given; hard to imagine but happens sometimes
  – relevance (most often) – is it relevant to a need, task, problem?
  – magnitude (also very often) – is too much retrieved to start with?
  – output attributes – is it trustworthy? Authoritative? Understandable? …
  – topic – is it on the topic of the question?
• Tactics are chosen or altered interactively to match given criteria – feedback plays an important role
Knowing what choice of tactics may produce what results is key for a professional searcher

Relevance: key concept in IR & key criterion for assessing effectiveness
“Relevant: having significant and demonstrable bearing on the matter at hand.”
“Relevance: the ability (as of an information retrieval system) to retrieve material that satisfies the needs of the user.”
Merriam Webster (2005)
• Attribute/criterion reflecting effectiveness of exchange of information between people (users) & IR systems in communication contacts, based on valuation by people

Variation in names
• Relevance or relevant can be expressed in different terms
  – utility, pertinence, appropriate, significant, useful, germane, applicable, valuable …
• But the concept still remains relevance
That which we call relevance by any other word would still be relevance
Relevance is relevance is relevance is relevance
thank you Shakespeare and Gertrude Stein

Some attributes of relevance – in IR
  – user dependent – users are the final judges at the end
  – multidimensional or faceted – users assess relevance on a number of factors, not only topic, but also authority, novelty, source …
  – dynamic – users may change relevance assessments & criteria as the search progresses, as they learn, or as the problem is modified
  – not only binary – users assess an object not only as relevant/not relevant, but also on a continuum (scale); partially relevant included
  – intuitively well understood – nobody has to explain to users what it is

Consistency
Individual differences
  – consistency of assessment varies over time & between people to a (sometimes significant) degree
  – but (in)consistency is similar to that in other processes, e.g. indexing, classifying
• Subject expertise affects consistency of relevance judgments. Higher expertise results in higher consistency and stringency. Lower expertise results in lower consistency and more inclusion.
  – relevance is not fixed; individual differences may be large
• It is most helpful to discuss with a user what kind of documents they may assess as relevant and what their criteria are, and then try to incorporate that in searching & your own assessments

Two major types of relevance
  – leading to a dichotomy of systems- & users-view of relevance:
  – Systems or algorithmic relevance
    • the way a system or algorithm assesses relevance
    • relation between a query & objects in the file of a system as retrieved or failed to be retrieved by a given algorithm
  – User relevance
    • the way a user or user surrogate (searcher, specialist …) assesses relevance
    • relation between information need or problem at hand of a user and objects retrieved or out there in general
  – But topical relevance can be considered by both system & user
    • the degree to which topics or subjects of a query & topics or subjects of objects (documents) in a file or retrieved match
    • relation between topic in the query & topic covered by the retrieved objects, or objects in the file(s) of the system, or even in existence

User relevance can be further distinguished as to:
  – Cognitive relevance or pertinence:
    • possible changes in user’s cognitive state due to objects retrieved
    • relation between state of knowledge & cognitive information need of a user and the objects provided or in the file(s)
  – Motivational or affective relevance
    • matching & satisfying user’s intentions, purposes, rationales, emotions
    • relation between intents, goals & motivations of a user & objects retrieved by a system or in the file, or even in existence.
      Satisfaction
  – Situational relevance or utility:
    • value of given objects or information for user’s situation or changes in situation
    • relation between the task or problem-at-hand & the objects retrieved (or in the files). Relates to usefulness in decision-making, reduction of uncertainty ...

2. Measuring effectiveness
Precision and recall

How?
The basic way by which effectiveness is established in IR searching is to compare
• User assessment of relevance
• System assessment of relevance
where user assessment is the gold standard

Effectiveness measures
Two measures are used universally:
Precision:
  – of the stuff retrieved & given to the user, how much (what %) was relevant?
  – more formally: the probability that an object is relevant given that it is retrieved, or the ratio of relevant items retrieved to all items retrieved
Recall:
  – of the stuff that is relevant in the file, how much (what %) was actually retrieved?
  – more formally: the probability that an object is retrieved given that it is relevant, or the ratio of relevant items retrieved to all relevant items in a file

Calculation

| | Items judged RELEVANT | Items judged NOT RELEVANT |
|---|---|---|
| Items RETRIEVED | a = no. of items retrieved & judged relevant | b = no. of items retrieved & judged not relevant (junk) |
| Items NOT RETRIEVED | c = no. of items not retrieved & relevant (missed relevant) | d = no. of items not retrieved & not relevant (missed junk) |

$$\text{Precision} = \frac{a}{a+b} \qquad \text{Recall} = \frac{a}{a+c}$$

High precision = maximize a, minimize b
High recall = maximize a, minimize c

Examples of calculation
• If a system retrieved 16 documents and only 4 were assessed as relevant by a user, then precision is 4/16 = 25%
• If a system had 40 documents in the file that were relevant but managed to retrieve only 12 of them, then recall is 12/40 = 30%
• Precision is easy to establish, recall is not
  – the union of retrievals is used as a “trick” to establish relative recall
  – you do a number of searches or use a number of tactics or algorithms, then you take together all that was retrieved (union) and have that assessed; then you can calculate a relative recall of each search, tactic or algorithm with respect to the union & see which one provides better or worse relative recall

Interpretation: PRECISION
• Precision = percent of relevant stuff you have in your set of answers retrieved
  – or conversely percent of junk (false drops) in the answer set
  – high precision = most stuff relevant
  – low precision = a lot of junk
• Some users demand high precision
  – do not want to wade through much stuff
  – but it comes at a price: relevant stuff may be missed
• Tradeoff almost always: high precision = low recall
  – we will get to the tradeoff a bit later, but it is VERY important to consider

Interpretation: RECALL
• A file may have a lot of relevant stuff
• Recall = percent of that relevant stuff in the file that you retrieved in your answer set
  – conversely percent of stuff you missed
  – high recall = you missed little
  – low recall = you missed a lot
• Some users demand high recall (e.g. PhD students doing a dissertation; patent lawyers; researchers writing a proposal or article)
  – want to make sure that important stuff is not missed
  – but will have to pay the price of wading through a lot of junk
• Tradeoff almost always: high recall = low precision

3. Search tactics
Tradeoff between precision and recall
Using it for different tactics

Aim of search tactics
• Since there is no such thing as a perfect search:
  – the aim is to search in a way that will ensure a given, chosen or desired, level of effectiveness
• That means that we have to agree on
  – what do we mean by effectiveness in searching?
    • general agreement is that retrieval of relevant answers is the major criterion for effectiveness of searching
  – what measures do we use to express achievement of effectiveness in terms of relevance?
    • general agreement is that we use measures of precision and recall (or derivatives)
Thus many search tactics are geared toward achieving a certain level of precision or recall

Precision-recall trade-off
• USUALLY: precision & recall are inversely related
  – higher recall usually means lower precision & vice versa
(Figure: precision plotted against recall, each axis running from 0 to 100 %)

Precision-recall trade-off
• It is like in life, usually:
  – you get some, lose some; you can't have your cake and eat it too
• Usually, but not always (keep in mind these are probabilities)
  – when you have high precision, most stuff you got is relevant or on target, but you also missed other stuff (could be a lot) that is relevant – it was left behind
  – when you have high recall, you did not miss much, but you also got junk (could be a lot) – you have to wade through it
• There is a price to pay either way
  – but then a lot of users are perfectly satisfied with and are aiming at high precision
    • give me a few good things, that is all that I need

Effectiveness (Cleverdon’s) “laws”
High precision in retrieval is usually associated with low recall.
  High precision = low recall
High recall in retrieval is usually associated with low precision.
  High recall = low precision
You – with your user – have to decide if you are aiming toward high recall or high precision & you have to be aware of & explain the tradeoffs
You use different tactics for high recall from those for high precision
Originally formulated by Cyril Cleverdon, a UK librarian, in the 1960s, in the first IR tests done in Cranfield, UK, thus “Cranfield tests”

Search tactics
several ‘things’ or variables in a query can be selected & changed to affect effectiveness

| Variable | What variation possible? |
|---|---|
| 1. LOGIC – choice of connectors among terms | AND, OR, NOT, W … |
| 2. SCOPE – no. of terms linked (ANDs) | A AND B vs A AND B AND C |
| 3. EXHAUSTIVITY – for each concept, no. of related terms (OR connections) | A OR B vs. A OR B OR C |
| 4. TERM SPECIFICITY – for each concept, level in hierarchy | broader vs narrower terms |
| 5. SEARCHABLE FIELDS – choice for text terms & non-text attributes | e.g. titles only, limit as to years, various sources |
| 6. FILE OR SYSTEM SPECIFIC CAPABILITIES – using capabilities, options available | e.g. given fields, ranking, sorting, linking |

Actions and consequences
BUT: each variation has a consequence in output
if you do X then Y will happen

| Action | Output size | Recall | Precision |
|---|---|---|---|
| SCOPE – adding more ANDs | down | down | up |
| EXHAUSTIVITY – adding more ORs | up | up | down |
| USE OF NOTs – adding more NOTs | down | down | up |
| USING BROAD TERMS – low specificity | up | up | down |
| USING PHRASES – high specificity | down | down | up |

Tactics: what to do?
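As a rough illustration of the precision and recall ratios defined earlier and of the actions and consequences listed above, here is a minimal Python sketch. Everything in it is an assumption made for the example: the seven-document collection, the relevance judgments, and the helper names `search`, `precision` and `recall` are hypothetical and do not come from Dialog, Scopus or any other system named in the slides.

```python
# Toy collection (hypothetical): document id -> set of index terms.
DOCS = {
    1: {"chickpeas", "hummus", "recipe"},
    2: {"garbanzo", "hummus", "recipe"},
    3: {"chickpeas", "farming"},
    4: {"garbanzo", "salad", "recipe"},
    5: {"chickpeas", "soup", "recipe"},
    6: {"garbanzo", "prices"},
    7: {"garbanzo", "history"},
}

# Hypothetical user judgments: ids the user would assess as relevant.
RELEVANT = {1, 2, 4, 5}


def search(concepts):
    """Return ids of documents that match every concept (AND).

    Each concept is a set of terms; a document matches a concept if it
    carries at least one of those terms (OR within the concept).
    """
    return {
        doc_id
        for doc_id, terms in DOCS.items()
        if all(terms & concept for concept in concepts)
    }


def precision(retrieved, relevant):
    """a / (a + b): share of retrieved items that are relevant."""
    return len(retrieved & relevant) / len(retrieved) if retrieved else 0.0


def recall(retrieved, relevant):
    """a / (a + c): share of relevant items that were retrieved."""
    return len(retrieved & relevant) / len(relevant) if relevant else 0.0


QUERIES = {
    "chickpeas": [{"chickpeas"}],
    "chickpeas OR garbanzo": [{"chickpeas", "garbanzo"}],
    "(chickpeas OR garbanzo) AND hummus": [{"chickpeas", "garbanzo"}, {"hummus"}],
}

for label, concepts in QUERIES.items():
    hits = search(concepts)
    print(
        f"{label}: output={len(hits)} "
        f"precision={precision(hits, RELEVANT):.2f} "
        f"recall={recall(hits, RELEVANT):.2f}"
    )
```

On this toy data, adding the OR raises output from 3 to 7 items and recall from 0.50 to 1.00 while precision drops from 0.67 to 0.57; adding the AND then cuts output to 2 items, raising precision to 1.00 and lowering recall back to 0.50. Real collections will not always behave this neatly, since the "laws" describe what usually happens.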
• These “laws” lead to precision & recall devices
  – tactics to increase/decrease precision or recall

| To increase precision | To increase recall |
|---|---|
| SCOPE – add more ANDs – you restrict | EXHAUSTIVITY – add more ORs – you enlarge |
| NOTs – add more NOTs – you eliminate | BROAD TERMS – you broaden terms |
| PHRASES – you become more specific | USE MORE TRUNCATION – you use grammatical variants of terms |

Precision, recall devices
With experience, use of these devices will become second nature

| NARROWING (higher precision) | BROADENING (higher recall) |
|---|---|
| More ANDs | Fewer ANDs |
| Fewer ORs | More ORs |
| More NOTs | Fewer NOTs |
| Less free text | More free text |
| More controlled | Fewer controlled |
| Fewer synonyms | More synonyms |
| Narrower terms | Broader terms |
| More specific | Less specific |
| Less truncation | More truncation |
| More qualifiers | Fewer qualifiers |
| More limits | Fewer limits |
| Building blocks | Citation growing |

Other tactics
(but that gets us into the next unit on advanced searching)
• Citation growing:
  – find a relevant document
  – look for documents cited in it
  – look for documents citing it
  – repeat on newly found relevant documents
• Building blocks
  – find documents with term A
  – review
  – add term B & so on
• Using different feedbacks
  – a most important tool

4. Feedback
Types. Berry picking

Feedback in searching
• Extremely important!
• And constantly used, consciously or unconsciously
• Formally:
  – the process in which part of the output of a system is returned to its input in order to regulate its further output
• Simply put:
  – you search, find something that may be relevant, then look at it, then on the basis of AHA! change your next query or tactic to get better or more stuff

Feedback in searching …
• Any feedback implies loops
  – the completion of a process provides information for modification, if any, of the next process
  – information from output is used to change previous or create new input
• In searching:
  – some information taken from the output of a search is used to do something with the next query (search statement)
    • examine what you got to decide what to do next in searching
  – a basic tactic in searching
• Several feedback types are used in searching
  – each used for different decisions

Feedback types
• Content relevance feedback
  – judge relevance of items retrieved
  – make a decision on what to do next
    • switch files, change exhaustivity …
• Term relevance feedback
  – find relevant documents
  – examine what other terms are used in those documents
  – search using additional terms
    • also called query modification & in some systems done automatically
• Magnitude feedback
  – on the basis of size of output make tactical decisions
    • often the size is so big that documents are not examined, but the next search is done to limit size

Feedback types (cont.)
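Term relevance feedback, described above, can be sketched in a few lines of Python. This is only an illustrative assumption about how one might do it by hand or in a script: the function name `suggest_terms`, the toy documents, and the frequency-based ranking are hypothetical and are not the query-modification method of any particular system.

```python
from collections import Counter


def suggest_terms(query_terms, relevant_docs, k=2):
    """Suggest up to k new terms taken from documents judged relevant.

    Counts how many relevant documents use each term that is not already
    in the query, then ranks by count (ties broken alphabetically so the
    result is deterministic).
    """
    counts = Counter(
        term
        for doc_terms in relevant_docs
        for term in doc_terms
        if term not in query_terms
    )
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [term for term, _ in ranked[:k]]


# Hypothetical example: the searcher started with one term and judged
# two of the retrieved documents relevant.
query = {"chickpeas"}
judged_relevant = [
    {"chickpeas", "hummus", "recipe"},
    {"chickpeas", "soup", "recipe"},
]

extra = suggest_terms(query, judged_relevant, k=2)
print(extra)  # ['recipe', 'hummus']
```

Whether the suggested terms are then added with OR (to raise recall) or with AND (to raise precision) is the searcher's tactical choice, in line with the devices listed earlier.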
• Tactical review feedback
  – after a number of queries (search statements) in the same search, review tactics as to getting desired outputs
    • review terms, logic, limits …
  – change tactics accordingly
• Strategic review feedback
  – after a while (or after consultation with the user) review the “big” picture of what was searched and how
    • sources, terms, relevant documents, need satisfaction, changes in question, query …
  – do next searches accordingly
  – used in reiterative searching

But then
• There are other ways to look at searching where many of these things are combined
• Here is one way that looks at the whole search as a process of complex wandering
• Shifting exploration & feedback are implied

Bates’ Berry-picking model of searching
“…moving through many actions towards a general goal of satisfactory completion of research related to information need.”
Elaborated by Marcia Bates, UCLA
  – query is shifting (continually)
    • as search progresses queries are changing
    • different tactics are used
  – searcher (user) may move through a variety of sources
    • new files, resources may be used
  – think of your last serious search
    • isn’t this what you were doing?

Berry-picking …
  – closer to the real behavior of information searchers
  – new information may provide new ideas, new directions
    • feedback is used in various ways
  – question is not satisfied by a single set of answers, but by a series of selections & bits of information found along the way
    • results may vary & may have to be provided in appropriate ways & means
  – you go as if berry-picking in a field

A berry-picking evolving search (from the article)
“1) The nature of the query is an evolving one, rather than single and unchanging, and 2) the nature of the search process is such that it follows a berrypicking pattern, instead of leading to a single best retrieved set.”

Conclusions
search tactics have to be mastered
• Users are not concerned about searching but about finding
• Effective searching is a prerequisite for finding the right stuff
• Search tactics are critical for effective searching
• They need to be understood and followed
if you do X you can expect Y
in order to get to Y you have to know what X to do

Thank you
M. C. Escher