Software Testing

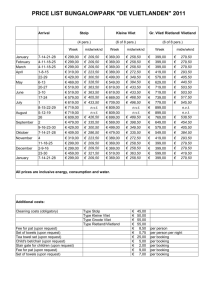

Nasty question

Suppose you are asked to lead the team that tests the software controlling a new ATM machine. Would you take that job? What if the contract says you will be charged with the maximum punishment if a patient dies because the software malfunctions?

SE, Testing, Hans van Vliet, ©2008

State of the art

- 30-85 errors are made per 1000 lines of source code.
- Extensively tested software contains 0.5-3 errors per 1000 lines of source code.
- Testing is postponed, and as a consequence errors are found late: the later an error is discovered, the more it costs to fix.
- Error distribution: 60% design, 40% implementation. 66% of the design errors are not discovered until the software has become operational.

Relative cost of error correction

[Figure: relative cost of error correction (axis ticks 1, 2, 5, 10, 20, 50, 100) by phase: RE (requirements engineering), design, code, test, operation.]

Lessons

- Many errors are made in the early phases.
- These errors are discovered late.
- Repairing those errors is costly.

How then to proceed?

- Exhaustive testing is usually not feasible.
- Random statistical testing does not work either if you want to find errors.
- Therefore, we look for systematic ways to proceed during testing.

Classification of testing techniques

Classification based on the criterion used to measure the adequacy of a set of test cases:

- coverage-based testing
- fault-based testing
- error-based testing

Classification based on the source of information used to derive test cases:

- black-box testing (functional, specification-based)
- white-box testing (structural, program-based)

Some preliminary questions

- What exactly is an error?
- What does the testing process look like?
- When is test technique A superior to test technique B?
- What do we want to achieve during testing?
- When to stop testing?
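As a rough worked example of the error-density figures quoted earlier (30-85 errors made and 0.5-3 errors remaining per 1000 lines), the sketch below scales them to a program of a given size. The function name `expected_errors` and the 50,000-line program are assumptions made for illustration; they do not come from the slides.

```python
def expected_errors(lines_of_code: int, low_per_kloc: float, high_per_kloc: float) -> tuple[float, float]:
    """Scale a per-1000-lines error range to a program of the given size."""
    kloc = lines_of_code / 1000
    return (kloc * low_per_kloc, kloc * high_per_kloc)


# Hypothetical program size, chosen only for illustration.
size = 50_000

# Errors made during development: 30-85 per 1000 lines.
made = expected_errors(size, 30, 85)

# Errors left after extensive testing: 0.5-3 per 1000 lines.
left = expected_errors(size, 0.5, 3)

print(made)  # (1500.0, 4250.0)
print(left)  # (25.0, 150.0)
```

Even at the optimistic end of the range, a program of this size would still ship with a few dozen errors, which is one reason the question of when to stop testing has no easy answer.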
Error, fault, failure

- An error is a human activity resulting in software containing a fault.
- A fault is the indicator of an error.
- A fault may result in a failure.

When exactly is a failure?

- Failure is a relative notion: e.g. a failure w.r.t. the specification document.
- Verification: evaluate a product to see whether it satisfies the conditions specified at the start: Have we built the system right?
- Validation: evaluate a product to see whether it does what we think it should do: Have we built the right system?

Point to ponder: Maiden flight of Ariane 5

What is our goal during testing?

- Objective 1: find as many faults as possible.
- Objective 2: make you feel confident that the software works OK.

Test documentation (IEEE 829)

- Test plan
- Test design specification
- Test case specification
- Test procedure specification
- Test item transmittal report
- Test log
- Test incident report
- Test summary report

Software Testing

[Figure labels: Methods (black-box methods, white-box methods), Strategies]

Test Case Design Strategies

- Black-box or behavioral testing: knowing the specified function a product is to perform, tests demonstrate correct operation based solely on its specification, without regard for its internal logic.
- White-box or glass-box testing: knowing the internal workings of a product, tests are performed to check the workings of all independent logic paths.

White-Box Testing

... our goal is to ensure that all statements and conditions have been executed at least once ...

Why Cover?
- Logic errors and incorrect assumptions are inversely proportional to a path's execution probability.
- We often believe that a path is not likely to be executed; in fact, reality is often counterintuitive.
- Typographical errors are random; it is likely that untested paths will contain some.

Basis Path Testing

White-box technique usually based on the program flow graph.

[Figure: program flow graph with nodes 1 to 8]

First, we compute the cyclomatic complexity V(G), the number of enclosed areas + 1. In this case, V(G) = 4.

Next, we derive the independent paths. Since V(G) = 4, there are four paths:

- Path 1: 1,2,3,6,7,8
- Path 2: 1,2,3,5,7,8
- Path 3: 1,2,4,7,8
- Path 4: 1,2,4,7,2,4,...7,8

Finally, we derive test cases to exercise these paths.

Basis Path Testing Notes

- You don't need a flow chart, but the picture will help when you trace program paths.
- Count each simple logical test; compound tests count as 2 or more.
- Basis path testing should be applied to critical modules.

Control Structure Testing

White-box techniques focusing on the control structures present in the software.

- Condition testing (e.g. branch testing) focuses on testing each decision statement in a software module; it is important to ensure coverage of all logical combinations of data that may be processed by the module (a truth table may be helpful).
- Data flow testing selects test paths according to the locations of variable definitions and uses in the program (e.g. definition-use chains).
- Loop testing focuses on the validity of the program loop constructs (i.e.
simple loops, concatenated loops, nested loops, unstructured loops) and involves checking that loops start and stop when they are supposed to (unstructured loops should be redesigned whenever possible).

Loop Testing

[Figure: simple loop, nested loops, concatenated loops, unstructured loops]

Graph-based Testing Methods

- Black-box methods based on the nature of the relationships (links) among the program objects (nodes); test cases are designed to traverse the entire graph.
- Transaction flow testing: nodes represent steps in some transaction, and links represent logical connections between steps that need to be validated.

Equivalence Partitioning

- Black-box technique that divides the input domain into classes of data from which test cases can be derived.
- An ideal test case uncovers a class of errors that might otherwise require many arbitrary test cases to be executed before a general error is observed.

Boundary Value Analysis

Black-box technique that focuses on the boundaries of the input domain rather than its center.

BVA guidelines:

1. If an input condition specifies a range bounded by values a and b, test cases should include a and b, and values just above and just below a and b.
2. If an input condition specifies a number of values, test cases should exercise the minimum and maximum numbers, as well as values just above and just below the minimum and maximum.
3. Apply guidelines 1 and 2 to output conditions; test cases should be designed to produce the minimum and maximum output reports.
4. If internal program data structures have boundaries (e.g.
size limitations), be certain to test those boundaries.

Comparison Testing

- Black-box testing for safety-critical systems in which independently developed implementations of redundant systems are tested for conformance to specifications.
- Often equivalence class partitioning is used to develop a common set of test cases for each implementation.

Summary

- Do test as early as possible.
- Testing is a continuous process.
- Design with testability in mind.
- Test activities must be carefully planned, controlled and documented.
- No single reliability model performs best consistently.
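Boundary value analysis (guideline 1) and comparison testing can be combined in a small sketch: boundary inputs are derived for a range, then fed to independently written implementations whose answers are compared. Everything named here is an assumption made for illustration: the specification ("accept an integer amount from 20 to 500 inclusive") and the functions `boundary_values`, `accept_v1`, `accept_v2`, `accept_v3` and `compare` do not come from the slides.

```python
def boundary_values(a: int, b: int) -> list[int]:
    """BVA guideline 1: a and b, plus values just below and just above each."""
    return sorted({a - 1, a, a + 1, b - 1, b, b + 1})


# Hypothetical spec: accept an integer amount in the range 20..500 inclusive.
def accept_v1(amount: int) -> bool:
    return 20 <= amount <= 500


def accept_v2(amount: int) -> bool:
    # Independently written version of the same spec.
    return amount in range(20, 501)


def accept_v3(amount: int) -> bool:
    # Deliberately faulty version: off-by-one at the lower bound.
    return 20 < amount <= 500


def compare(implementations, inputs):
    """Return (input, results) pairs on which the implementations disagree."""
    disagreements = []
    for value in inputs:
        results = [impl(value) for impl in implementations]
        if len(set(results)) > 1:
            disagreements.append((value, results))
    return disagreements


cases = boundary_values(20, 500)
print(cases)                                   # [19, 20, 21, 499, 500, 501]
print(compare([accept_v1, accept_v2], cases))  # []
print(compare([accept_v1, accept_v3], cases))  # [(20, [True, False])]
```

The off-by-one fault in `accept_v3` shows up only at the boundary value 20. A test set drawn from the middle of the range would not reveal it. Agreement between implementations does not prove correctness, since both may share the same misreading of the specification.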