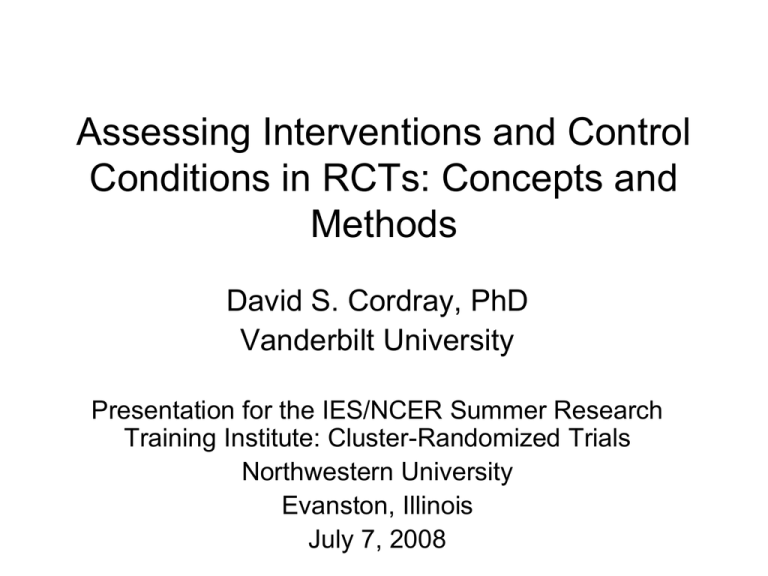

Assessing Interventions and Control Conditions in RCTs: Concepts and Methods


David S. Cordray, PhD
Vanderbilt University

Presentation for the IES/NCER Summer Research Training Institute: Cluster-Randomized Trials
Northwestern University, Evanston, Illinois
July 7, 2008

Overview
• Fidelity of Intervention Implementation: Definitions and distinctions
• Conceptual foundation for assessing fidelity in RCTs, a special case
• Model-based assessment of implementation fidelity
– Models of change
– “Logic model”
– Program model
– Research model
• Indexing Fidelity
• Methods of Assessment
– Sample-based fidelity assessment
– Regression-based fidelity assessment
• Questions and discussion

Intervention Fidelity: Definitions, Distinctions, and Some Examples

Dimensions of Intervention Fidelity
• Little consensus on what is meant by the term “intervention fidelity”.
• But Dane & Schneider (1998) identify 5 aspects:
– Adherence/compliance: program components are delivered/used/received, as prescribed;
– Exposure: amount of program content delivered/received by participants;
– Quality of the delivery: theory-based ideal in terms of processes and content;
– Participant responsiveness: engagement of the participants; and
– Program differentiation: unique features of the intervention are distinguishable from other programs (including the counterfactual)

Distinguishing Implementation Assessment from Implementation Fidelity Assessment
• Two models of intervention implementation, based on:
– A purely descriptive model, answering the question “What transpired as the intervention was put in place (implemented)?”
– An a priori intervention model, with explicit expectations about implementation of core program components.
• Fidelity is the extent to which the realized intervention (tTx) is “faithful” to the pre-stated intervention model (TTx)
• Fidelity = TTx – tTx
• We emphasize this model, but both are important

Some Examples
• The following examples are from an 8-year, NSF-supported project involving biomedical engineering education at Vanderbilt, Northwestern, Texas, and Harvard/MIT (VaNTH; Thomas Harris, MD, PhD, Director).
• The goal was to change the curriculum to incorporate principles of “How People Learn” (Bransford et al. and the National Academy of Sciences, 1999).
• We’ll start with a descriptive question, then move to model-based examples.

Descriptive Assessment: Expectations about Organizational Change
[Figure 6: Implementation Cohort Design (VaNTH). Student cohorts A through G, by class standing (freshman through senior), across project Years 1 to 8, with increasing “infiltration” of HPL in the full curriculum. Yields a “strong” quasi-experimental design. From: Cordray, Pion & Harris, 2008]

Macro-Implementation
[Figure 8: Proportion of BME Courses Offered Using HPL-Inspired Material. Y-axis: proportion of BME courses (0 to 1); x-axis: academic year (2000-2001 to 2005-2006); series: Vanderbilt, Northwestern, Texas. From: Cordray, Pion & Harris, 2008]

Changes in Learning Orientation
[Figure 7: Predicted Pattern of Change for the Dose-Response Cohort Effect on Intrinsic Motivation. Y-axis: intrinsic motivation; x-axis: class standing (freshman to senior); one series per cohort.]
[Figure 9: Actual Pattern of Change for the Cohort Effect on Intrinsic Motivation: Vanderbilt University. Y-axis: intrinsic motivation; x-axis: class standing (freshman to senior); one series per cohort. From: Cordray, Pion & Harris, 2008]

Model-Based Fidelity Assessment: What to Measure?
• Adherence to the intervention model:
– (1) Essential or core components (activities, processes);
– (2) Necessary, but not unique to the theory/model, activities, processes and structures (supporting the essential components of T); and
– (3) Ordinary features of the setting (shared with the counterfactual groups (C))
• Essential/core and necessary components are priority parts of fidelity assessment.

An Example of Core Components: Bransford’s HPL Model of Learning and Instruction
• John Bransford et al. (1999) postulate that a strong learning environment entails a combination of:
– Knowledge-centered;
– Learner-centered;
– Assessment-centered; and
– Community-centered components.
• Alene Harris developed an observation system (the VOS) that registered novel (components above) and traditional pedagogy in classes.
• The next slide focuses on the prevalence of Bransford’s recommended pedagogy.

Challenge-based Instruction in HPL-based Intervention Courses: The VaNTH Observation System (VOS)
[Figure: percentage of course time using challenge-based instructional strategies (0 to 35) in treatment courses, Years 2 to 4. Adapted from Cox & Cordray, in press]

Challenge-based Instruction in “Treatment” and Control Courses: The VaNTH Observation System (VOS)
[Figure: percentage of course time using challenge-based instructional strategies (0 to 35) in control and treatment courses, Years 2 to 4. Adapted from Cox & Cordray, in press]

Student-based Ratings of HPL Instruction in HPL and non-HPL Courses
We also examined the same question from the students’ point of view through surveys (n=1441):

| Scale | BME Program | HPL Courses: Mean | HPL Courses: SD | HPL Courses: N (Courses) | Non-HPL Courses: Mean | Non-HPL Courses: SD | Non-HPL Courses: N (Courses) | Effect Size |
|---|---|---|---|---|---|---|---|---|
| Knowledge, Learner, Assessment (KLA) | VU | 58.2 | 8.74 | 34 | 53.0 | 7.96 | 16 | 0.60 |
| Knowledge, Learner, Assessment (KLA) | NU | 51.1 | 8.28 | 17 | 41.0 | 12.11 | 22 | 0.93 |
| Knowledge, Learner, Assessment (KLA) | UT | 52.7 | 2.51 | 2 | 44.9 | 13.00 | 10 | 0.69 |
| Community | VU | 13.6 | 2.82 | 34 | 14.0 | 2.90 | 16 | -0.15 |
| Community | NU | 13.9 | 4.58 | 17 | 10.6 | 3.93 | 22 | 0.80 |
| Community | UT | 17.3 | 4.57 | 2 | 9.9 | 5.50 | 10 | 1.40 |

From: Cordray, Pion & Harris, 2008

Implications
• Descriptive assessments involve:
– Expectations
– Multiple data sources
– Can assist in explaining outcomes
• Model-based assessments involve:
– Benchmarks for success (e.g., the optimal fraction of time devoted to HPL-based instruction)
– With comparative evidence, fidelity can be assessed even when there is no known benchmark (e.g., 10 Commandments)
– In practice, interventions can be a mixture of components with strong, weak or no benchmarks
• Control conditions can include core intervention components due to:
– Contamination
– Business as usual (BAU) contains shared components, different levels
– Similar theories, models of action
• To index fidelity, we need to measure, at a minimum, intervention components within the control condition.

Conceptual Foundations for Fidelity Assessment within Cluster Randomized Controlled Trials

Linking Intervention Fidelity Assessment to Contemporary Models of Causality
• Rubin’s Causal Model:
– True causal effect of X is (YiTx – YiC)
– RCT methodology is the best approximation to the true effect
• Fidelity assessment within RCT-based causal analysis entails examining the difference between causal components in the intervention and counterfactual condition.
• Differencing causal conditions can be characterized as “achieved relative strength” of the contrast.
– Achieved Relative Strength (ARS) = tTx – tC
– ARS is a default index of fidelity

Infidelity and Relevant Threats to Validity
• Statistical Conclusion Validity
– Unreliability of Treatment Implementation (TTx – tTx): Variations across participants in the delivery/receipt of the causal variable (e.g., treatment). Increases error and reduces the size of the effect; decreases chances of detecting covariation.
• Construct Validity (cause): [(TTx – tTx) – (TC – tC)]
– Forms of Contamination:
– Compensatory Rivalry: Members of the control condition attempt to out-perform the participants in the intervention condition (the classic example is the “John Henry Effect”).
– Treatment Diffusion: The essential elements of the treatment group are found in the other conditions (to varying degrees).
• External Validity: generalization is about (tTx – tC)
– Variation across settings, cohort-by-treatment interactions

[Figure: Treatment Strength and Outcome. The treatment strength axis (.00 to .45) marks TTx, tTx, tC and TC, with gaps between planned and realized strength labeled “Infidelity”. Expected Relative Strength = .25; Achieved Relative Strength = .15. The outcome axis (50 to 100) marks Yt = 85 and Yc = 70, so (85) – (70) = 15; with a pooled sd of 30, d = (Yt – Yc) / sd pooled = 0.50.]

In Practice….
• Identify core components in both groups
– e.g., via a Model of Change
• Establish benchmarks for TTx and TC
• Measure core components to derive tTx and tC
– e.g., via a “Logic model” based on Model of Change
• Research methods
– With multiple components and multiple methods of assessment, achieved relative strength needs to be:
    • Standardized indices of fidelity (Absolute, Average, Binary)
    • Converted to Achieved Relative Strength, and
    • Combined across multiple indicators, multiple components, and multiple levels (HLM-wise)

Indexing Fidelity
• Absolute
– Compare observed fidelity (tTx) to absolute or maximum level of fidelity (TTx)
• Average
– Mean levels of observed fidelity (tTx and tC)
• Binary
– Yes/No treatment receipt based on fidelity scores (both groups)
– Requires selection of cut-off value

Indexing Fidelity as Achieved Relative Strength
Intervention Strength = Treatment – Control

Achieved Relative Strength (ARS) Index:

$$\text{ARS Index} = \frac{t_{Tx} - t_{C}}{S_T}$$

• Standardized difference in fidelity index across Tx and C
• Based on Hedges’ g (Hedges, 2007)
• Corrected for clustering in the classroom

Average ARS Index

$$g = \left(\frac{\bar{X}_1 - \bar{X}_2}{S_T}\right)\left(1 - \frac{3}{4(n_{Tx} + n_C) - 9}\right)\sqrt{1 - \frac{2(n - 1)\rho}{N - 2}}$$

The three factors are, in order, the group difference, the sample size adjustment, and the clustering adjustment.

Where:
$\bar{X}_1$ = mean for group 1 (tTx)
$\bar{X}_2$ = mean for group 2 (tC)
$S_T$ = pooled within-groups standard deviation
$n_{Tx}$ = treatment sample size
$n_C$ = control sample size
$n$ = average cluster size
$\rho$ = intra-class correlation (ICC)
$N$ = total sample size
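The Average ARS Index above can be sketched in a few lines of Python. This is a minimal illustration, not the presenter's code: the function name `ars_index`, its parameter names, and the input values in the usage example are hypothetical, and the sketch assumes that N is simply the sum of the treatment and control sample sizes.

```python
import math


def ars_index(mean_tx, mean_c, sd_pooled, n_tx, n_c, avg_cluster_size, icc):
    """Average ARS index: standardized Tx - C difference in fidelity,
    with a small-sample adjustment and a clustering adjustment."""
    if sd_pooled <= 0:
        raise ValueError("sd_pooled must be positive")
    # Assumption (not stated on the slide): N = n_tx + n_c.
    total_n = n_tx + n_c
    if total_n <= 2:
        raise ValueError("total sample size must exceed 2")

    # Group difference: (mean tTx - mean tC) / pooled within-groups SD
    group_difference = (mean_tx - mean_c) / sd_pooled
    # Sample size adjustment: 1 - 3 / (4(nTx + nC) - 9)
    sample_size_adj = 1 - 3 / (4 * (n_tx + n_c) - 9)
    # Clustering adjustment: sqrt(1 - 2(n - 1) * ICC / (N - 2))
    clustering_adj = math.sqrt(
        1 - (2 * (avg_cluster_size - 1) * icc) / (total_n - 2)
    )
    return group_difference * sample_size_adj * clustering_adj


if __name__ == "__main__":
    # Hypothetical inputs: mean fidelity of .30 (Tx) and .15 (C), pooled SD .30,
    # 50 participants per arm, average cluster size 10, ICC .20.
    print(round(ars_index(0.30, 0.15, 0.30, 50, 50, 10, 0.20), 3))
```

With an ICC of zero the clustering term drops out and the index reduces to the small-sample-corrected standardized difference; a positive ICC shrinks the index.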
Example: The Measuring Academic Progress (MAP) RCT
• The Northwest Evaluation Association (NWEA) developed the Measures of Academic Progress (MAP) program to enhance student achievement
• Used in 2000+ school districts, 17,500 schools
• No evidence of efficacy or effectiveness
• The upcoming example presents heuristics for translating conceptual variables into operational form.

MAP’s Simple Model of Change
[Diagram with four linked components: Professional Development, Feedback, Differentiated Instruction, Achievement]

Conceptual Model for the Measuring Academic Progress (MAP) Program

Operational Intervention Model: MAP
[Figure: academic-year schedule of MAP activities from August to May (fall and spring semesters), showing professional development sessions PD1 to PD4, Data Sys, Use Data, Change Diff Instr, State Testing, and the Full Implementation Interval]

Final RCT Design: 2-Year Wait Control

Translating Model of Change into Activities: the “Logic Model”
From: W.K. Kellogg Foundation, 2004

Moving from Logic Model Components to Measurement
[Diagram: The MAP Model. For the components Professional Development, Feedback, Differentiated Instruction and Achievement, the diagram lists resources and activities (four training sessions, on-line resources, 3 Computer Adaptive Testing, DesCarte system, grouping of students, continuous assessment) and outcomes and measures (attendance, knowledge acquisition, testing completed, access DesCarte, changes in pedagogy, state tests, MAP assessments)]

Fidelity Assessment Plan for the MAP Program

Measuring Resources, Activities and Outputs
• Observations
– Structured
– Unstructured
• Interviews
– Structured
– Unstructured
• Surveys
• Existing scales/instruments
• Teacher Logs
• Administrative Records

Sampling Strategies
• Census
• Sampling
– Probabilistic
    • Persons (units)
    • Institutions
    • Time
– Non-probability
    • Modal instance
    • Heterogeneity
    • Key events

Key Points and Future Issues
• Identifying and measuring, at a minimum, should include model-based core and necessary components;
• Collaborations among researchers,
developers and implementers are essential for specifying:
– Intervention models;
– Core and essential components;
– Benchmarks for TTx (e.g., an educationally meaningful dose; what level of X is needed to instigate change); and
– Tolerable adaptation

Points and Issues
• Fidelity assessment serves two roles:
– Average causal difference between conditions; and
– Using fidelity measures to assess the effects of variation in implementation on outcomes.
• Should minimize “infidelity” and weak ARS:
– Pre-experimental assessment of TTx in the counterfactual condition… Is TTx > TC?
– Build operational models with positive implementation drivers
• Post-experimental (re)specification of the intervention: For example …..

Intervention and Control Components
[Figure: bar charts (scale 0 to 10) of planned and observed levels for the treatment (T Planned, T Observed) and control (C Planned, C Observed) conditions on three components: PD = Professional Development, Asmt = Formative Assessment, Diff Inst = Differentiated Instruction. Panel labels: “Infidelity” and “Augmentation of Control”. A further panel compares the difference in theory (Dif in Theory) with the difference as observed (Dif as Obs) for each component.]

Questions and Discussion

Small Group Projects

Overview
• Logistics:
– Rationale for the group project
– Group assignments
– Resources
    • ExpERT (Experimental Education Research Training) Fellows
• Parameters for the group project
• Small group discussions

Rationale for the Project
• Rationale for the group projects:
– Purpose of this training is to enhance skills in planning, executing and reporting cluster RCTs.
• Various components of RCTs are, by necessity, presented serially.
• The ultimate design for an RCT is the product of:
– Tailoring of design, measurement, and analytic strategies to a given problem.
– Successive iterations as we attempt to optimize all features of the design.
• The project will provide a chance to engage in these practices, with guidance from your colleagues.

About Group Assignments….
• We are assuming that RCTs need to be grounded in specific topical areas.
• There is a diversity of topical interests represented.
• The group assignments may not be optimal.
• To manage the guidance and reporting functions, we need to have a small number of groups.

Resources
• ExpERT Fellows:
– Laura Williams: Quantitative Methods and Evaluation
– Chuck Munter: Teaching and Learning
– David Stuit: Leadership and Policy

Parameters of the Proposal
• IES goal is to support research that contributes to the solution of education problems.
• RFA IES-NCER-2008-1 provides extensive information about the proposal application and review process.
• Proposals are reviewed in 4 areas:
– Significance
– Research Plan
– Personnel
– Resources
• For our purposes, we’ll focus on Significance and the Research Plan.

Significance

Research Plan

Awards/Duration

Group Project Report
• Each group will present its proposal on Thursday (60 minutes each)
– 45 minutes for the proposal
– 15 minutes for discussion
• Ideally, each report will contain:
– Problem statement, intervention description, rationale for why it should work (10-15 minutes)
– Overview of the research plan
    • Samples
    • Groups and Assignment
    • Power
    • Fidelity Assessment
    • Outcomes
    • Impact Analysis Plan
• Use tables, figures and bullet points in your presentation

Expectations
• You will produce a rough plan
– Some details will be guesses
• The planning process is often iterative, with the need to revisit earlier steps and specifications.
• Flexibility helps….

Initial Group Interactions
• Meet with your assigned group (45 minutes) to assess “common ground”
• Group discussion of “common issues”