OpenFlow Switch Limitations

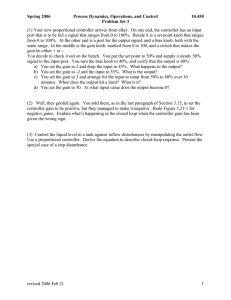

Background: Current Applications
• Traffic engineering applications (performance)
  – Fine-grained rules and short time scales
  – Coarse-grained rules and long time scales
• Middlebox provisioning (performance + security)
  – Fine-grained rules and long time scales
• Network services
  – Load balancer: fine-grained rules, short time scales
  – Firewall: fine-grained rules, long time scales
• Cloud services
  – Fine-grained rules, long time scales

Background: Switch Design
[Diagram: Network Controller, 13 Mbps link, Switch CPU+Mem, 35 Mbps link, data plane with Hash Table and TCAM (OpenFlow) at 250 Gbps]

Background: Flow Table Entries
• OpenFlow rules match on 14 fields
  – Usually stored in TCAM (TCAM is much smaller than a normal switch's tables)
  – Generally 1K-10K entries
• Normal switches
  – 100K-1000K entries
  – Only match on 1-2 fields

Background: Network Events
• Packet_In (flow-table miss: the packet matches no rule)
  – Asynchronous, from switch to controller
• Flow_mod (insert flow table entries)
  – Asynchronous, from controller to switch
• Flow_timeout (a flow was removed due to a timeout)
  – Asynchronous, from switch to controller
• Get flow statistics (information about current flows)
  – Synchronous between switch and controller
  – The controller sends a request, the switch replies

Background: Switch Design
Packet arrives: From: Theo, To: Bruce
1. Check the flow table; if there is no match, inform the CPU
2. The CPU creates a packet-in event and sends it to the controller
3. The controller runs code to process the event

Background: Switch Design
4. The controller creates a flow rule and sends it in a flow_mod message
5. The switch CPU processes the flow_mod and inserts the rule into the TCAM
Installed rule: From: theo, To: bruce, send on port 1; Timeout: 10 secs, count: 0
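To make the miss, packet-in, flow_mod, install cycle concrete, here is a minimal Python sketch of a reactive switch. It is a simplified model, not OpenFlow itself: rules match only on a (source, destination) pair, the controller is a local function rather than a remote process, and the timeout is treated as an idle timeout. The names `ReactiveSwitch`, `Rule`, and `receive` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Simplification: a rule matches only on (src, dst); real OpenFlow rules match many fields.
FlowKey = Tuple[str, str]


@dataclass
class Rule:
    out_port: int
    timeout: float          # seconds; treated here as an idle timeout (assumption)
    count: int = 0          # packets that hit this rule in the table
    last_used: float = 0.0


class ReactiveSwitch:
    """Hypothetical model of the miss -> packet_in -> flow_mod -> hit cycle."""

    def __init__(self, controller: Callable[[FlowKey], Rule]) -> None:
        self.table: Dict[FlowKey, Rule] = {}
        self.controller = controller    # stands in for the remote controller
        self.packet_ins = 0             # how often the slow path was taken

    def receive(self, src: str, dst: str, now: float) -> int:
        key = (src, dst)
        rule = self.table.get(key)
        # Flow_timeout: remove a rule that has been idle longer than its timeout.
        if rule is not None and now - rule.last_used > rule.timeout:
            del self.table[key]
            rule = None
        if rule is None:
            # Steps 1-3: table miss, the CPU raises packet_in, the controller runs its logic.
            self.packet_ins += 1
            rule = self.controller(key)
            # Steps 4-5: the flow_mod arrives and the rule is installed with count 0.
            rule.last_used = now
            self.table[key] = rule
            return rule.out_port
        # Table hit: forward the packet and update the count.
        rule.count += 1
        rule.last_used = now
        return rule.out_port


# Usage: a controller that sends every new flow out of port 1 with a 10 s timeout.
sw = ReactiveSwitch(lambda key: Rule(out_port=1, timeout=10.0))
sw.receive("theo", "bruce", now=0.0)   # miss: packet_in, rule installed with count 0
sw.receive("theo", "bruce", now=1.0)   # hit: count becomes 1
sw.receive("theo", "john", now=2.0)    # miss again: a new flow needs the controller
```

Every distinct (src, dst) pair pays the controller round trip once, which is why per-flow exact-match rules put so much load on the switch CPU and the controller.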
Background: Switch Design
Packet arrives: From: Theo, To: Bruce
Rule: From: theo, To: bruce, send on port 1; Timeout: 10 secs, count: 1
1. Check the flow table
2. Find the matching rule
3. Forward the packet
4. Update the count

Background: Switch Design
Packet arrives: From: Theo, To: John
Rule: From: theo, To: bruce, send on port 1; Timeout: 10 secs, count: 1
1. Check the flow table
2. No matching rule … now we must talk to the controller

Background: Switch Design
Packets arrive: From: Theo, To: John, then From: Theo, To: Cathy
Wildcard rule: From: theo, To: ***, send on port 1; Timeout: 10 secs, count: 1
1. Check the flow table
2. Find the matching rule (both packets match the wildcard rule)
3. Forward the packet
4. Update the count

Background: Switch Design
• Problem with wildcards
  – Too general
  – Can't find details of individual flows
  – Hard to do anything fine-grained
Wildcard rule: From: theo, To: ***, send on port 1; Timeout: 10 secs, count: 1

Background: Switch Design
• Doing fine-grained things
• Think Hedera
  – Find all elephant flows
  – Put elephant flows on a different path
• How to do this?
  – The controller sends a get-stats request
  – The switch responds with all stats
  – The controller goes through each entry in the reply
  – The controller installs special paths
Rules: From: theo, To: bruce, send on port 1; Timeout: 1 sec, count: 1K
       From: theo, To: john, send on port 1; Timeout: 10 secs, count: 1
After rerouting, the heavy flow's rule becomes: From: theo, To: bruce, send on port 3
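The Hedera-style procedure above (poll, scan every entry, reroute the big flows) can be sketched as two controller-side Python functions. The fixed packet-count threshold and the "fewest elephants so far" port choice are assumptions for illustration; Hedera's own detection and placement logic is more involved. `find_elephants` and `reroute` are hypothetical names.

```python
from typing import Dict, List, Tuple

FlowKey = Tuple[str, str]


def find_elephants(stats: Dict[FlowKey, int], threshold: int) -> List[FlowKey]:
    """Scan every entry of a get-stats reply and keep the heavy flows."""
    return [key for key, count in stats.items() if count >= threshold]


def reroute(ports: Dict[FlowKey, int], elephants: List[FlowKey],
            alt_ports: List[int]) -> Dict[FlowKey, int]:
    """Assign each elephant to the alternative port with the fewest elephants so far."""
    new_ports = dict(ports)
    load = {p: 0 for p in alt_ports}
    for key in elephants:
        port = min(alt_ports, key=lambda p: load[p])
        new_ports[key] = port        # in practice: send a flow_mod for this flow
        load[port] += 1
    return new_ports


stats = {("theo", "bruce"): 1000, ("theo", "john"): 1}   # counts from the slide
ports = {("theo", "bruce"): 1, ("theo", "john"): 1}
elephants = find_elephants(stats, threshold=500)          # threshold is an assumption
new_ports = reroute(ports, elephants, alt_ports=[3])
```

Note that `find_elephants` has to see the whole table on every poll; that full scan is the cost the next slide points to.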
Problems with Switches
• The TCAM is very small and can support only a small number of rules
  – Only 1K per switch, while end hosts generate many more flows
• Having the controller install an entry for each flow increases latency
  – It takes about 10 ms to install a new rule
• So the flow must wait!
  – Rules can be installed at a rate of 13 Mbps, but traffic arrives at 250 Gbps
• Having the controller collect stats for all flows takes a lot of resources
  – For about 1K flows, you need about … MB
  – If you request stats every 5 seconds, then your total is: …
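The rate mismatch and the polling cost above are simple arithmetic, sketched here in Python. The slide's stats-size figures are missing, so `bytes_per_entry` is a placeholder parameter, not a measured value; both function names are hypothetical.

```python
def line_rate_gap(install_bps: float, line_bps: float) -> float:
    """How many times faster traffic arrives than rules can be installed."""
    return line_bps / install_bps


def polling_overhead_bps(entries: int, bytes_per_entry: int, period_s: float) -> float:
    """Average control-channel bandwidth used by periodic get-stats polling."""
    return entries * bytes_per_entry * 8 / period_s


gap = line_rate_gap(install_bps=13e6, line_bps=250e9)
# gap is about 19,231: the data plane outpaces rule installation by four orders of magnitude.

# bytes_per_entry=100 is an illustrative assumption, not a figure from the slides.
overhead = polling_overhead_bps(entries=1000, bytes_per_entry=100, period_s=5.0)
# overhead == 160000.0 bits/s for one switch; it grows linearly with entries and switches.
```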
Getting Around the TCAM Limitation
• Cloud-centric solutions
  – Use placement tricks
• Data-center-centric solutions
  – Use an overlay; use placement tricks
• General technique: DiFane
  – Use detour routing

DiFane
• Creates a hierarchy of switches
  – Authority switches
    • Lots of memory
    • Collectively store all the rules
  – Local switches
    • Small amount of memory
    • Store a few rules
    • For unknown rules, route traffic to an authority switch

Packet Redirection and Rule Caching
[Diagram: ingress switch, authority switch, egress switch. The first packet (From: theo, To: bruce) detours through the authority switch; following packets hit the cached rules at the ingress switch and are forwarded directly. Other labels: "From: theo, To: cathy", "Everything else".]

Three Sets of Rules in TCAM
Type             Priority  Field 1  Field 2  Action                          Timeout
Cache rules      210       00**     111*     Forward to Switch B             10 sec
                 209       1110     11**     Drop                            10 sec
                 …         …        …        …                               …
Authority rules  110       00**     001*     Forward, trigger cache manager  Infinity
                 109       0001     0***     Drop, trigger cache manager     …
                 …         …        …        …                               …
Partition rules  15        0***     000*     Redirect to auth. switch        …
                 14        …        …        …                               …
• Cache rules: in ingress switches, reactively installed by authority switches
• Authority rules: in authority switches, proactively installed by the controller
• Partition rules: in every switch, proactively installed by the controller

Stage 1
The controller proactively generates the rules and distributes them to the authority switches.

Partition and Distribute the Flow Rules
[Diagram: the controller partitions the flow space among Authority Switches A, B and C and distributes the partition information to the ingress switch; flow-space regions are labeled accept and reject; an egress switch is shown.]

Stage 2
The authority switches always keep packets in the data plane and reactively cache rules.
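The two stages can be sketched in Python with TCAM-style ternary rules, where the highest-priority match wins. The authority rules and the cache priority 210 are taken from the table above; the single partition rule covering all traffic whose Field 1 starts with 0 is hypothetical. As a further simplification, this sketch caches an exact-match entry for the packet's header rather than a wildcard rule, which sidesteps the rule-overlap issues a real implementation has to handle.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TernaryRule:
    priority: int
    field1: str   # bit pattern; '*' matches either bit
    field2: str
    action: str

    def matches(self, f1: str, f2: str) -> bool:
        pattern, bits = self.field1 + self.field2, f1 + f2
        return all(p == "*" or p == b for p, b in zip(pattern, bits))


def lookup(rules: List[TernaryRule], f1: str, f2: str) -> Optional[TernaryRule]:
    """TCAM semantics: the highest-priority matching rule wins."""
    hits = [r for r in rules if r.matches(f1, f2)]
    return max(hits, key=lambda r: r.priority, default=None)


CACHE_PRIORITY = 210   # cache rules sit above authority and partition rules


def handle_packet(ingress: List[TernaryRule], authority: List[TernaryRule],
                  f1: str, f2: str) -> Tuple[str, str]:
    """Return (where the decision was made, action) for one packet at the ingress switch."""
    rule = lookup(ingress, f1, f2)
    if rule is None:
        return ("ingress", "drop")            # hypothetical default for uncovered space
    if rule.action != "redirect":
        return ("ingress", rule.action)       # cache hit: short path, no detour
    # Partition rule: detour through the authority switch, still in the data plane.
    auth = lookup(authority, f1, f2)
    action = auth.action if auth is not None else "drop"
    # Cache manager: install an exact-match cache rule back at the ingress switch.
    ingress.append(TernaryRule(CACHE_PRIORITY, f1, f2, action))
    return ("authority", action)


authority_rules = [
    TernaryRule(110, "00**", "001*", "forward"),
    TernaryRule(109, "0001", "0***", "drop"),
]
ingress_rules = [TernaryRule(15, "0***", "****", "redirect")]  # hypothetical partition

first = handle_packet(ingress_rules, authority_rules, "0010", "0011")   # via authority
second = handle_packet(ingress_rules, authority_rules, "0010", "0011")  # cached at ingress
```

The controller never appears in `handle_packet`: a miss costs a slightly longer data-plane path instead of a control-plane round trip.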
Packet Redirection and Rule Caching
[Diagram: ingress switch, authority switch, egress switch; the first packet detours through the authority switch, following packets hit the cached rules and are forwarded directly.]
A slightly longer path in the data plane is faster than going through the control plane.

Bin-Packing/Overlay

Virtual Switch
• A virtual switch has more memory than a hardware switch
  – So you can install many more rules in virtual switches
• Create an overlay between virtual switches
  – Install fine-grained rules in the virtual switches
  – Install normal OSPF rules in hardware
  – Can implement everything in the virtual switch
• Has the usual overlay drawbacks

Bin-Pack in Data Centers
• Insight: traffic flows between certain servers
  – If those servers are placed together, their rules need to be inserted in only one switch

Getting Around CPU Limitations
• Keep the controller out of the flow-creation loop
  – Create clone rules
• Keep the controller out of decision loops
  – Create forwarding groups

Clone Rules
• Insert a special wildcard rule
• When a packet arrives, the switch makes a micro-flow rule itself
  – The micro-flow rule inherits all properties of the wildcard rule
Packet arrives: From: Theo, To: Bruce
Wildcard rule: From: theo, To: ***, send on port 1; Timeout: 10 secs, count: 1
Cloned micro-flow rule: From: theo, To: Bruce, send on port 1; Timeout: 10 secs, count: 1

Forwarding Groups
• What happens when there's a failure?
  – Port 1 goes down?
  – The switch must inform the controller
• Instead, have backup ports
  – Each rule also states a backup port
Wildcard rule: From: theo, To: ***, send on port 1, backup: 2; Timeout: 10 secs, count: 1
Micro-flow rule: From: theo, To: Bruce, send on port 1, backup: 2; Timeout: 10 secs, count: 1
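Clone rules and backup ports can be sketched together in Python: the switch clones a micro-flow rule from a matching wildcard rule on its own, and falls back to the backup port locally when the primary port is down. The `clone` flag, the `"*"` wildcard (the slides write `***`), and all class and method names are hypothetical simplifications.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Rule:
    src: str
    dst: str                      # "*" is a wildcard here (the slides write "***")
    port: int
    backup: Optional[int] = None  # used if `port` is down
    timeout: float = 10.0
    count: int = 0
    clone: bool = False           # hypothetical flag: clone micro-flows from this rule


class CloningSwitch:
    def __init__(self, wildcards: List[Rule]) -> None:
        self.wildcards = wildcards
        self.microflows: Dict[Tuple[str, str], Rule] = {}
        self.down_ports: Set[int] = set()
        self.packet_ins = 0

    def live_port(self, rule: Rule) -> Optional[int]:
        """Primary port if it is up, else the backup; decided locally by the switch."""
        if rule.port not in self.down_ports:
            return rule.port
        if rule.backup is not None and rule.backup not in self.down_ports:
            return rule.backup
        return None                   # both ports down: the switch would have to ask the controller

    def receive(self, src: str, dst: str) -> Optional[int]:
        rule = self.microflows.get((src, dst))
        if rule is None:
            parent = next((w for w in self.wildcards
                           if w.src == src and w.dst in ("*", dst)), None)
            if parent is None:
                self.packet_ins += 1  # nothing to clone: fall back to packet_in
                return None
            if parent.clone:
                # The micro-flow rule inherits port, backup and timeout from its parent.
                rule = replace(parent, dst=dst, count=0, clone=False)
                self.microflows[(src, dst)] = rule
            else:
                rule = parent         # plain wildcard: one count for all matching flows
        rule.count += 1
        return self.live_port(rule)


sw = CloningSwitch([Rule("theo", "*", port=1, backup=2, clone=True)])
first = sw.receive("theo", "Bruce")    # clones a theo->Bruce micro-flow rule, port 1
sw.down_ports.add(1)                   # port 1 fails
second = sw.receive("theo", "Bruce")   # the same micro-flow now uses backup port 2
```

Because each micro-flow rule keeps its own count, the switch regains per-flow visibility without a controller round trip for every new flow.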
Forwarding Groups
• How do I do load balancing?
  – Something like ECMP?
  – Or server load balancing?
• Currently:
  – The controller installs rules for each flow and does load balancing when installing them
  – The controller can get stats and load-balance later
Wildcard rule: From: theo, To: ***, send on port 1; Timeout: 10 secs, count: 1
Micro-flow rule: From: theo, To: Bruce, send on port 1; Timeout: 10 secs, count: 1

Forwarding Groups
• Instead, have port groups
  – Each rule specifies a group of ports to send on
• When a micro-flow rule is created
  – The switch can assign ports to micro-flow rules
    • In a round-robin manner
    • Or based on probability
Round-robin example: wildcard rule From: theo, To: ***, send on ports 1, 2, 4; micro-flow rule From: theo, To: Bruce, send on port 1
Probabilistic example: wildcard rule From: theo, To: ***, send on port 1 (10%), port 2 (90%); micro-flow rule From: theo, To: Bruce, send on port 2
(Both rules in each example: Timeout: 10 secs, count: 1)

Getting Around CPU Limitations
• Prevent the controller from polling switches
  – Introduce triggers:
    • Each rule has a trigger and sends stats to the controller when the threshold is reached
    • E.g., when more than 20 packets match the flow
  – Benefits of triggers:
    • Reduces the number of entries being returned
    • Limits the amount of network traffic

Summary
• Switches have several limitations
  – TCAM space
  – Switch CPU
• Interesting ways to reduce these limitations
  – Place more responsibility in the switch
    • Introduce triggers
    • Have the switch create micro-flow rules from general rules
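Port groups and triggers, two of the ways to move responsibility into the switch, can be sketched together in Python. Round-robin and weighted random choice stand in for whatever port-assignment policy a switch would actually implement, and the threshold of 20 echoes the slide's example; `PortGroupSwitch`, `round_robin`, and `weighted` are hypothetical names.

```python
import random
from dataclasses import dataclass, field
from itertools import cycle
from typing import Callable, Dict, List, Tuple

FlowKey = Tuple[str, str]


def round_robin(ports: List[int]) -> Callable[[], int]:
    """Port group that hands out ports in turn."""
    it = cycle(ports)
    return lambda: next(it)


def weighted(ports: List[int], weights: List[float], rng: random.Random) -> Callable[[], int]:
    """Port group that picks a port at random with the given probabilities."""
    return lambda: rng.choices(ports, weights=weights, k=1)[0]


@dataclass
class MicroRule:
    port: int
    count: int = 0


@dataclass
class PortGroupSwitch:
    pick_port: Callable[[], int]      # port-group policy attached to the wildcard rule
    trigger_threshold: int            # report once a flow's count exceeds this
    micro: Dict[FlowKey, MicroRule] = field(default_factory=dict)
    reports: List[Tuple[FlowKey, int]] = field(default_factory=list)

    def receive(self, src: str, dst: str) -> int:
        key = (src, dst)
        rule = self.micro.get(key)
        if rule is None:
            # The switch, not the controller, picks a port for the new micro-flow.
            rule = MicroRule(self.pick_port())
            self.micro[key] = rule
        rule.count += 1
        if rule.count == self.trigger_threshold + 1:
            # Trigger: push this flow's stats once, instead of being polled.
            self.reports.append((key, rule.count))
        return rule.port


rr = PortGroupSwitch(round_robin([1, 2, 4]), trigger_threshold=20)
flows = [("theo", "bruce"), ("theo", "john"), ("theo", "cathy"), ("john", "bruce")]
rr_ports = [rr.receive(s, d) for s, d in flows]   # ports 1, 2, 4, then 1 again
for _ in range(25):
    rr.receive("theo", "bruce")                   # count goes from 1 to 26; trigger fires at 21

lb = PortGroupSwitch(weighted([1, 2], [0.1, 0.9], random.Random(0)), trigger_threshold=20)
lb_ports = [lb.receive("theo", f"host{i}") for i in range(1000)]  # roughly 90% on port 2
```

The controller only hears about the one flow that crossed the threshold, rather than receiving every entry on each poll.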