Chapter 12, Part 1: Connected Word Recognition


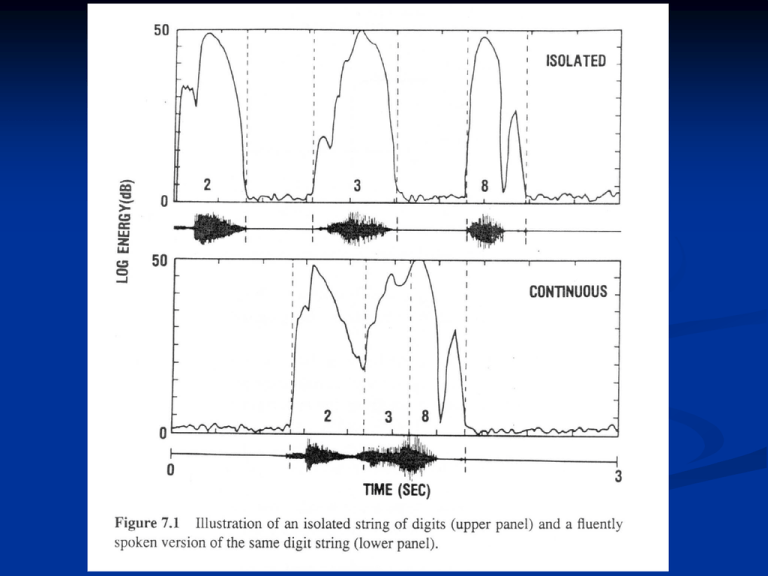

The connected word recognition problem

Problem definition: Given a fluently spoken sequence of words, how can we determine the optimum match in terms of a concatenation of word reference patterns?

To solve the connected word recognition problem, we must resolve the following problems:

connected word recognition

The test pattern is a sequence of $M$ feature vectors:

$$T = \{t(1), t(2), \ldots, t(M)\} = \{t(m)\}_{m=1}^{M}$$

The set of word reference patterns (templates or models) is:

$$R_i = \{r_i(1), r_i(2), \ldots, r_i(N_i)\}, \quad 1 \le i \le V$$

where $N_i$ is the duration of the $i$-th reference pattern.

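The problem is to find $R^*$, the optimum sequence of word reference patterns:

$$R^* = \{R_{q^*(1)} \oplus R_{q^*(2)} \oplus R_{q^*(3)} \oplus \cdots \oplus R_{q^*(L)}\}$$

where $\oplus$ denotes concatenation and $L$ is the number of words in the string.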


Consider constructing an arbitrary "super reference" pattern $R^s$ of the form:

$$R^s = R_{q(1)} \oplus R_{q(2)} \oplus R_{q(3)} \oplus \cdots \oplus R_{q(L)} = \{r^s(n)\}_{n=1}^{N_s}$$

in which $N_s = \sum_{\ell=1}^{L} N_{q(\ell)}$ is the total duration of the concatenated reference pattern $R^s$.


The time-aligned distance between $R^s$ and $T$ is:

$$D(R^s, T) = \min_{w(m)} \sum_{m=1}^{M} d\big(t(m), r^s(w(m))\big)$$

where $d(\cdot, \cdot)$ is a local spectral distance measure and $w(\cdot)$ is a warping function.
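The distance $D(R^s, T)$ can be sketched as a small dynamic program over the test frames. This is a minimal illustration under assumptions the slides do not fix: scalar feature frames, an absolute-difference local distance $d$, and warping functions with $w(1) = 1$, $w(M) = N_s$ and local steps $w(m) - w(m-1) \in \{0, 1, 2\}$. The function names `super_reference` and `dtw_distance` and the toy templates are hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence

Frame = float

def super_reference(refs: Dict[int, List[Frame]], q: Sequence[int]) -> List[Frame]:
    """Concatenate R_q(1) (+) R_q(2) (+) ... (+) R_q(L) into one pattern R^s."""
    return [frame for i in q for frame in refs[i]]

def dtw_distance(T: Sequence[Frame], R: Sequence[Frame],
                 d: Callable[[Frame, Frame], float]) -> float:
    """min over w of sum_{m=1}^{M} d(t(m), r(w(m))).

    Assumed constraints: w(1) = 1, w(M) = N, w(m) - w(m-1) in {0, 1, 2}.
    Returns math.inf if no admissible warping path exists.
    """
    M, N = len(T), len(R)
    prev = [math.inf] * N
    prev[0] = d(T[0], R[0])              # w(1) = 1
    for m in range(1, M):
        cur = [math.inf] * N
        for n in range(N):
            best = min(prev[n - k] for k in (0, 1, 2) if n - k >= 0)
            if best < math.inf:
                cur[n] = best + d(T[m], R[n])
        prev = cur
    return prev[N - 1]                   # w(M) = N

refs = {0: [1.0, 2.0], 1: [3.0]}         # toy "word" templates (made-up data)
T = [1.0, 2.0, 3.0, 3.0]
R_s = super_reference(refs, [0, 1])
print(R_s, dtw_distance(T, R_s, lambda x, y: abs(x - y)))  # [1.0, 2.0, 3.0] 0.0
```

Here $T$ is exactly the concatenation $R_0 \oplus R_1$ with its last frame repeated, so a warping function exists with zero accumulated distance.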

Optimize $D$:

$$D^* = \min_{R^s} D(R^s, T) = \min_{L_{\min} \le L \le L_{\max}} \; \min_{\substack{q(1), q(2), \ldots, q(L) \\ 1 \le q(i) \le V}} \; \min_{w(m)} \sum_{m=1}^{M} d\big(t(m), r^s(w(m))\big)$$

and

$$R^* = \arg\min_{R^s} D(R^s, T)$$
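Read literally, the optimization above is an exhaustive search: for every $L$ it tries all $V^L$ word sequences, builds the super reference, and time-aligns it with $T$. A minimal sketch, assuming scalar frames, an absolute-difference distance, and DTW constraints $w(1) = 1$, $w(M) = N_s$, steps $\{0, 1, 2\}$ (none of these are specified by the slides); `exhaustive_search` is a hypothetical name.

```python
import itertools
import math

def dtw_distance(T, R, d):
    """min over w of sum_m d(T[m], R[w(m)]); assumes w(1)=1, w(M)=N, steps {0, 1, 2}."""
    M, N = len(T), len(R)
    prev = [math.inf] * N
    prev[0] = d(T[0], R[0])
    for m in range(1, M):
        cur = [math.inf] * N
        for n in range(N):
            best = min(prev[n - k] for k in (0, 1, 2) if n - k >= 0)
            if best < math.inf:
                cur[n] = best + d(T[m], R[n])
        prev = cur
    return prev[N - 1]

def exhaustive_search(T, refs, d, L_min, L_max):
    """D* and R* by trying all V**L sequences q(1..L) for every L in [L_min, L_max]."""
    best_D, best_q = math.inf, None
    for L in range(L_min, L_max + 1):
        for q in itertools.product(sorted(refs), repeat=L):
            R_s = [frame for i in q for frame in refs[i]]   # super reference R^s
            D = dtw_distance(T, R_s, d)
            if D < best_D:
                best_D, best_q = D, q
    return best_D, best_q

refs = {0: [1.0, 2.0], 1: [3.0]}    # toy templates (made-up data)
T = [1.0, 2.0, 3.0, 3.0]
print(exhaustive_search(T, refs, lambda x, y: abs(x - y), L_min=1, L_max=2))  # (0.0, (0, 1))
```

The nested loops make the cost explicit: the inner DTW runs once per sequence, and the number of sequences grows as $V^L$.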


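The required computation is:

$$C_L \approx \frac{1}{3} \, M \left(\sum_{\ell=1}^{L} N_{q(\ell)}\right) V^L \approx \frac{1}{3} \, M \cdot L \cdot \bar{N} \cdot V^L \quad \text{(grid points)}$$

where $\bar{N}$ is the average reference-pattern duration and the factor $1/3$ reflects the fraction of the $M \times N_s$ grid allowed by the DTW slope constraints.

e.g., for $M = 300$, $L = 7$, $\bar{N} = 40$, and $V = 10$, then $C_L \approx 2.8 \times 10^{11}$ grid points.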
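The example count can be checked with a few lines of arithmetic. The sketch assumes the approximate form $C_L \approx M \cdot L \cdot \bar{N} \cdot V^L / 3$; the function name `exhaustive_grid_points` is hypothetical.

```python
def exhaustive_grid_points(M: int, L: int, N_bar: int, V: int) -> int:
    """Approximate DTW grid points for brute-force matching: M * L * N_bar * V**L / 3."""
    return M * L * N_bar * V ** L // 3

C_L = exhaustive_grid_points(M=300, L=7, N_bar=40, V=10)
print(f"{C_L:.1e}")  # 2.8e+11
```

The $V^L$ term dominates: each extra word in the string multiplies the work by roughly $V$.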


The alternative algorithms:

- Two-level dynamic programming approach
- Level building approach
- One-stage approach and subsequent generalizations

Two-level dynamic programming algorithm


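For each reference pattern $R_v$ and each test segment from frame $b$ to frame $e$:

$$D(v, b, e) = \min_{w(m)} \sum_{m=b}^{e} d\big(t(m), r_v(w(m))\big)$$

$$\tilde{D}(b, e) = \min_{1 \le v \le V} [D(v, b, e)] \quad \text{(best score)}$$

$$\tilde{N}(b, e) = \arg\min_{1 \le v \le V} [D(v, b, e)] \quad \text{(best reference index)}$$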
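The first level can be sketched by aligning every segment $t(b), \ldots, t(e)$ with every reference and keeping the best score and index. This version evaluates all $1 \le b \le e \le M$ (no range reduction) and assumes scalar frames, an absolute-difference distance, and DTW constraints $w(b) = 1$, $w(e) = N_v$, steps $\{0, 1, 2\}$; the names `level_one`, `D_tilde`, `N_tilde` are hypothetical.

```python
import math

def dtw_distance(T, R, d):
    """min over w of sum_m d(T[m], R[w(m)]); assumes w(1)=1, w(M)=N, steps {0, 1, 2}."""
    M, N = len(T), len(R)
    prev = [math.inf] * N
    prev[0] = d(T[0], R[0])
    for m in range(1, M):
        cur = [math.inf] * N
        for n in range(N):
            best = min(prev[n - k] for k in (0, 1, 2) if n - k >= 0)
            if best < math.inf:
                cur[n] = best + d(T[m], R[n])
        prev = cur
    return prev[N - 1]

def level_one(T, refs, d):
    """Return (D_tilde, N_tilde) keyed by 1-indexed (b, e), 1 <= b <= e <= M."""
    M = len(T)
    D_tilde, N_tilde = {}, {}
    for b in range(1, M + 1):
        for e in range(b, M + 1):
            segment = T[b - 1:e]                          # frames t(b) ... t(e)
            scores = {v: dtw_distance(segment, R, d) for v, R in refs.items()}
            v_best = min(scores, key=scores.get)          # best reference index
            D_tilde[(b, e)] = scores[v_best]              # best score
            N_tilde[(b, e)] = v_best
    return D_tilde, N_tilde

refs = {0: [1.0, 2.0], 1: [3.0]}   # toy templates (made-up data)
T = [1.0, 2.0, 3.0, 3.0]
D_tilde, N_tilde = level_one(T, refs, lambda x, y: abs(x - y))
print(D_tilde[(1, 2)], N_tilde[(1, 2)], D_tilde[(3, 4)], N_tilde[(3, 4)])  # 0.0 0 0.0 1
```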


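$$\bar{D}_\ell(e) = \min_{1 \le b \le e} [\tilde{D}(b, e) + \bar{D}_{\ell-1}(b-1)]$$

where $\bar{D}_\ell(e)$ is the best score for matching test frames $1$ to $e$ with exactly $\ell$ words.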

Step 1, Initialization:

$$\bar{D}_0(0) = 0, \qquad \bar{D}_\ell(0) = \infty, \quad 1 \le \ell \le L_{\max}$$

Step 2, Loop on $e$ for $\ell = 1$:

$$\bar{D}_1(e) = \tilde{D}(1, e), \quad 2 \le e \le M$$

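Step 3, Recursion, loop on $e$ for $\ell = 2, 3, \ldots, L_{\max}$:

$$\bar{D}_2(e) = \min_{1 \le b \le e} [\tilde{D}(b, e) + \bar{D}_1(b-1)], \quad 3 \le e \le M$$

$$\bar{D}_3(e) = \min_{1 \le b \le e} [\tilde{D}(b, e) + \bar{D}_2(b-1)], \quad 4 \le e \le M$$

$$\vdots$$

$$\bar{D}_\ell(e) = \min_{1 \le b \le e} [\tilde{D}(b, e) + \bar{D}_{\ell-1}(b-1)], \quad \ell + 1 \le e \le M$$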

Step 4, Final solution:

$$D^* = \min_{1 \le \ell \le L_{\max}} [\bar{D}_\ell(M)]$$
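Steps 1 to 4 can be sketched directly on a table of best segment scores $\tilde{D}(b, e)$, with backtracking added to recover the word boundaries. A minimal sketch, assuming `D_tilde` is a dict keyed by 1-indexed `(b, e)` (missing pairs are treated as $\infty$, which also covers a range-reduced table); `level_two` and the toy scores are hypothetical.

```python
import math

def level_two(D_tilde, M, L_max):
    """Second-level DP; returns (D*, best number of words, [(b, e), ...])."""
    INF = math.inf
    # D_bar[l][e]: best score of an l-word string covering frames 1..e
    D_bar = [[INF] * (M + 1) for _ in range(L_max + 1)]
    begin = [[None] * (M + 1) for _ in range(L_max + 1)]
    D_bar[0][0] = 0.0                                   # Step 1
    for lvl in range(1, L_max + 1):                     # Steps 2 and 3
        for e in range(lvl + 1, M + 1):                 # l + 1 <= e <= M
            for b in range(1, e + 1):
                cand = D_tilde.get((b, e), INF) + D_bar[lvl - 1][b - 1]
                if cand < D_bar[lvl][e]:
                    D_bar[lvl][e], begin[lvl][e] = cand, b
    L_best = min(range(1, L_max + 1), key=lambda lvl: D_bar[lvl][M])   # Step 4
    if D_bar[L_best][M] == INF:
        return INF, None, []
    bounds, e = [], M                                   # backtrack word boundaries
    for lvl in range(L_best, 0, -1):
        b = begin[lvl][e]
        bounds.append((b, e))
        e = b - 1
    return D_bar[L_best][M], L_best, bounds[::-1]

M = 4
D_tilde = {(b, e): 10.0 for b in range(1, M + 1) for e in range(b, M + 1)}
D_tilde.update({(1, 4): 5.0, (1, 2): 1.0, (3, 4): 1.0})   # made-up segment scores
print(level_two(D_tilde, M, L_max=3))  # (2.0, 2, [(1, 2), (3, 4)])
```

The two-word parse wins ($1 + 1 = 2$) over the single-word match of the whole utterance (score 5).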


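Computation cost of the two-level DP algorithm is:

$$C_{2L} = V \cdot M \cdot \bar{N} \cdot (2R + 1) \quad \text{(grid points)}$$

The required storage of the range-reduced algorithm, in which each beginning frame $b$ is paired with $2R + 1$ candidate ending frames $e$, is:

$$S_{2L} = 2M(2R + 1)$$

e.g., for $M = 300$, $\bar{N} = 40$, $V = 10$, $R = 5$: $C = 1{,}320{,}000$ grid points and $S = 6600$ locations for $\tilde{D}(b, e)$.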
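The example figures follow from plugging the parameters into the two formulas; a quick arithmetic check:

```python
M, N_bar, V, R = 300, 40, 10, 5
C_2L = V * M * N_bar * (2 * R + 1)   # grid points
S_2L = 2 * M * (2 * R + 1)           # storage locations
print(C_2L, S_2L)  # 1320000 6600
```

Compared with the brute-force count of about $2.8 \times 10^{11}$ grid points for $L = 7$, this is roughly $2 \times 10^5$ times less work.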

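Level building algorithm

At level 1, each reference $v$ yields accumulated distances $D_1^v(m)$ for ending frames $m$ in its own range:

$$D_1^1(m), \quad m_{11}(1) \le m \le m_{12}(1)$$

$$D_1^2(m), \quad m_{21}(1) \le m \le m_{22}(1)$$

$$\vdots$$

$$D_1^V(m), \quad m_{V1}(1) \le m \le m_{V2}(1)$$

The range of ending frames for level 1 is then:

$$m_1(1) = \min_{1 \le v \le V} [m_{v1}(1)], \qquad m_2(1) = \max_{1 \le v \le V} [m_{v2}(1)]$$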

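$$D_1^B(m) = \min_{1 \le v \le V} [D_1^v(m)]$$

$$N_1^B(m) = \arg\min_{1 \le v \le V} [D_1^v(m)]$$

$$F_1^B(m) = F_1^{N_1^B(m)}(m)$$

where $F_1^v(m)$ is the backpointer recorded for reference $v$ ending at frame $m$.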

For level 2:

$$m_1(2) = \min_{1 \le v \le V} [m_{v1}(2)], \qquad m_2(2) = \max_{1 \le v \le V} [m_{v2}(2)]$$

$$D^* = \min_{1 \le \ell \le L_{\max}} [D_\ell^B(M)]$$

e.g., $R^* = R_B \oplus R_A \oplus R_A \oplus R_B$
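The level-by-level recursion can be sketched for a toy problem. Assumptions not taken from the slides: scalar frames, an absolute-difference distance, within-word steps $w(m) - w(m-1) \in \{0, 1, 2\}$, each word entered at its first frame right after a frame where the previous level ended, and no range pruning (every $m_{v1}(\ell)$, $m_{v2}(\ell)$ spans all frames). The tables `DB`, `NB`, `FB` play the roles of $D_\ell^B(m)$, $N_\ell^B(m)$, $F_\ell^B(m)$; `level_building` is a hypothetical name.

```python
import math

def level_building(T, refs, d, L_max):
    """Return (D*, best word sequence) by building one level (word) at a time."""
    M, INF = len(T), math.inf
    # DB[l][m], NB[l][m], FB[l][m]: best score, best word, backpointer at level l, frame m
    DB = [[INF] * (M + 1) for _ in range(L_max + 1)]
    NB = [[None] * (M + 1) for _ in range(L_max + 1)]
    FB = [[None] * (M + 1) for _ in range(L_max + 1)]
    DB[0][0] = 0.0                           # level 0 "ends" before frame 1
    for lvl in range(1, L_max + 1):
        for v, R in refs.items():            # compute D_l^v(m) for reference v
            N = len(R)
            acc, start = [INF] * N, [None] * N
            for m in range(1, M + 1):
                new_acc, new_start = [INF] * N, [None] * N
                for n in range(N):
                    best, src = INF, None
                    for k in (0, 1, 2):      # continue inside word v
                        if n >= k and acc[n - k] < best:
                            best, src = acc[n - k], start[n - k]
                    # enter word v after level l-1 ended at frame m-1
                    if n == 0 and DB[lvl - 1][m - 1] < best:
                        best, src = DB[lvl - 1][m - 1], m - 1
                    if best < INF:
                        new_acc[n] = best + d(T[m - 1], R[n])
                        new_start[n] = src
                acc, start = new_acc, new_start
                # D_l^B(m) = min_v D_l^v(m), keeping N_l^B(m) and F_l^B(m)
                if acc[N - 1] < DB[lvl][m]:
                    DB[lvl][m], NB[lvl][m], FB[lvl][m] = acc[N - 1], v, start[N - 1]
    l_best = min(range(1, L_max + 1), key=lambda lvl: DB[lvl][M])   # D* = min_l D_l^B(M)
    if DB[l_best][M] == INF:
        return INF, []
    words, m = [], M
    for lvl in range(l_best, 0, -1):         # follow the backpointers
        words.append(NB[lvl][m])
        m = FB[lvl][m]
    return DB[l_best][M], words[::-1]

refs = {0: [1.0, 2.0], 1: [3.0]}   # toy templates (made-up data)
T = [1.0, 2.0, 3.0, 3.0]
print(level_building(T, refs, lambda x, y: abs(x - y), L_max=2))  # (0.0, [0, 1])
```

Only the best word per (level, frame) is kept, which is what keeps the work linear in $V$ and $L_{\max}$ instead of exponential.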

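Global range constraints on the warping path at test frame $m$:

$$L(m) = \frac{m + 1}{2}, \qquad U(m) = 2(m - 1) + 1$$

$$L(m) = \max\left[\frac{m + 1}{2},\; 2(m - M) + N(L_{\max})\right]$$

$$U(m) = \min\left[2(m - 1) + 1,\; \frac{m - M}{2} + N(L_{\max})\right]$$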

$$C_{LB} = V \cdot L_{\max} \cdot \bar{N} \cdot M / 3 \quad \text{(grid points)}$$

$$S_{LB} = 3M \cdot L_{\max}$$
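For a rough comparison with the two-level DP figures, the level-building formulas can be evaluated with the same $M$, $\bar{N}$, $V$. The slides give no level-building example, so $L_{\max} = 7$ below is a hypothetical choice borrowed from the brute-force example's $L = 7$.

```python
M, N_bar, V = 300, 40, 10
L_max = 7                              # hypothetical value, not from the slides
C_LB = V * L_max * N_bar * M // 3      # grid points
S_LB = 3 * M * L_max                   # storage locations
print(C_LB, S_LB)  # 280000 6300
```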

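$$\hat{D}_{\ell-1} = \min_{m_1(\ell-1) \le m \le m_2(\ell-1)} \left[\frac{D_{\ell-1}^B(m)}{m}\right]$$

The frames $s_1$ and $s_2$ are each obtained by an arg max over the frames $m_1(\ell-1) \le m \le m_2(\ell-1)$, comparing the normalized score $D_{\ell-1}^B(m)/m$ with the threshold $M_T \cdot \hat{D}_{\ell-1}$.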

$$c(m) = \arg\min_{n} [D^v(m-1, n)]$$

with $n$ restricted to a window around $c(m-1)$, and

$$D^* = \min_{1 \le \ell \le L_{\max}} \; \min_{M_{\mathrm{END}} \le m \le M} [D_\ell^B(m)]$$
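The relaxed final decision can be sketched as a search over levels and over the last few test frames. This assumes `DB[l][m]` holds $D_\ell^B(m)$ with 1-indexed frames (column 0 and level 0 are bookkeeping); `relaxed_decision` and the table values are hypothetical.

```python
import math

INF = math.inf

def relaxed_decision(DB, M, M_end):
    """min over levels l >= 1 and end frames M_end <= m <= M of DB[l][m]."""
    best = (INF, None, None)                  # (score, level, end frame)
    for lvl in range(1, len(DB)):
        for m in range(M_end, M + 1):
            if DB[lvl][m] < best[0]:
                best = (DB[lvl][m], lvl, m)
    return best

# Toy D_l^B(m) table (made-up numbers): row = level l, column = test frame m.
DB = [
    [0.0, INF, INF, INF, INF],
    [INF, 2.0, 0.5, 1.0, 2.0],
    [INF, INF, 3.0, 0.7, 0.9],
]
print(relaxed_decision(DB, M=4, M_end=4))  # (0.9, 2, 4): strict endpoint
print(relaxed_decision(DB, M=4, M_end=3))  # (0.7, 2, 3): relaxed endpoint
```

Allowing the string to end slightly before frame $M$ lets trailing frames (for example, silence or a badly detected endpoint) go unmatched.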