1.5 Constraint Qualifications


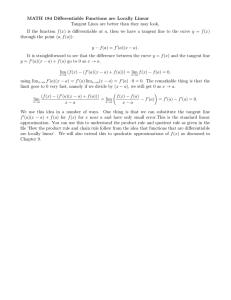

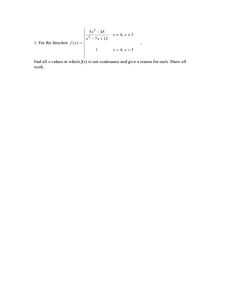

Spring 2016 Version

A TEXT FOR NONLINEAR PROGRAMMING

Thomas W. Reiland
Statistics Department
North Carolina State University
Raleigh, NC 27695-8203
Office: (919) 515-1939
Email: reiland@ncsu.edu

Table of Contents

Chapter I: Optimality Conditions
§ 1.1 Differentiability
§ 1.2 Unconstrained Optimization
§ 1.3 Equality Constrained Optimization
§ 1.3.1 Interpretation of Lagrange Multipliers
§ 1.3.2 Second order Conditions – Equality Constraints
§ 1.3.3 The General Case
§ 1.4 Inequality Constrained Optimization
§ 1.5 Constraint Qualifications (CQ)
§ 1.6 Second-order Optimality Conditions
§ 1.7 Constraint Qualifications and Relationships Among Constraint Qualifications

Chapter II: Convexity
§ 2.1 Convex Sets
§ 2.2 Convex Functions
§ 2.3 Subgradients and differentiable convex functions

§ 1.5 Constraint Qualifications (CQ)

Consider $x^0 \in A \subseteq E^n$. Intuitively, $h$ will be considered a tangent vector of $A$ at $x^0$ if $h$ leads us from $x^0$ into $A$.
Definition 1.5.1 Let $x^0 \in A \subseteq E^n$ and $z \in E^n$; if there exists $\delta > 0$ such that $x^0 + \theta z \in A$ for all $0 \le \theta \le \delta$, then $z$ is a linear tangent vector of $A$ at $x^0$.

This definition is too restrictive for our purposes. For example, a circle has no nonzero linear tangent vectors. However, a circle does have "curvilinear tangent vectors."

Definition 1.5.2 A curvilinear tangent vector of $A$ at $x^0$ is a vector $h$ such that $h$ is the derivative $h = \frac{dx(0)}{dt}$ at $t = 0$ of a continuous arc $x(t)$, $0 \le t \le \delta$, in $A$ having $x(0) = x^0$.

Remark: (1) Every linear tangent vector is a curvilinear tangent vector. (2) $\frac{dx(0)}{dt} = h$ implies more than that $h$ is tangent to the arc at $x(0) = x^0$.

Generalized Curvilinear Tangent Vector

Example 1-31: Let A be defined by g1 ( x) x22 x13 0, g 2 ( x) x2 x3 0 .

(i) $A = \{(x_1, x_2) \in E^2 : x_2 = x_1^3\}$. Curvilinear tangent vectors at $x^0 = (0, 0)^T$ are $\{(x_1, x_2) : x_2 = 0\}$. For instance, $x(t) = (t, t^3)^T$ gives $\left.\frac{dx(t)}{dt}\right|_{t=0} = (1, 0)^T$.

(ii) $A = \{(x_1, x_2) : x_1 \le 0,\ x_2 = x_1^2\} \cup \{(x_1, x_2) : x_1 \ge 0,\ x_2 = x_1\}$. Curvilinear tangent vectors at $x^0 = (0, 0)^T$ are $\{(x_1, x_2) : x_2 = \max(0, x_1)\}$.
(1) e.g. $x(t) = (t, t^3)^T$ is on the curve $x_2 = x_1^3$; $\left.\frac{dx(t)}{dt}\right|_{t=0} = (1, 0)^T$.
(2) e.g. $x(t) = (t, t)^T$ is on the curve $x_2 = x_1$; $\left.\frac{dx(t)}{dt}\right|_{t=0} = (1, 1)^T$.

(iii) $A = \{(x_1, x_2) : x_1 \ge 0,\ x_2 \le x_1^2\} \cup \{(x_1, x_2) : x_1 \le 0,\ \tfrac{2}{3} x_1 \le x_2 \le \tfrac{1}{3} x_1\}$. Curvilinear tangent vectors at $x^0 = (0, 0)^T$ are $\{(x_1, x_2) : x_1 \ge 0,\ x_2 \le 0\} \cup \{(x_1, x_2) : x_1 \le 0,\ \tfrac{2}{3} x_1 \le x_2 \le \tfrac{1}{3} x_1\}$.

Let $x^0 = (0, 0)^T$ and let the arc $x(t)$ be the curve $x_2 = x_1^{1/3}$ extending from $(0, 0)^T$ into the third quadrant. The curve is tangent to $h$ at $x^0$, but
$$\frac{dx(0)}{dt} = \lim_{y \to 0} \frac{x(y) - x(0)}{y - 0} \ne h,$$
i.e. $x(t) = (-t, -t^{1/3})^T$, $0 \le t \le \delta$, so
$$\lim_{y \to 0} \frac{1}{y} \begin{pmatrix} -y \\ -y^{1/3} \end{pmatrix} = \lim_{y \to 0} \begin{pmatrix} -1 \\ -y^{-2/3} \end{pmatrix} \ne h.$$

$-h$ is not a curvilinear tangent vector since it does not point back into $A$ (see figure below).

This concept is also too restrictive; we can generalize the idea by replacing a curve with a directionally convergent sequence of points.

Definition 1.5.3 Let $w \in E^n$ be a unit vector and $x^0 \in A \subseteq E^n$; $w$ is a sequential tangent vector of $A$ at $x^0$ if there exists a sequence $\{x^k\} \subseteq A$ such that $x^k \ne x^0$, $x^k \to x^0$, and
$$\lim_{k \to \infty} \frac{x^k - x^0}{\|x^k - x^0\|} = w.$$
In other words, the sequence $\{x^k\}$ converges to $x^0$ in the direction $w$, that is, $(x^k - x^0)/\|x^k - x^0\| \to w$.

Example 1-32: $A \subseteq E^2$ contains the unit disk; choose $x^0 = (0, 0)^T$. Let $w = (1, 0)^T$; $w$ is a sequential tangent vector of $A$ at $x^0$ since $x^k = (1/k, 0)^T$ satisfies the definition: $x^k \to (0, 0)^T = x^0$, $x^k \ne x^0$, and
$$\lim_{k \to \infty} \frac{x^k}{\|x^k\|} = \lim_{k \to \infty} \frac{(1/k,\ 0)^T}{1/k} = (1, 0)^T = w.$$
Now let $w = \left(\tfrac{1}{2}, \tfrac{\sqrt{3}}{2}\right)^T$. Then $w$ is a sequential tangent vector of $A$ at $x^0 = (0, 0)^T$ since $\{x^k\} \subseteq A$ with $x^k = \left(\tfrac{1}{2k}, \tfrac{\sqrt{3}}{2k}\right)^T$ works:
$$\lim_{k \to \infty} \frac{x^k}{\|x^k\|} = \lim_{k \to \infty} \frac{\left(\tfrac{1}{2k},\ \tfrac{\sqrt{3}}{2k}\right)^T}{1/k} = \left(\tfrac{1}{2}, \tfrac{\sqrt{3}}{2}\right)^T = w.$$

Definition 1.5.4 Let $x \in A \subseteq E^n$; the closed cone of tangents of $A$ at $x$, denoted $S(A, x)$, is the set of $z \in E^n$ such that there exists a sequence $\{x^k\} \subseteq A$ converging to $x$ and a sequence of nonnegative numbers (scalars) $\{\alpha_k\}$ such that the sequence $\{\alpha_k (x^k - x)\}$ converges to $z$.

Remark: $S(A, x)$ contains all the nonnegative multiples of the sequential tangent vectors $w$ in Definition 1.5.3. One can always let $\alpha_k = \dfrac{1}{\|x^k - x\|}$.

Remark: Every curvilinear tangent $h$ of $A$ at $x^0$ is in $S(A, x^0)$.

Proof: If $x(t)$, $0 \le t \le \delta$, is a continuous arc in $A$ having $x(0) = x^0$ and $\frac{dx(0)}{dt} = h$, then choose $\alpha_k = k$ and $x^k = x(1/k)$. We have $x^k \in A$ for all $k \ge 1/\delta$, since then $0 < 1/k \le \delta$; $\lim_{k \to \infty} x^k = \lim_{k \to \infty} x(1/k) = x(0) = x^0$; and
$$\lim_{k \to \infty} \alpha_k (x^k - x^0) = \lim_{k \to \infty} \frac{x(1/k) - x(0)}{1/k} = \frac{dx(0)}{dt} = h.$$
QED.

Geometric Interpretation of the closed cone of tangents $S(A, x)$

Translate $A$ by subtracting $x$ from each element of $A$: $A^* = A - x$. Let $\{x^k\}$ be a sequence in the translated set, $x^k \ne 0$, which converges to the origin. Construct a sequence of half-lines from the origin passing through $x^k$. These half-lines converge to a half-line that will be a member of $S(A, x)$. The union of all half-lines formed by taking all such sequences is $S(A, x)$.

Example 1-33: Let $A = \{(x_1, x_2) : (x_1 - 4)^2 + (x_2 - 2)^2 \le 1\}$ and $x = \left(4 - \tfrac{\sqrt{3}}{2},\ \tfrac{3}{2}\right)^T$, which lies on the boundary of $A$. Translate $A$ by subtracting $x$ from each element in $A$.
$$A^* = \left\{(x_1, x_2) : \left(x_1 - \tfrac{\sqrt{3}}{2}\right)^2 + \left(x_2 - \tfrac{1}{2}\right)^2 \le 1\right\}$$

By taking $x^k$'s on the boundary of $A^*$ converging to $(0, 0)^T$, we generate sequences of half-lines converging to a line that is the ordinary tangent line to the curve defined by the boundary of $A^*$ at the origin. The tangent line satisfies $\tfrac{\sqrt{3}}{2} x_1 + \tfrac{1}{2} x_2 = 0$. Repeating this process for all sequences in the interior of $A^*$ converging to $(0, 0)^T$, we get the following:
$$S(A, x) = \left\{(x_1, x_2) : \tfrac{\sqrt{3}}{2} x_1 + \tfrac{1}{2} x_2 \ge 0\right\}.$$

Remark: Let $g(x_1, x_2) = -(x_1 - 4)^2 - (x_2 - 2)^2 + 1 \ge 0$. Then
$$Z^1(x) = \{z \in E^2 : z^T \nabla g(x) \ge 0\} = \{z \in E^2 : \sqrt{3}\, z_1 + z_2 \ge 0\} = \left\{z \in E^2 : \tfrac{\sqrt{3}}{2} z_1 + \tfrac{1}{2} z_2 \ge 0\right\}.$$

Definition 1.5.5 Let $A \subseteq E^n$; the dual cone of $A$, denoted $A'$, is $A' = \{x \in E^n : x^T y \ge 0 \ \ \forall y \in A\}$. From the definition, this is obviously a cone and can never be empty since $0 \in A'$.

Example 1-34: See examples (1) – (5) below with associated figures.
(1) If $A \subseteq E^n$ is a subspace, then $A' = A^{\perp}$; the dual equals the orthocomplement of $A$. The dual cone is a generalization of orthogonality between subspaces. If $C$ is a nonempty convex cone, then $C + (-C') = E^n$.
(2) $A = \{x \in E^2 : 0 \le x_2 \le x_1\}$, $A' = \{x \in E^2 : x_1 \ge 0,\ x_1 + x_2 \ge 0\}$
(3) $A = \{x \in E^2 : x_2 \ge 0\}$, $A' = \{x \in E^2 : x_1 = 0,\ x_2 \ge 0\}$
(4) $A = \{x \in E^2 : x_2 \ne x_1\}$, $A' = \{0\}$
(5) $A = E^n$, $A' = \{0\}$

Lemma 1.21 Suppose that $x^0 \in X$. The set $Z^1(x^0) \cap Z^2(x^0)$ is empty if and only if $\nabla f(x^0) \in \left(Z^1(x^0)\right)'$.

Proof: $Z^1(x^0) \cap Z^2(x^0) = \emptyset$ if and only if $z^T \nabla f(x^0) \ge 0$ for all $z \in Z^1(x^0)$. Thus by definition, $\nabla f(x^0) \in \left(Z^1(x^0)\right)'$.

Lemma 1.22 Suppose that $x^0$ is a local minimum point for Problem (P). Then $\nabla f(x^0) \in S'(X, x^0)$.

Remark: Suppose $X = E^n$ (i.e. unconstrained problem) or $x^0 \in \operatorname{int}(X)$ (i.e. problem essentially unconstrained); then $S(X, x^0) = E^n$ and $S'(X, x^0) = \{0\}$, so we are left with the usual unconstrained result $\nabla f(x^0) = 0$.

Proof: Let $z \in S(X, x^0)$. Then there exists a sequence $\{x^k\} \subseteq X$ converging to $x^0$ and a sequence of nonnegative numbers $\{\alpha_k\}$ such that $\alpha_k (x^k - x^0) \to z$. $f$ is differentiable at $x^0$.
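$$f(x^k) = f(x^0) + (x^k - x^0)^T \nabla f(x^0) + \|x^k - x^0\|\, \beta(x^k; x^0)$$
(where $\beta$ is a function that converges to zero as $k$ approaches infinity), so
$$\alpha_k \left[f(x^k) - f(x^0)\right] = \alpha_k (x^k - x^0)^T \nabla f(x^0) + \alpha_k \|x^k - x^0\|\, \beta(x^k; x^0).$$
As $k \to \infty$, $\alpha_k \|x^k - x^0\|\, \beta(x^k; x^0) \to 0$ because $\alpha_k \|x^k - x^0\| \to \|z\|$, and since $x^k \in X$ and $x^0$ is a local minimum, $\alpha_k \left[f(x^k) - f(x^0)\right]$ converges to a nonnegative limit:
$$0 \le \lim_{k \to \infty} \alpha_k \left[f(x^k) - f(x^0)\right] = \lim_{k \to \infty} \alpha_k (x^k - x^0)^T \nabla f(x^0) = z^T \nabla f(x^0).$$
Therefore, $\nabla f(x^0) \in S'(X, x^0)$. QED

Theorem 1.23 Generalized Karush-Kuhn-Tucker (K-K-T) Conditions
Let $x^*$ be a local minimum to Problem (P) and suppose that $\left(Z^1(x^*)\right)' = S'(X, x^*)$. Then there exist $\lambda^* \in E^m$ and $\mu^* \in E^p$ such that the following are true:
(i) $\nabla f(x^*) - \sum_{i=1}^{m} \lambda_i^* \nabla g_i(x^*) - \sum_{j=1}^{p} \mu_j^* \nabla h_j(x^*) = 0$
(ii) $\lambda_i^* g_i(x^*) = 0,\ i = 1, \dots, m$
(iii) $\lambda^* \ge 0$

Proof: Suppose $x^*$ is a local minimum to Problem (P). By Lemma 1.22, $\nabla f(x^*) \in S'(X, x^*)$. If $\left(Z^1(x^*)\right)' = S'(X, x^*)$, then $\nabla f(x^*) \in \left(Z^1(x^*)\right)'$. By Lemma 1.21, $Z^1(x^*) \cap Z^2(x^*) = \emptyset$. By Theorem 1.18, (i) – (iii) hold. QED

Consider Problem (P'):
Min $f(x)$, $x \in E^n$
s.t. $g_i(x) \ge 0,\ i = 1, \dots, m$
$h_j(x) = 0,\ j = 1, \dots, p$
$x \ge 0$

Corollary 1.24 Let $x^*$ be a solution of (P') and suppose $\left(Z^1(x^*)\right)' = S'(X, x^*)$. Then there exist $\lambda^* \in E^m$ and $\mu^* \in E^p$ such that the following are true:
$\nabla f(x^*) - \sum_{i=1}^{m} \lambda_i^* \nabla g_i(x^*) - \sum_{j=1}^{p} \mu_j^* \nabla h_j(x^*) \ge 0$
$\lambda_i^* g_i(x^*) = 0,\ i = 1, \dots, m$
$\lambda^* \ge 0$
and
$(x^*)^T \left[\nabla f(x^*) - \sum_{i=1}^{m} \lambda_i^* \nabla g_i(x^*) - \sum_{j=1}^{p} \mu_j^* \nabla h_j(x^*)\right] = 0$

Remark: It is possible to find points satisfying the Karush-Kuhn-Tucker conditions (Theorem 1.23) that are not feasible.

Example 1-35:
Min $f(x) = x_1$, $x \in E^2$
s.t. $g_1(x) = 16 - (x_1 - 4)^2 - x_2^2 \ge 0$
$h_1(x) = (x_1 - 3)^2 + (x_2 - 2)^2 - 13 = 0$

Using the K-K-T conditions we locate three candidates:
$x^1 = (0, 0)^T$, $\lambda_1 = \tfrac{1}{8}$, $\mu_1 = 0$
$x^2 = (6.4, 3.2)^T$, $\lambda_1 = \tfrac{3}{40}$, $\mu_1 = \tfrac{1}{5}$
$x^3 = (3 + \sqrt{13},\ 2)^T$, $\lambda_1 = 0$, $\mu_1 = \tfrac{\sqrt{13}}{26}$

Since the contours of $f$ are just vertical lines, we can see that $x^1$, $x^2$ are local minimum points and $x^3$ is a local maximum point for Max $f(x) = x_1$.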
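As a rough numerical check of the three candidates in Example 1-35, the sketch below evaluates the stationarity residual $\nabla f(x) - \lambda_1 \nabla g_1(x) - \mu_1 \nabla h_1(x)$, the two constraint values, and the product $\lambda_1 g_1(x)$. It assumes the inequality constraint is read as $g_1(x) \ge 0$ with the multipliers entering with a minus sign. The names `kkt_report` and `candidates` are illustrative and do not come from the text.

```python
import math

def grad_f(x):
    # f(x) = x1, so the gradient is constant.
    return (1.0, 0.0)

def g1(x):
    return 16.0 - (x[0] - 4.0) ** 2 - x[1] ** 2

def grad_g1(x):
    return (-2.0 * (x[0] - 4.0), -2.0 * x[1])

def h1(x):
    return (x[0] - 3.0) ** 2 + (x[1] - 2.0) ** 2 - 13.0

def grad_h1(x):
    return (2.0 * (x[0] - 3.0), 2.0 * (x[1] - 2.0))

def kkt_report(x, lam, mu):
    """Evaluate the K-K-T quantities at x for multipliers (lam, mu)."""
    gf, gg, gh = grad_f(x), grad_g1(x), grad_h1(x)
    stationarity = tuple(gf[i] - lam * gg[i] - mu * gh[i] for i in range(2))
    return {
        "stationarity": stationarity,   # should be (0, 0)
        "g1": g1(x),                    # should be >= 0
        "h1": h1(x),                    # should be 0
        "lam_times_g1": lam * g1(x),    # complementary slackness, should be 0
    }

# Candidates and multipliers as listed in Example 1-35.
candidates = {
    "x1": ((0.0, 0.0), 1.0 / 8.0, 0.0),
    "x2": ((6.4, 3.2), 3.0 / 40.0, 1.0 / 5.0),
    "x3": ((3.0 + math.sqrt(13.0), 2.0), 0.0, math.sqrt(13.0) / 26.0),
}

if __name__ == "__main__":
    for name, (x, lam, mu) in candidates.items():
        print(name, kkt_report(x, lam, mu))
```

Under these assumptions all three residuals vanish up to rounding error, and $g_1$ is strictly positive only at $x^3$, which agrees with $\lambda_1 = 0$ there.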
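$I(x^1) = I(x^2) = \{1\}$

$$Z^1(x^1) = \{z \in E^2 : \nabla g_1(x^1)^T z \ge 0,\ \nabla h_1(x^1)^T z = 0\} = \{z \in E^2 : (8,\ 0)\, z \ge 0,\ (-6,\ -4)\, z = 0\} = \{z \in E^2 : z_1 \ge 0,\ z_2 = -\tfrac{3}{2} z_1\}$$
$$S(X, x^1) = \{z \in E^2 : z_1 \ge 0,\ z_2 = -\tfrac{3}{2} z_1\} \ \Rightarrow\ Z^1(x^1) = S(X, x^1) \ \Rightarrow\ \left(Z^1(x^1)\right)' = S'(X, x^1)$$
$$Z^2(x^1) = \{z \in E^2 : \nabla f(x^1)^T z < 0\} = \{z \in E^2 : (1,\ 0)\, z < 0\} = \{z \in E^2 : z_1 < 0\}$$
So $Z^1(x^1) \cap Z^2(x^1) = \emptyset$.

At $x^2$, $Z^1(x^2) = \{z \in E^2 : z_1 \ge 0,\ z_2 = -\tfrac{17}{6} z_1\}$ and $Z^2(x^2) = \{z \in E^2 : z_1 < 0\}$.

Comments concerning Lemma 1.22: Suppose that $x^0$ is a solution to Problem (P). Then $\nabla f(x^0) \in S'(X, x^0)$. Many results concerning optimality conditions for nonlinear programming problems (e.g. Karush-Kuhn-Tucker conditions, Lagrange multipliers) are special cases of Lemma 1.22. For special problems, the form of $S'(X, x^0)$ must be determined. This will be developed below.

Lemma 1.25 Suppose $g : E^n \to E^m$ is differentiable at $x^0$, suppose there exists $z \in E^n$ such that $\nabla g(x^0) z > 0$, and let $B = \{x : g(x) \ge g(x^0)\}$. Then
$$S(B; x^0) = \{x \in E^n : \nabla g(x^0) x \ge 0\},$$
and thus
$$S'(B; x^0) = \{\nabla g(x^0)^T \lambda : \lambda \in E^m,\ \lambda \ge 0\}.$$

Proof:
Part 1. Let $z \in E^n$ be such that $\nabla g(x^0) z > 0$; then $\nabla g(x^0)(\theta z) > 0$ for $\theta > 0$, and $g(x^0 + \theta z) \ge g(x^0)$ for all $\theta$ such that $0 \le \theta \le \delta$, for some $\delta > 0$. Define $x^k = x^0 + \tfrac{\delta}{k} z$; then $x^k \in B$ and $x^k \to x^0$ as $k \to \infty$. Let $\alpha_k = \tfrac{k}{\delta}$; then $\alpha_k (x^k - x^0) = z$, thus $\alpha_k (x^k - x^0) \to z$. Then $z \in S(B, x^0)$ and $\{y : \nabla g(x^0) y > 0\} \subseteq S(B, x^0)$.
Now let $x$ be such that $\nabla g(x^0) x \ge 0$. Then
$$\nabla g(x^0)\left[\gamma z + (1 - \gamma) x\right] > 0 \ \ \forall \gamma \in (0, 1) \ \Rightarrow\ \gamma z + (1 - \gamma) x \in S(B; x^0) \ \ \forall \gamma \in (0, 1).$$
Letting $\gamma \to 0$, we have $x \in S(B; x^0)$, since $S(B; x^0)$ is closed. Thus far we have shown $\{x : \nabla g(x^0) x \ge 0\} \subseteq S(B; x^0)$.

Part 2. Let $x \in S(B, x^0)$; then there exist $x^k \in B$ such that $x^k \to x^0$ and $\alpha_k \ge 0$ such that $\alpha_k (x^k - x^0) \to x$. Since $g$ is differentiable at $x^0$,
$$g(x^k) = g(x^0) + \nabla g(x^0)(x^k - x^0) + \|x^k - x^0\|\, \beta(x^k; x^0),$$
where $\beta(x^k; x^0) \to 0$ as $k \to \infty$. Since $x^k \in B$, $g(x^k) \ge g(x^0)$, and
$$0 \le \lim_{k \to \infty} \alpha_k \left[g(x^k) - g(x^0)\right] = \lim_{k \to \infty} \nabla g(x^0)\, \alpha_k (x^k - x^0) = \nabla g(x^0) x.$$
Hence $S(B; x^0) \subseteq \{x : \nabla g(x^0) x \ge 0\}$. QED

Remark: $\nabla g(x^0) z > 0$ says that the gradients $\nabla g_1(x^0), \dots, \nabla g_m(x^0)$ are in an open halfspace.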
In particular, this condition holds if the gradient vectors are linearly independent (i.e. if $\nabla g(x^0)$ has full rank). This follows from Lemma 1.19.

Consider Problem (P''):
Min $f(x)$, $x \in E^n$
s.t. $g_i(x) \ge 0,\ i = 1, \dots, m$

Notation:
$g = (g_1, \dots, g_m)^T$
$X = \{x : g(x) \ge 0\}$
$I = \{i : g_i(x^0) = 0\}$
$\nabla g_I(x^0)$ is the $k \times n$ matrix whose rows are the gradients of the active constraints.

By Lemma 1.22, if $x^0$ is a solution to Problem (P''), then $\nabla f(x^0) \in S'(X, x^0)$. Now
$$S(X, x^0) = S\left(\{x : g_I(x) \ge g_I(x^0) = 0\},\ x^0\right).$$
By Lemma 1.25, if there exists $y$ such that $\nabla g_I(x^0) y > 0$, then $S(X, x^0) = \{x : \nabla g_I(x^0) x \ge 0\}$ and $S'(X, x^0) = \{\nabla g_I(x^0)^T \lambda : \lambda \ge 0\}$. So
$$\nabla f(x^0) \in S'(X, x^0) \ \Rightarrow\ \nabla f(x^0) = \nabla g_I(x^0)^T \hat{\lambda},\quad \hat{\lambda} \ge 0,\ \hat{\lambda} \in E^k.$$
Define $\lambda_i = \hat{\lambda}_i$ for $i \in I$ and $\lambda_i = 0$ for $i \notin I$. Then $\nabla f(x^0) - \nabla g(x^0)^T \lambda = 0$, $\lambda_i g_i(x^0) = 0$, and $\lambda \ge 0$ (which are just the K-K-T conditions).

Remark: The multiplier corresponding to $\nabla f(x^0)$ is positive since we are assuming there exists $y$ such that $\nabla g_I(x^0) y > 0$. If no such $y$ exists, then we have only the following:
$$S(X, x^0) \subseteq \{x : \nabla g_I(x^0) x \ge 0\}$$
(see Part 2 of the proof of Lemma 1.25). This implies
$$\{x : \nabla g_I(x^0) x \ge 0\}' = \{\nabla g_I(x^0)^T \lambda : \lambda \ge 0\} \subseteq S'(X, x^0).$$
If no such $y$ exists, then by Lemma 1.19 there exists a nonzero $\lambda \ge 0$ such that $\nabla g_I(x^0)^T \lambda = 0$, and one obtains the Fritz-John conditions (Theorem 1.20): there exist $\lambda_0 \ge 0$, $\lambda \ge 0$, $(\lambda_0, \lambda) \ne 0$, such that $\lambda_0 \nabla f(x^0) - \nabla g_I(x^0)^T \lambda = 0$.

Consider the equality constrained problem:
Min $f(x)$, $x \in E^n$
s.t. $h_j(x) = 0,\ j = 1, \dots, p$
$f$, $h_j$'s continuously differentiable
$h = (h_1, \dots, h_p)^T$
$X = \{x \in E^n : h(x) = 0\}$

Lemma 1.26 Let $h : E^n \to E^p$ be continuously differentiable at $x^0$, and let $C = \{x \in E^n : h(x) = h(x^0)\}$. If $\nabla h(x^0)$ has full rank, then $S(C; x^0) = \{x : \nabla h(x^0) x = 0\}$ and hence $S'(C; x^0) = \{\nabla h(x^0)^T \mu : \mu \in E^p\}$.

Thus, Lemma 1.22 says $\nabla f(x^0) \in S'(X; x^0)$. This means that $\nabla f(x^0) = \nabla h(x^0)^T \mu$ for some $\mu \in E^p$. In other words, $\nabla f(x^0) - \sum_{j=1}^{p} \mu_j \nabla h_j(x^0) = 0$ (which is just Lagrange multipliers).

Consider the general nonlinear programming problem:
Min $f(x)$, $x \in E^n$
s.t.
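$g_i(x) \ge 0,\ i = 1, \dots, m$
$h_j(x) = 0,\ j = 1, \dots, p$
$f$, $g_i$'s, $h_j$'s are continuously differentiable
$g = (g_1, \dots, g_m)^T$
$h = (h_1, \dots, h_p)^T$
$I = \{i : g_i(x^0) = 0\}$
$X = \{x \in E^n : g_i(x) \ge 0,\ i = 1, \dots, m;\ h_j(x) = 0,\ j = 1, \dots, p\}$

Lemma 1.27 Let $v_1 : E^n \to E^m$ and $v_2 : E^n \to E^p$ be differentiable and continuously differentiable, respectively, at $x^0$. If $\nabla v_2(x^0)$ is of full rank, if there exists $y$ such that $\nabla v_1(x^0) y > 0$ and $\nabla v_2(x^0) y = 0$, and if $C = \{x : v_1(x) \ge v_1(x^0),\ v_2(x) = v_2(x^0)\}$, then
$$S(C; x^0) = \{x \in E^n : \nabla v_1(x^0) x \ge 0,\ \nabla v_2(x^0) x = 0\}$$
and thus
$$S'(C; x^0) = \{\nabla v_1(x^0)^T \lambda + \nabla v_2(x^0)^T \mu : \lambda \ge 0\}.$$

Now Lemma 1.22 says that if $x^0$ solves Problem (P), then $\nabla f(x^0) \in S'(X; x^0)$. Note that
$$S(X, x^0) = S\left(\{x \in E^n : g_I(x) \ge g_I(x^0) = 0,\ h(x) = h(x^0) = 0\},\ x^0\right).$$
By Lemma 1.27, if there exists $y \in E^n$ such that $\nabla g_I(x^0) y > 0$ and $\nabla h(x^0) y = 0$, and if $\nabla h(x^0)$ has full rank, then
$$S(X, x^0) = \{x : \nabla g_I(x^0) x \ge 0,\ \nabla h(x^0) x = 0\}$$
and
$$S'(X, x^0) = \{\nabla g_I(x^0)^T \lambda + \nabla h(x^0)^T \mu : \lambda \ge 0\}.$$
So $\nabla f(x^0) \in S'(X, x^0)$ implies that there exist $\hat{\lambda} \in E^k$, $\hat{\lambda} \ge 0$, and $\mu \in E^p$ such that $\nabla f(x^0) = \nabla g_I(x^0)^T \hat{\lambda} + \nabla h(x^0)^T \mu$. Define $\lambda_i = \hat{\lambda}_i$ for $i \in I$ and $\lambda_i = 0$ for $i \notin I$; then you get the following conditions (K-K-T):
$\nabla f(x^0) - \nabla g(x^0)^T \lambda - \nabla h(x^0)^T \mu = 0$
$\lambda_i g_i(x^0) = 0,\ i = 1, \dots, m$
$\lambda \ge 0$

Remark: The assumptions that there exists $y \in E^n$ such that $\nabla g_I(x^0) y > 0$ and $\nabla h(x^0) y = 0$, and that $\nabla h(x^0)$ has full rank, together comprise a constraint qualification. It holds if $\nabla g_i(x^0)$, $i \in I$, and $\nabla h_j(x^0)$, $j = 1, \dots, p$, are linearly independent.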
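The constraint qualification in the closing remark can be tested by hand in small cases. The sketch below does so for the special case $n = 2$ with one active inequality and one equality constraint, using the gradients of Example 1-35 at $x^1$ and $x^2$ and assuming the $g_1(x) \ge 0$ sign convention. The function names `cq_direction` and `linearly_independent` are hypothetical helpers, not notation from the text.

```python
def grad_g1(x):
    # g1(x) = 16 - (x1 - 4)^2 - x2^2
    return (-2.0 * (x[0] - 4.0), -2.0 * x[1])

def grad_h1(x):
    # h1(x) = (x1 - 3)^2 + (x2 - 2)^2 - 13
    return (2.0 * (x[0] - 3.0), 2.0 * (x[1] - 2.0))

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def linearly_independent(u, v, tol=1e-12):
    """Two vectors in E^2 are independent iff their determinant is nonzero."""
    return abs(u[0] * v[1] - u[1] * v[0]) > tol

def cq_direction(gg, gh, tol=1e-12):
    """Return y with gg.y > 0 and gh.y = 0, or None if no such y exists.

    Valid only for n = 2 with a single active inequality gradient gg
    and a single equality gradient gh.
    """
    if abs(gh[0]) < tol and abs(gh[1]) < tol:
        return None  # gh = 0, so the equality gradient lacks full rank
    y = (-gh[1], gh[0])  # spans the null space of gh in E^2
    s = dot(gg, y)
    if abs(s) < tol:
        return None  # gg is parallel to gh: no direction satisfies both
    return y if s > 0 else (-y[0], -y[1])

if __name__ == "__main__":
    for x in [(0.0, 0.0), (6.4, 3.2)]:
        gg, gh = grad_g1(x), grad_h1(x)
        print(x, linearly_independent(gg, gh), cq_direction(gg, gh))
```

In this two-dimensional special case the direction $y$ exists exactly when the two gradients are linearly independent, which matches the last sentence of the remark; in higher dimensions, or with several active constraints, finding $y$ generally calls for solving a linear program instead.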