GE 2013-14 Assessment Report


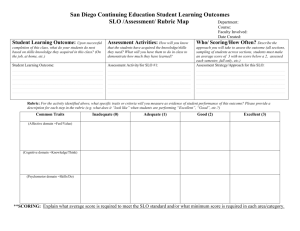

2013-2014 Annual Program Assessment Report

Please submit report to your department chair or program coordinator, the Associate Dean of your College and the assessment office by Monday, September 30, 2013. You may submit a separate report for each program which conducted assessment activities.

College: N/A
Department: N/A
Program: General Education
Assessment liaison: Beth Lasky, Director, GE, and Anu Thakur, Coordinator, Academic Assessment
Team members:
Ashley Samson, Kinesiology
Beto Gutierrez, Chicana/o Studies
Gigi Hessamian, Communication Studies
Mintesnot Woldeamanuel, Urban Studies and Planning
Nina Golden, Business Law

1. Overview of Annual Assessment Project(s). Provide a brief overview of this year's assessment plan and process.

In 2013-14, GE assessment involved implementing the plans for assessing the Comparative Cultural Studies (CCS) section of GE at CSUN:
a. Presentation of the assessment/grading rubric at department meetings and to Associate Deans, to encourage widespread use of the rubric for grading and assessing reflection assignments, especially in CCS courses.
b. Indirect assessment: a survey was developed to assess student motivation. It was administered as a pre-test and post-test in the Spring 2014 semester.
c. Presentation at the 2014 WASC Academic Resource Conference (ARC).

2. Assessment Buy-In. Describe how your chair and faculty were involved in assessment related activities. Did department meetings include discussion of student learning assessment in a manner that included the department faculty as a whole?

A questionnaire was sent, through department chairs, to faculty teaching Comparative Cultural Studies courses. Faculty representing different colleges and ranks were selected from the respondents who indicated interest in participating in the assessment of this GE section.
The extent of campus-wide buy-in following the rubric presentations at department meetings is yet to be determined. Department chairs and faculty have been asked to update the assessment team on how faculty are using the rubric in their courses and in department-level assessment efforts.

3. Student Learning Outcome Assessment Project. Answer items a-f for each SLO assessed this year. If you assessed an additional SLO, copy and paste items a-f below, BEFORE you answer them here, to provide additional reporting space.

3a. Which Student Learning Outcome was measured this year?

The following goal and student learning outcomes are listed for the Comparative Cultural Studies section of GE. The rubric measures SLOs 1 through 4.

Goal: Students will understand the diversity and multiplicity of cultural forces that shape the world through the study of cultures, gender, sexuality, race, religion, class, ethnicities and languages with special focus on the contributions, differences, and global perspectives of diverse cultures and societies.

Student Learning Outcomes
Students will:
1. Describe and compare different cultures;
2. Explain how various cultures contribute to the development of our multicultural world;
3. Describe and explain how race, ethnicity, class, gender, religion, sexuality and other markers of social identity impact life experiences and social relations;
4. Analyze and explain the deleterious impact and the privileges sustained by racism, sexism, ethnocentrism, classism, homophobia, religious intolerance or stereotyping on all sectors of society;
5. Demonstrate linguistic and cultural proficiency in a language other than English.

3b. Does this learning outcome align with one or more of the university's Big 5 Competencies? (Delete any which do not apply)
Critical Thinking
Oral Communication
Written Communication
Quantitative Literacy
Information Literacy

3c. Does this learning outcome align with University's commitment to supporting diversity through the cultivation and exchange of a wide variety of ideas and points of view? In what ways did the assessed SLO incorporate diverse perspectives related to race, ethnic/cultural identity/cultural orientations, religion, sexual orientation, gender/gender identity, disability, socio-economic status, veteran status, national origin, age, language, and employment rank?

3d. What direct and/or indirect instrument(s) were used to measure this SLO?

Direct assessment: A rubric was developed in 2012-13 to rate randomly selected reflection papers from CCS courses.
Indirect assessment: A pre- and post-survey was used to gauge student motivation in the GE courses.

3e. Describe the assessment design methodology: For example, was this SLO assessed longitudinally (same students at different points) or was a cross-sectional comparison used (Comparing freshmen with seniors)? If so, describe the assessment points used.

Assessment in the GE section involved only the students enrolled in the participating courses during the given semester. The student motivation survey was administered to the same students at the start and end of the semester. The participating courses represent five colleges across campus.

3f. Assessment Results & Analysis of this SLO: Provide a summary of how the results were analyzed and highlight findings from the collected evidence.

The rubric results were analyzed in 2012-13 and indicated unsatisfactory inter-rater reliability; the team intends to repeat the rubric assessment in Fall 2014. The pre- and post-survey results await analysis, which will also be done in Fall 2014.

3g. Use of Assessment Results of this SLO: Describe how assessment results were used to improve student learning. Were assessment results from previous years or from this year used to make program changes in this reporting year?
(Possible changes include: changes to course content/topics covered, changes to course sequence, additions/deletions of courses in program, changes in pedagogy, changes to student advisement, changes to student support services, revisions to program SLOs, new or revised assessment instruments, other academic programmatic changes, and changes to the assessment plan.)

This determination will be made after the data analysis and assessment in Fall 2014. The team hopes to find that, in addition to the faculty on the team, some faculty members across campus are using the rubric in their courses. If so, data from a variety of courses may be consolidated and assessed.

4. Assessment of Previous Changes: Present documentation that demonstrates how the previous changes in the program resulted in improved student learning.

N/A

5. Changes to SLOs? Please attach an updated course alignment matrix if any changes were made. (Refer to the Curriculum Alignment Matrix Template, http://www.csun.edu/assessment/forms_guides.html.)

N/A

6. Assessment Plan: Evaluate the effectiveness of your 5 year assessment plan. How well did it inform and guide your assessment work this academic year? What process is used to develop/update the 5 year assessment plan? Please attach an updated 5 year assessment plan for 2013-2018. (Refer to Five Year Planning Template, plan B or C, http://www.csun.edu/assessment/forms_guides.html.)

How GE assessment will progress over the next five years will be determined in discussion with the Office of Undergraduate Studies and the Office of Academic Assessment.

7. Has someone in your program completed, submitted or published a manuscript which uses or describes assessment activities in your program? Please provide citation or discuss.

Although no manuscript was written, the team's work was presented at the 2014 WASC Academic Resource Conference:

Lasky, B., Thakur, A., Hessamian, G., Woldeamanuel, M., Golden, N., & Sampson, A. (2014, April). GE Rubric/Assessment at CSUN: Starting Small and Scaling Up. Paper presented at the 2014 WASC Academic Resource Conference, Los Angeles, CA.

8. Other information, assessment or reflective activities or processes not captured above.

Beth Lasky and Anu Thakur have begun work on assessing the Critical Thinking section of GE. A team of faculty was chosen in Spring 2014. At a strategic planning meeting held in late Spring 2014, the team designed a diagnostic instrument to be administered in their classes at the start of the Fall 2014 semester. This effort will continue through 2014-15.