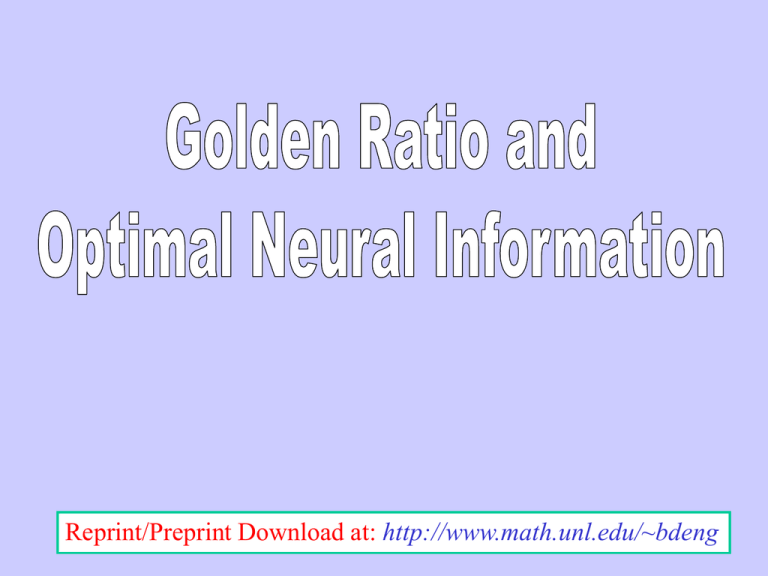


Reprint/Preprint Download at: http://www.math.unl.edu/~bdeng


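Golden Ratio $\varphi$:

$$\varphi + \varphi^2 = 1, \qquad \varphi = \frac{\sqrt{5} - 1}{2} = 0.6180\ldots$$

[Figure: a segment of length 1 divided into parts of length $\varphi$ and $\varphi^2$]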

• Pythagoreans (570 – 500 B.C.) were the first to know that the Golden Ratio is an irrational number.

• Euclid (300 B.C.) gave it a first clear definition as ‘the extreme and mean ratio’.

[Figure: lengths labeled 1, $\varphi$, $\varphi^2$, $\varphi^3$]

• Pacioli (1445 – 1517) popularized the Golden Ratio outside the math community with his book ‘The Divine Proportion’.

• Kepler (1571 – 1630) discovered the fact that $F_{n-1} / F_n \to \varphi$, where $F_n$ is the $n$th Fibonacci number.

• Jacques Bernoulli (1654 – 1705) made the connection between the logarithmic spiral and the golden rectangle.

• Binet (1786 – 1856) Formula:

$$F_n = \frac{(1/\varphi)^n - (-\varphi)^n}{\sqrt{5}}$$

• Ohm (1835) was the first to use the term ‘Golden Section’.
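The Kepler limit and the Binet formula in the list above can be checked numerically. The following is a minimal sketch, assuming the convention $F_1 = F_2 = 1$ and the golden ratio value $(\sqrt{5} - 1)/2$; the function names `fib` and `binet` are illustrative and not taken from the slides.

```python
import math

PHI = (math.sqrt(5) - 1) / 2  # golden ratio as used here, about 0.618


def fib(n):
    """Return the nth Fibonacci number, with F_0 = 0 and F_1 = F_2 = 1."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def binet(n):
    """Binet's formula written in terms of PHI = (sqrt(5) - 1) / 2."""
    return ((1 / PHI) ** n - (-PHI) ** n) / math.sqrt(5)


# Columns: n, F_n by recurrence, F_n by Binet's formula, ratio F_{n-1} / F_n
for n in range(1, 11):
    print(n, fib(n), round(binet(n)), fib(n - 1) / fib(n))
```

The last column should settle near 0.618 as n grows, which is the limit attributed to Kepler.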

Nature

Neurons Models

Rinzel & Wang (1997)

Bechtereva & Abdullaev (2000)

[Figure: neuron spike trains plotted against time, with burst durations marked 1T and 3T; one panel is dated (1994)]

SEED Implementation

Spike Excitation Encoding & Decoding (SEED)

Spikes per burst: 3 2 4 3 3 2 2 11 3 …

Signal → Encode → Channel → Decode

[Figure panel: Mistuned]

Information System

Alphabet: $A = \{0, 1\}$

Message: $s = 11100101\ldots$

Information System: an ensemble of messages, characterized by the symbol probabilities $P(\{0\}) = p_0$, $P(\{1\}) = p_1$.

The probability of a particular message $s_0 \ldots s_{n-1}$ is

$$p_{s_0} \cdots p_{s_{n-1}} = p_0^{\#\text{ of 0s}} \, p_1^{\#\text{ of 1s}}, \qquad \text{where } \#\text{ of 0s} + \#\text{ of 1s} = n.$$

The average symbol probability for a typical message is

$$(p_{s_0} \cdots p_{s_{n-1}})^{1/n} = p_0^{(\#\text{ of 0s})/n} \, p_1^{(\#\text{ of 1s})/n} \sim p_0^{p_0} \, p_1^{p_1}.$$

Entropy

Let

$$p_0 = (1/2)^{\log_{1/2} p_0} = (1/2)^{-\ln p_0 / \ln 2}, \qquad p_1 = (1/2)^{\log_{1/2} p_1} = (1/2)^{-\ln p_1 / \ln 2}.$$

Then the average symbol probability for a typical message is

$$(p_{s_0} \cdots p_{s_{n-1}})^{1/n} \sim p_0^{p_0} \, p_1^{p_1} = (1/2)^{(-p_0 \ln p_0 - p_1 \ln p_1)/\ln 2} = (1/2)^{E(p)}.$$

By definition, the entropy of the system is

$$E(p) = (-p_0 \ln p_0 - p_1 \ln p_1) / \ln 2$$

in bits per symbol.

In general, for $A = \{0, \ldots, n-1\}$ with $P(\{0\}) = p_0, \ldots, P(\{n-1\}) = p_{n-1}$, each average symbol contains

$$E(p) = (-p_0 \ln p_0 - \cdots - p_{n-1} \ln p_{n-1}) / \ln 2$$

bits of information; call it the entropy.

Example: Alphabet $A = \{0, 1\}$ with equal probability $P(\{0\}) = P(\{1\}) = 0.5$. Message: …011100101… Then each symbol of the alphabet contains $E = \ln 2 / \ln 2 = 1$ bit of information.
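The entropy formula and the typical-message estimate can be checked numerically. The sketch below is only an illustration: the probabilities 0.3 and 0.7, the message length, the random seed, and the function names are assumptions, not values from the slides. It draws a long random message and compares its geometric-mean symbol probability with $(1/2)^{E(p)}$.

```python
import math
import random


def entropy_bits(probs):
    """E(p) = -(sum of p_k ln p_k) / ln 2, in bits per symbol (0 ln 0 taken as 0)."""
    return -sum(p * math.log(p) for p in probs if p > 0) / math.log(2)


def average_symbol_probability(message, probs):
    """Geometric mean (p_{s_0} ... p_{s_{n-1}})^(1/n) of the symbol probabilities."""
    log_sum = sum(math.log(probs[s]) for s in message)
    return math.exp(log_sum / len(message))


probs = [0.3, 0.7]                 # illustrative values for p_0 and p_1
rng = random.Random(0)             # fixed seed so the run is repeatable
message = rng.choices([0, 1], weights=probs, k=100_000)

print(entropy_bits([0.5, 0.5]))                     # the equal-probability example
print(average_symbol_probability(message, probs))   # empirical geometric mean
print(0.5 ** entropy_bits(probs))                   # (1/2)^E(p)
```

The last two printed values should agree to a few decimal places, since the symbol frequencies of a long message approach $p_0$ and $p_1$.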


Bit rate

Golden Ratio Distribution


SEED Encoding:

Sensory Input Alphabet: $S_n = \{A_1, A_2, \ldots, A_n\}$ with probabilities $\{p_1, \ldots, p_n\}$.

Isospike Encoding: $E_n = \{$burst of 1 isospike, …, burst of $n$ isospikes$\}$

Message: SEED isospike trains…

Ideal Situation: 1) Each spike takes up the same amount of time, $T$; 2) Zero inter-spike transition.

Then the average time per symbol is

$$T_{ave}(p) = T p_1 + 2T p_2 + \cdots + nT p_n$$

and the bit rate (bits per unit time) is

$$r_n(p) = E(p) / T_{ave}(p)$$
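The bit rate is just the entropy divided by the average time per symbol. As a minimal sketch, assuming a burst of k isospikes lasts exactly kT and using illustrative function names and probabilities, it can be evaluated like this:

```python
import math


def entropy_bits(probs):
    """E(p) in bits per symbol (0 ln 0 taken as 0)."""
    return -sum(p * math.log(p) for p in probs if p > 0) / math.log(2)


def average_time(probs, T=1.0):
    """T_ave(p) = T p_1 + 2T p_2 + ... + nT p_n; probs[k-1] is p_k."""
    return sum(k * T * p for k, p in enumerate(probs, start=1))


def bit_rate(probs, T=1.0):
    """r_n(p) = E(p) / T_ave(p), in bits per unit time."""
    return entropy_bits(probs) / average_time(probs, T)


# Two illustrative distributions over bursts of 1 and 2 isospikes
print(bit_rate([0.5, 0.5]))
print(bit_rate([0.6, 0.4]))
```

Shifting probability toward the shorter burst lowers the entropy slightly but shortens the average symbol, so the rate can go up even though each symbol carries less information.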

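[Figure: isospike bursts plotted against time, with durations marked 1T and 3T]

Theorem: (Golden Ratio Distribution) For each $n \ge 2$,

$$r_n^* = \max\{ r_n(p) \mid p_1 + p_2 + \cdots + p_n = 1,\ p_k \ge 0 \} = -\ln p_1 / (T \ln 2)$$

for which $p_k = p_1^k$ and $p_1 + p_1^2 + \cdots + p_1^n = 1$.

In particular, for $n = 2$, $p_1 = \varphi$, $p_2 = \varphi^2$.

In addition, $p_1(n) \to 1/2$ as $n \to \infty$.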
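The theorem can be explored numerically. The sketch below is an illustration under assumptions, not the author's method: it finds $p_1$ by bisection on $p_1 + p_1^2 + \cdots + p_1^n = 1$, then compares the resulting rate for $n = 2$ with a brute-force search over a grid of distributions. The function names, the grid size, and the tolerance are all illustrative choices.

```python
import math


def solve_p1(n, tol=1e-14):
    """Bisection for the root of p + p^2 + ... + p^n = 1 in (0, 1), n >= 2."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if sum(mid ** k for k in range(1, n + 1)) < 1:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def bit_rate(probs, T=1.0):
    """r_n(p) = E(p) / T_ave(p) for bursts of 1..n isospikes."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0) / math.log(2)
    avg_time = sum(k * T * p for k, p in enumerate(probs, start=1))
    return entropy / avg_time


def max_rate(n, T=1.0):
    """The claimed maximum rate, -ln p_1 / (T ln 2)."""
    return -math.log(solve_p1(n)) / (T * math.log(2))


phi = (math.sqrt(5) - 1) / 2
# Brute-force check for n = 2 over p_1 = 0.001, 0.002, ..., 0.999
grid_best = max(bit_rate([q / 1000, 1 - q / 1000]) for q in range(1, 1000))

print(solve_p1(2), phi)          # the two values should coincide
print(max_rate(2), grid_best)    # grid maximum should sit just below the formula
print([round(solve_p1(n), 4) for n in (2, 3, 5, 10, 20)])  # drifts toward 0.5
```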

Golden Ratio Distribution


Generalized Golden Ratio Distribution = Special Case:

$T_k = m k$, $T_k / T_1 = k$

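Golden Sequence

(Rule: 1 → 10, 0 → 1)

| Sequence | # of 1s ($F_n$) | # of 0s ($F_{n-1}$) | Total ($F_n + F_{n-1} = F_{n+1}$) |
|---|---|---|---|
| 1 | 1 | 0 | 1 |
| 10 | 1 | 1 | 2 |
| 101 | 2 | 1 | 3 |
| 10110 | 3 | 2 | 5 |
| 10110101 | 5 | 3 | 8 |
| 1011010110110 | 8 | 5 | 13 |
| 101101011011010110101 | 13 | 8 | 21 |

P{fat tile} → $\varphi$, P{thin tile} → $\varphi^2$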

(# of 1s)/(# of 0s) $= F_n / F_{n-1} \to 1/\varphi$, with $F_{n+1} = F_n + F_{n-1}$

⇒ Distribution: $1 = F_n / F_{n+1} + F_{n-1} / F_{n+1}$ ⇒ $p_1 \to \varphi$, $p_0 \to \varphi^2$
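The golden sequence and its symbol frequencies are easy to reproduce. This is a minimal sketch, assuming the substitution rule reads 1 → 10, 0 → 1 and starts from the word "1" (a reading that reproduces the sequences listed above); the function name `golden_sequence` is illustrative.

```python
def golden_sequence(steps):
    """Apply the substitution 1 -> 10, 0 -> 1 repeatedly, starting from '1'.

    Returns the list of words, from the starting word up to `steps` substitutions.
    """
    rule = {"1": "10", "0": "1"}
    word = "1"
    words = [word]
    for _ in range(steps):
        word = "".join(rule[c] for c in word)
        words.append(word)
    return words


# Columns: word, # of 1s, # of 0s, total length, fraction of 1s
for w in golden_sequence(6):
    ones, zeros = w.count("1"), w.count("0")
    print(w, ones, zeros, len(w), ones / len(w))
```

The fraction of 1s in the last column should approach 0.618, and the fraction of 0s should approach 0.382, matching the limiting distribution stated above.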
