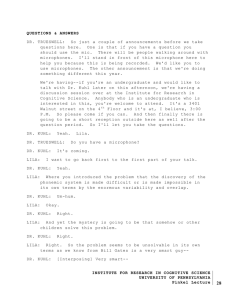

INSTITUTE FOR RESEARCH IN COGNITIVE SCIENCE, UNIVERSITY OF PENNSYLVANIA
