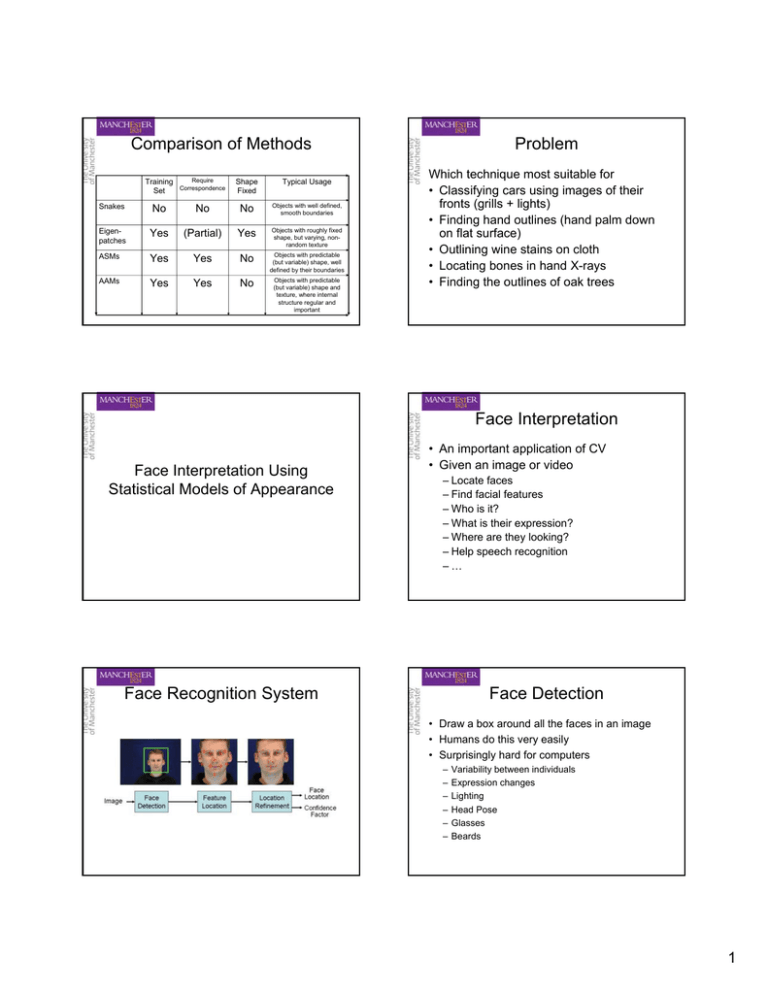

Comparison of Methods Problem


Comparison of Methods

| Method | Training set | Require correspondence | Shape fixed | Typical usage |
|---|---|---|---|---|
| Snakes | No | No | No | Objects with well-defined, smooth boundaries |
| Eigenpatches | Yes | (Partial) | Yes | Objects with roughly fixed shape, but varying, nonrandom texture |
| ASMs (Active Shape Models) | Yes | Yes | No | Objects with predictable (but variable) shape, well defined by their boundaries |
| AAMs (Active Appearance Models) | Yes | Yes | No | Objects with predictable (but variable) shape and texture, where internal structure is regular and important |

Problem
Which technique is most suitable for:
• Classifying cars using images of their fronts (grilles + lights)
• Finding hand outlines (hand palm down on a flat surface)
• Outlining wine stains on cloth
• Locating bones in hand X-rays
• Finding the outlines of oak trees

Face Interpretation Using Statistical Models of Appearance
• An important application of computer vision
• Given an image or video:
– Locate faces
– Find facial features
– Who is it?
– What is their expression?
– Where are they looking?
– Help speech recognition
– …

Face Recognition System

Face Detection
• Draw a box around all the faces in an image
• Humans do this very easily
• Surprisingly hard for computers, because of:
– Variability between individuals
– Expression changes
– Lighting
– Head pose
– Glasses
– Beards

Face Detection
Two common approaches:
1) Feature based
– Search for possible eyes/noses/mouths
– Group them together to find a face
2) Whole-face detection
– Search for rectangles containing a whole face

Searching for Features
• Where does a feature appear?
– What size and orientation is it?
• Search the image at all locations, sizes and angles
• With orientation-invariant features
– Need only search at all locations and scales

Evaluating Match
• How do we evaluate an image patch?
– Is this image patch an example of my model?
• Classification problem
• Each patch is either
– foreground (target structure), or
– background

Comparing Patches
• Simplest model: a single template
– Just a patch from a single image, $M(x,y)$
• Measure the difference to the target patch: $D(I(x,y), M(x,y))$

Feature Detection
• Image patch with intensities $g$
• Use a background model to classify: label the patch as the model if
$$p_{\text{model}}(g)\,P(\text{model}) > p_{\text{background}}(g)\,P(\text{background})$$
• This only works if the PDFs are good approximations, which is often not the case

Measuring Differences
• Sum of squares difference
$$D(I,M) = \sum_{x,y} \big(I(x,y) - M(x,y)\big)^2$$
• Assumes Gaussian noise
• Sensitive to outliers
• Apply a threshold to detect the object
• Could estimate foreground/background PDFs for $D(I,M)$

Measuring Differences
• Sum of absolute differences
$$D(I,M) = \sum_{x,y} \lvert I(x,y) - M(x,y) \rvert$$
• Assumes exponential noise, $p(n) \propto \exp(-\lvert n \rvert / \sigma)$
• More robust than sum of squares
• Correlation (larger values mean a better match)
$$D(I,M) = \sum_{x,y} I(x,y)\,M(x,y)$$
• Normalized correlation
$$D(I,M) = \sum_{x,y} \frac{\big(I(x,y) - \mu_I\big)\big(M(x,y) - \mu_M\big)}{\sigma_I\,\sigma_M}$$
where $\mu_I, \sigma_I$ are the mean and standard deviation of the intensities in $I$ (likewise $\mu_M, \sigma_M$ for $M$)

Pre-processing Steps
• Advantageous to pre-process images
– Apply normalization to remove lighting effects etc.
– Normalized correlation is the commonest example:
$$I(x,y) \to \frac{I(x,y) - \mu_I}{\sigma_I}$$

Gradients
• Gradients are invariant to constant offsets:
$$I(x,y) \to \big(G_x(x,y), G_y(x,y)\big)$$
• Compare multi-channel images using
$$D(I,M) = \sum_{x,y,k} \big(I(x,y,k) - M(x,y,k)\big)^2$$
• Or use the gradient magnitude:
$$I \to \lvert G \rvert = \sqrt{G_x^2 + G_y^2}$$

Rectification
• Split each gradient image into positive and negative images (e.g. $G_x \to G_x^+, G_x^-$):
$$R_+(x,y) = \begin{cases} I(x,y) & \text{if } I(x,y) > 0 \\ 0 & \text{if } I(x,y) \le 0 \end{cases} \qquad R_-(x,y) = \begin{cases} 0 & \text{if } I(x,y) > 0 \\ -I(x,y) & \text{if } I(x,y) \le 0 \end{cases}$$
[Figure: image $I$; gradients $G_x$, $G_y$; rectified channels $G_x^+$, $G_x^-$, $G_y^+$, $G_y^-$; smoothed rectified channels]

Feature Detection
• Pre-process image and patch
• Compare image patches
• Select good matches
• Optimal choice of pre-processing and match measure is still a research issue

Whole Face Detection
• Use a classifier to detect face patches
– Search at multiple scales

Search for Facial Features
• Search locally for individual features
[Figure: example training features (right eye, left mouth corner) and their local search predictions]

Combining Feature Responses (David Cristinacce)
[Figure: good vs. poor local search; original image, detected points, sum of pairwise point distributions, final points]

Evaluating Results
• BIOID database: 23 people, 1522 images
– Web cam, office scenes, mostly neutral expressions

Further Refinement
• Sparse points found
• Good starting position for model matching
• Face AAM refinement leads to more accurate feature location
– Obtain model parameters

Face Interpretation
• Face parameters $c$ analyzed for
– Expression
– Head pose
– Identity
• Identity parameters $c_{id} = P_{id}\,c$, with $P_{id}$ learnt from the training set
• To compare two faces, use the angle between their identity parameters:
$$\delta_{12} = \cos^{-1}\left(\frac{c_{id}^{(1)} \cdot c_{id}^{(2)}}{\lvert c_{id}^{(1)} \rvert\,\lvert c_{id}^{(2)} \rvert}\right)$$

Performance Evaluation
• XM2VTS (200 clients, 76 impostors)
– 3 images/client for registration
– 2 images/client + 8/impostor for test
• Using manual annotations: EER (equal error rate) = 0.8%
– Representation seems OK
• Fully automatic system: EER = 3.7%
– Recognition: 1st choice correct 93%
• Main source of error: feature location
– Small location error confuses the system

State of the Art
• 2002 Face Recognition Vendor Test
– 10 proprietary face recognition systems
– 121,000 mug-shot images of 37,000 people
• Indoor 'mugshot' images
– Best performer: 90% true positives (TP) at 1% false accepts (FA)
– Range 34%–90%
• Outdoor 'mugshot' images, with the sample taken indoors on the same day
– Best performer: 50% TP at 1% FA
• Best identification rate 73% (controlled conditions)

Facial Behavior (Franck Bettinger, Craig Hack)

Overview of the Model
• Learning from a video sequence
[Figure: part of training sequence]
• Synthesis of new behaviors
[Figure: face model modes]
(Inspired by work done at Leeds University by David Hogg et al.)

Synthesising Sequences
• Sample group models from the VLMM (variable-length Markov model)
• Sample sub-trajectories from groups
• Reconstruct the face from parameters
[Figure: training sequence labelled by group (F A B E E ...) and the generated sequence]

Comparison with Training Set
• Volunteers were unable to distinguish between real and generated sequences
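The Comparing Patches and Measuring Differences slides describe template matching. Below is a minimal, single-scale sketch in plain Python of those match measures and an exhaustive search over locations.

It rests on our own assumptions:
• Images are lists of rows of grey levels.
• The function names (`ssd`, `sad`, `normalized_correlation`, `best_match`) are illustrative only and do not come from any library.
• The normalized correlation is additionally divided by the pixel count N, so scores lie in [-1, 1]. This rescaling does not change which location wins.

```python
import math


def ssd(patch, template):
    """Sum of squared differences, sum over (x, y) of (I - M)^2. Lower is better."""
    return sum((i - m) ** 2
               for row_i, row_m in zip(patch, template)
               for i, m in zip(row_i, row_m))


def sad(patch, template):
    """Sum of absolute differences, sum of |I - M|. Lower is better; less outlier-sensitive."""
    return sum(abs(i - m)
               for row_i, row_m in zip(patch, template)
               for i, m in zip(row_i, row_m))


def normalized_correlation(patch, template):
    """Sum of (I - mu_I)(M - mu_M) / (sigma_I sigma_M), divided by N so it lies in [-1, 1].
    Higher is better; unaffected by an offset or a positive gain applied to either patch."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    n = len(p)
    mu_p, mu_t = sum(p) / n, sum(t) / n
    sd_p = math.sqrt(sum((v - mu_p) ** 2 for v in p) / n)
    sd_t = math.sqrt(sum((v - mu_t) ** 2 for v in t) / n)
    if sd_p == 0 or sd_t == 0:
        return 0.0  # a flat patch has no structure to correlate
    return sum((a - mu_p) * (b - mu_t) for a, b in zip(p, t)) / (n * sd_p * sd_t)


def extract_patch(image, x, y, w, h):
    """Return the w-by-h sub-image whose top-left corner is at column x, row y."""
    return [row[x:x + w] for row in image[y:y + h]]


def best_match(image, template, score, lower_is_better=True):
    """Exhaustive single-scale search: score the template at every location.
    Returns (best_score, x, y); ties keep the first location scanned."""
    h, w = len(template), len(template[0])
    best = None
    for y in range(len(image) - h + 1):
        for x in range(len(image[0]) - w + 1):
            s = score(extract_patch(image, x, y, w, h), template)
            if best is None or (s < best[0] if lower_is_better else s > best[0]):
                best = (s, x, y)
    return best


template = [[9, 1],
            [1, 9]]
image = [[0, 0, 0, 0],
         [0, 0, 0, 0],
         [0, 9, 1, 0],
         [0, 1, 9, 0]]
print(best_match(image, template, ssd))  # (0, 1, 2)
print(best_match(image, template, normalized_correlation, lower_is_better=False))  # (1.0, 1, 2)
```

`ssd` and `sad` are minimised, while the correlation scores are maximised; this is why `best_match` takes the `lower_is_better` flag. A full detector would repeat the search over several scales. For features that are not orientation-invariant, it would also search over angles.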
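The gradient, rectification and smoothing pre-processing from the Gradients and Rectification slides can be sketched in the same style. The slides do not specify the gradient operator or the smoothing kernel, so two choices here are our own assumptions:
• Central differences for the gradients, with borders left at 0.
• A 3x3 mean filter for smoothing.

The helper names `preprocess` and `multichannel_ssd` are hypothetical.

```python
import math


def gradients(image):
    """Central-difference gradients (Gx, Gy); border pixels are left at 0."""
    h, w = len(image), len(image[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = (image[y][x + 1] - image[y][x - 1]) / 2.0
            gy[y][x] = (image[y + 1][x] - image[y - 1][x]) / 2.0
    return gx, gy


def magnitude(gx, gy):
    """|G| = sqrt(Gx^2 + Gy^2) at every pixel."""
    return [[math.hypot(a, b) for a, b in zip(ra, rb)] for ra, rb in zip(gx, gy)]


def rectify(channel):
    """Split a signed channel into R+ (positive part) and R- (negated non-positive part)."""
    pos = [[v if v > 0 else 0.0 for v in row] for row in channel]
    neg = [[-v if v <= 0 else 0.0 for v in row] for row in channel]
    return pos, neg


def box_smooth(channel):
    """3x3 mean filter; pixels near the border average over the neighbours that exist."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [channel[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out


def preprocess(image):
    """Gradients -> rectify -> smooth, giving four channels [Gx+, Gx-, Gy+, Gy-]."""
    gx, gy = gradients(image)
    return [box_smooth(c) for c in (*rectify(gx), *rectify(gy))]


def multichannel_ssd(a, b):
    """D(I, M) = sum over x, y, k of (I(x, y, k) - M(x, y, k))^2."""
    return sum((p - q) ** 2
               for ca, cb in zip(a, b)
               for ra, rb in zip(ca, cb)
               for p, q in zip(ra, rb))


step = [[0, 0, 10, 10] for _ in range(4)]          # vertical step edge
brighter = [[v + 100 for v in row] for row in step]  # same edge, constant offset
print(multichannel_ssd(preprocess(step), preprocess(brighter)))  # 0.0
```

The constant offset disappears in the gradients, so the two pre-processed images match exactly. Rectification keeps edge polarity in separate channels: a dark-to-light edge and a light-to-dark edge land in $G_x^+$ and $G_x^-$ respectively, so they still compare as different.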
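The identity comparison on the Face Interpretation and Performance Evaluation slides can be sketched as follows. It projects model parameters with $c_{id} = P_{id}\,c$, then compares two faces by the angle $\delta_{12}$.

The equal error rate is the operating point where the false accept rate equals the false reject rate. It is estimated here by sweeping each observed distance as the acceptance threshold, which is a common approximation and our own choice.

The slides say only that $P_{id}$ is learnt from the training set. In this sketch it is simply passed in as a hypothetical matrix (a list of rows).

```python
import math


def identity_params(p_id, c):
    """c_id = P_id c, with P_id given as a list of rows (assumed learnt elsewhere)."""
    return [sum(p * v for p, v in zip(row, c)) for row in p_id]


def angle_between(c1, c2):
    """delta_12 = arccos(c1 . c2 / (|c1| |c2|)) in radians; 0 means same direction."""
    n1 = math.sqrt(sum(a * a for a in c1))
    n2 = math.sqrt(sum(b * b for b in c2))
    if n1 == 0 or n2 == 0:
        raise ValueError("angle is undefined for a zero parameter vector")
    cos_t = sum(a * b for a, b in zip(c1, c2)) / (n1 * n2)
    return math.acos(max(-1.0, min(1.0, cos_t)))  # clamp rounding error into [-1, 1]


def equal_error_rate(genuine, impostor):
    """Approximate EER from distances (smaller = more alike).
    Tries each observed distance as the acceptance threshold and returns
    (FAR + FRR) / 2 where the two rates are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(d <= t for d in impostor) / len(impostor)  # impostors accepted
        frr = sum(d > t for d in genuine) / len(genuine)     # clients rejected
        if best is None or abs(far - frr) < best[0]:
            best = (abs(far - frr), (far + frr) / 2)
    return best[1]


p_id = [[1, 0, 0], [0, 1, 0]]                # hypothetical P_id: keep the first two parameters
a = identity_params(p_id, [3.0, 4.0, 9.0])   # [3.0, 4.0]
b = identity_params(p_id, [6.0, 8.0, -2.0])  # [6.0, 8.0]
print(angle_between(a, b))                   # 0.0 (same direction, different length)
print(equal_error_rate([0.1, 0.4], [0.3, 0.6]))  # 0.5
```

Using the angle rather than the Euclidean distance makes the comparison depend only on the direction of $c_{id}$, not its length. A verification system would accept a claimed identity when $\delta_{12}$ falls below a threshold chosen on training data.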