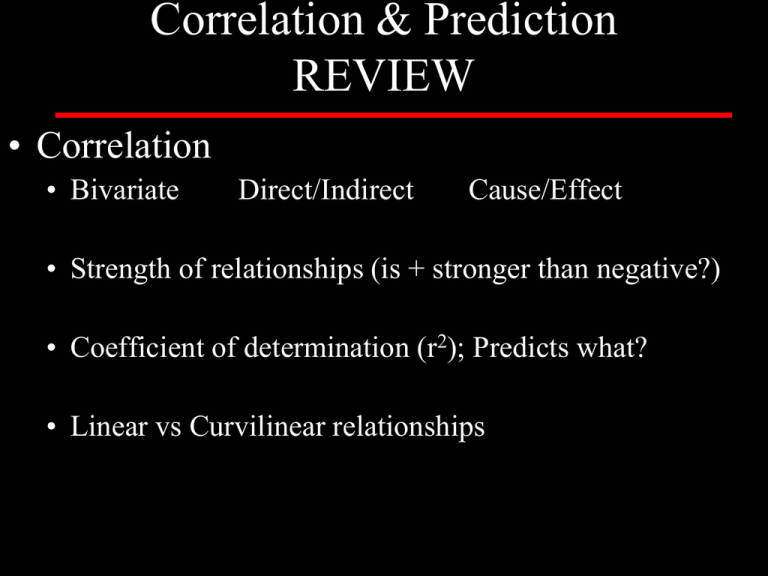



Correlation & Prediction REVIEW

• Correlation
  • Bivariate; Direct/Indirect; Cause/Effect
  • Strength of relationships (is + stronger than negative?)
  • Coefficient of determination (r²); Predicts what?
  • Linear vs Curvilinear relationships

Inferential Statistics

Used to infer sample characteristics to a population

Table 5-2 Variable Classification

| Independent | Dependent |
|---|---|
| Presumed cause | Presumed effect |
| The antecedent | The consequence |
| Manipulated/measured by researcher | Outcome (measured) |
| Predicted from | Predicted to |
| Predictor | Criterion |
| X | Y |

Common Statistical Tests

• Chi-Square: Determine association between two nominally scaled variables.
• Independent t-test: Determine differences in one continuous DV between ONLY two groups.
• Dependent t-test: Compare 2 related (paired) groups on one continuous DV.
• One-Way ANOVA: Examine group differences between 1 continuous DV & 1 nominal IV. Can handle more than two groups of data.

What Analysis?

| IV | DV | Statistical Test |
|---|---|---|
| 1 Nominal | 1 Nominal | Chi-Square |
| 1 Nominal (2 groups) | 1 continuous | t-test |
| 1 Nominal (>2 groups) | 1 continuous | One-Way ANOVA |

Some Examples

• Chi-Square: Gender and knee injuries in collegiate basketball players (Q angle)
• Independent t-test: Differences in girls and boys (independent groups; mutually exclusive) on PACER laps
• Dependent t-test: Pre and Post measurement of same group or matched pairs (siblings) on number of push-ups completed
• One-Way ANOVA: Major (AT, ES, PETE; IV >2 levels) and pre-test grade in this class

Norm-Referenced Measurement

HPHE 3150
Dr. Ayers

Topics for Discussion

• Reliability (variance & PPM correlation support reliability & validity)
  • Consistency
  • Repeatability
• Validity
  • Truthfulness
• Objectivity
  • Inter-rater reliability
• Relevance
  • Degree to which a test pertains to its objectives

Reliability: Observed, Error, and True Scores

Observed Score = True Score + Error Score
ALL scores have true and error portions
True scores are impossible to measure

Observed Score Variance = S²o
Error Score Variance = S²e
True Score Variance = S²t

Reliability THIS IS HUGE!!!!

$$r_{xx'} = \frac{S^2_{true}}{S^2_{observed}} = \frac{S^2_{observed} - S^2_{error}}{S^2_{observed}}$$

Reliability is that proportion of observed score variance that is true score variance

TIP: use algebra to move S²t to stand alone as shown in the formula above (subtract S²e from both sides of the equation S²o = S²t + S²e)

• Desirable reliability > .80
• There is variation in observed, true & error scores
• Error can be + (↑ observed scores) or - (↓ observed scores)
• Error scores contribute little to observed variation
• Error score mean is 0
• S²o = S²t + S²e
• Validity depends on reliability and relevance
• Observed variance is necessary
• Generally, longer tests are more reliable (fosters variance)

Table 6-1 Systolic Blood Pressure Recordings for 10 Subjects

| Subject | Observed BP | = True BP | + Error BP |
|---|---|---|---|
| 1 | 103 | 105 | -2 |
| 2 | 117 | 115 | +2 |
| 3 | 116 | 120 | -4 |
| 4 | 123 | 125 | -2 |
| 5 | 127 | 125 | +2 |
| 6 | 125 | 125 | 0 |
| 7 | 135 | 125 | +10 |
| 8 | 126 | 130 | -4 |
| 9 | 133 | 135 | -2 |
| 10 | 145 | 145 | 0 |
| Sum (Σ) | 1250 | 1250 | 0 |
| Mean (M) | 125.0 | 125.0 | 0 |
| Variance (S²) | 133.6 | 116.7 | 16.9 |
| S | 11.6 | 10.8 | 4.1† |

† Se is the square root of S²e

Reliability Coefficients

• Interclass Reliability
  • Correlates 2 trials
• Intraclass Reliability
  • Correlates >2 trials

Interclass Reliability (Pearson Product Moment)

• Test Retest (administer test 2x & correlate scores)
  • See Excel document (Norm-ref msmt examples)
  • Time, fatigue, practice effect
• Equivalence (create 2 “equivalent” test forms)
  • Odd/Even test items on a single test
  • Addresses most of the test/retest issues
  • Reduces test size 50% (not desirable); longer tests are more reliable
• Split Halves
  • Spearman-Brown prophecy formula

Index of Reliability

$$\text{I of R} = \sqrt{r_{xx'}}$$

The theoretical correlation between observed scores and true scores
High I of R = low error
Square root of the reliability coefficient
If r = .81, I of R = .9
Compared to the Coefficient of Determination: r² (shared variance)

I of R vs C of Det.

If r = .81, I of R = ? C of Det = ?

Reliability So What?

Find a friend and talk about:
• 1 thing you “got” today
• 1 thing you “missed” today; can they help?

Reliability REVIEW

• Inferential
  • Infer sample findings to entire population
  • Chi Square (2 nominal variables)
  • t-test (1 nominal variable for 2 groups, 1 continuous)
  • ANOVA (1 nominal variable for 2+ groups, 1 continuous)
• Correlation
  • Are two variables related?
  • What happens to Y when X changes?
  • Linear relationship between two variables
  • Quantifies the RELIABILITY & VALIDITY of a test or measurement
• Reliability (0-1; .80+ goal)
  • All scores: observed = true + error
  • rxx' = S²t / S²o
  • Proportion of observed score variance that is true score variance
  • Interclass reliability coefficients (correlates 2 trials)
    • Test/retest: time, fatigue, practice effect
    • Equivalent: reduces test length by 50%
    • Split-halves
  • Index of Reliability (√rxx')
    • Tells you what?
    • Related to C of D how?

Standard Error of Measurement (RELIABILITY MEASURE)

$$SEM = S\sqrt{1 - r_{xx'}}$$

S = standard deviation of the test
rxx' = reliability of the test

Reflects the degree to which a person's observed score fluctuates as a result of measurement errors

EXAMPLE: Test standard deviation = 100, r = .84

$$SEM = 100\sqrt{1 - .84} = 100\sqrt{.16} = 100(.4) = 40$$

SEM is the standard deviation of the measurement errors around an observed score

EXAMPLE: Average test score = 500, SEM = 40
68% of all scores should fall between 460-540 (500 ± 40)
95% of all scores range between: ? 420-580 (500 ± 2 × 40)

Standard Error of Estimate (VALIDITY MEASURE)

Reflects accuracy of estimating a score on the criterion measure
Also called: Standard Error, Standard Error of Prediction

$$SEE = S\sqrt{1 - r^2_{xy}}$$

Standard Errors

Both are standard deviations

• SE of Measurement (reliability): $SEM = S\sqrt{1 - r_{xx'}}$
• SE of Estimate (criterion-related validity): $SEE = S\sqrt{1 - r^2_{xy}}$

Factors Affecting Test Reliability

1) Fatigue: ↓
2) Practice: ↑
3) Subject variability: homogeneous ↓, heterogeneous ↑
4) Time between testing: more time = ↓
5) Circumstances surrounding the testing periods: change = ↓
6) Test difficulty: too hard/easy = ↓
7) Precision of measurement: precise = ↑
8) Environmental conditions: change = ↓

SO WHAT? A test must first be reliable to be valid

Validity Types THIS SLIDE IS HUGE!!!!

• Content-Related Validity (a.k.a., face validity)
  • Should represent knowledge to be learned
  • Criterion for content validity rests w/ interpreter
  • Use “experts” to establish
• Criterion-Related Validity
  • Test has a statistical relationship w/ trait measured
  • Alternative measures validated w/ criterion measure
  • Concurrent: criterion/alternate measured same time
  • Predictive: criterion measured in future
• Construct-Related Validity
  • Validates theoretical measures that are unobservable

Methods of Obtaining a Criterion Measure

• Actual participation (game play)
  • Skills tests, expert judges
• Perform the criterion (treadmill test)
  • Distance runs, sub-maximal swim, run, cycle
• Heart disease (developed later in life)
  • Present diet, behaviors, BP, family history
• Success in grad school
  • GRE scores, UG GPA

Interpreting the “r” you obtain THIS IS HUGE!!!!

Correlation Matrix for Development of a Golf Skill Test (From Green et al., 1987)

| | Playing golf | Long putt | Chip shot | Pitch shot | Middle distance shot | Drive |
|---|---|---|---|---|---|---|
| Playing golf | 1.00 | | | | | |
| Long putt | .59 | 1.00 | | | | |
| Chip shot | .58 | .47 | 1.00 | | | |
| Pitch shot | .54 | .37 | .35 | 1.00 | | |
| Middle distance shot | .66 | .55 | .61 | .40 | 1.00 | |
| Drive | -.65 | -.62 | -.48 | -.52 | -.79 | 1.00 |

What are these? Concurrent validity coefficients (the correlations of each skill test with playing golf, the criterion)

Interpret these correlations

| | Actual golf score (criterion) | Putting Trial 1 | Putting Trial 2 | Driving Trial 1 | Driving Trial 2 | Observer 1 | Observer 2 |
|---|---|---|---|---|---|---|---|
| Actual golf score | 1.00 | | | | | | |
| Putting T1 | .78 | 1.00 | | | | | |
| Putting T2 | .74 | .83 | 1.00 | | | | |
| Driving T1 | .58 | .21 | .25 | 1.00 | | | |
| Driving T2 | .68 | .25 | .30 | .70 | 1.00 | | |
| Observer 1 | .48 | .34 | .40 | .43 | .38 | 1.00 | |
| Observer 2 | .39 | .30 | .41 | .47 | .35 | .50 | 1.00 |

• What are these? Concurrent validity coefficients (each measure correlated with the actual golf score)
• What are these? Reliability coefficients (Trial 1 with Trial 2 of the same test: putting .83, driving .70)
• What is this? Objectivity coefficient (Observer 1 with Observer 2: .50)

Concurrent Validity

[Figure: a square, with test batteries A, B, C, and D shown as colored and patterned regions inside it]

• This square represents variance in performance in a skill (e.g., golf)
• The different colors and patterns represent different parts of a skills test battery to measure the criterion (e.g., golf)
• The orange color represents ERROR or unexplained variance in the criterion (e.g., golf)
• Remember: ↑ error = ↓ validity

Consider the concurrent validity of the 4 possible skills test batteries (A, B, C, D)

• Which test battery would you be LEAST likely to use? Why?
  D: it has the MOST error and requires 4 tests to be administered
• Which test battery would you be MOST likely to use? Why?
  C: it has the LEAST error but it requires 3 tests to be administered
• Which test battery would you use if you are limited in time?
  A or B: requires 1 or 2 tests to be administered but you lose some validity
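
As a rough check on the reliability and standard-error formulas from the earlier slides, the following Python sketch recomputes the Table 6-1 variances and applies the reliability, index of reliability, SEM, and SEE formulas. The function names are illustrative and do not come from the slides. The sketch assumes sample variance (n - 1 in the denominator), since that is what reproduces the table's 133.6, 116.7, and 16.9, and it assumes that S in the SEE formula is the standard deviation of the criterion scores.

```python
import math
import statistics

# Table 6-1: systolic blood pressure recordings for 10 subjects.
observed = [103, 117, 116, 123, 127, 125, 135, 126, 133, 145]
true = [105, 115, 120, 125, 125, 125, 125, 130, 135, 145]
error = [o - t for o, t in zip(observed, true)]  # observed = true + error

# Sample variances (n - 1 denominator), assumed to match the table.
var_o = statistics.variance(observed)
var_t = statistics.variance(true)
var_e = statistics.variance(error)


def reliability(var_true, var_observed):
    """rxx' = true score variance / observed score variance."""
    return var_true / var_observed


def index_of_reliability(rxx):
    """Theoretical correlation between observed and true scores: sqrt(rxx')."""
    return math.sqrt(rxx)


def standard_error_of_measurement(sd, rxx):
    """SEM = S * sqrt(1 - rxx'), with S the test standard deviation."""
    return sd * math.sqrt(1 - rxx)


def standard_error_of_estimate(sd, rxy):
    """SEE = S * sqrt(1 - rxy^2); S is assumed to be the criterion SD."""
    return sd * math.sqrt(1 - rxy ** 2)


rxx = reliability(var_t, var_o)
sem_table = standard_error_of_measurement(math.sqrt(var_o), rxx)

print("S2o, S2t, S2e:", round(var_o, 1), round(var_t, 1), round(var_e, 1))
print("rxx':", round(rxx, 3))
print("SEM from Table 6-1:", round(sem_table, 1))
print("SEM for S=100, r=.84:", round(standard_error_of_measurement(100, 0.84), 1))
print("I of R for r=.81:", round(index_of_reliability(0.81), 2))
```

With the Table 6-1 data the reliability comes out near .87, and the SEM computed from S and rxx' equals Se (about 4.1), which is the identity S²o = S²t + S²e rearranged.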