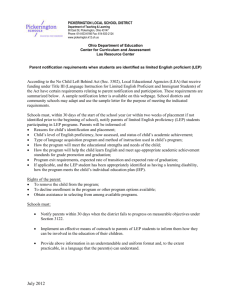

Impact of Bilingual Education Programs on Limited English Proficient
