Meetings 09 & 10: Hypothesis Testing. Course: A0392 - Statistik Ekonomi (Economic Statistics)


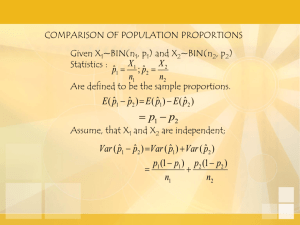

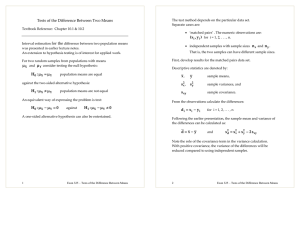

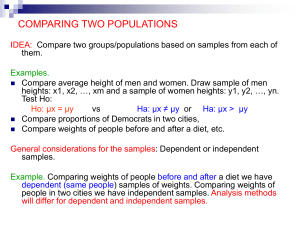

Year: 2010

Outline
• Hypothesis tests for a mean and a proportion
• Hypothesis tests for the difference between two means
• Hypothesis tests for the difference between two proportions

Introduction
• When the sample size is small, the estimation and testing procedures for large samples are not appropriate.
• There are equivalent small-sample test and estimation procedures for:
  • $\mu$, the mean of a normal population
  • $\mu_1 - \mu_2$, the difference between two population means
  • $\sigma^2$, the variance of a normal population
  • the ratio of two population variances

The Sampling Distribution of the Sample Mean
• When we take a sample from a normal population, the sample mean $\bar{x}$ has a normal distribution for any sample size $n$, and

$$z = \frac{\bar{x} - \mu}{\sigma/\sqrt{n}}$$

has a standard normal distribution.
• But if $\sigma$ is unknown and we must use $s$ to estimate it, the resulting statistic

$$\frac{\bar{x} - \mu}{s/\sqrt{n}}$$

is not normal.

Student's t Distribution
• Fortunately, this statistic does have a sampling distribution that is well known to statisticians, called the Student's t distribution, with $n - 1$ degrees of freedom:

$$t = \frac{\bar{x} - \mu}{s/\sqrt{n}}$$

• We can use this distribution to create estimation and testing procedures for the population mean $\mu$.

Properties of Student's t
• Mound-shaped and symmetric about 0.
• More variable than $z$, with "heavier tails".
• Shape depends on the sample size $n$ or the degrees of freedom, $n - 1$.
• As $n$ increases, the variability of $t$ decreases because the estimate $s$ is based on more and more information.
• As $n$ increases, the shapes of the $t$ and $z$ distributions become almost identical.

Using the t-Table
• Table 4 gives the values of $t$ that cut off certain critical areas in the tail of the t distribution.
• Index df and the appropriate tail area $\alpha$ to find $t_\alpha$, the value of $t$ with area $\alpha$ to its right.

For a random sample of size $n = 10$, find a value of $t$ that cuts off .025 in the right tail.
Row = df = $n - 1 = 9$; column subscript = $\alpha = .025$; $t_{.025} = 2.262$.

Small Sample Inference for a Population Mean $\mu$
• The basic procedures are the same as those used for large samples. For a test of hypothesis:

Test $H_0: \mu = \mu_0$ versus $H_a$: one- or two-tailed, using the test statistic

$$t = \frac{\bar{x} - \mu_0}{s/\sqrt{n}}$$

with p-values or a rejection region based on a t distribution with $df = n - 1$.

Example
A sprinkler system is designed so that the average time for the sprinklers to activate after being turned on is no more than 15 seconds. A test of 6 systems gave the following times: 17, 31, 12, 17, 13, 25. Is the system working as specified? Test using $\alpha = .05$.

$H_0: \mu = 15$ (working as specified)
$H_a: \mu > 15$ (not working as specified)

First, calculate the sample mean and standard deviation, using your calculator or the formulas in Chapter 2.

$$\bar{x} = \frac{\sum x_i}{n} = \frac{115}{6} = 19.167$$

$$s = \sqrt{\frac{\sum x_i^2 - \frac{(\sum x_i)^2}{n}}{n - 1}} = \sqrt{\frac{2477 - \frac{115^2}{6}}{5}} = 7.387$$

Next, calculate the test statistic and find the rejection region for $\alpha = .05$.

Test statistic:

$$t = \frac{\bar{x} - \mu_0}{s/\sqrt{n}} = \frac{19.167 - 15}{7.387/\sqrt{6}} = 1.38$$

Degrees of freedom: $df = n - 1 = 6 - 1 = 5$

Rejection region: Reject $H_0$ if $t > 2.015$. If the test statistic falls in the rejection region, its p-value will be less than $\alpha = .05$.

Conclusion
Compare the observed test statistic to the rejection region, and draw conclusions. For our example, $t = 1.38$ does not fall in the rejection region ($t > 2.015$) and $H_0$ is not rejected. There is insufficient evidence to indicate that the average activation time is greater than 15 seconds.

Approximating the p-value
• You can only approximate the p-value for the test using Table 4. Since the observed value of $t = 1.38$ is smaller than $t_{.10} = 1.476$, p-value > .10.

The exact p-value
• You can get the exact p-value using some calculators or a computer.
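A computer calculation of this kind can be sketched with the Python standard library alone. This is only an illustration: the helper names `t_pdf` and `t_upper_tail` are hypothetical, and the tail area is obtained by Simpson's rule applied to the t density, which is an assumption of this sketch rather than the method used by any particular calculator or statistics package.

```python
import math
import statistics

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def t_upper_tail(t, df, steps=10_000):
    """P(T > t), using Simpson's rule on [0, |t|] and symmetry about 0."""
    a = abs(t)
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area_0_to_a = total * h / 3
    return 0.5 - area_0_to_a if t >= 0 else 0.5 + area_0_to_a

times = [17, 31, 12, 17, 13, 25]  # sprinkler activation times from the example
mu0 = 15                          # hypothesized mean under H0
n = len(times)
x_bar = statistics.mean(times)
s = statistics.stdev(times)       # sample standard deviation (divides by n - 1)
t_stat = (x_bar - mu0) / (s / math.sqrt(n))
p_value = t_upper_tail(t_stat, n - 1)  # right-tailed test: Ha is mu > 15

print(f"t = {t_stat:.2f}, df = {n - 1}, p-value = {p_value:.3f}")
```

The printed values can be compared with the test statistic t = 1.38 from the worked example and with the p-value reported in the slides.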
p-value = .113, which is greater than .10, as we approximated using Table 4.

One-Sample T: Times
Test of mu = 15 vs mu > 15

| Variable | N | Mean | StDev | SE Mean | 95.0% Lower Bound | T | P |
|---|---|---|---|---|---|---|---|
| Times | 6 | 19.17 | 7.39 | 3.02 | 13.09 | 1.38 | 0.113 |

Testing the Difference between Two Means
As in Chapter 9, independent random samples of size $n_1$ and $n_2$ are drawn from populations 1 and 2 with means $\mu_1$ and $\mu_2$ and variances $\sigma_1^2$ and $\sigma_2^2$. Since the sample sizes are small, the two populations must be normal.
• To test $H_0: \mu_1 - \mu_2 = D_0$ versus $H_a$: one of three alternatives, where $D_0$ is some hypothesized difference, usually 0.
• The test statistic used in Chapter 9,

$$z \approx \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}}$$

does not have either a z or a t distribution, and cannot be used for small-sample inference.
• We need to make one more assumption: that the population variances, although unknown, are equal.
• Instead of estimating each population variance separately, we estimate the common variance with

$$s^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}$$

• The resulting test statistic,

$$t = \frac{\bar{x}_1 - \bar{x}_2 - D_0}{\sqrt{s^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}}$$

has a t distribution with $n_1 + n_2 - 2 = (n_1 - 1) + (n_2 - 1)$ degrees of freedom.

Example
• Two training procedures are compared by measuring the time that it takes trainees to assemble a device. A different group of trainees is taught using each method. Is there a difference in the two methods? Use $\alpha = .01$.

| Time to Assemble | Method 1 | Method 2 |
|---|---|---|
| Sample size | 10 | 12 |
| Sample mean | 35 | 31 |
| Sample Std Dev | 4.9 | 4.5 |

$H_0: \mu_1 - \mu_2 = 0$
$H_a: \mu_1 - \mu_2 \neq 0$

Test statistic:

$$t = \frac{\bar{x}_1 - \bar{x}_2 - 0}{\sqrt{s^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}}$$

Example
• Solve this problem by approximating the p-value using Table 4.
Summary data: $n_1 = 10$, $\bar{x}_1 = 35$, $s_1 = 4.9$; $n_2 = 12$, $\bar{x}_2 = 31$, $s_2 = 4.5$.

Calculate:

$$s^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2} = \frac{9(4.9^2) + 11(4.5^2)}{20} = 21.942$$

Test statistic:

$$t = \frac{35 - 31}{\sqrt{21.942\left(\frac{1}{10} + \frac{1}{12}\right)}} = 1.99$$

p-value: $P(t > 1.99) + P(t < -1.99)$, so $P(t > 1.99) = \tfrac{1}{2}(\text{p-value})$

$df = n_1 + n_2 - 2 = 10 + 12 - 2 = 20$

$.025 < \tfrac{1}{2}(\text{p-value}) < .05$
$.05 < \text{p-value} < .10$

Since the p-value is greater than $\alpha = .01$, $H_0$ is not rejected. There is insufficient evidence to indicate a difference in the population means.

Testing the Difference between Two Means
• If the population variances cannot be assumed equal, the test statistic

$$t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}}, \qquad df \approx \frac{\left(\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}\right)^2}{\frac{(s_1^2/n_1)^2}{n_1 - 1} + \frac{(s_2^2/n_2)^2}{n_2 - 1}}$$

has an approximate t distribution with the degrees of freedom given above. This is most easily done by computer.

The Paired-Difference Test
• Sometimes the assumption of independent samples is intentionally violated, resulting in a matched-pairs or paired-difference test.
• By designing the experiment in this way, we can eliminate unwanted variability in the experiment by analyzing only the differences, $d_i = x_{1i} - x_{2i}$, to see if there is a difference in the two population means, $\mu_1 - \mu_2$.

Example

| Car | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Type A | 10.6 | 9.8 | 12.3 | 9.7 | 8.8 |
| Type B | 10.2 | 9.4 | 11.8 | 9.1 | 8.3 |

• One Type A and one Type B tire are randomly assigned to each of the rear wheels of five cars. Compare the average tire wear for types A and B using a test of hypothesis.
• But the samples are not independent. The pairs of responses are linked because measurements are taken on the same car.

$H_0: \mu_1 - \mu_2 = 0$
$H_a: \mu_1 - \mu_2 \neq 0$

The Paired-Difference Test
To test $H_0: \mu_1 - \mu_2 = 0$ we test $H_0: \mu_d = 0$ using the test statistic

$$t = \frac{\bar{d} - 0}{s_d/\sqrt{n}}$$

where $n$ = number of pairs, and $\bar{d}$ and $s_d$ are the mean and standard deviation of the differences $d_i$. Use the p-value or a rejection region based on a t distribution with $df = n - 1$.
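The paired-difference statistic defined above can be sketched in a few lines of Python using the tire-wear data from the example. This is a minimal illustration, not part of the original slides; the variable names are chosen here for readability.

```python
import math
import statistics

type_a = [10.6, 9.8, 12.3, 9.7, 8.8]  # tire wear, Type A, cars 1-5
type_b = [10.2, 9.4, 11.8, 9.1, 8.3]  # tire wear, Type B, same cars

# Analyze only the within-car differences d_i = x_1i - x_2i.
diffs = [a - b for a, b in zip(type_a, type_b)]

n = len(diffs)                   # number of pairs
d_bar = statistics.mean(diffs)   # mean of the differences
s_d = statistics.stdev(diffs)    # sample standard deviation of the differences
t_stat = (d_bar - 0) / (s_d / math.sqrt(n))
df = n - 1

print(f"d_bar = {d_bar:.2f}, s_d = {s_d:.4f}, t = {t_stat:.1f}, df = {df}")
```

Because the two tire types are measured on the same car, the car-to-car variability cancels in each difference, which is why the test uses $n - 1$ degrees of freedom for the five pairs rather than treating the ten readings as independent.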
Example

| Car | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Type A | 10.6 | 9.8 | 12.3 | 9.7 | 8.8 |
| Type B | 10.2 | 9.4 | 11.8 | 9.1 | 8.3 |
| Difference | .4 | .4 | .5 | .6 | .5 |

$H_0: \mu_1 - \mu_2 = 0$
$H_a: \mu_1 - \mu_2 \neq 0$

Calculate:

$$\bar{d} = \frac{\sum d_i}{n} = .48$$

$$s_d = \sqrt{\frac{\sum d_i^2 - \frac{(\sum d_i)^2}{n}}{n - 1}} = .0837$$

Test statistic:

$$t = \frac{\bar{d} - 0}{s_d/\sqrt{n}} = \frac{.48 - 0}{.0837/\sqrt{5}} = 12.8$$

Rejection region: Reject $H_0$ if $t > 2.776$ or $t < -2.776$.

Conclusion: Since $t = 12.8$, $H_0$ is rejected. There is a difference in the average tire wear for the two types of tires.

Key Concepts

Test statistic for proportions
The test statistic is a value computed from the sample data, and it is used in making the decision about the rejection of the null hypothesis.

$$z = \frac{\hat{p} - p}{\sqrt{\frac{pq}{n}}}$$

Solution: The preceding example showed that the given claim results in the following null and alternative hypotheses: $H_0: p = 0.5$ and $H_1: p > 0.5$. Because we work under the assumption that the null hypothesis is true with $p = 0.5$, we get the following test statistic:

$$z = \frac{\hat{p} - p}{\sqrt{\frac{pq}{n}}} = \frac{0.56 - 0.5}{\sqrt{\frac{(0.5)(0.5)}{880}}} = 3.56$$

[Figure 7-3]

Inferences about Two Proportions
Assumptions
1. We have proportions from two independent simple random samples.
2. For both samples, the conditions $np \geq 5$ and $nq \geq 5$ are satisfied.

Notation for Two Proportions
For population 1, we let:
• $p_1$ = population proportion
• $n_1$ = size of the sample
• $x_1$ = number of successes in the sample
• $\hat{p}_1 = x_1/n_1$ (the sample proportion)
• $\hat{q}_1 = 1 - \hat{p}_1$

The corresponding meanings are attached to $p_2$, $n_2$, $x_2$, $\hat{p}_2$, and $\hat{q}_2$, which come from population 2.

Pooled Estimate of $p_1$ and $p_2$
The pooled estimate of $p_1$ and $p_2$ is denoted by $\bar{p}$.
$$\bar{p} = \frac{x_1 + x_2}{n_1 + n_2}, \qquad \bar{q} = 1 - \bar{p}$$

Test Statistic for Two Proportions
For any of the following pairs of hypotheses:

| $H_0$ | $H_1$ |
|---|---|
| $p_1 = p_2$ | $p_1 \neq p_2$ |
| $p_1 \geq p_2$ | $p_1 < p_2$ |
| $p_1 \leq p_2$ | $p_1 > p_2$ |

$$z = \frac{(\hat{p}_1 - \hat{p}_2) - (p_1 - p_2)}{\sqrt{\frac{\bar{p}\,\bar{q}}{n_1} + \frac{\bar{p}\,\bar{q}}{n_2}}}$$

where $p_1 - p_2 = 0$ (assumed in the null hypothesis), $\hat{p}_1 = x_1/n_1$, $\hat{p}_2 = x_2/n_2$, $\bar{p} = (x_1 + x_2)/(n_1 + n_2)$, and $\bar{q} = 1 - \bar{p}$.

Example
$n_1 = 200$, $x_1 = 24$, $\hat{p}_1 = x_1/n_1 = 24/200 = 0.120$
$n_2 = 1400$, $x_2 = 147$, $\hat{p}_2 = x_2/n_2 = 147/1400 = 0.105$

$H_0: p_1 = p_2$, $H_1: p_1 > p_2$

$$\bar{p} = \frac{x_1 + x_2}{n_1 + n_2} = \frac{24 + 147}{200 + 1400} = 0.106875, \qquad \bar{q} = 1 - 0.106875 = 0.893125$$

$$z = \frac{(0.120 - 0.105) - 0}{\sqrt{\frac{(0.106875)(0.893125)}{200} + \frac{(0.106875)(0.893125)}{1400}}} = 0.64$$

This is a right-tailed test, so the P-value is the area to the right of the test statistic $z = 0.64$. The P-value is 0.2611. Because the P-value of 0.2611 is greater than the significance level of $\alpha = 0.05$, we fail to reject the null hypothesis.

Example: For the sample data listed in Table 8-1, use a 0.05 significance level to test the claim that the proportion of black drivers stopped by the police is greater than the proportion of white drivers who are stopped.

Because we fail to reject the null hypothesis, we conclude that there is not sufficient evidence to support the claim that the proportion of black drivers stopped by police is greater than that for white drivers. This does not mean that racial profiling has been disproved. The evidence might be strong enough with more data.

Good luck with your studies, and may you always succeed.
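As a supplementary check of the two-proportion example, the pooled estimate, the z statistic, and the right-tail P-value can be sketched with the Python standard library. The helper name `normal_upper_tail` is hypothetical and introduced only for this illustration; it uses the complementary error function to obtain the standard normal tail area.

```python
import math

def normal_upper_tail(z):
    """P(Z > z) for a standard normal variable, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2))

n1, x1 = 200, 24     # sample 1: size and number of successes
n2, x2 = 1400, 147   # sample 2: size and number of successes

p1_hat = x1 / n1
p2_hat = x2 / n2

# Pooled estimate of the common proportion assumed under H0: p1 = p2.
p_bar = (x1 + x2) / (n1 + n2)
q_bar = 1 - p_bar

se = math.sqrt(p_bar * q_bar / n1 + p_bar * q_bar / n2)
z = ((p1_hat - p2_hat) - 0) / se
p_value = normal_upper_tail(z)   # right-tailed test: H1 is p1 > p2

print(f"p_bar = {p_bar:.6f}, z = {z:.2f}, P-value = {p_value:.4f}")
```

The sketch carries the unrounded z through to the tail area, so its P-value may differ slightly in the third decimal from the 0.2611 in the slides, which is read from a table at z rounded to 0.64.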