Computer-Assisted Methods Chapter 9

Chapter 9

Computer-Assisted Methods

There are relatively few applications in which completely automatic computer-based methods are easily employed to perform the various counting and measuring operations that yield stereological data. In practically all cases some human effort is required at least to oversee the image processing operations that make it ready for automatic measurement, to verify that the features that are of interest are actually extracted from the more complex image that is acquired. In quite a few cases, the computer is used only to tally human counting operations

(e.g., mouse clicks) while the human powers of recognition are used (or sometimes misused) to identify the features of interest. Even in this mode, the use of the computer is often worthwhile for image acquisition and processing to enhance visibility of the structure, and to keep the records accurately for later analysis. This chapter discusses the use of a computer to aid manual analysis, while the next one deals with computer-based measurements.

Getting the Image to the Computer

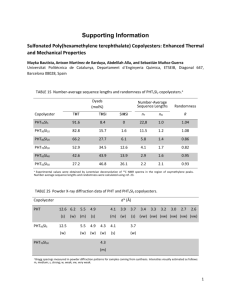

A typical computer-assisted system consists of the components shown in

Figure 9.1 (Inoue, 1986). In the majority of cases, the camera is a conventional video camera, usually a solid state camera. It may be either color or monochrome (“black and white”) depending on whether the application deals with color images. In most cases, biological tissue uses colored stains to delineate structures whereas in materials science color is less useful. The camera is connected to a “frame grabber”

(analog to digital converter, or digitizer board) installed in the computer. This reads the analog voltage signal from the camera and stores a digital representation in the form of pixels within the computer. This is then displayed on the computer screen, stored as desired, printed out, and used for computation.

There are many choices involved in most of these operations. Video cameras come in two principal flavors, tube type and solid state. The vidicon is a classic example of the former, the CCD of the latter. Tube cameras have a continuous light sensitive coating that is capable of higher resolution than the array of discrete transistors fabricated on the chip in a solid state camera, and have a spectral response that matches the human eye and conventional photography well. But most of the other advantages belong to the solid state camera. It has very little distortion (especially if electrical fields from a nearby transformer are present to distort the electron beam scanning in the tube camera), is inherently a linear device in terms of output signal versus light intensity, does not suffer from “bloom” in which bright areas spread to appear larger than they actually are, can be mounted in any orientation required by the microscope, and is generally less expensive for equivalent

183

184

User

Chapter 9

Printout

Camera Digitizer Memory Display

Optics

Processing

Storage

Figure 9.1. Block diagram of a typical computer-based imaging system.

performance because of the economics of manufacturing high-end consumer cameras in quantity.

Color sensitivity can be achieved in three ways: by splitting the incoming light to fall on three separate detectors (tube or CCD) each filtered to see just the red, green or blue; by using filters to sequentially capture the red, green and blue images; or by depositing a filter on the solid state chip that allows columns of transistors to detect the different colors (there are other more efficient geometries possible but they are more expensive to fabricate). The latter method gives only about one-half the lateral resolution of the former (it is acceptable for broadcast video because the color information has lower resolution than the brightness variations).

The single chip color camera is not so satisfactory for most technical applications, but much less expensive. Even with a monochrome camera, the use of a filter to eliminate infrared light, to which the solid state cameras are sensitive, can be very important because the imaging optics will not focus the light beyond the visible range to the same plane as the useful image.

The signal from the video camera is the same as used for standard broadcast television, and effectively limits the horizontal resolution of the image to less than 330 points and the grey scale resolution to less than 60 grey levels. Figure 9.2

illustrates the effect of changing pixel size on image representation, and Figure 9.3

shows the effect of varying the number of grey levels used. Digitizers often store the image in an array 640 pixels wide by 480 high because this corresponds to the dimensions of many computer screens, and use 256 grey levels because it corresponds to the size of one byte of memory, but this is partially empty magnification in both cases. If color information is being used, the composite signal produced by low end cameras is greatly inferior in color bandwidth to component (also called Svideo) signals with separate wires for luminance and chrominance. The consequence is blurring of color boundaries, which directly affects image measurements. Some high end cameras provide separate red, green and blue (R G B) signals.

Video digitizers are called frame grabbers because they record a single video frame lasting 1/30 (in Europe 1/25) of a second. Standard video is broadcast using

Computer-Assisted Methods 185

a

Figure 9.2. The effect of changing the size of a pixel in a grey scale and a color image

(clockwise from upper left pixels are doubled in size) is to obscure small features and boundaries. (For color representation see the attached CD-ROM.) interlace, so the frame actually consists of two fields, one with the odd number scan lines (1, 3, 5, 7, . . .) and then 1/60th of a second later the even scan lines (2, 4, 6,

8, . . .). For rapidly changing images, this can cause additional distortion of features.

But in most applications the image is stationary and the rapid speed of frame grabbing is not needed; indeed it causes a definite loss of quality because of electronic noise. Averaging several video frames, or (with more specialized cameras not used b a

Figure 9.3. The effect of changing the number of bits used for grey scale (a) and color plane representation (b) is to posterize the image. Depending on the quality of the image reproduction on screen or book page, the segments of the grey scale image with a large number of discrete steps may not be fully resolved. The color image shows that colors are significantly altered with only 2, 4 or 8 levels for red, green and blue planes. (For color representation see the attached CD-ROM.) b

186 Chapter 9

for standard video) collecting light for a longer period of time, produces much better quality images with more distinguishable grey levels.

Special cameras with many more transistors that can record images with more than 1000 ¥ 1000 pixels are available, but cost more than standard video cameras. Cooled cameras (to reduce electrical noise) with a large pixel array can be used for computer imaging, but in addition to their cost suffer from relatively slow image transfer. Most of these perform the digitzation within the camera and transmit digital values to the computer, and the time required to send millions of values limits either the portion of the image or the number of updates that can be viewed per second, so that focusing and field selection can be difficult.

The great advantage of digital cameras of this type is that with cooling of the chip and electronics, thermal noise is reduced and it becomes practical to integrate the photons striking the transistors for many seconds. This allows capturing very dim images; astronomical image and fluorescence microscopy are the two major applications.

There is another group of digital cameras that are aimed more at the consumer and general photography market than at the microscopy market. At the lower end of the price range, these have built in lenses that are not easily removed or adapted to microscope connection, and arrays that produce full color digital images of about 320 ¥ 240 resolution (sometimes the images can be made larger, but it is empty magnification). Recall that this is about the same as the resolution of a typical consumer level video camera connected to a frame grabber (indeed, the camera CCD is identical to that used in the video camera), but the cost is very low and no frame grabber is required. The interface to the computer is generally a relative slow (several seconds for image transfer) serial link, and many of the cameras come with cables and software for both Macintosh and Windows platforms. These offer some very interesting possibilities for capturing images that are to be used for interactive marking of points to count. For occasional use, simply attaching the camera so that it looks through the microscope eyepiece may be satisfactory.

The principal limitation of the low-end units is distortion of the image due to the limited optics.

Higher end units consist of a camera back that can be attached to standard lens mounts, which does allow their connection to microscopes. These may either capture full color images in a single pass, or use color filters to capture three images (red, green, and blue) sequentially which are then recombined in the computer. These can either be three separate filters inserted with a filter wheel, or a more costly LCD filter that has no moving parts and changes it transmission characteristics to produce the desired band pass. The image quality with such a setup can be quite high, approaching the resolution of

35 mm film.

Cameras in the bottom end of the range are available at this writing from many of the companies in the “traditional” photographic camera and consumer electronics businesses, such as Casio, Olympus, Kodak, etc. Higher performance units are sold by Sony, Nikon, Kodak, Polaroid and others. The evolution of consumer “handy-cam” cameras to ones with digital capability is also underway.

The latest generation of these units use digital tape to record the images (and

Computer-Assisted Methods 187

sound) instead of recording the analog signals. Many of these units are already capable of being computer controlled and it seems only a matter of time until the images can also be read, either from the tape or from the camera directly into computer memory. This would approximately double the resolution of images from these cameras, since the principal current limitations are in the electronics and not the CCD or optics.

There is another type of digital camera that does not require the large CCD array (which is the source of much of the cost of most units). The MicroLumina camera from Leaf/Scitex uses three parallel linear CCD arrays (one for red, one for green, one for blue) which are scanned across the film plane in the camera back (it mounts wherever Nikon backs can be attached) to record the image. With 12 bits each (a range of 2 12 or 4096 : 1) and a full resolution of 3400 ¥ 2700 pixels, these images rival film performance at a cost considerably below the cameras that use a large CCD array. They are not much more costly than a good quality color video camera and frame grabber board. The limitation, of course, is that the image capture time is over a minute (somewhat dependent on computer and hard disk speed) so the image must be a stationary one with adequate brightness, since each point is read for only a momentary time interval.

Display and Storage

One of the reasons that transferring the image to the computer in digital form is desirable is the flexibility with which it can be displayed on the computer screen (Foley & Van Dam, 1984). Some frame grabber boards also function as the display boards for the computer and directly show the image on the screen, but in most cases the image is held in memory and transferred to the display memory under program control. This allows for various modifications and enhancements that can increase the visibility of details, to assist the human operator in recognizing, locating and marking the features of interest. The processing possibilities are summarized below. Even without such steps, simple modification of the display brightness to maximize contrast range, adjust it nonlinearly (“gamma” adjustments) to increase contrast in either the bright or dark areas, adjust colors for changes in lighting, substitute colors (“pseudo color”) for grey scale values to make small variations visible, are more easily and reproducibly accomplished in the digital realm than by using analog circuits. They can also be accomplished without altering the original data.

Storing images for archival or reference purposes is one area where great care is needed in assembling a system. Images are large. A full scanned color image from the MicroLumina camera mentioned above occupies more than 26 megabytes of storage, either in ram memory or on disk. Obviously, this means that a computer with adequate memory to hold the entire image for user access, a large display to show many pixels at a time, and a fast processor to be able to access all of the data, are desirable. But computer systems have advanced rapidly in all of these areas and are expected to continue to do so. A system with a fast Pentium or Power PC processor, 128 Meg of ram or more, a display screen with at least 800 and perhaps more than 1000 pixel width and capable of “true color” (8 bits or 256 levels each for R,

188 Chapter 9

G and B), and a reasonably large (several gigabyte) hard disk can be had in a laptop at this writing.

The major questions have to do with long term storage of the images. Any hard disk will be filled quickly with images if many are to be kept. Less expensive, slower access, more permanent storage is therefore desirable. Tape systems have been widely used for computer disk backup, but because access is sequential and the tapes themselves are subject to stretching and wear if they are used often, this does not seem attractive for an image storage facility. Writable and rewritable CD-

ROM disks are widely regarded as the most suitable medium at the moment, with the latest generation of CDs providing expanded storage on a single disk from 600

Meg by about an order of magnitude, enough to store a useful number of images on a single platter. Access speed is good, but the only practical way to use CD-R

(as opposed to CD-RW) is to write all of the disk at one time, which means that conventional hard disk storage of the same size is required to assemble the files to be written.

The greatest problem with archiving images is having some way to find them again when they are needed. If pictures can be identified based on some independent scheme such as patient identification in medical imaging, date and sample number in forensics, etc., then the problem is relatively simple. In a research setting things get more complicated. It is very difficult to set up a data base that uses words to describe all of the important characteristics of each particular image. The dictionary of words must be very consistent and fairly small so that a word search of the data based will locate all of the relevant images. The discipline to create and maintain such a data base is hard to instill, and consistency between operators is poor.

It would be more desirable to actually have the ability to see the image, since recollection and recognition processes in human memory are extremely powerful. But archival storage implies that the images themselves are off-line, and only the data base with some means of identification is available to the user. A partial solution to this dilemma is offered by image compression. A very much smaller (in terms of storage requirements) version of an image can be produced using encoding algorithms that take advantage of redundancies in the pixel values within the image, or discard some frequency components. Compression methods that achieve useful compression ratios of 50 : 1 or higher are “lossy,” meaning that some of the data in the original image are permanently lost in the compressed version. These can be very important for measurement purposes—small features are missing, color and brightness values are distorted, and boundaries are blurred and shifted.

We will want to ensure that our archival storage system does not use a lossy compression method for the original versions of the images. But a compressed version may be quite suitable for inclusion in the image data base to help the user locate a particular image that cannot be completely or adequately described in words. The compression methods have been developed for digital video, putting movies onto CDs, transmission of images over the Internet, and other similar purposes. The emphasis has been on keeping those bits of the image that seem to be most important to human vision and discarding things that the human does not

Computer-Assisted Methods 189

particularly notice. A casual glance at an original image and a compressed version that requires only 1 or 2 percent of the storage space does not reveal substantial differences, which only become apparent when side-by-side higher magnification displays are used.

The compression methods most often used for still color or monochrome images are JPEG (Joint Photographers Expert Group) and fractal compression.

Fractal compression breaks the image into small blocks and seeks matches between blocks of different sizes. As these are found, the larger blocks can be discarded and replaced by duplicating the smaller ones. Even for compression ratios of 100 : 1 or more, the method offers fair visual fidelity. Because of patent issues, and because it is a very slow process that requires serious computation to compress the original images (decompression to view them after compression is very fast, however), fractal methods are not so widely used.

The JPEG method also breaks the image into blocks. Each 8 pixel wide square is transformed into frequency space using a discrete cosine transform, and the magnitude of the various frequency terms examined. Ones that are small, below a threshold set to accomplish the desired level of compression, are discarded. Repetitions or redundancies in the remaining terms are eliminated and the values stored in a sequence that allows the image to be reconstructed as the data are received rather than needing the entire image to be reconstructed at once (the method was developed to deal with image transmission in digital high resolution video). Both compression and decompression use similar computer routines, and are also supported by specialized hardware that can perform the process in real time. Many standard programs for image handling on standard computers perform JPEG compression and decompression entirely in software. The speed is quite satisfactory for the intended use for visual recognition in an image data base, as long as the original uncompressed images are still available in case measurements are to be made.

However, as shown in Figure 9.4, highly compressed images are not suitable for measurement purposes.

Transferring images to a computer also opens up many possibilities for printing them out, for example to include in reports. The quality of printers has steadily improved, and in particular dye-sublimation (also called dye-diffusion) printers offer resolution of at least 300 pixels per inch with full color at each point.

This should not be confused with laser printers, for which the “dots per inch” (dpi) specification may be higher. Because the “dots” from a laser printer are either on or off, it takes an array of them to create the illusion of grey scale or (for color versions) colors. The effective resolution is consequently much poorer than the dpi specification, and even the magazine or book images printed by high end image setters with dot resolution of well over 1200 dpi do not stand up to close scrutiny as photographic representations. Furthermore, color aliasing is particularly difficult to control.

Color printers work by depositing cyan, magenta and yellow inks or powders

(the complementary colors to red, green and blue) onto white paper. Figure 9.5 compares the behavior of additive (RGB) and subtractive (CMY) colors. By combining the inks in various proportions, a color “gamut” is achieved that is never as wide as additive color displays that can appear on video screens, particularly with respect

190 Chapter 9

a

Figure 9.4. Comparison of a 2 ¥ enlarged fragment of a light microscope image before and after it has been JPEG compressed with a ratio of 40 : 1. Color shifts, blurring or omission of lines, and block-shaped artefacts are all present. (For color representation see the attached CD-ROM.) b to fully saturated primary colors. Adding black ink (CMYK printing) does produce somewhat improved dark tones. In the dye-sublimation process, the inks from three

(or four) separate sources are combined by diffusion into a special coating on the print stock that allows them to mix and produce a true color instead of relying on the juxtaposition of colored dots to fool the eye. Some ink-jet printers also allow for blending of the inks on paper, rather than simply depositing them side by side.

While not as high in resolution as a photographic print, such hardcopy results of a

Figure 9.5. Additive (RGB) colors which begin with a black background and combine to produce other colors and white, with subtractive (CMY) colors which begin with a white background and combine to produce other colors and black. (For color representation see the attached CD-ROM.) b

Computer-Assisted Methods 191

images are relatively fast and not too expensive to produce. The main drawback is that every copy must be printed out separately. On the other hand, computer programs to process the images or at the very least to conveniently label them are widely available.

It is possible, of course, to record the computer display onto film. This can either be done directly using an image setter (which performs any necessary color separations and directly exposes film to produce the masters for printing), or a film recorder. The latter records the full color image onto a single piece of color film using a high resolution monochrome monitor. The image is separated into red, green and blue components and three exposures are superimposed through corresponding color filters. This is much more satisfactory than trying to photograph the computer screen, because it avoids the discrete red, green and blue phosphor dots used on the color video display, which trick the human eye into seeing color when they are small but can become noticeable when the image is enlarged. It also overcomes geometric distortions from the curved screens, as well as glare from reflected light and other practical problems.

Image Processing

Image processing is an operation that starts with an image and ends with another corrected or enhanced image, and should not be confused with image analysis. The latter is a process that starts with an image and produces a concise data output, reducing the very large amount of data that is required to store the original image as an array of pixels to a much smaller set of (hopefully relevant) data.

Image processing includes a family of operations that modify pixel values based on the original pixels in the image. It usually produces as its output another image that is as large as the original, in which the pixel values (brightness or color) have changed.

The overview of processing methods presented here is necessarily brief. A much more complete discussion of how and why these procedures are used can be found in John C. Russ (1998) “The Image Processing Handbook, 3rd Edition” CRC

Press, Boca Raton FL (ISBN 0-8493-2532-3. All of the examples shown in this chapter and the next were created using Adobe Photoshop, an inexpensive image processing program that supports most kinds of image capture devices and runs on both Macintosh and Windows platforms, using the Image Processing Tool Kit, a set of plug-ins for Adobe Photoshop distributed on CD-ROM that implements the various processing and measurement algorithms from The Image Processing

Handbook. Information on the CD is available over the world-wide-web

(http://members.aol.com/ImagProcTK). There are numerous books available that describe the mathematics behind the steps involved in image processing, for instance

Castleman, 1979; Gonzalez & Wintz, 1987; Jain, 1989; Pratt, 1991; Rosenfeld &

Kak, 1982.

The steps used in image processing may usefully be categorized in several ways. One classification is by operations that correct for various defects that arise in image capture (a common example is nonuniform illumination), operations that improve visibility of structures (often by relying on the particular characteristics of

192 Chapter 9

human vision) and operations that enhance the visibility or measurability of one type of feature by suppressing information from others (an example is enhancing boundaries or texture in an image while reducing the brightness difference between regions). We will examine operations in each of these categories in this chapter and the next.

Another basis for classification is how the operations function. This classification cuts across the other, with the same functional procedures capable of being used for each of the different purposes.

Point processes replace each pixel value (color or brightness) with another value that depends only on the original local value. Examples are increases in contrast and pseudo-coloring of grey scale images.

Neighborhood operations modify the pixel value based on the values of nearby pixels, in a region that is typically a few pixels across

(and is ideally round, although often implemented using a small square region for ease of computation). There are two subsets of these operations: ones that use arithmetic combinations of the pixel values, and rank operations that rank the values in the neighborhood into order and then keep a specific value from the ranked results.

Global operations typically operate in frequency space, by applying a Fourier transform to the image and keeping information based on the orientation and frequency of selected wave patterns of brightness in the image. These are particularly useful for removing period noise that can arise in image capture, or detecting periodic structures such as high resolution atom images in the presence of noise.

The most common imaging defects are:

Inadequate contrast due to poor adjustment of light intensity and camera settings;

Nonuniform brightness across the image resulting from lighting conditions, variations in sample thickness (in transmission viewing) or surface orientation (particularly in the SEM), or from optical or camera problems such as vignetting;

Noisy images, due to electronic faults or more commonly to statistical fluctuations in low signal levels (especially in SEM X-ray imaging, fluorescence microscopy, autoradiography, etc.)

Geometrical distortions resulting from a non-normal viewing orientation or non-planar surfaces (particularly in the SEM and TEM because of their large depth of field), or from nonlinear scan generators (particularly in the atomic force microscope and its cousins)

Requirement for several different images taken with different lighting conditions (different polarizations, different color filters, etc.) or other

Computer-Assisted Methods 193

signals (e.g. multiple X-ray maps in SEM) to be combined to reveal the important details.

It might be argued that some of these are not really imaging defects but rather problems with specimens or instruments that might be corrected before the images are acquired, and when possible this is the preferred course.

But in reality such corrections are not always possible or practical and images are routinely acquired that have these problems, which need to be solved before analysis.

Contrast Manipulation

The brightness range of many images is greater than the discrimination capability of the human eye, which can distinguish only about 20 grey levels at a time in a photograph. The contrast of a stored image can be manipulated by constructing a transfer function that relates the stored brightness value of each pixel to the displayed value. A straight line identity relationship can be varied in several ways to enhance the visibility of detail. These manipulations are best understood by reference to the image histogram, which is a plot of the number of pixels having each of the possible brightness levels. For a typical stored grey scale image this will be 256 values from white to black, and for a typical color image it will be three such plots for the red, green and blue planes, or the equivalent conversion to

HSI space.

HSI (Hue, Saturation and Intensity) space offers many advantages for image processing over RGB. Image capture and display devices generally use red, green and blue sensitive detectors and phosphors to mimic the three receptors in the human eye, which have sensitivity peaks in the long (red), medium and short (blue) portions of the visible spectrum. But our brains do not work directly with those signals, and artists have long known that a more useful space for thinking about color corresponds to hue (what most people mean by the “color”, an angle on the continuous color wheel that goes from red to yellow, green, blue, magenta and back to red), saturation (the amount of color, the difference for example between pink and red) and intensity (the same brightness information recorded by panchromatic “black and white” film). For microscopists, hue corresponds to the color of a stain, saturation to the amount of stain, and intensity to the local density of the section, for instance. Figure 9.6 shows an image broken down into the RGB and HSI planes.

Figure 9.7a shows an example of a histogram of a grey scale image. Note that the actual values of pixels do not cover the entire available range, indicating low contrast. Linearly stretching the brightness range with a transfer function that clips off the unused regions at either end (Figure 9.7b) improves the visible contrast significantly. This particular histogram also has several peaks (which typically correspond to structures present in the specimen) and valleys. These allow spreading the peaks to show variations within the structures, using histogram equalization

(Figure 9.7c). The equalization transfer function is calculated from the histogram and therefore is unique to each image.

194 Chapter 9

a

Figure 9.6. A light microscope color image separated into its red, green, blue (RGB) and hue, saturation, intensity (HSI) planes. Algebraic calculation allows conversion from one representation to the other. (For color representation see the attached CD-ROM.) b

Gamma adjustments to the image contrast compress the display range at either the bright or dark end of the range to allow more visibility of small changes at the other end. This is particularly useful when linear CCD cameras are used, as they record images that are visibly different than film or human vision (which have a logarithmic response). Figure 9.8 shows the effect of gamma adjustment. The same nonlinear visual response means that features are sometimes more easily distinguished in a negative image than in a positive, and this reversal of contrast is easily applied in the computer. Solarization, a technique borrowed from the photographic darkroom, combines contrast reversal at either the dark or bright end of the contrast range with expanded positive contrast for the remainder, to selectively enhance visibility in shadow or bright areas (Figure 9.8c).

Each of these techniques can typically be applied to color images as well. It is not usually wise to alter the RGB planes separately as this will produce radically shifted colors in the image. Transforming the color image data to HSI space allows many kinds of image processing to be performed on the separate hue, saturation and intensity planes. Many systems apply them to the intensity plane alone (which corresponds to the monochrome image that would be recorded by panchromatic film).

It is usually possible to modify a selected portion of an image, rather than the entire area, but this requires manual intervention to select the regions. When the image does not have uniform illumination, for any of the reasons mentioned above, it makes it difficult to recognize the same type of feature in different locations.

Correcting Nonuniform Brightness

Leveling the image brightness is typically done by dividing by or subtracting a reference image that represents the variation. Subtraction is the more common

Computer-Assisted Methods 195

a c

Figure 9.7. An SEM image with low contrast is shown with its histogram (a). Linearly expanding the display brightness range (b) increases the visual contrast, and equalization (c) broadens the peaks to show variations within the uniform regions.

approach, but technically should only be used when the image has been acquired by a logarithmic recording device (film, a densitometer, some tube type video cameras, or a CCD camera with appropriate circuitry).

There are several approaches to getting the reference image to use. Sometimes it can be acquired directly by removing the specimen and recording variations in illumination and camera response. More often the specimen itself causes the variation and the reference image must be derived from the original image. There are three basic methods used:

1. If there is some structure present throughout the image (either features or background) that can be assumed to be the same, the brightness of those points can be used to generate a polynomial curve of background as a b

196 Chapter 9

a c

Figure 9.8. Gamma adjustments nonlinearly change the display of grey values, in this light microscope example (a) increasing the brightness to show detail in shadow areas

(b). Solarization (c) reverses the contrast at the end of the brightness scale to create negative representations, in this example in dark areas.

function of location. This is easiest to automate when the structure comprises either the brightest or darkest pixels in each local area. Figure 9.9 shows an example. This approach works best when the nonuniform brightness results from lighting conditions and varies as a smooth function of position.

2. If the features can be assumed to be small in at least one direction, a rank operator can be used. This is a neighborhood operator that replaces each pixel in the image with the (brightest or darkest) value within some specified distance, usually a few pixels. If the features can be replaced by the background in this way, the resulting image is suitable for leveling. Figure 9.10

shows an example. This technique can handle abrupt changes in lighting that b

Computer-Assisted Methods 197

a c

Figure 9.9. Portion of a nonuniformly illuminated metallographic microscope image (a) with the background (b) produced by fitting a polynomial to the brightest points in a

9 ¥ 9 grid and the result of leveling (c).

often result from specimen variations but requires that the features be small or narrow.

3. Filtering the image to remove high frequency changes in brightness can be performed either in the spatial domain of the pixels or by using a Fourier transform to convert the image to frequency space. The spatial domain approach requires calculating a weighted average of each pixel with its neighbors out to a considerable distance in all directions, to smooth out local variations and generate a new background image that has only the gradual variations. This method is slow and has the limitations of both methods above and none of their advantages, but is sometimes used if neither of the other methods is provided in the software.

b

198 Chapter 9

a

Figure 9.10. Use of a rank operator to produce a background image from Figure 9.9a

by replacing each pixel with the brightest neighbor within a radius of 4 pixels (a), and the leveled result (b).

b

Reducing Image Noise

Noisy images are usually the result of very low signals, which in turn usually arise when the number of photons, electrons or other signals is small. The best solution is to integrate the signal so that the noise fluctuations average out. This is best done in the camera or detector itself, avoiding the additional noise and time delay associated with reading the signal out and digitizing it, but this depends upon the design of the camera. Video cameras for instance are designed to continuously read out the entire image every 1/30 or 1/25 of a second, so that averaging can only take place in the computer.

If the signal strength cannot be increased and temporal averaging of the signal is not possible, the remaining possibility is to perform some kind of spatial averaging. This approach makes the assumption that the individual pixels in the image are small compared to any important features that are to be detected. Since many images are digitized with empty magnification, we can certainly expect to do some averaging without losing useful information. The most common method is to use the same weighted averaging mentioned above as a way to generate a background image for leveling, but with a much smaller neighborhood. A typical procedure is to multiply each pixel and its neighbors by small integer weights and add them together, divide the result by the sum of the weights, and apply this method to all pixels (using only the original pixel values) to produce a new image. Figure

9.11 shows this process applied to an image with evident random noise, using the

“kernel” of weights shown. These weights fit a Gaussian profile and the technique is called Gaussian filtering. It is equivalent to a low pass filter in frequency space

(and indeed it is sometimes implemented that way).

Computer-Assisted Methods 199

a b c

0 0 0 0 1 0 0 0 0

0 0 2 4 6 4 2 0 0

0 2 8 21 28 21 8 2 0

0 4 21 53 72 53 21 4 0

1 6 28 72 99 72 28 6 1

0 4 21 53 72 53 21 4 0

0 2 8 21 28 21 8 2 0

0 0 2 4 6 4 2 0 0

0 0 0 0 1 0 0 0 0

Figure 9.11. A noisy fluorescence microscope image (a) and comparison of the result of smoothing it (b) using a Gaussian kernel of weights (c) with a standard deviation of 1.6

pixels vs. the application of a median filter (d) that replaces each pixel value with the median of the 9-pixel-wide circular neighborhood.

d

Although it is easily understood and programmed, this kind of smoothing filter is not usually a good choice for noise reduction. In addition to reducing the image noise, it blurs edges and decreases their contrast, and may also shift them. Since the human eye relies on edges—abrupt changes in color or brightness— to understand images and recognize objects, as well as for measurement, these effects are undesirable. A better way to eliminate noise from images is to use a median filter (Davies, 1988). The pixel values in the neighborhood (which is typically a few pixels wide, larger than the dimension of the noise being removed) are ranked in brightness order and the median one in the list (the one with an equal number of brighter and darker values) is used as the new value for the central pixel. This process is repeated for all pixels in the image (again using only the

200 Chapter 9

original values) and a new image produced (Figure 9.11). As discussed in Chapter

8 on statistics, for any distribution of values in the neighborhood the median value is closer to the mode (the most probable value) than is the mean (which the averaging method determines). The median filter therefore does a better job of noise removal, and accomplishes this without shifting edges or reducing their contrast and sharpness.

Both the averaging and median filtering methods are neighborhood operators, but ranking takes more computation than addition and so the cost of increasing the size of the neighborhood is greater. For color images the operations may either be restricted to the intensity plane of an HSI representation, or in some cases may be applied to the hue and saturation planes as well. The definition of a median in color space is the point whose distances from all neighbors gives the lowest sum of squares.

Rectifying Image Distortion

Distorted images of a planar surface due to short focal length optics and nonperpendicular viewpoints can be rectified if the details of the viewing geometry are known. This can be accomplished either from first principles and calculation, or by capturing an image of a calibration grid. In the latter case, marking points on the image and the locations to which they correspond on a corrected image offers a straightforward way to control the process. Figure 9.12 shows an example. Each pixel address in the corrected image is mapped to a location in the original image.

Typically this lies between pixel points in that image, and the choice is either to take the value of the nearest pixel, or to interpolate between the neighboring pixels. The latter method produces smoother boundaries without steps or aliasing, but takes longer and can reduce edge contrast.

Performing this type of correction can be vital for SEM images, due to the fact that specimen surfaces are normally inclined and the lens working distance is short. Tilted specimens are also common in the TEM, and low power light microscopes can produce images with considerable radial distortion. Even with ideal viewing conditions and optics the image may still be foreshortened in the horizontal or vertical direction due to errors in the digitization process. The electronics used for timing along each scan line have tolerances that allow several percent distortion even when properly adjusted, and some older frame grabbers made no attempt to grab pixels on an ideal square grid. Fortunately most systems are quite stable, and the distortion can be measured once using a calibration grid and a standard correction made to images before they are measured.

When the viewed surface is not planar, or worse still is not a simple smooth shape, the process is much more complicated. If the user has independent knowledge of what the surface shape is, then each small portion of it can be corrected by the same process of marking points and the locations to which they map. Effectively, these points subdivide the image into a tesselation of triangles (a Voronoi tesselation). Each triangle in the captured image must be linearly distorted to correspond to the dimensions of the corrected planar image, and the same mapping and interpolation is carried out. Sometimes the shape can be determined by

Computer-Assisted Methods 201

a b c

Figure 9.12. Foreshortening due to an angled view with a short focal length lens (a).

The vignetting is first corrected by leveling (b) and then the central portion of the image rectified using 20 control points (c).

202 Chapter 9

obtaining two views of the surface and identifying the same points in each. The parallax displacements of the points allow calculation of their position in space. This is stereoscopy, rather than stereology, and lies outside the scope of this text.

Real images may also suffer from blur due to out-of-focus optics or motion of the specimen during exposure. These can be corrected to some degree by characterizing the blur and removing it in frequency space but the details are beyond the intended scope of this text, and also beyond the capability of most desktop computer based imaging systems. The Hubble telescope pictures suffered from blurring due to an incorrect mirror curvature. As this defect was exactly known (but only after launch), the calculated correction was able to be applied to produce quite sharp images (the remaining problem of collecting only a small fraction of the desired light intensity was corrected by installing compensating optics). Blurring of images from confocal microscopes due to the light passing through the overlying parts of the specimen can be corrected in much the same way, by using the images from the higher focal planes to calculate the blur and iteratively removing it.

Enhancement

The same basic methods for manipulating pixel values based on the values of the pixels themselves and other pixels in the neighborhood can also be used for enhancement of images to reveal details not easily viewed in the original. Most of these methods become important when considering automatic computer-based measurement and are therefore discussed in the next chapter. The human visual system is quite a good image processing system in its own right, and can often extract quite subtle detail from images without enhancement, whereas computer measurement algorithms require more definite distinctions between features and background (Ballard & Brown, 1982).

There are some enhancement methods that take advantage of the particular characteristics of the human visual system to improve visibility (Frisby, 1980). The most common of these is sharpening, which increases the visibility of boundaries.

Local inhibition within the retina of the eye makes us respond more to local variations in brightness than to absolute brightness levels. Increasing the magnitude of local changes and suppressing overall variations in brightness level makes images look crisper and helps us to recognize and locate edges.

Figure 9.13 shows a very simple example. The neighborhood operator that was used is based on the Laplacian, which is a second derivative operator. The filter multiplies the central pixel by 5 and subtracts the four neighbor pixels above, below and to the sides; this is repeated for every pixel in the image, using the original pixel values. For pixels in smooth areas where the brightness is constant or gradually changing, this produces no change at all. Wherever a sudden discontinuity occurs, such as an edge running in any direction, this increases the local contrast; pixels on the dark side of the edge are made darker and those on the bright side are made brighter.

The more general approach than the Laplacian that mimics a photographic darkroom technique is the unsharp mask. Subtracting a smoothed version of the image from the original both increases contrast, reduces some local background

Computer-Assisted Methods 203

a

Figure 9.13. Light micrograph (a) showing the effect of a Laplacian filter (b) to increase the contrast of edges and sharpen the image appearance.

b fluctuations, and increases edge sharpness. Figure 9.14 shows an example in which this method is applied to the intensity plane of a color image.

Other edge enhancement operators that use combinations of first derivatives are illustrated in the next chapter. So are methods that respond to texture and orientation in images, and methods that convert the grey scale or color image to black and white and perform various operations on that image for measurement purposes.

a

Figure 9.14. Color light micrograph (a) showing the effect of an unsharp mask (b) to increase contrast and sharpen edges. A Gaussian smooth of the intensity plane from the original image with a diameter of six pixels is subtracted from the original; hue and saturation are not altered. (For color representation see the attached CD-ROM.) b

204

Overlaying Grids onto Images

Chapter 9

As discussed in Chapter 1 on manual counting, grids provide ways to sample an image. The lines or points in the grid are probes that interact with the structure revealed by the microscope section image. Counting (and in some cases measuring) the hits the grids make with particular structures generates the raw data for analysis. There are several advantages to using the computer to generate grids to overlay onto the image. Compared to placing transparent grids onto photographic prints, the computer method avoids the need for photography and printing. Compared to the use of reticles in the microscope, it is easier to view the image on a large screen and to have the computer keep track of points as they are counted and provide feedback on where counting has taken place. The computer is also able to generate grids of many different types and sizes as needed for particular images and purposes

(Russ, 1995a), and to generate some kinds of grids such as random points and lines which are not easy to obtain otherwise. It can also adjust the grid appearance or color for easy visibility on different images. Perhaps the greatest hidden advantage is that the computer places the grid onto the image without first looking at what is there; humans find it hard to resist the temptation to adjust the grid position to simplify the recognition and counting process, and the result is practically always to bias the resulting measurement data.

Overlaying a grid onto an image so that the user can conveniently use it to mark points requires that the grid show up well and clearly on the image yet not obscure it. One approach that works well in most cases is to display either black or white grids on color images, and color grids on grey scale pictures. The selection of black or white is usually based on whether there are many areas within the color image that are very dark or very light; if so, choose a white or black grid, respectively. Color grids on black and white images are usually easiest to see if they are colored in red or orange. Blue colors often appear indistinguishable from black, because the eye is not very sensitive at that end of the spectrum. On the other hand, sensitivity to green is so high that the colors may appear too bright and make it difficult to see the image (the green lines may also appear wider than they really are). But allowing the user to choose a color for the grid is easily supported, and solves problems of individual preference or color blindness. Figure 9.15 shows color and grey scale images with grids superimposed.

In generating the grid, there are several different kinds of grids that are particularly suitable for specific purposes. For example, a grid used to count points can consist of a network of lines, with the points understood to lie at the line intersections (or in some cases at the ends of the line segments), or a series of small circles, with the points of interest understood to lie at the centers of the circles. The circles have the advantage that they do not obscure the point being marked, which can be important for deciding which points to mark or count. The lines have the advantage that they impose a global order on the points and make it easier to follow from one point to the next, so that no points are accidentally overlooked. Sometimes both methods are used together. Figure 9.16 shows several specific kinds of grids to generate grids of lines and arrays of points. The routine for generating these grids is

Computer-Assisted Methods 205

a b

Figure 9.15. Example of grids overlayed on images on the computer screen: a) a black cycloid grid on a color light microscope image; b) a colored grid on a monochrome metallographic microscope image. (For color representation see the attached CD-ROM.) shown in Appendix 1. The listings shown there are in a specific Pascal-like macro language used by the public domain NIH-Image program, but serves to document the algorithms required. They are easily translated to other systems and languages.

The identical algorithms are included in the set of Photoshop plug-ins mentioned earlier (in that case they were written in C so that they could be compiled to run on both Macintosh and Windows versions of Photoshop).

The example of a point grid in Figure 9.16a is a simple array of points marked by crosses and hollow circles arranged in a square pattern with a spacing

206 Chapter 9

a b c

Figure 9.16. Some other types of grids that can be used: a) square array of points; b) staggered array of points, c) randomly placed points; d) circular lines; e) random lines.

Computer-Assisted Methods 207

d e

Figure 9.16.

Continued of 50 pixels. The similar example in Figure 9.16b places the points in a staggered array with a higher point density. It is most efficient to select a point density for sampling so that neighboring points only rarely fall within the same structural unit.

When the structure being sampled by the grid is itself random, then a regular array of grid provides random sampling, and is easiest for a human user to traverse without missing points. But for applications to structures that have their own regularity, such as man-made composites, it is necessary for the grid to provide the randomization. The point grid in Figure 9.16c distributes random points across the image to be used in such cases. This is done by generating random pairs of X, Y coordinates (and rejecting overlapping ones) until 50 points are marked. Counting with a random grid is more difficult than a regular one because it is easier to miss some points altogether. Changing this number to provide reasonable and efficient sampling of the structure is straightforward. A common strategic error in counting

208 Chapter 9

of images using grids is to use too many points. It is almost always better to count only a few events or occurrences of structure in each image, and to repeat this for enough different images to generate the desired statistical precision. This helps to assure that reasonable sampling of the entire specimen occurs.

The two examples of line grids shown in Figures 9.16d and 9.16e supplement those in Figure 9.15. A square grid can be used either to count intersections of structure with the lines ( P

L

) or to count the cross points in the grid as a point grid ( P

P

). If the image is isotropic (in the two dimensional plane of sampling), then any direction is appropriate for measurement and a square grid of lines in easiest for the user to traverse. However, when the structure itself may have anisotropy it is necessary to sample uniformly in all directions, which the circle grid provides. If the structure also has any regularity, then the grid must be randomized. The random line grid generates a series of random lines on the sample. If the section is itself a random one in the specimen, then the random line grid samples three-dimensional structures with uniform, isotropic and random probes.

The cycloid shape is appropriate for lines drawn on so-called vertical sections (Baddeley et al., 1986). The spatial distribution of the lines is uniform in threedimensional space when the section planes are taken with random rotation around a fixed “vertical” axis in the specimen. This method is particularly useful in measuring structures in specimens that are known not to be isotropic. Examples include metals that have been rolled or have surface treatments, specimens from plant and animal tissue, etc. The cycloid is easily drawn as a parametric curve as shown in the appendix. The individual arcs are drawn 110 pixels wide and 70 pixels high (110/70

= 1.571, a very close approximation to p /2 which is the exact width to height ratio of the cycloid). The length of the arc is twice its height, or 140 pixels. Alternating cycloids are drawn inverted and flipped to more uniformly sample the image.

For all of these grids, the number of points marked on the image, or the total length of grid lines drawn, is shown along with the image area. These values may be used later to perform the stereological calculations.

There are two principal methods used by computer programs to keep track of the user’s marks on the image employing a grid. One is to count the events as they occur. Typically these will be made using some kind of interactive pointing device, which could be a touch screen, light pen, graphics pad, etc., but in most cases will be a mouse as these are now widely used as the user interface to a graphical operating system (or the track pad, track ball or pointing stick that are employed as mouse substitutes). Counting the clicks as they occur gives a real-time updated count of events, but makes editing to correct mistakes a bit more difficult and requires some other form of user action to select between multiple categories of features that can be counted at once. It also requires some way to mark the points as they are identified by the user, and for this purpose colored labels are often placed on the image on the screen.

The second common method for counting user marks is to just allow placing any kind of convenient mark onto the screen, (Figure 9.17) selecting different colors to distinguish different classes of countable events, and then have the computer tally them up at the end. Given that most drawing programs have some kind of “undo” capability to edit mistakes, the ability to change the size and color of marks, and

Computer-Assisted Methods 209

Figure 9.17. Example of counting with a grid. Three different types of interface between the white and grey, black and grey and black and white phases have been marked along the circular grid lines in different colors. The report of the total length of the grid lines, the area of the image and the number of each class of mark allows calculation of the surface area per unit volume of each type of interface and the contiguity between phases. (For color representation see the attached CD-ROM.) tools to erase marks or cover them up with one of a different color, this allows the user quite a lot of freedom to mark up the image using fairly intuitive and straightforward tools. Of course, since the totaling up is performed at the end there is no continuously updated result.

Counting the features present in an image requires a computer algorithm to connect together all of the touching pixels that may be present. A simplified approach that can be used for the counting of marks is available if it can be assumed that the cluster of pixels constituting the mark is always convex and contains no holes. It is this simplified logic that is shown in the Appendix. Each pixel is examined in each line of the image. Pixels that match the selected classification color value are compared to the preceding pixel and to the three touching pixels in the line above. If the pixel does not touch any other identical pixels, then it represents the start of a mark and is counted. Additional pixels that are part of marks already counted are passed over. A more general approach is to use the same logic as employed for feature measurement and labeling in the next chapter, which is capable of dealing with arbitrarily complex shapes.

The number of marks in each class is reported to the user, who must combine it with knowledge of the grid (length of lines, area of image, number of grid points, etc.) to obtain the desired structural information. Other parameters such as the placement of the marks (to assess nonuniformities and gradients, for instance) are not usually extracted from the user marks, which means that they do not actually have to be placed on the exact grid points but can be put nearby leaving the actual points available for viewing to confirm accuracy.

210

Basic Stereological Calculations

Chapter 9

The two most common stereological measures that illustrate the use of counting user marks made employing a grid are the determination of volume fraction of each phase present, and the measurement of the surface area per unit volume. These and other stereological measures are discussed in much more detail in earlier chapters. There may be several different type of boundaries evident as lines in the image, each of which corresponds to a one kind of surface separating the structure in three dimensions. The user can select different colors to measure the various kinds of boundaries that are of interest.

The volume fraction of a phase is estimated by the number of grid points counted as lying within the phase, divided by the total number of points in the grid.

The word “phase” as used in the context of stereology, refers to any recognizable structure that may be present, even voids. If the volume fraction of one structure within another is desired, that is the ratio of the number of points in the inner structure divided by the sum of those points and those which lie within the outer structure. For example, the volume fraction of a cell occupied by the nucleus would be measured by marking points within the nucleus in one color, and points within the cell but outside the nucleus in a second color. Then the volume fraction of the cell occupied by the nucleus is N

1

/(N

1

+ N

2

). The same method would be used if there were many cells and nuclei within the image.

The surface area per unit volume is estimated from the number of places where the grid lines cross the boundary line corresponding to that surface. If the number of points is N, and the total length of the grid lines is L (in whatever units the image is calibrated in), then the surface area per unit volume is 2 · N / L . This measurement has a dimension, and so the image calibration is important. The dimension of area per unit volume is (1/units).

All stereological techniques require that the grid be randomly placed with respect to the features in the microstructure. When the structure itself and the acquisition of the image are random, this criterion is fulfilled by a regular grid of points or lines. There is one important and common case where orientation of the section plane is not randomized. In the so-called vertical sectioning case, the surface of the sample that is examined is perpendicular to an exterior surface, but multiple sections can be taken in different orientations that include that surface normal (actually, the method works whenever some defined direction within the structure can be consistently defined as the vertical direction, which lies in all section planes imaged).

In that case, in order to count intersections with lines that are uniformly oriented in three-dimensional space, the cycloid grid should be used. This is discussed in more detail elsewhere.

One of the common microstructural measurements used to characterize materials is the so-called “grain size.” This is actually a misnomer, since it does not actually have anything directly to do with the size of the grains. There are two different definitions of grain size included in the ASTM standard. One is derived from the surface area per unit volume of the grain boundaries in the material.

If this are measured by counting N intersections of a grid of total length L (in

Computer-Assisted Methods 211

millimeters) with the grain boundaries, then the ASTM standard grain size can be calculated as

G = 6.65 · Log ( L / N ) 3.3

where the logarithm is base ten. Note that depending on the units of the image calibration, it may be necessary to convert L to millimeters.

The second grain size method counts the number of grains visible in the image. The manual marking procedure can also be employed to mark each grain, without regard to the grid chosen. For grains that intersect an edge of the field of view, you should count those that intersect two edges, say the top and left, and ignore those that intersect the other two, say the bottom and right. By this method, if the number of grains is N and the image area is A (converted to square millimeters), then the grain size is

G = 3.32 · Log ( N / A ) 2.95

Note that these two methods do not really measure the same characteristic of the microstructure, and will in general only approximately agree for real structures. Usually the grain size number is reported only to the nearest integer.

More information about the interpretation of the counts, their conversion to microstructural information, and the statistical confidence limits that are a function of the number of counts can be found in other chapters. Stereological calculations from counting experiments are extremely efficient, and provide an unbiased characterization of microstructural characteristics. It is important to remember the statistical advantage of counting the features that are actually of interest rather than trying to determine the number by difference. However, for counting a grid with a fixed total number of points it is best to mark only the minor phase points and obtain the number of counts for the major phase by subtracting the number of marked points from the (known) total number of points in the grid.

Appendix

{Manual stereology macros for NIH Image.

Overlay grids on an image with arrays of lines or points (reports the number of points or the length of the lines in image units). Grids provided include three different point arrays and four line arrays, one of which is cycloids and one sine-weighted radial lines for vertical section method. Then use paintbrush set to any of the fixed colors (up to 6) to mark locations to be counted (e.g., where line grids cross feature boundaries). Finally, use macro to count marks in each class, and use results for stereological calculations. For more details, see the paper “Computer-Assisted Manual Stereology” in Journal of Computer Assisted

Microscopy, vol. 7 #1, p. 1, Mar. 1995

@ 1995–1998 John C. Russ—may be freely distributed provided that the documentation is included.}

Macro ‘Point Grid’;

Var k,x,y,xoff,pwd,pht,nrow,ncol: integer;

212

area,ppx: real; un: string;

Begin

GetPicSize(pwd,pht); nrow: = pht Div 50; ncol: = pwd Div 50; xoff: = (pwd 50*ncol) Div 2;

If (xoff < 25) Then xoff: = 25; y: = (pht 50*nrow) Div 2;

If (y < 25) Then y: = 25;

SetLineWidth(1); k: = 0;

Repeat {Until > pht} x: = xoff;

Repeat {Until > pwd}

MoveTo (x 5, y);

LineTo (x 1, y);

MoveTo (x + 1, y);

LineTo (x + 5, y);

MoveTo (x, y 5);

LineTo (x, y 1);

MoveTo (x, y + 1);

LineTo (x, y + 5); k: = k + 1; {counter} x: = x + 50;

Until ((x + 10) > pwd); y: = y + 50;

Until ((y + 20) > pht);

GetScale(ppx,un);

MoveTo (2,pht-6);

SetFont(‘Geneva’);

SetFontSize(10);

Write(‘Total Points = ’,k:3); area: = pwd*pht/(ppx*ppx);

End;

MoveTo (2,pht-18);

Write(‘Total Area = ’,area:10:3,‘sq.’,un);

Macro ‘Staggered Grid’;

Var i,k,x,y,xoff,yoff,pwd,pht,nrow,ncol: integer; area,ppx: real; un: string;

Begin

GetPicSize(pwd,pht); nrow: = pht Div 34; ncol: = pwd Div 50; xoff: = (pwd 50*ncol) Div 2;

If (xoff < 25) Then xoff: = 25; yoff: = (pht 34*nrow) Div 2;

If (yoff < 25) Then yoff: = 25;

SetLineWidth(1); k: = 0; i: = 0; y: = yoff;

Chapter 9

Computer-Assisted Methods

Repeat {Until > height} x: = xoff;

If (2*(i Div 2) = i)

Then x: = x + 25;

Repeat {Until > width}

MoveTo (x 5, y);

LineTo (x 2, y);

MoveTo (x + 2, y);

LineTo (x + 5, y);

MoveTo (x, y 5);

LineTo (x, y 2);

MoveTo (x, y + 2);

LineTo (x, y + 5);

MakeOvalRoi(x 2,y 2,5,5);

DrawBoundary;

KillRoi; k: = k + 1; {counter} x: = x + 50;

Until ((x + 25) > pwd); y: = y + 34; i: = i + 1;

Until ((y + 25) > pht);

GetScale(ppx,un);

MoveTo (2,pht-6);

SetFont(‘Geneva’);

SetFontSize(10);

Write(‘Total Points = ’,k:3); area: = pwd*pht/(ppx*ppx);

End;

MoveTo (2,pht-18);

Write(‘Total Area = ’,area:10:3,‘sq.’,un);

Macro ‘Cycloids’;

Var h,i,j,k,x,y,xoff,yoff,pwd,pht,nrow,ncol,xstep,ystep: integer; len,area,ppx,pi,theta: real; un: string;

Begin pi: = 3.14159265;

GetPicSize(pwd,pht); nrow: = pht Div 90; ncol: = pwd Div 130; xoff: = (pwd 130*ncol) Div 2; yoff: = (pht 90*nrow) Div 2;

{cycloids are 110 pixels wide ¥ 70 high, length 140}

SetLineWidth(1); h: = 0;

For j: = 1 To nrow Do

Begin y: = yoff + j*90 10;

For i: = 1 To ncol Do

Begin x: = xoff + (i 1)*130 + 10;

If (h Mod 4) = 0 Then

213

214 Chapter 9

Begin

MoveTo (x,y);

For k : = 1 To 40 Do

Begin theta: = (pi/40) *k; xstep: = Round(35*(theta-

Sin(theta))); ystep: = Round(35*

(1.0-Cos(theta)));

LineTo (x + xstep,y ystep);

End;

End;

If (h Mod 4) = 1 Then

Begin

MoveTo (x,y 70);

For k : = 1 To 40 Do

Begin theta: = (pi/40) *k; xstep: = Round(35*(theta-

Sin(theta))); ystep: = Round(35*

(1.0-Cos(theta)));

LineTo (x + xstep,y 70 + ystep);

End;

End;

If (h Mod 4) = 2 Then

Begin

MoveTo (x + 110,y);

For k : = 1 To 40 Do

Begin theta: = (pi/40) *k; xstep: = Round(35*

(theta-Sin(theta))); ystep: = Round(35*

(1.0-Cos(theta)));

LineTo (x + 110 xstep,y ystep);

End;

End;

If (h Mod 4) = 3 Then

Begin

MoveTo (x + 110,y 70);

For k : = 1 To 40 Do

Begin theta: = (pi/40) *k; xstep: = Round(35*

(theta-Sin(theta))); ystep: = Round(35*

(1.0-Cos(theta)));

LineTo (x + 110 xstep, y 70 + ystep);

End;

End; h: = h + 1;

End; {for i}

Computer-Assisted Methods

End; {for j}

GetScale(ppx,un); len: = h*140/ppx;

MoveTo (2,pht-6);

SetFont(‘Geneva’);

SetFontSize(10);

Write(‘Total Length = ’,len:10:4,‘ ’,un); area: = pwd*pht/(ppx*ppx);

End;

MoveTo (2,pht-18);

Write(‘Total Area = ’,area:10:3,‘ sq.’,un);

Macro ‘Square Lines’;

Var i,j,x,y,xoff,yoff,pwd,pht,nrow,ncol: integer; len,area,ppx: eal; un: string;

Begin

GetPicSize(pwd,pht); nrow: = pht Div 100; ncol: = pwd Div 100; xoff: = (pwd 100*ncol) Div 2; yoff: = (pht 100*nrow) Div 2;

If (xoff = 0) Then

Begin xoffset: = 50; ncol: = ncol 1;

End;

If (yoff = 0) Then

Begin yoff: = 50; nrow: = nrow 1;

End;

SetLineWidth(1);

For j: = 0 To nrow Do

Begin y: = yoff + j*100;

MoveTo (xoff, y);

LineTo (pwd xoff 1, y);

End;

For i: = 0 To ncol Do

Begin x: = xoff + i*100;

MoveTo (x,yoff);

LineTo (x,pht yoff 1);

End;

GetScale(ppx,un); len: = (nrow*(ncol + 1) + ncol*(nrow + 1))*100/ppx;

MoveTo (2,pht-6);

SetFont(‘Geneva’);

SetFontSize(10);

Write(‘Total Length = ’,len:10:4,‘ ’,un); area: = pwd*pht/(ppx*ppx);

MoveTo (2,pht-18);

215

216

End;

Write(‘Total Area = ’,area:10:3,‘ sq.’,un);

Macro ‘Circle Grid’; var i,j,x,y,xoff,yoff,pwd,pht,nrow,ncol: integer; len,area,ppx,pi: real; un: string;

Begin

GetPicSize(pwd,pht);

SetLineWidth(1); pi: = 3.14159265; nrow: = pht Div 120; ncol: = pwd Div 120; xoff: = (pwd 130*ncol) Div 2; yoff: = (pht 130*nrow) Div 2;

For j: = 1 To nrow Do

Begin y: = yoff + 15 + (j 1)*130;

For i: = 1 To ncol Do

Begin x: = xoff + 15 + (i 1)*130;

MakeOvalRoi(x,y,101,101);

DrawBoundary;

KillRoi;

End;

End;

GetScale(ppx,un);

Len: = nrow*ncol*pi*100/ppx;

MoveTo (2,pht-6);

SetFont(‘Geneva’);

End;

SetFontSize(10);

Write(‘Total Length = ’,Len:10:4,‘ ’,un); area: = pwd*pht/(ppx*ppx);

MoveTo (2,pht-18);

Write(‘Total Area = ’,area:10:3,‘ sq.’,un);

Macro ‘Radial Lines’; var step,i,j,pwd,pht:integer; x,y,theta,temp:real;

Begin step: = GetNumber(‘Number of Lines’,16,0);

SetPalette(‘GrayScale’,6);

AddConstant(7);

SetForegroundColor(1);

GetPicSize(pwd,pht); setlinewidth(1);

For i: = 0 To step Do

Begin theta: = i*3.14159265/step; x: = (pwd/2)*Cos(theta); y: = (pht/2)*Sin(theta);

MoveTo(pwd/2 x,pht/2 + y);

Chapter 9

Computer-Assisted Methods

LineTo(pwd/2 + x,pht/2 y);

End;

End;

Function ArcSin(X:real) : real; var temp:real;

Begin

End; if (X = 0) then temp: = 0 else if (X = 1) then temp: = 3.14159265/2 else if (X =1) then temp: =3.14159265/2 else temp: = ArcTan(x/sqrt(1 x*x));

ArcSin: = temp;

Macro ‘Sine-wt. Lines’; var step,i,j,pwd,pht:integer; x,y,theta,temp:real;

Begin step: = GetNumber(‘Number of Lines’,16,0);

SetPalette(‘GrayScale’,6);

AddConstant(7);

SetForegroundColor(1);

End;

GetPicSize(pwd,pht); setlinewidth(1);

For i: = 0 To step Do

Begin temp: = ( 1 + 2*i/step); theta: = ArcSin(temp); x: = (pwd/2)*Cos(theta); y: = (pht/2)*Sin(theta);

MoveTo(pwd/2 x,pht/2 + y);

LineTo(pwd/2 + x,pht/2 y);

End;

Macro ‘Horiz. Lines’; var space,i,pwd,pht:integer;

Begin space: = GetNumber(‘Line Spacing’,5,0);

SetPalette(‘GrayScale’,6);

AddConstant(7);

SetForegroundColor(1);

End;

GetPicSize(pwd,pht); setlinewidth(1); i: = (pht mod space)/2; if (i < 2) then i: = (space/2); while (i < pht) do

Begin

MoveTo(0,i);

LineTo(pwd 1,i); i: = i + space;

End;

217

218

Macro ‘Vert. Lines’; var space,i,pwd,pht:integer;

Begin space: = GetNumber(‘Line Spacing’,5,0);

SetPalette(‘GrayScale’,6);

AddConstant(7);

SetForegroundColor(1);

GetPicSize(pwd,pht); setlinewidth(1); i: = (pwd mod space)/2; if (i < 2) then i: = (space/2); while (i < pwd) do

Begin

MoveTo(i,0);

LineTo(i,pht 1); i: = i + space;

End;

End;

Chapter 9

Macro ‘Random Points’;

Var x,y,k,i,pwd,pht,limt: integer; ppx,area: real; un: string; collide: boolean;

Begin

GetPicSize(pwd,pht); limt: = 50;{number of points} k: = 1;

Repeat x: = Random*(pwd 20); {10 pixel margin around borders} y: = Random*(pht 20); collide: = false;

If (k > 1) Then {avoid existing marks}

For i: = 1 To (k 1) do

If (Abs(x-rUser1[i]) < 5) and (Abs(y-rUser2[i]) < 5)

Then collide: = true;

If Not collide Then

Begin rUser1[k]: = x; rUser2[k]: = y;

MakeOvalRoi(x + 6,y + 6,7,7);

DrawBoundary;

KillRoi; k: = k + 1;

End;

Until (k > limt);

GetScale(ppx,un); area: = pwd*pht/(ppx*ppx);

SetFont(‘Geneva’);

SetFontSize(10);

MoveTo (2,pht-18);

Write(‘Total Area = ’,area:10:3,‘sq.’,un);

Computer-Assisted Methods

End;

MoveTo (2,pht-6);

Write(‘Total Points = ’,k 1:4);

Macro ‘Random Lines’;

Var i,j,k,x1,x2,y1,y2,pwd,pht,len,limt: x,y,theta,m,area,ppx,dummy: real; un: string;

Begin integer;

GetPicSize(pwd,pht); len: = 0; k: = 0; limt: = 3*(pwd + pht); {minimum total length in pixels}

Repeat {Until length > limt} x: = Random*pwd; y: = Random*pht; theta: = Random*3.14159265; m: = Sin(theta)/Cos(theta); x1: = 0; y1: = y + m*(x1 x);

If (y1 < 0) Then

Begin y1: = 0; x1: = x + (y1 y)/m;

End;

If (y1 > pht) Then

Begin y1: = pht; x1: = x + (y1 y)/m;

End; x2: = pwd; y2: = y + m*(x2 x);

If (y2 < 0) Then

Begin y2: = 0; x2: = x + (y2 y)/m;

End;

If (y2 > pht) Then

Begin y2: = pht; x2: = x + (y2 y)/m;

End;

MoveTo(x1,y1);

LineTo(x2,y2); len: = len + Sqrt((x2 x1)*(x2 x1) + (y1 y2)*(y1 y2)) k: = k + 1;

Until (len > limt);

GetScale(ppx,un); area: = pwd*pht/(ppx*ppx);

SetFont(‘Geneva’);

SetFontSize(10);

MoveTo (2,pht-18);

Write(‘Total Area = ’,area:10:3,‘sq.’,un);

219

220

len: = len/ppx;

MoveTo (2,pht-6);

End;

Write(‘Total Length = ’,len:10:3,‘ ’,un);

Chapter 9

Macro ‘Count Marks’ ;

{note—this routine is VERY slow as a macro because it must access each pixel. The

Photoshop drop-in is much faster for counting features, and when used by NIH Image will perform exactly as this does and count the number of marks in each of the six reserved colors.}

VAR i,j,k,pwd,pht,valu,nbr,newfeat: integer;

Begin

GetPicSize(pwd,pht);

For i: = 1 To 6 Do rUser1[i]: = 0;

MoveTo(0,0);

For i: = 1 To pht Do

Begin

GetRow(0,i,pwd); newfeat: = 0; {start of a new image line—nothing pending}

For j: = 1 To (pwd 1) Do {skip edge pixels}

Begin valu: = LineBuffer[j]; {test pixel}

If ((valu = 0) or (valu > 6)) Then

Begin {pixel is not a fixed color}

If (newfeat > 0) Then {End of a line} rUser1[newfeat]: = rUser1[newfeat] + 1; newfeat: = 0;

End;

If ((valu >= 1) and (valu <= 6)) Then {a fixed color point}

Begin nbr: = LineBuffer[j 1]; {left side}

If (nbr <> valu) Then {test continuation of line}

Begin

If (newfeat > 0)Then {prev touching color} rUser1[newfeat]: = rUser1

[newfeat] + 1; newfeat: = valu;{start of a chord}

End;

For k: = (j 1) To (j + 1) Do {check prev line}

Begin nbr : = GetPixel(k,i 1);

If (nbr = valu) Then

Begin newfeat: = 0;{touches}

End;

End;

End;

End; {for j}

Computer-Assisted Methods 221

LineTo(0,i); {progress indicator because getpixel is

End; very slow}

End; {for i}

ShowMessage(‘Class#1 = ’,rUser1[1]:3,‘\Class#2 = ’,rUser1[2]:3,

‘\Class#3 = ’,rUser1[3]:3,‘\Class#4 = ’,rUser1[4]:3,

‘\Class#5 = ’,rUser1[5]:3,‘\Class#6 = ’,rUser1[6]:3);

{can substitute other output procedures as needed}