Distributed Resource Exchange: Virtualized Resource Management for SR-IOV InfiniBand Clusters

advertisement

Distributed Resource Exchange: Virtualized Resource

Management for SR-IOV InfiniBand Clusters

Adit Ranadive, Ada Gavrilovska, Karsten Schwan

Center for Experimental Research in Computer System (CERCS)

Georgia Institute of Technology, Atlanta, Georgia

{adit262, ada, schwan}@cc.gatech.edu

Abstract—The commoditization of high performance interconnects, like 40+ Gbps InfiniBand, and the emergence of lowoverhead I/O virtualization solutions based on SR-IOV, is enabling the proliferation of such fabrics in virtualized datacenters

and cloud computing platforms. As a result, such platforms

are better equipped to execute workloads with diverse I/O

requirements, ranging from throughput-intensive applications,

such as ‘big data’ analytics, to latency-sensitive applications,

such as online applications with strict response-time guarantees. Improvements are also seen for the virtualization infrastructures used in datacenter settings, where high virtualized

I/O performance supported by high-end fabrics enables more

applications to be configured and deployed in multiple VMs

– VM ensembles (VMEs) – distributed and communicating

across multiple datacenter nodes. A challenge for I/O-intensive

VM ensembles is the efficient management of the virtualized

I/O and compute resources they share with other consolidated

applications, particularly in lieu of VME-level SLA requirements

like those pertaining to low or predictable end-to-end latencies

for applications comprised of sets of interacting services.

This paper addresses this challenge by presenting a management solution able to consider such SLA requirements, by

supporting diverse SLA-aware policies, such as those maintaining

bounded SLA guarantees for all VMEs, or those that minimize the

impact of misbehaving VMEs. The management solution, termed

Distributed Resource Exchange (DRX), borrows techniques from

principles of microeconomics, and uses online resource pricing

methods to provide mechanisms for such distributed and coordinated resource management. DRX and its mechanisms allow

policies to be deployed on such a cluster in order to provide SLA

guarantees to some applications by charging all the interfering

VMEs ‘equally’ or based on the ‘hurt’, i.e. amount of I/O

performed by the VMEs. While these mechanisms are general,

our implementation is specifically for SR-IOV-based fabrics like

InfiniBand and the KVM hypervisor.

Our experimental evaluation consists of workloads representative of data-analytics, transactional and parallel benchmarks.

The results demonstrate the feasibility of DRX and its utility to

maintain SLA for transactional applications. We also show that

the impact to the interfering workloads is also within acceptable

bounds for certain policies.

I.

I NTRODUCTION

Current datacenter workloads exhibit diverse resource

and performance requirements, ranging from communicationand I/O-intensive applications like parallel HPC tasks, to

throughput-sensitive mapreduce-based applications, multi-tier

enterprise codes, to latency-sensitive applications like transaction processing, financial trading [28] or VoIP services [17].

c

978-1-4799-0898-1/13/$31.00 !2013

IEEE

Despite the increased popularity of virtualization and the

emergence of cloud computing platforms, some of these

classes of applications, however, continue to run on nonvirtualized, dedicated infrastructures, to reduce overheads and

avoid potential interference effects due to resource sharing.

This is particularly true for these applications’ I/O needs,

since, unlike existing hardware-supported methods for CPU

and memory resources, prevalent I/O devices in current cluster

and datacenter installations continue to introduce substantial

overheads in their shared and virtualized use.

Concerning I/O, commoditization of high-end fabrics like

40+Gbps InfiniBand and Ethernet, and hardware-level improvements for I/O virtualization like Single Root I/O Virtualization (SR-IOV) [18], are addressing some of these I/Orelated challenges, and are further expanding the class of

applications able to benefit from virtualization technology like

Xen, Microsoft HyperV, and VMware. Although these technology advances provide high levels of aggregate I/O capacity

and low-overhead I/O operations, a remaining challenge is

the ability to consolidate the above mentioned highly diverse

workloads across multiple shared, virtualized platforms. This

is because current systems lack the methods for fine-grained

I/O provisioning and isolation needed to control potential

interference and noise phenomena [19], [23], [26]. Specifically,

while SR-IOV-based devices provide low-overhead device access to multiple VMs consolidated on a single platform, this

hardware-supported device resource partitioning is insufficient

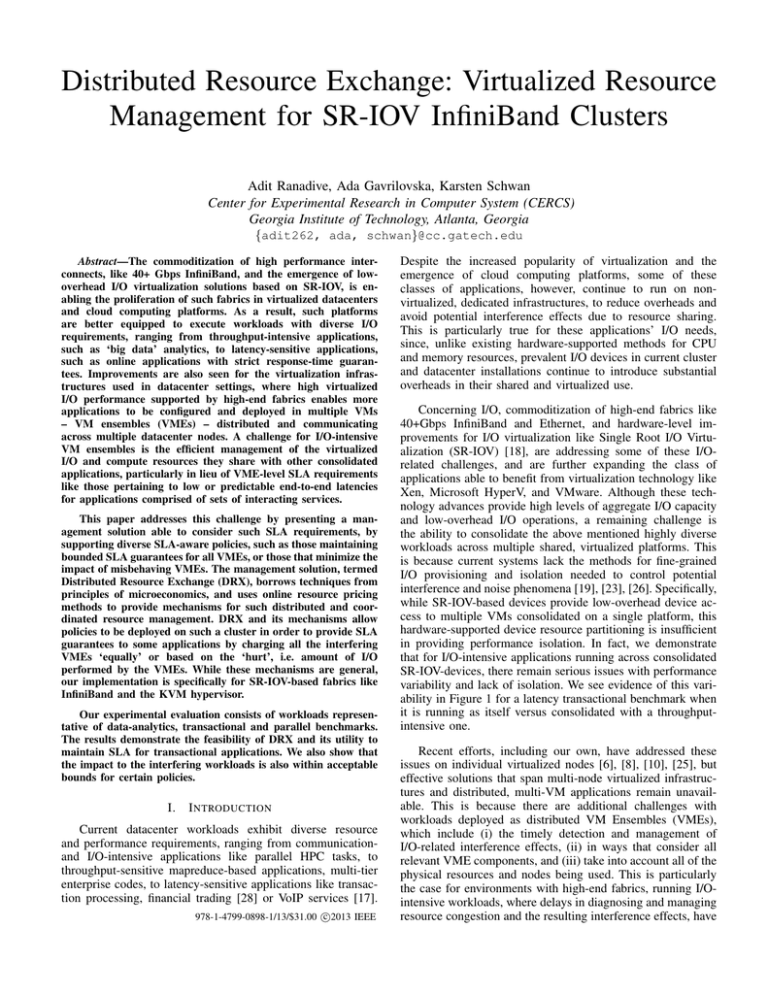

in providing performance isolation. In fact, we demonstrate

that for I/O-intensive applications running across consolidated

SR-IOV-devices, there remain serious issues with performance

variability and lack of isolation. We see evidence of this variability in Figure 1 for a latency transactional benchmark when

it is running as itself versus consolidated with a throughputintensive one.

Recent efforts, including our own, have addressed these

issues on individual virtualized nodes [6], [8], [10], [25], but

effective solutions that span multi-node virtualized infrastructures and distributed, multi-VM applications remain unavailable. This is because there are additional challenges with

workloads deployed as distributed VM Ensembles (VMEs),

which include (i) the timely detection and management of

I/O-related interference effects, (ii) in ways that consider all

relevant VME components, and (iii) take into account all of the

physical resources and nodes being used. This is particularly

the case for environments with high-end fabrics, running I/Ointensive workloads, where delays in diagnosing and managing

resource congestion and the resulting interference effects, have

significant impact on performance degradation [29]. Stated

technically, the effectiveness and timeliness of the performance

and isolation management operations concerning the I/O use

of distributed VM ensembles requires coordinated resource

management actions across the entire set of relevant distributed platform resources.

The specific contributions made by this research include the

following. (1) We present the design of the DRX framework

and its implementation for cluster servers interconnected with

InfiniBand SR-IOV fabrics, and virtualized with the KVM

hypervisor. (2) DRX integrates mechanisms for low-overhead

accounting of resource usage, usage-based charging, and dynamic resource price adjustment, which make it possible to

realize diverse resource management policies. (3) The importance of these mechanisms is illustrated through the implementation of two concrete policies: an (i) Equal-Blame (EB)

policy, which under increased demand, equally limits workloads’ resource allocations, e.g., through platform-wide price

configuration, and a (ii) Hurt-Based (HB) policy, where price

adjustments are made in a manner proportional to the ‘hurt’

being caused, i.e., the amount of I/O generated by VMEs.

(4) The realization of DRX leverages our own prior work,

which developed memory-introspection-based techniques for

lightweight accounting, i.e., monitoring of the use of I/O

resources in InfiniBand-(and similar) connected platforms [24],

and mechanisms for managing performance interference on

single node platforms through the use of appropriate charging

methods [25]. (5) Evaluations use representative application

benchmarks corresponding to transactional, data-analytics, and

parallel workloads. The results indicate the importance and

efficacy of our distributed management solution and make

current SR-IOV more feasible for SLA-driven workloads.

The remainder of the paper is organized as follows. Section II motivates the need for DRX resource management

It also provides background on the PCI Passthrough, SRIOV InfiniBand technologies used in modern virtualization

Latency Count for Interfered App

Latency Count for Non-Interfered App

45000

40000

35000

30000

Count

To achieve this goal, this paper proposes a resource management framework – Distributed Resource Exchange (DRX)

– for managing the performance interference effects seen by

distributed workloads deployed in shared virtualized environments. DRX borrows ideas from microeconomics principles

on managing commodities’ supply and demand, by managing

virtualized clusters as an exchange where resource allocations are controlled via continuous accounting, charging, and

dynamic price adjustment methods. DRX provides the basic

mechanisms for allocation, accounting, charging, and pricing

operations that are needed to support a range of resource management policies. Furthermore, these operations are performed

with consideration of entire VM ensembles and their resource

demands, and take into account inter-ensemble interference

effects, thereby improving the efficacy of DRX management

processes. Although the ideas used in the DRX design are general, the distributed, coordinated resource allocation actions it

enables are particularly important for virtualized clusters with

high-end fabrics, where the bandwidth and latency properties

of the interconnect make them suitable platforms for shared

deployment of both I/O- and communication-intensive workloads, and where, precisely because of the I/O-sensitive nature

of some of these distributed workloads, the need for lowoverhead, effective management actions is more pronounced.

Distribution of Request Latencies for a Financial App

50000

25000

20000

15000

10000

5000

0

0

50

100

150

Request Service Times (µs)

Fig. 1: Distribution of Latencies for a Non-Interfered v/s an

Interfered Financial Application.

infrastructures and assumed by DRX methods. In Section III,

we introduce and explain the design of Distributed Resource

Exchange (DRX). We describe the DRX mechanisms and how

they interact with each other in Section IV. In Section V we

describe two policies that use these mechanisms. Sections VI

describes our experimental methodology and measurement

results. Related work is surveyed in Section VII, followed by

conclusions and future work in Section VIII.

II.

BACKGROUND

DRX targets virtualized clusters with high-end fabrics for

which Single Root I/O Virtualization (SR-IOV) enables lowoverhead I/O operations for the hosted guest VMs. Although

SR-IOV creates and provides VMs with access to physical

device partitions, it does not provide fine-grain control needed

for performance isolation. This is illustrated with the results

shown Figure 1, where two collocated applications are running instances of the Nectere benchmark developed in our

own work [11] with different I/O requirements. The results

demonstrate the performance degradation experienced by one

of the workloads, despite the use of SR-IOV-enabled devices.

An additional challenge with SR-IOV devices like the

InfiniBand adapters used in our work, is that by providing

direct access to a subset of physical device resources, SR-IOV

techniques make it also difficult to monitor/account for the

VMs’ I/O usage, and to insert fine-grained controls needed

to manage the I/O resource (i.e., bandwidth) allocation made

to VMs. We leverage our prior work on using memoryintrospection techniques to estimate the VMs’ use of IB

resources [24], and on using CPU capping as a method to

gauge the VMs’ use of I/O resources, thereby also limiting

the amount of I/O they can perform and indirectly affecting

their I/O allocation.

We next present some detail of these key enabling technologies that drive the design and implementation of DRX.

PCI Passthrough. PCI passthrough allows PCI devices (SRIOV-capable or standard) to be directly accessible from guest

VMs, without the involvement of the hypervisor or host

OS, but requiring Intel’s VT-d [1] or AMD’s IOMMU [2]

extensions for correct address translation from guest physical

addresses to machine physical addresses [4]. The hypervisor

(e.g., KVM or Xen) is responsible for assigning the PCI

device (specified for passthrough) to the guest’s PCI bus and

removing it from the management domain’s PCI bus list – i.e.,

the device is under full control of the guest domain. While

providing guests with near-native virtualized I/O performance,

by bypassing the management domain, i.e., the hypervisor, it

becomes challenging to monitor and manage the guest’s I/O

behavior.

Single Root I/O Virtualization (SR-IOV) InfiniBand. With

SR-IOV [7], the physical device interface (i.e., the device Physical Function (PF)) and associated resources are ‘partitioned’

and exposed as Virtual Functions (VFs). One or more VFs are

then allocated to guest VMs in a manner that leverages PCI

passthrough functionality.

The current InfiniBand Mellanox ConnectX-2 SR-IOV

devices used in our work provide SR-IOV support by dividing the available physical resources, i.e., queue pairs (QPs),

completion queues (CQs), memory regions (MRs), etc., among

VFs and exposing this subset of resources as a VF. The

PF driver running in the management domain is responsible

for creating the number of VFs (in our case 16). Each of

these VFs are assigned to a guest using PCI passthrough. A

Mellanox VF driver residing in each guest is responsible for

device configuration and management. All natively supported

IB transports – RDMA, IPoIB or SDP – are also supported by

the VF driver.

IBMon. To monitor VMs’ usage of IB we use a tool called

IBMon developed in our prior research [24]. IBMon asynchronously tracks VMs’ IB usage via memory introspection

of the guests’ memory pages used by their internal IB (i.e.,

OFED) stack. In this manner, IBMon gathers information

concerning VM’s QPs, including application-level parameters

like buffer size, WQE index (to track completed CQEs), QP

number (uniquely identifies a VM-VM communication). These

are used to more accurately depict application IB usage.

KVM Memory Introspection. KVM provides support for a

libvirt function called virDomainMemoryPeek. The function

maps the memory pointed by guest physical addresses and

returns a pointer to the mapped memory. IBMon uses the

mapped memory and interprets CQE and QP information.

ResourceExchange Model. We abstract the way in which

VMs use QPs and CQs by generally describing how the

resources assigned to and used by VMs in the notion of

’Resos’, explained in detail in [25], where the Resos allocated

to each VM represent its permissible use of some physical

resource. Given Resos of different types, it is then possible to

charge VMs for their resource usage based on some providerlevel policy, where charging can be based on micro-economic

theories and their application to resource management [14],

[21].

III.

OVERVIEW OF THE D ISTRIBUTED R ESOURCE

E XCHANGE

The DRX architecture illustrated in Figure 2 is a multi-level

software structure spanning the machines and VM ensembles

using them. Its top-tier Platform Manager (PM) is responsible for enforcing cluster-wide policies and driving resource

Platform Manager

VME 1

VME 2

...

VME n

HA

HA

HA

HA

VM . . . VM

VM . . . VM

VM . . . VM

VM . . . VM

...

HA

VM . . . VM

Fig. 2: Distributed Resource Exchange Model.

allocation actions for one or more VM Ensembles (VMEs).

Each VME consists of multiple VMs corresponding to a single

cloud tenant – e.g., a single application, such as a multi-tier

enterprise workload, or a distributed MPI or MapReduce job.

For each VME, an Ensemble Manager (EM) monitors the

VME’s resource and performance needs, and drives necessary

interactions with the DRX management layer, such as to report

SLA violations.

EMs interact directly with the DRX Host Agent (HA) components deployed on each host. The HAs maintain accounting

information for the host’s VM’s resource usage, and manage

(reduce or increase) resource allocations based on the specific

pricing and charging policy established by the PM.

The resource management provided by DRX behaves like

an exchange. Participants (VMEs and their VMs) are allocated

credits called Resos, which in turn determine the resource

allocations made to each VM by the corresponding Host

Agent. DRX includes mechanisms for allocation, accounting,

and charging, performed by each HA in order to enforce

a given resource allocation policy. I/O congestion and the

resulting performance degradation are managed through dynamic resource pricing. Depending on resource supply and

demand (e.g., considering factors such as current price, number

of VMs and VMEs, amount of I/O usage, etc.), and in response to performance interference events, the PM determines

adjustments in the Resos price that a VM/VME should be

charged for I/O resource consumption. Finally, to deal with

dynamism in the workload requirements, DRX uses an epochbased approach: Resos allocations are determined and renewed

at the start of an epoch, based on overall supply and demand,

per-VM or VME accounting information, etc.; the resource

price, along with the charging function, determine the rate at

which the workload (some VM or component) will be allowed

to consume I/O resources. These mechanisms allow us to treat

physical resources like commodities which can then be bought

or sold from an ‘exchange’. Further, we can use economicsbased schemes to control the commodity supply and demand,

thereby affecting resource utilization, and the consequent VM

performance and interference effects. We describe these in

detail in Section IV.

The current DRX implementation divides Resos equally

among all VMs in a VME (additional policies can be supported

easily). Given our focus on data-intensive applications and the

abundance of CPU resources in multicore servers, the current

HA implementation charges its VMs only for their I/O usage,

for the duration of the epoch. I/O usage is obtained from IBMon, which samples each VM’s I/O queues to estimate its current I/O demand. Controlling I/O usage, however, is not easily

done in SR-IOV environments: (1) the VM-device interactions

bypass the hypervisor and prevent its direct intervention; and

(2) SR-IOV IB devices carry out I/O via asynchronous DMA

operations directly to/from application memory. Software that

’wraps’ device calls with additional controls would negate

the low-overhead SR-IOV bypass solution. The current HA,

therefore, relies on the relatively crude method of CPU capping

to indirectly control the I/O allocations available to the VM.

Our prior work prototyped this method for paravirtualized IB

devices [25]. With DRX, we have extended it to SR-IOV

devices.

The DRX infrastructure can be used for many purposes,

including tracking, charging, and usage control. Key to this

paper is its use for ensuring isolation for I/O-intensive distributed datacenter applications. Specifically, isolation must

be provided for a set of VMEs co-running on a cluster of

machines. This requires monitoring for and tracking distributed

interference across VMEs caused by their I/O activities. Such

interference occurs when VMEs share physical links, using

them in ways that cause one VME’s actions to affect the

performance of another. A more formal statement of distributed

interference considers VMs communicating across physical

links affected by other VMEs, using what we term DCCR

Distributed Causal Congestion Relationship. The formulation

below considers reduced performance by some VMi due to

interference by VMEs: mathematically shown as follows for a

VMi that has reduced performance

!

!

DCCR(i) = {V M Ej }! [VMk ∈ VMEj ∧ VMk ∈ P(VMi )]

(1)

The equation identifies the VME or set of VMEs that is

affecting the performance of VMi . Note that those VMEs also

contain VMs that are on the same physical machine as VMi ,

the latter denoted by the function P. Knowledge about this

potentially resulting in a large set of ’culprit’ VMs is the basis

on which DRX manages interference. The next step will be

to identify those VMs in that set that are actually causing the

interference being observed, followed by mitigation actions

that prevent them from doing so. The next section explains

the techniques and steps used in detail.

IV.

DRX R ESOURCE M ANAGEMENT M ECHANISMS

We use the concept of ‘Resos’ described in [25] as a

Resource currency for VMs, or EMs acting on behalf of

entire VMEs, in the case of DRX, to ‘buy’ resources for

their execution. In this section we explain how the components

described in the section before, interact to implement our DRX

mechanisms which use Resos.

A. Allocating, Accounting for Resos and Charging Resources

The PM is responsible for a global resource management

of the cluster and it ensures resources are allocated in an

appropriate manner to meet the resources of the VMs. However, to improve scalability for VM resource management,

the PM allocates a certain number of Resos per EM called

‘EM Allocation’. Each EM Allocation depends on the set

of all resources present in the cluster and on the resource

management policy, see Section V. It also depends on the

number of VMs present in each VME in order to avoid a

completely unfair distribution of resources. Further, each EM

is responsible for distributing Resos to its VMs. For the sake

of simplicity, we assume EMs distribute Resos to its VMs

‘equally’, unless we state otherwise is our policies. Since we

consider only CPU and IB resources for management, we

assign Resos to VMs only for these resources.

We use an ‘Epoch and Interval-based model’ for accounting

of Resources, where one epoch is equal to 60 seconds, and

each interval is 1 second. A certain number of Resos allows

the VM to buy resources from the host. Every epoch, the EM

distributes a new allocation of Resos to its VMs. Next, every

interval, the Host Agent deducts Resos from the VM’s Resos

allocation – i.e., charges VMs – to account for the CPU and I/O

consumed by each VM in that interval. Any resource allocation

that needs to be applied for a VM is performed based on the

resource management policies.

B. Resource Pricing

When VMs consume resources they spend Resos allocated

to them by their respective EM. In order to control the rate at

which applications consume resources, specifically I/O, and to

deal with possible congestion where other application’s VMs

are no longer able to receive their resource share and make

adequate progress, DRX dynamically changes the resource

price with granularity of VMEs. By increasing the price of

the resource, VMs can only afford a limited quantity since

they have a limited number of Resos with them. According to

Congestion Pricing principles [14], [21], this implies that the

demand for the resource would reduce, which in turn would

reduce the congestion of that resource. Next, we explain the

key concepts in the DRX resource pricing methods.

First, the price increase intrinsically depends on the amount

of congestion-caused Performance Degradation (PD) of a

VM/VME. We find the PD for a VM using IBMon to detect

changes in the IB usage. The PD is the percentage change

in the CQEs (for RDMA) or I/O Bytes (for IPoIB or RDMA

port counters) generated by the VM. To maintain a certain SLA

the VM needs to generate a required number of CQEs/Bytes.

When this CQE rate falls below the SLA, IBMon detects it

and we can report the difference between it and the SLA to

the PM.

Second, pricing is performed on a per-VME Basis. This

simplifies our ability to track prices across the cluster when

pricing for an entire VME and reduces the amount of communication performed between HAs and the PM. By performing

price changes on the entire VME, we can provide a faster

response to reduce congestion, rather than repeatedly changing

prices per VM. All VMEs that belong to the DCCR set

with a VM whose performance degradation triggers a price

adjustment, will have their price increased. The amount of the

price increase for each VME would depend on these factors

listed above, as well as on the cluster-wide policy.

Third, in order to be more flexible in changing the price

based on the policy define we use two policy-specific parameters αi and δPi , which affects how the price increases for a

VME i. The αi defines the weight or priority for the VME i

and is between 0 and 1. The δPi is the policy coefficient for

a VME i and is defined for each policy in Section V. We also

use the Old Price or OPi of the VME to find the New Price.

Using the factors described above, we generally define our

Pricing Function as follows:

N Pi = f (OPi , P Dx , δPi , αi )

a ratio of 9:1, the effective price increase for VME1 would

be 27% and VME2 3%. The PM can find the aggregated I/O

from the HAs to compute the I/O ratio between VMEs and

therefore adjust prices accordingly. The goal of this policy is

to charge the VMEs more based on the fraction of performance

degradation they caused. For this policy δPi and δCij for VME

i and VM j are as follows:

C. CPU Capping

Since we do not have explicit control over the RDMA I/O

performed by the VMs, we use the rather crude methods of

CPU capping to reduce the amount of I/O the VM actually

performs. We have shown in [25] that by throttling CPU we

can control the amount of I/O the VM performs. Therefore,

we again use the CPU capping mechanism provided by the

hypervisor to control the VM’s I/O usage. The capping degree

depends on the policy being implemented. In general, the

CPUCap for a VM depends on the New Price for the VME

i (NPi ), Old Cap for VM (OCji ), VME Priority (αi ) and a

cpucap policy co-efficient, δCji , which defines the conversion

of Price into a CPUCap. Generally, we define the new CPU

Cap for a VM j belonging to VME i as:

N Cij = f (OCij , N Pi , αi , δCij )

V.

P OLICIES FOR A D ISTRIBUTED R ESOURCE E XCHANGE

Given the various components and mechanisms of DRX in

Section III and IV we now describe various ways in which

these components can interact to provide distributed resource

management.

A. Equal-Blame Policy

This policy is implemented to show a naive method of

charging VMEs when there is congestion. In this case each

VME is charged, i.e., its price is increased, equally for all

VMEs responsible for congestion. For example, if the performance degradation reported by a HA is 30%, then with

2 VMEs in the DCCR of congested VME x, each VME’s

price is increased by 15%. The goal of this policy is to have

a lightweight and simple mechanism by which we can charge

VMEs on congestion occurrence. We define the Price and CPU

Cap functions for a VME i and VM j as follows:

N Pi = OPi + (P Dx ∗ δPi ∗ αi ) ∗ OPi

"

#

(N Pi − OPi )

OCij

∗

∗ 100 ∗ δCij

N Cij = OCij −

100

OPi

δPi =

1

N (DCCRx )

δCij =

ELh

RLji

where, N(DCCRx ) denotes the number of VMEs in the DCCR

set of VME x, ELh and RLji denote the % of Epoch Left on

HA h and % of Resos Left for VM j ∈ VME i respectively.

B. Hurt-Based Policy

In order to improve a naive policy, we now consider the

amount of I/O generated by a VME in order to increase price.

Therefore, price increases for a VME are directly proportional

to the I/O generated by the VME and the performance degradation. From the earlier example, if the VMEs perform I/O in

IOi

,

δPi = $N

k=0 IOk

δCij =

ELh

RLji

where, N denotes the number of VMEs in the DCCR set of

VME x and IOi the amount of IO performed by VME i. The

rest of the formula is the same as the Equal-Blame Policy.

VI.

E VALUATION

A. Testbed

Our testbed consists of 8 Relion 1752 Servers. Each server

consists of dual hexa-core Intel Westere X5650 CPUs (HT

enabled), 40Gbps Mellanox QDR (MT26428) ConnectX-2

InfiniBand HCA, 1 Gigabit Ethernet and 48GB of RAM. Our

host OS is RHEL6.3 OS with KVM and the guest OS’ are

running RHEL6.1. Each guest is configured with 1 VCPU

(pinned to a PCPU), 2GB of RAM and an IB VF. We use the

mlx4 core beta version of the drivers (based on OFED 1.5)

for the hosts and guests, configured to enable 16VFs, so we

can run upto 16VMs on each host. The IB cards are connected

via a 36-port Mellanox IS5030 switch.

B. Workloads

We use three benchmarks, each representing a different

type of cluster workload. Nectere [11], is a server-clientbased financial transactional workload with low latency characteristics. We measure its performance in terms of µs for

request completion. We use Hadoop’s Terasort [12] with a

10GB dataset as a representative for data analytic computing

to generate distributed interference. For Hadoop workloads

we use the job running time as the performance metric.

Linpack [13] (Ns = 300 1000 7500) is a characteristic MPI

workload for clusters and uses Gflops as its performance

metric. We run the workloads in a staggered manner, where

we start and let the Hadoop job run for 30s before starting the

Linpack job. Next, we start the Nectere workload and run all

the jobs till Nectere completes successfully. This ensures that

the workloads are performing sufficient I/O communication

before Nectere starts.

The Hadoop and Linpack workloads are configured to use

32 VMs each and 2 VMs for Nectere. In the ‘symmetric

configuration’ each physical machine is running 4 VMs of

each benchmark. Additionally, we also use 2 asymmetric configurations – Asymm1 and Asymm2. In Asymm1, we have more

Linpack and Hadoop VMs (upto 16 total) on the same physical

machine as the Nectere VMs. Therefore, this configuration

should cause more interference to the Nectere application. In

Asymm2, there is only one VM each of Linpack and Hadoop

along with the Nectere VM. This explores the other end of the

asymmetry, where there is minimal interference.

Each DRX policy ensures that when Nectere is running,

it maintains the CQE/s within a SLA limit of 15% (we can

Native Nectere(64KB) with Interfering Workloads and Policy Performance

Workload Performance with Policies and Symmetric Configuration

NoMgmt

CC-Dist

PC-Dist

EB-Local

60

CQE/s for 64 KB Nectere

4500

EB-Dist

HB-Local

HB-Dist

40

SLA=15%

3500

3000

Monitor Instance #

2500

0

500

1000

1500

2000

2500

3000

80

30

Request Latency (µs)

Performance Degradation %

50

4000

20

10

75

70

65

SLA=15%

60

55

50

Non-Interfered Server

Interfered Server

45

EB-Dist Policy

HB-Dist Policy

40

0

Nectere-Latency

Hadoop

0

Linpack

50000

100000

150000

200000

250000

300000

350000

400000

450000

Nectere Request #

Workload

Fig. 3: Effect of Policies on Performance of Nectere, Hadoop Fig. 4: Effect of Policies on the running average of Nectere

and Linpack Workloads.

CQE/s and Latency. High CQE/s denotes Low Latency.

-20

100

-22

100

VME IBMTU Price

Hadoop VME IBMTU Price

CPUCap

Linpack VME IBMTU Price

-19

Linpack CPUCap

-20

90

90

Hadoop CPUCap

-18

-18

80

80

-16

SLA=15%

70

-14

CPU Cap

70

CPU Cap

-16

SLA Diff %

SLA Diff %

-17

SLA=15%

-15

60

60

-12

-14

50

-13

-12

40

1

1.2

1.4

1.6

1.8

2

50

-10

-8

40

1

1.5

2

2.5

Price (Resos/MTU)

Price (Resos/MTU)

(a) Equal-Blame Policy

(b) Hurt-Based Policy

3

3.5

Fig. 5: Comparison of VME Prices, CPU Cap and SLA Difference for Hadoop and Linpack VMEs.

easily configure other values) from the base value. We design

our experiments in order to highlight some of the important

features of DRX and their impact on the selected workloads.

We also use two CPU Capping-only policies, denoted as CC

and PC. In CC (CPUCap), we decrease the CPU Cap steadily

by 5% (upto 25%) for the interfering VMs. In PC (Proportional

Capping), we decrease the CPU Cap for the interfering VMEs

based on their I/O Ratio metrics. Therefore, each VM would

have its CPU Cap reduced by a fraction of the 5% based on

the I/O Ratio. With these additional policies, we show the

importance of Pricing over only performing CPU Capping. We

have also configured two sub-types of policies for each of the

main policies. In one, all price changes for a VME apply to its

VMs across the entire cluster, termed a ‘Distributed’ policy.

In the other, the price changes for a VM apply only at the

HA that is reporting the congestion, termed a ‘Local’ policy.

Broadly, we divide the results into three different categories:

(i) policy performance, (ii) policy sensitivity to resources usage

patterns, and (iii) limitations and overhead of DRX in different

workload configurations, which we describe next.

C. Policy Performance

Figure 3 shows the impact of the distributed and local

policies on workload performance. The CC-Dist and PC-Dist

policies do help in reducing Nectere latency, but not to its

SLA level. These also have a much greater impact on the performance of Linpack and Hadoop, because of the continuous

capping. The EB-Local and HB-Local policies cannot reduce

the latency for Nectere below the SLA because Linpack VMs

on other machines are actually causing congestion by sending

data to its VMs collocated with Nectere. However, in the case

of the EB-Dist and HB-Dist policies, Nectere can meet its SLA

of 15%. This demonstrates the feasibility of resource pricing

as a vehicle to reduce congestion, as well as the importance

of performing distributed resource management actions, as

enabled by DRX.

Figure 4 shows the impact of each policy on the latency

of the Nectere application (bottom graph). It also shows the

change in the metric used by DRX to manage I/O performance

– CQE/s. Both the Equal-Blame and Hurt-Based policies

Workload Performance with Policies and Asymmetric Configuration

90

EB-Dist

HB-Dist

80

80

60

20

0

EB-Local-Asymm1 Policy

EB-Dist-Asymm1 Policy

HB-Local-Asymm1 Policy

HB-Dist-Asymm1 Policy

NoMgmt-Asymm1

EB-Local-Asymm2 Policy

EB-Dist-Asymm2 Policy

HB-Local-Asymm2 Policy

HB-Dist-Asymm2 Policy

NoMgmt-Asymm2

70

40

Nectere

HPL1

HPL2

Nectere

MRBench

HPL

Workload

Fig. 6: Comparison of DRX Policy Sensitivity with two

different workloads sizes.

are effective in reducing contention effects and providing

performance within the guaranteed SLA levels – they reach the

same value for latency, though their impact on the interfering

workload performance is different. Also, the HB-Dist policy

provides the least degradation of Hadoop and Linpack workloads while meeting the SLA for Nectere. The EB-Dist policy

degrades the workloads more since it increases the prices

equally for both VMEs which negatively impacts how fast the

CPUCap is reduced. As a result the EB-Dist policy is more

reactive or fast-acting to SLA violations as highlighted by the

CPU Cap reductions versus price increases shown in Figure 5a.

EB penalizes interfering VMs more than HB and assesses a

lower CPU Cap for Hadoop, Linpack at 60. Figure 5b, shows

the more slow-acting nature of the HB-Dist policy, where the

CPUCap of the interfering workloads is decreased much more

gradually than EB-Dist. HB-Dist allocates a higher CPU Cap

to Hadoop (71) and lower CPU Cap to Linpack (50) since

these are based on the I/O Ratio between the VMEs. For both

these policies the PM always responds to a SLA violation

messages within 5ms, therefore DRX always detects and acts

upon congestion in a timely manner.

Essentially, EB and HB policies serve two respective methods for SLA satisfaction – (1) fast-acting while not performing

graceful degradation of workloads, (2) slow-acting while

providing graceful degradation to other workloads. This result

highlights an important aspect of DRX: multiple policies can

be constructed and configured to meet the SLA values for

applications.

D. DRX Sensitivity to Resources

In order to evaluate the effect of resource usage patterns

on the effectiveness of DRX, we use two more workload

configurations. In one configuration we use an instance of

Nectere along with 2 Linpack instances. In the second, we

use an instance of Nectere along with the Hadoop MRBench

application and a smaller data size for Linpack. We observe

from Figure 6 that when the interfering workloads perform

similar amounts of I/O both EB and HB policies behave

equally well, however, since EB treats both VMEs similarly

at all times, and applies the same cap simultaneously, it

achieves a lower latency for Nectere. In the adjacent graph, HB

becomes more aggressive than EB and it caps both Linpack

and MRBench much more. This is because as HB performs the

capping, it leads to oscillations in which VME domanates the

I/O Ratio (> 95%), which forces HB to perform large amounts

of cap alternately on the VMEs. This is not evident in Figure 3

as the difference in the I/O Ratio between Hadoop and Linpack

is smaller. Therefore, we find that HB is more sensitive to large

Performance Degradation %

Performance Degradation %

DRX Sensitivity to Resource Usage Patterns

100

60

50

40

30

20

10

0

Nectere-Latency

Hadoop

Linpack

Workload

Fig. 7: Performance of Policies (Local and Distributed) with

Asymmetric Workload Configuration. Asymm1 and Asymm2

refer to the types of workload deployment.

swings in the I/O Ratio while EB is less sensitive to differences

in the generated I/O. Future policies will be extended with

mechanisms to detect such oscillations, and to further limit

their aggressivness under such circumstances.

E. Limitations and Overhead of DRX

We show in Figure 7 that for two different workload

configurations, the DRX policies affect them differently. When

there is a lot of interference in the Asymm1 configuration, none

of the policies can satisfy the Nectere SLA. This is because,

despite CPU capping, the VMs still generate sufficient I/O to

cause congestion for Nectere. In this case, having more support

from the hardware to control I/O would be very useful. In the

Asymm2 case where Nectere has minimal interference, DRX

ensures that other workloads are perturbed much less or not

at all. Here, both Linpack and Hadoop perform very close to

their baseline values. These results highlight the limited utility

of CPU Capping in extreme interference and also the low

overhead caused by DRX components and their management

actions.

VII.

R ELATED W ORK

In this section we briefly discuss prior research related to

DRX.

Distributed Rate Limiting for Networks. Many recent

efforts have explored distributed control for providing network

guarantees for cloud-based workloads. These have looked

at providing min-max fairness to workloads [22], providing

minimum bandwidth guarantees [9], or using congestion notifications from switches [5]. [20] provides a detailed survey

of these approaches. There are also other efforts that provide

network guarantees for per-tenant [16] and inter-tenant communication [3]. Authors in Gatekeeper [27] enforce limits per

tenant per physical machine by providing exact egress and

ingress bandwidth values. In DRX by providing prices and

setting CPU Cap limits, we similarly enforce network limits

per tenant per physical machine.

These approaches show that providing distributed control

for networks is becoming important for cloud systems. How-

ever, while these approaches may work well for Ethernetbased para-virtualized networks, they do not yet explore high

performance devices like InfiniBand or SR-IOV devices. DRX

borrows some ideas like minimum guarantees and tenant

fairness (VM ensembles are similar to tenants) from these

efforts to show that distributed control for networks is still

required and feasible for hardware-based virtualized networks.

Economics and Resource Management. DRX also relies

on the effects of Congestion Pricing on resource usage and

allocation. These ideas have been explored before in network

congestion avoidance [14], [21], platform energy management [30], as well as in market-based strategies to allocate

resources [15]. However, to our knowledge ours is the first

to combine congestion pricing to provide a distributed control

over InfiniBand network usage.

VIII.

C ONCLUSIONS AND F UTURE W ORK

This paper addresses the unresolved problem of crossapplication interference for distributed applications running

on virtualized settings. This problem occurs not only with

software-virtualized networking but also with newer high performance fabrics that use hardware-virtualization techniques

like SR-IOV, which grants VMs direct access to the network.

As a result, this removes the hypervisor from the communication path as well as the control over how VMs use the

fabric. The performance degradation from co-running set of

VMs is particularly acute for low latency applications used in

computational finance.

In this paper, we describe our approach called Distributed

Resource Exchange or DRX which offers hypervisor-level

methods to mitigate such inter-application interference in SRIOV-based cluster systems. We monitor VM Ensembles – a

set of VMs part of a distributed application – which enables

controls that apportion interconnect bandwidth across different

VMEs by implementing diverse cluster-wide policies. Two

policies are implemented in DRX: to assign ‘Equal Blame’

to interfering VMEs or to look at how much ‘Hurt’ they

are causing, and therefore showing the feasibility of such

distributed controls. The results demonstrate that DRX is able

to maintain SLA for low-latency codes to within 15% of the

baseline by controlling collocated data-analytic and parallel

workloads. Limitations of the DRX approach are primarily

due to its current method to mitigate interference, which is to

‘cap’ the VMs that over-use the interconnect and cause ‘hurt’.

Our future work, therefore, will consider utilizing congestion

control mechanisms present on current InfiniBand hardware to

mitigate the sending rate of certain QPs, in order to remove

our reliance on CPU Capping.

R EFERENCES

D. Abramson et al. Intel Virtualization Technology for Directed I/O.

Intel Technology Journal, 10(3), 2006.

[2] AMD I/O Virtualization Technology. http://tinyurl.com/a6wsdwe.

[3] H. Ballani, K. Jhang, T. Karagiannis, and C. K. et. al. Chatty Tenants

and the Cloud Network Sharing Problem. In NSDI, 2013.

[4] M. Ben-Yehuda, J. Mason, O. Krieger, J. Xenidis, L. V. Dorn,

A. Mallick, J. Nakajima, and E. Wahlig. Utilizing IOMMUs for

Virtualization in Linux and Xen. In Ottawa Linux Symposium, 2006.

[5]

B. Briscoe and M. Sridharan.

Network Performance Isolation in Data Centres using Congestion Exposure (ConEx), 2012.

http://datatracker.ietf.org/doc/draft-briscoe-conex-data-centre.

[6]

L. Cherkasova and R. Gardner. Measuring CPU Overhead for I/O

Processing in the Xen Virtual MachineMonitor. In USENIX ATC, 2005.

Y. Dong, Z. Yu, and G. Rose. SR-IOV Networking in Xen: Architecture,

Design and Implementation. In Proceedings of WIOV, 2008.

S. Govindan, A. R. Nath, A. Das, B. Urgaonkar, and A. Sivasubramaniam. Xen and co.: Communication-Aware CPU Scheduling for

Consolidated Xen-based Hosting Platforms. In VEE, 2007.

C. Guo, G. Lu, H. J. Wang, and S. Y. et. al. SecondNet: A Data Center

Network Virtualization Architecture with Bandwidth Guarantees. In

Proceedings of ACM CoNext, 2010.

D. Gupta, L. Cherkasova, R. Gardner, and A. Vahdat. Enforcing

Performance Isolation Across Virtual Machines in Xen. In Proc. of

MiddleWare, 2006.

V. Gupta, A. Ranadive, A. Gavrilovska, and K. Schwan. Benchmarking

Next Generation Hardware Platforms: An Experimental Approach. In

Proceedings of SHAW, 2012.

Apache Hadoop. http://hadoop.apache.org/.

High Performance Linpack. http://www.netlib.org/benchmark/hpl.

P. Key, D. Mcauley, P. Barham, and K. Laevens. Congestion Pricing

for Congestion Avoidance. Technical report, Microsoft Research, 1999.

K. Lai, L. Rasmusson, E. Adar, S. Sorkin, L. Zhang, and B. A.

Huberman. Tycoon: an Implemention of a Distributed Market-Based

Resource Allocation System. Technical report, HP Labs, Palo Alto,

CA, USA, 2004.

T. Lam, S. Radhakrishnan, A. Vahdat, and G. Varghese. NetShare:

Virtualizing Data Center Networks across Services. Technical Report

CS2010-0957, University of California, San Diego, 2010.

M. Lee, A. S. Krishnakumar, P. Krishnan, N. Singh, and S. Yajnik.

Supporting Soft Real-Time Tasks in the Xen Hypervisor. In VEE, 2010.

J. Liu. Evaluating Standard-Based Self-Virtualizing Devices: A Performance Study on 10 GbE NICs with SR-IOV Support. In IPDPS,

2010.

A. Menon, J. R. Santos, Y. Turner, G. J. Janakiraman, and

W. Zwaenepoel. Diagnosing Performance Overheads in the Xen Virtual

Machine Environment. In Proceedings of VEE, 2005.

J. Mogul and L. Popa. What We Talk About When We Talk About

Cloud Network Performance. ACM CCR, 2012.

R. Neugebauer and D. McAuley. Congestion Prices as Feedback Signals: An Approach to QoS Management. In ACM SIGOPS Workshop,

2000.

L. Popa, G. Kumar, M. Chowdhury, and A. K. et. al. FairCloud: Sharing

the Network in Cloud Computing. In ACM SIGCOMM, 2012.

X. Pu, L. Liu, Y. Mei, S. Sivathanu, Y. Koh, and C. Pu. Understanding

Performance Interference of I/O Workload in Virtualized Cloud Environment. In Proceedings of IEEE Cloud, 2010.

A. Ranadive, A. Gavrilovska, and K. Schwan. IBMon: Monitoring

VMM-Bypass InfiniBand Devices using Memory Introspection. In

HPCVirtualization Workshop, Eurosys, 2009.

A. Ranadive, A. Gavrilovska, and K. Schwan. ResourceExchange:

Latency-Aware Scheduling in Virtualized Environments with High

Performance Fabrics. In Proceedings of IEEE Cluster, 2011.

A. Ranadive, M. Kesavan, A. Gavrilovska, and K. Schwan. Performance

Implications of Virtualizing Multicore Cluster Machines. In HPCVirtualization Workshop, EuroSys, 2008.

P. V. Soares, J. R. Santos, N. Tolia, and D. Guedes. Gatekeeper:

Distributed Rate Control for Virtualized Datacenters. Technical Report

HPL-2010-151, HP Labs, 2010.

InterContinental Exchange. http://www.theice.com.

C. Wang, I. A. Rayan, G. Eisenhauer, K. Schwan, and et. al. VScope:

Middleware for Troubleshooting Time-Sensitive Data Center Applications. In MiddleWare, 2012.

H. Zeng, C. S. Ellis, A. R. Lebeck, and A. Vahdat. Currentcy: A

Unifying Abstraction for Expressing Energy Management Policies. In

Proceedings of the USENIX Annual Technical Conference, 2003.

[7]

[8]

[9]

[10]

[11]

[12]

[13]

[14]

[15]

[16]

[17]

[18]

[19]

[20]

[21]

[22]

[23]

[24]

[25]

[26]

[27]

[1]

[28]

[29]

[30]