Methods in Completing Performance Quality Improvement (PQI)


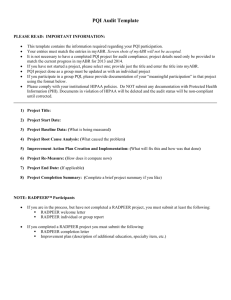

7/28/2012

Methods in Completing Performance Quality Improvement (PQI)
Jennifer L Johnson, Karen Brown, Geoffrey Ibbott, Todd Pawlicki
AAPM 54th Annual Meeting, Charlotte, NC
Professional Symposium, 4:30PM - 6:00PM

Disclosures
• None of the presenters have conflicts of interest to disclose.

PQI
• After this course attendees will be better able to
  – Identify and define a PQI project
  – Identify and select measurement methods/techniques for use in the PQI project
  – Describe example(s) of completed projects

Outline
• Introduction and the ABR
• PDSA
• Resources and Tools
  – Incident learning systems, RCA
  – FMEA
  – Control Chart
  – Fishbone
  – Process Map
  – Pareto

ABR PQI Update
July 30, 2012
Geoffrey S. Ibbott, Ph.D.
Slides from David Laszakovits, M.B.A., Milton Giberteau, M.D., and James Borgstede, M.D.

Topics
1. MOC At-A-Glance
2. Practice Quality Improvement
3. Public Reporting and Continuous Certification

WHO IS ABMS?
• ABMS sets the standards for the certification process to enable the delivery of safe, quality patient care
• ABMS is the authoritative resource and voice for issues surrounding physician certification
• The public can visit certificationmatters.org to determine if their doctor is board certified by an ABMS Member Board

WHAT IS ABMS MOC™?
• A process designed to document that physician specialists, certified by one of the Member Boards of ABMS, maintain the necessary competencies to provide quality patient care
• ABMS MOC promotes continuous lifelong learning for better patient care

ABMS of the Future
• More robust
• More legislatively active
• Continuous MOC rather than 10 year cycles
• Involvement and promotion of institutional MOC
• Significant presence of primary care boards in ABMS governance
• Competition from rogue organizations for stature

MOC Components
Part I: Professional Standing
• State Medical Licensure
Part II: Lifelong Learning and Self-Assessment
• Category 1 CME and Self-Assessment Modules (SAMs)
Part III: Cognitive Expertise
• Proctored, secure exam
Part IV: Practice Performance
• Practice Quality Improvement (PQI)

Topics
1. MOC At-A-Glance
2. Practice Quality Improvement (current topic)
3. Public Reporting and Continuous Certification

PQI Evolution

PQI Essential Elements
• Select project, metric(s), and goal
• Collect baseline data
• Analyze data
• Create and implement improvement plan
• Re-measure
• Self-reflection

ABR Individual and Group PQI Templates*
*Templates include all essential elements needed to comply with ABR “meaningful participation” requirements

The Quality Improvement Process (cycle)
• Identify area needing improvement
• Devise a measure
• Set a goal
• Carry out the measurement plan
  – Collect data
• Analyze the data
• Compare to goal
• Root Cause Analysis
• Develop an improvement plan
• Implement for cycle #2

Group PQI Criteria
• Group consists of 2 or more ABR diplomates
• Group Project Team Leader designated
  – Team organization, meetings and record keeping
  – Must document team participation
• Project may be group designed, society-sponsored, or involve a registry
• Requires at least 3 team meetings:
  – Project organization meeting
  – Data and root cause analysis meeting
  – Improvement plan development

Individual Participant: “Meaningful Participation”
• Individual diplomate MOC PQI credit requires:
  – Documented attendance at ≥ 3 team meetings
  – Preparation of a personal self-reflection statement describing the impact of the project on the group practice and patient care
  – Attestation on ABR Personal Database (PDB)
  – Access to project records in the event of an ABR MOC audit

Changes in PQI Attestation
[Screenshot, watermarked DEMO PAGE]

Attestation continued…
[Screenshots, watermarked DEMO PAGE]

Topics
1. MOC At-A-Glance
2. Practice Quality Improvement
3. Public Reporting and Continuous Certification (current topic)

[Diagram: Specialty Board Certification, Private Not-for-Profits, State Medical Licensure, Quality Organizations]

Changing Landscape
• Relevance of ABMS/ABR certification must be demonstrated to the public, payers and the government
• Medicine is experiencing a fusion of economics, quality, safety and reimbursement, so we must work together to effectively project and promote our specialty for the benefit of our patients
• Accountability and transparency remain the watchwords for the new millennium

[Diagram: Certification MOC, Private Not-for-Profits, Maintenance of Licensure (MOL), Quality Organizations]

Timeline Leading to ABMS Public Reporting
• March 2009: ABMS BOD adopted a standards document that included a call for ABMS to make info about certificate status dates and MOC participation status available to the public
• June 2010: ABMS BOD approved a two-part resolution:
  (1) approved public display of MOC participation by ABMS starting Aug 2011
  (2) MOC participation status reported using three primary designations:
    – “Meeting the Requirements” of MOC
    – “Not Meeting the Requirements” of MOC
    – “Not Required to Participate” in MOC (Lifetime Certificates)

About Public Reporting
• If not us, then who?

ABMS Public Reporting cont…
• May 2011: ABMS MOC Meeting: National Credentialers appeared as guests and stated interest in some way to verify MOC participation through ABMS.
• It was recognized that the boards needed time to create communications and reach out to their diplomates, some of whom would likely want to enroll in MOC.
• June 2011: ABMS offered extensions of one year to boards who wanted more time for communication.
• ABR’s request for the maximum one-year extension was granted.

ABR Response to ABMS Public Reporting Requirements
• ABR online verification of board eligibility and MOC participation statuses in coordination with ABMS reporting
• Link from ABMS site to ABR site for further clarification on various statuses
• Diplomate look-up tool
• Immediate, current verification status

Continuous Certification
• Certificate will no longer have a “valid through” date; instead, continuing certification will be contingent on meeting MOC requirements
• Annual look-back used to determine MOC participation status
• No change in MOC requirements or fees

How does it work?

| MOC Year | Look-back date | Element(s) Checked¹ |
|---|---|---|
| 2013 | 3/15/2014 | Licensure |
| 2014 | 3/15/2015 | Licensure |
| 2015 | 3/15/2016 | Licensure, CME, SAMs, Exam, and PQI |
| 2016 | 3/15/2017 | Licensure, CME, SAMs, Exam, and PQI |
| 2017 | 3/15/2018 | Licensure, CME, SAMs, Exam, and PQI |
| 2018 | 3/15/2019 | Licensure, CME, SAMs, Exam, and PQI |
| 20XX | 3/15/20XX | Licensure, CME, SAMs, Exam, and PQI |

¹ Status Check for “Meeting Requirements”

| Element | Compliance Requirement |
|---|---|
| Licensure | At least one valid state medical license |
| CME | At least 75 Category 1 CME in previous 3 years |
| SAMs | At least 6 SAMs in previous 3 years |
| Exam | Passed any ABR Certifying or MOC exam in previous 10 years |
| PQI | Completed at least 1 PQI project in previous 3 years |

Advantages of Continuous Certification
• If you have two or more time-limited certificates, they are synchronized.
• The number of CME and SAMs you can count per year is unlimited
• You may take the MOC exam at any time, as long as the previous MOC exam was passed no more than 10 years ago
• Built-in “catch-up” period of one year (still certified)
• Aligns reporting more closely with CMS, TJC, credentialing and state licensing boards

Thank You! Questions?

PDSA
Quality Improvement Methodology
[Cycle diagram: Plan, Do, Study, Act]
• Powerful
• Versatile
• Simple

Plan
• Identify a project
• Ask questions
• Make predictions
• Set goals
• Identify data to be collected

Do
• Carry out the plan

Study
• Compare
• Analyze
• Summarize

Act
• What changes will be made?

Group Projects
• Individual participation
• Access to project materials
• Group structure
• Meeting minutes

“…to be successful at improvement, it takes the will to improve, ideas for improvement, and the skills to execute the changes.”
The Improvement Guide: A Practical Approach to Enhancing Organizational Performance

Contact Information
Karen Brown, MHP, CHP, DABR
Penn State College of Medicine
Milton S. Hershey Medical Center
Email: kbrown4@hmc.psu.edu
P: 717-531-5027

Resources
• Langley, Gerald J.; Moen, Ronald D.; Nolan, Kevin M.; Nolan, Thomas W.; Norman, Clifford L.; Provost, Lloyd P. (2009-06-03). The Improvement Guide: A Practical Approach to Enhancing Organizational Performance (Jossey-Bass Business & Management Series). Wiley Publishing. Kindle Edition.
• The American Board of Radiology. Maintenance of Certification Part IV: Practice Quality Improvement (PQI) 2012. http://www.theabr.org/sites/all/themes/abr-media/PQI_2012.pdf
• Heath, Chip; Heath, Dan (2007-01-02). Made to Stick: Why Some Ideas Survive and Others Die. Random House, Inc. Kindle Edition.

Image Resources
• iStockphoto www.istockphoto.com
• photoXpress www.photoxpress.com
• Everystockphoto www.everystockphoto.com

PQI – Control Charts, Event Reporting, and FMEA
Todd Pawlicki

Elements of PQI Projects
• Relevance to patient care
• Relevance to diplomate's practice
• Identifiable metrics and/or measurable endpoints
• Practice guidelines and technical standards
• An action plan to address areas for improvement
  – Subsequent remeasurement to assess progress and/or improvement
http://www.theabr.org/moc-ro-comp4

Error Management
• Three approaches to error management
  – Reactive, Proactive, Prospective
  – Incident learning systems
    • Reactive & Proactive
  – Failure Modes & Effects Analysis
    • Prospective

Basis for Understanding Statistical Process Control
[Figure labels: Tolerance Limits, Action Limits, Accept, Target]

Control Charts: Individual Values
XmR chart (individual values, n = 1; the constant 1.128 is d2 for n = 2). UNPL and LNPL are the upper and lower natural process limits:

$$\bar{x} = \frac{1}{N}\sum x$$

$$\mathrm{UNPL} = \bar{x} + 3\cdot\frac{\overline{mR}}{1.128}$$

$$\mathrm{LNPL} = \bar{x} - 3\cdot\frac{\overline{mR}}{1.128}$$

[Figure: X chart of individual values vs. sample number or time, and mR chart of moving range vs. sample number or time]

Two Example Control Charts
• Clinical specifications
  – Set process requirements
• Control chart limits
  – Quantify process performance
Pawlicki, Yoo, Court et al. Radiother Oncol 2008

Event Reporting System
http://www.ihe.ca/publications/library/archived/a-reference-guide-for-learning-fromincidents-in-radiation-treatment

Investigation
• All incidents are investigated
• Depth and priority of investigation depend on
  – Severity of incident
  – Frequency of occurrence
• Assessment
  – Impact and process domain(s)
• Report
  – Causal analysis, corrective actions, and follow-up

Choosing A Project From Events
• By type
  – Clinical, occupational, operational, environmental, security/other
• By impact
  – Critical, major, serious, minor
  – Near miss
• By domain
  – Where in the Radiation Treatment process did the incident occur?

Corrective Action
• Actions to address causes
  – Target to improve system performance
• Integrate with other business processes
  – Capital budgeting
  – Change management
  – Training
• Assign to individuals
• Follow up reports / data

Learning
• Lessons learned are distilled and communicated
• Supervisor responsible for communication
• Quality Assurance Committee responsible for overall review of incident patterns
• Communication requirements depending on incident severity
  – Stop the press vs. Dept email

Example: Forgetting bolus
[Figure: Control Chart]

FMEA
• Failure Modes and Effects Analysis
  – Provides a structured way of prioritizing risk reduction strategies.
  – Helps to focus efforts aimed at minimizing adverse outcomes.

FMEA – Background
• History
  – Developed by the aerospace industry (~1960s)
    • In the electromechanical age
  – Widely applied in automotive and airline industries
• Use
  – Most effective when applied before a design is constructed
  – Primarily a prospective tool

FMEA – Vocabulary
• Failure Mode: How a part or process can fail to meet specifications.
• Cause: A deficiency that results in a failure mode; sources of variation.
• Effect: Impact on customer if the failure mode is not prevented or corrected.

Risk Priority Number (RPN)
Risk Priority Number = Severity × Probability of Occurrence × Probability of NOT being detected

FMEA – Metrics
• Occurrence (O)
  – Probability that the failure mode occurs
• Severity (S)
  – Severity of the effect on the final outcome resulting from the failure mode if it is not detected
• Lack of Detectability (D)
  – Probability that the failure will NOT be detected

Processes leading to LDR Implant
[Process diagram; legend: No input/control, Responsible for operation]
• Initial Patient Consult: 1) MD consult, 2) H&P, 3) Database entry, 4) Prescription dictated
• Treatment Plan: 5) Source type selected, 6) Hand calculation, 7) Treatment plan, 8) Source activity selected
• Source Acquisition: 9) Sources ordered, 10) Sources inventoried, 11) Sources delivered to Radiation Oncology, 12) Sources inventoried into Rad Onc
• Plaque Preparation: 13) Calibration check, 14) Assembly, 15) Sterilization
• Implant: 16) Plaque insert, 17) Patient survey, 18) Room survey
• Outcome: Successful LDR Implant
Slide courtesy of Dan Scanderbeg

FMEA results, listed by process step

| Process Step | Potential Failure Mode | Effect of Failure Mode | O rank | S rank | D rank | RPN |
|---|---|---|---|---|---|---|
| 5) Source type selected | Wrong source type selected | Wrong dose delivered | 7 | 9 | 9 | 567 |
| 6) Hand calc | Wrong depth or duration | Wrong dose delivered | 8 | 9 | 7 | 504 |
| 7) Tx Plan | Wrong depth or duration | Wrong dose delivered | 9 | 9 | 7 | 567 |
| 8) Source activity selected | Wrong source activity selected | Wrong dose delivered | 7 | 9 | 6 | 378 |
| 9) Order placed | Wrong activity ordered | Wrong dose delivered | 5 | 8 | 6 | 240 |
| 14) Assembly | Improper equipment used | Wrong dose delivered/geographic miss | 7 | 9 | 9 | 567 |
| 14) Assembly | Improper construction | Seeds migrate | 6 | 9 | 4 | 216 |
| 15) Sterilization | Improper handling | Seeds migrate | 5 | 9 | 4 | 180 |

Slide courtesy of Dan Scanderbeg

FMEA results, sorted in order of RPN (high to low); RPN ~ 550 used as cutoff

| Process Step | Potential Failure Mode | Effect of Failure Mode | O rank | S rank | D rank | RPN |
|---|---|---|---|---|---|---|
| 5) Source type selected | Wrong source type selected | Wrong dose delivered | 7 | 9 | 9 | 567 |
| 7) Tx Plan | Wrong depth or duration | Wrong dose delivered | 9 | 9 | 7 | 567 |
| 14) Assembly | Improper equipment used | Wrong dose delivered/geographic miss | 7 | 9 | 9 | 567 |
| 6) Hand calc | Wrong depth or duration | Wrong dose delivered | 8 | 9 | 7 | 504 |
| 8) Source activity selected | Wrong source activity selected | Wrong dose delivered | 7 | 9 | 6 | 378 |
| 9) Order placed | Wrong activity ordered | Wrong dose delivered | 5 | 8 | 6 | 240 |
| 14) Assembly | Improper construction | Seeds migrate | 6 | 9 | 4 | 216 |
| 15) Sterilization | Improper handling | Seeds migrate | 5 | 9 | 4 | 180 |

Slide courtesy of Dan Scanderbeg

Example of data tracking
• Over 3 weeks
  – Physics brachy schedule was logged using Google Documents
• Use web-based form to gather data into Excel-type form for analysis.
[Form fields: Case Identifier, Physician, Type of Case, Scheduled Time of Case, Scheduled Duration of Case, Physics Start Time for Case, Physics Stop Time for Case, Paperwork & Notes]
Slide courtesy of Dan Scanderbeg
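
The RPN ranking shown in the FMEA tables can be reproduced with a few lines of code. The following is a minimal Python sketch, not the presenters' tool: `FailureMode` and `rank_by_rpn` are hypothetical names, the O, S and D ranks are copied from the slide tables, and reading the "RPN ~ 550" cutoff as "RPN at or above 550" is an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FailureMode:
    """One FMEA row; the ranks are the O, S and D columns of the slide tables."""
    step: str
    failure: str
    occurrence: int      # O rank
    severity: int        # S rank
    detectability: int   # D rank (lack of detectability)

    @property
    def rpn(self) -> int:
        # Risk Priority Number = Severity x Occurrence x Lack of Detectability
        return self.severity * self.occurrence * self.detectability


# Rows in process-step order, values taken from the slide tables.
FAILURE_MODES = [
    FailureMode("5) Source type selected", "Wrong source type selected", 7, 9, 9),
    FailureMode("6) Hand calc", "Wrong depth or duration", 8, 9, 7),
    FailureMode("7) Tx Plan", "Wrong depth or duration", 9, 9, 7),
    FailureMode("8) Source activity selected", "Wrong source activity selected", 7, 9, 6),
    FailureMode("9) Order placed", "Wrong activity ordered", 5, 8, 6),
    FailureMode("14) Assembly", "Improper equipment used", 7, 9, 9),
    FailureMode("14) Assembly", "Improper construction", 6, 9, 4),
    FailureMode("15) Sterilization", "Improper handling", 5, 9, 4),
]


def rank_by_rpn(modes: List[FailureMode],
                cutoff: int = 550) -> Tuple[List[FailureMode], List[FailureMode]]:
    """Return (all modes sorted high to low by RPN, modes at or above the cutoff).

    The cutoff semantics (>=) are an assumption; the slide only says "~ 550".
    sorted() is stable, so ties keep their process-step order.
    """
    ranked = sorted(modes, key=lambda m: m.rpn, reverse=True)
    flagged = [m for m in ranked if m.rpn >= cutoff]
    return ranked, flagged


ranked, flagged = rank_by_rpn(FAILURE_MODES)
for mode in ranked:
    marker = "*" if mode in flagged else " "
    print(f"{marker} {mode.rpn:4d}  {mode.step}: {mode.failure}")
```

With the slide values, the three rows with RPN 567 fall above the assumed cutoff, which matches the top of the sorted table.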
Example of Analysis
• Results
  – 20 of 26 cases (77%) finished later than scheduled
  – Cases finished later than scheduled time
    • Max = 78 min
    • Ave = 31.5 min
  – 8 occurrences of cases booked back-to-back
  – 4 occurrences of cases double-booked

Next Steps
• Create intervention to improve processes
• Document results

Summary
• Control charts for analysis and deciding when to act
• Event Recording System to identify issues
• FMEA to prioritize effort

PQI – Fishbone, Process Maps and Pareto Charts
Jennifer L Johnson, MS, MBA

Fishbone Diagram
• Cause-and-effect diagram, Ishikawa diagram
• Identifies many possible causes for an effect or problem
  – Brainstorming
  – Sorts ideas into useful categories
Tague, N R. The Quality Toolbox 2005

Fishbone Diagram
• Cause enumeration diagram
  – Brainstorm causes, then group to determine headings
• Process fishbone
  – Develop flow diagram of process steps (<10)
  – Fishbone each process step
• Time-delay fishbone
  – Allow people to add over time (1-2 weeks)
Tague, N R. The Quality Toolbox 2005

Fishbone Diagram
[Figure: fishbone diagram; visible branch labels: ENVIRONMENT, PEOPLE, EQUIPMENT]

Process Maps
• Graphical representation of sequence of tasks and activities from start to finish
  – Flow of inputs, resources, steps, and processes to create an output
  – May be color-coded by participant(s)
  – Value-added vs. nonvalue-added steps
Tague, N R. The Quality Toolbox 2005

Process Maps
• “As-is”: depicts actual, current process in place
• “To-be”: depicts future after changes and improvements
• Difference: value-added vs. nonvalue-added steps
• Single diagram or hierarchy of diagrams
Tague, N R. The Quality Toolbox 2005

[Figure: Level 0 process flow map for opening an oncology clinical trial. Dilts D M, Sandler A B. JCO 2006;24:4545-4552. ©2006 by American Society of Clinical Oncology]
[Figure: Perks et al. IJORP 83(4) 2012]

Pareto Chart
• Bar graph
  – Length of bars represents frequency or cost (money or time)
  – Arranged from longest (left) to shortest (right)
• Analyze frequency of causes or problems
  – Visually shows which situations are more significant
Tague, N R. The Quality Toolbox 2005

Pareto Chart
• Decide categories to group items
• Decide appropriate measurement
  – Frequency, quantity, cost, or time
• Decide period of time
• Collect data, recording category, or assemble existing data
Tague, N R. The Quality Toolbox 2005

Pareto Chart
• Subtotal measurements in each category
• Determine appropriate scale (y-axis)
• Construct, label bars for each category
• Optional
  – Calculate percentage (%) for each category (right vertical axis)
  – Calculate, draw cumulative sums (%)
Tague, N R. The Quality Toolbox 2005

Pareto Chart
• Pareto Principle: 80% of effect comes from 20% of the causes
• Measurement choice
  – Reflective of costs preferred (dollars, time, etc.)
  – If causes have equal weighting in costs, use frequency
• Weighted Pareto chart (to normalize equal opportunities)
Tague, N R. The Quality Toolbox 2005

AIM Statement
To increase the rate of patient-specific quality assurance (PSQA) prior to the first treatment to 100% by July 2011

Baseline Metric
IMRT Avg. Compliance Rate = 71.4%

Cause Analysis
• Create & evaluate process flow
• Identify potential causes of failures
• Create & evaluate tracking data (times, bottlenecks)
• ID & examine cases in which QA was not completed

Simulation to Start Treatment Patient Flow
[Flowchart; legend: Physician, Therapy, Dosimetry, Physics, Patient, Issue. Steps and decision points recovered from the figure, in approximate order:]
1. START: Simulation completed (Therapist)
2. Was patient given start date? (No: Why not?)
3. Is it 5 business days? (No: Why not?)
4. Are all data available to do contours? (No: When available?)
5. Dosimetry prepare for contouring; MD notified
6. Contours completed; Dosimetrist notified
7. Planning objectives provided? (No: Why not?)
8. Proceed to planning; Dosimetrist completes plan; MD notified; MD reviews plan
9. Plan approved? If IMRT, before 4PM (No: Why not? Plan reworked?)
10. Dosimetrist processes plan in Mosaiq
11. Is script in Mosaiq? (No: MD notified)
12. MD notified to sign plan in Mosaiq
13. Quality checklist item generated by 4pm
14. QA/Chart Check prior to beginning XRT
15. QA/Chart Check approved? (No: Why not? Patient start date changed? MD notified? Dry run? Does patient start without QA?)
16. Patient starts: END

Pareto Diagram of Physics Review IMRT QA "After Tx" Causes, Sep 2010 - Feb 2011
[Figure: Pareto chart; left axis: Number of Causes (0 to 80); right axis: Cumulative Percent (0% to 100%); total 165 cases]
• LSDA (Late / Same Day Approval)
• ES (Early Start)
• PH (Physics Cause)
• BST (Boost Plan)

Interventions
• Division Grand Rounds (Jan 2011)
  – Communicated importance of QA
  – Discussed ACR accreditation
  – Developed support from faculty and staff
• Division Guidelines (Apr 1, 2011)
  – Eliminate late approvals for IMRT
  – Eliminate early patient start times for IMRT
  – IMRT QA and physics chart check prior to first treatment now required

After Intervention
IMRT Avg. Compliance Rate = 99.3%

Acknowledgments
• CS&E team
  – Prajnan Das
  – Lei Dong
  – James Kanke
  – Michael Kantor
  – Beverly Riley
  – Tatiana Hmar-Lagroun
• MDACC faculty & staff
  – Thomas Buchholz
  – Liao
  – Geoffrey Ibbott
  – Michael Gillin
  – Rajat Kudchadker
  – John Bingham
• Q&S Council members

References
• Tague, Nancy R. The Quality Toolbox. 2nd Edition. Milwaukee, Wisconsin: ASQ Quality Press, 2005.
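
The Pareto construction steps from the Pareto Chart slides (subtotal each category, sort from largest to smallest, then compute percentages and cumulative sums) can be sketched in Python as follows. This is an illustrative sketch only: `pareto_table` is a hypothetical helper, and the per-category counts are invented placeholders chosen to sum to the 165 cases on the slide, because the slide gives only the category names and the total.

```python
from typing import Dict, List, Tuple


def pareto_table(counts: Dict[str, int]) -> List[Tuple[str, int, float, float]]:
    """Return rows of (category, count, percent, cumulative percent).

    Rows are ordered from the largest count to the smallest, which is the
    left-to-right bar order of a Pareto chart.
    """
    total = sum(counts.values())
    if total <= 0:
        raise ValueError("need at least one counted item")
    rows = []
    running = 0
    ordered = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    for category, count in ordered:
        running += count
        rows.append((category, count,
                     100.0 * count / total,
                     100.0 * running / total))
    return rows


# PLACEHOLDER counts (not from the slides); they only sum to the stated total of 165.
PLACEHOLDER_COUNTS = {"PH": 25, "LSDA": 75, "BST": 15, "ES": 50}

rows = pareto_table(PLACEHOLDER_COUNTS)
print(f"{'Cause':<6}{'N':>5}{'%':>8}{'Cum %':>8}")
for category, count, pct, cum_pct in rows:
    print(f"{category:<6}{count:>5}{pct:>8.1f}{cum_pct:>8.1f}")
```

The cumulative column is what the right-hand axis of the Pareto diagram plots; the categories where it first crosses roughly 80% are the usual candidates for intervention.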