Document 14181053

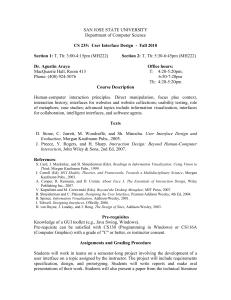
