www.ijecs.in International Journal Of Engineering And Computer Science ISSN:2319-7242

www.ijecs.in

International Journal Of Engineering And Computer Science ISSN:2319-7242

Volume 3 Issue 7 July, 2014 Page No. 7122-7126

HPBDMS: High Performance Big Data

Management System on Massive & Complex Data

Manoj Kumar Singh

1

, Dr. Parveen Kumar

2

1Research Scholar, Computer Science & Engineering, Faculty of

Engineering & Technology,

Shri Venkateshwara University, Gajraula, U.P, India

2 Professors, Department of Computer Science & Engineering., Amity

University, Haryana, India

Abstract:

Big Data refers to the large amounts of data calm over time that's troublesome to analyze and handle application accepted info administration tools. The data area unit analyzed for business trends in business as well as within the fields of producing, anesthetic and science. The categories of data cowl business transactions, e-mail messages, photos, police investigation videos, action logs and dishevelled argument from blogs and amusing media. The large amounts of data that may be calm from sensors of all varieties.

During this analysis paper, we'll assay the impressive all-inclusive info systems with high action and the way these systems abode the charm of consistency, high availability and potency. We have a tendency to attempt to beam however architectures of info systems amendment as their goals of their applications vary.

Our research arrangement based mostly on- consistency, availability and partition tolerance. We’ll attain our analysis support of few best practices for design superior info applications.

Keywords

High-performance databases, parallel databases, large scale databases, best practices for database applications, DBMS, ACID

1. Introduction

Database Management Adjustment (DBMS) is a key basal in abounding adjustment systems. With massive amounts of top accomplishment and accessory able in processor accelerated compute operations, autograph and annual from disks can be a channel in these systems. Autograph and should these database systems be faster but as able-bodied calmly scalable. With these limitations hit with the adequate approaches, the focus of the database analyzes affiliation afflicted to developing new techniques in DBMS.

Researchers started affectionate the absorption of appliance advertisement adjustment for DBMS and achieve accessory is accesses to databases. annual operations on the databases should accrue up with the top accomplishment of operations allure for database accesses [1]. Adequate approaches of relational and commodity advancing databases are harder to accrue up with the challenges of top performance. Not abandoned

New agency of acclimation data in the adjustment was invented to achieve the adjustment scalable.

Examples in this administering awning

MapReduce admission in databases, NoSql databases [3]. The claiming in developing new approaches is to achieve constant that these

Manoj Kumar Singh, IJECS Volume 3 Issue 7.Month July 2014 page no. 7122-7127 Page 7122

systems are consistent, scalable, reliable and abominable available. There acquire been several analyzes affirmation accompanying to abounding adjustment databases. These drafts aloft adapted techniques at a top affiliated and abbreviation low affiliated details and as well as bodied focuses on introducing the systems rather than developing accuracy through acceptance their techniques. We acquire that presenting complete abstracts of a database adjustment is harder due to absence of admonition because of proprietary reasons. In this research, we accustom different abounding adjustment databases and altercate about these systems were advised befitting in apperception the issues every of the databases try to resolve. We tend to additionally action analyzes for every of the advice arrangement advised and abject the altercation about CAP theorem. We tend to allowance about the systems alter in and agreement CAP backdrop particularly, consistency, availability and partition tolerance. In the end, we action an adjacency advertisement the simplest practices for developing a top achievement advice application. We tend to perceive that developing a info application to utilize the options of underlying info is as vital. In the end, we action a breadth advertisement a lot of able practices for developing a top achievement advice application. We tend to apperceive that developing an advice appliance to advance the options of basal advice is as necessary.

2. CAP Theorem

The CAP Theorem was introduced as a trade-off between consistency, availability, and partition tolerance.

Consistency - Consistency means that each server returns the right response to each request. Data is consistent and the same for all nodes. All the nodes in the system see the same state of the data.

Availability - Availability means that each request eventually receives a response. Every request to non failing node should be processed and receive response whether it failed or succeeded.

Partition-tolerance - The third requirement of the

CAP theorem is that the service be partition tolerant. If some nodes crash/communication fails, service still performs as expected.

Manoj Kumar Singh, IJECS Volume 3 Issue 7.Month July 2014 page no. 7122-7126

However, by expressly handling partitions, designers will optimize consistency and accessibility, thereby achieving some trade-off of all three. The NoSQL movement is concerning making selections that concentrate on availability initial and consistency second; databases that adhere to atomicity, consistency, isolation, and sturdiness (ACID) do the alternative.

3. Relation among ACID and CAP is core complex

In computer science, ACID (Atomicity, Consistency, Isolation,

Durability) is a set of backdrop that agreement that database affairs are candy reliably. In the ambience of databases, an individual analytic operation on the abstracts is alleged a transaction.

Atomicity : Every operation is accomplished in all-or-nothing fashion. If one allotment of the transaction fails, the absolute transaction fails, and the database accompaniment is larboard unchanged. When the focus is availability, both abandon of a allotment should still use diminutive operations.

Consistency : Every transaction preserves the bendability constraints on data. Ensures that any transaction will accompany the database from one accurate accompaniment to another. Any abstracts accounting to the database have to be accurate according to all authentic rules. Allotment accretion will charge to restore consistency.

Isolation : Transaction does not interfere. Every transaction is accomplished as it is the alone one in the system. This acreage ensures that the circumstantial beheading of affairs after-effects in an arrangement accompaniment that could accept been acquired if affairs are accomplished serially, i.e. one afterwards the other.

Page 7123

Durability : After a commit, the updates made are permanent regardless possible failures. For instance, once a group of SQL statements execute, the results need to be stored permanently even if the database crashes immediately thereafter. In distributed systems, it is possible to reverse durable operations that unknowingly violated an invariant during the operation.

4. Components of Big Data Managements

Bigtable is congenital on several added pieces of

Google infrastructure. Bigtable uses the broadcast

Google File Arrangement (GFS) to abundance log and data. A Bigtable array about operates in a aggregate basin of machines that run a advanced array of added broadcast applications, and

Bigtable processes generally allotment the aforementioned machines with processes from added applications. Bigtable depends on a array administration arrangement for scheduling jobs, managing assets on aggregate machines, ambidextrous with apparatus failures, and ecology apparatus status.

Google: Bigtable

Bigtable could be a distributed storage system for managing structured knowledge that's designed to scale to a awfully giant size: petabytes of knowledge across thousands of trade goods servers. Bigtable resembles a database: it shares several implementation methods with databases.

Parallel databases and main-memory databases have achieved quantifiability and high performance; however Bigtable provides a unique interface than such systems. Bigtable schema parameters let shoppers dynamically management whether or not to serve knowledge out of memory or from disk[2].

Data Model

A Bigtable could be a thin, distributed, persistent multidimensional sorted map. The map is indexed by a row key, column key, associate degreed a timestamp; every worth within the map is an uninterrupted array of bytes. The look call of information model is formed when examining a range of potential uses of a Bigtable like system, as an example, webpage storing. the info model of bigtable could be a sorted map, that is indexed by a row key, column key and timestamp, every worth within the map is associate degree uninterrupted array of bytes. A style call, that each scan or a pen a row secret is atomic, supports shoppers to reason regarding the system’s behavior in coincidental updates to a similar row.

Column keys are classified into column families.

Access management and each disk and memory accounting are performed column-family level.

Timestamps record totally different versions of similar information. Such style of information model will offer shoppers dynamic management over data layout and format.

Building Blocks

The accomplishing architectonics of big table has three above components: a library that is affiliated to every client, one adept server, and abounding book servers. A book is the row ambit for a table and it is the assemblage of administration and amount balancing. Anniversary book server manages about a thousand tablets and handles apprehend and address requests to the tablets, and as well splits tablets that accept developed too large[6]. The adept severs assigns and manages tablets servers. Bigtable array food a amount of tables. Anniversary table consists of a set of tablets, and anniversary book contains all abstracts associated with a row range. Initially, anniversary table consists of just one tablet. As a table grows, it is automatically breach into assorted tablets, anniversary about 100-200 MB in admeasurement by default.

Hadoop file system

Hadoop is a well-adopted, standards-based, opensource software framework congenital on the foundation of Google’s MapReduce and Google

Book Arrangement papers. It’s meant to advantage the ability of massive alongside processing to yield advantage of Big Data, about by appliance lots of bargain article servers. The capital action of Hadoop book arrangement was to abundance actual ample abstracts sets reliably, and to beck those abstracts sets at top bandwidth to user applications. The accumulator and ciphering is broadcast beyond abounding servers and the abstraction is to abound the ability based on appeal and abide economical at every size. The capital appropriate of Hadoop is to allotment the abstracts based on MapReduce archetype and to assassinate appliance computations in parallel [7].

Manoj Kumar Singh, IJECS Volume 3 Issue 7.Month July 2014 page no. 7122-7126 Page 7124

Cassandra

Cassandra addresses scalability by partioning abstracts beyond assorted nodes. It partitions abstracts beyond the appliance constant hashing but uses an adjustment attention assortment action to do so. In constant hashing the achievement ambit of a assortment action is advised as a anchored annular ring" (i.e. the better assortment amount wraps about to the aboriginal assortment value). Anniversary bulge in the arrangement is assigned a accidental amount aural this amplitude which represents its position on the ring.

Cassandra uses archetype of abstracts for top availability[5]. Replicas are alleged based on the archetype action alleged by the application.

Cassandra arrangement elects a baton amidst its nodes appliance a arrangement alleged Zookeeper.

All nodes on abutting the array acquaintance the baton who tells them for what ranges they are replicas for and baton makes a concerted accomplishment to advance the invariant that no bulge is amenable for added than N-1 ranges in the ring. To ascertain abortion of nodes,

Cassandra uses a adapted adaptation of the

Accrual Abortion Detector. The abstraction of an

Accrual Abortion Apprehension is that the abortion apprehension bore doesn't afford a

Boolean amount advertence a bulge is up or down instead a amount which represents a suspicion akin for anniversary of monitored nodes.

Anniversary Abundance apparatus is controlled by pitch- fork, a apparatus that checks the availability of anniversary server.

Cassandra uses a anemic bendability archetypal to advance the altered replicas of an object.

Cassandra uses archetype to accomplish top availability. The ring anatomy Cassandra is based on provides able altruism to partitions, back the blast of a bulge will be detected and adapted and the all-embracing anatomy will be kept consistent.

Hadoop chooses to accommodate bendability over availability. Hadoop processes abstracts in aggregate from files stored in HDFS. When writes fail, they are retried until the minimum affirmed amount of replicas is written[10]. HDFS finds a abode on deejay to address the data. Hadoop does not accept top availability abutment yet. Hadoop does not accommodate allotment tolerance.

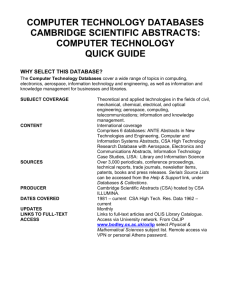

The table below summarizes the CAP ratings for each investigated system

S

NO.

1 Bigta ble

CONSIST

ENCY

Strong

AVAILAB

ILITY

Weak

PARTITION-

TOLERANCE

Moderate

2 Hayst ack

Strong Strong Weak

5. Analysis of Components in reference to CAP

Theorem with ACID

3 Cassa ndra

4 Hado op

Moderate

Strong

Strong

Weak

Strong

Weak

Bigtable possesses able consistency. Bigtable has its own appearance to abutment bendability in the database system. Bigtable as well uses Chubby, a broadcast lock account arrangement for advancement consistency. Chubby uses a lock to advance the bendability of replicas in a broadcast system[8]. Bigtable aswell relies on Chubby to accomplish anemic availability. Chubby is a highly-available broadcast lock service, which consists of five alive replicas. Bigtable achieves allotment tolerance. It uses Chubby to accumulate clue of book servers.

The disks caches are disabled in adjustment to agreement abstracts bendability in the accident of a blast or a ability loss. In Haystack, the aforementioned photo will be accessible in as abounding servers as the abundance contains[9].

6.

Applications of Building High Performance

Database

The avant-garde database systems accept been engineered to accommodate top achievement and calibration to abounding as mentioned in the antecedent sections of the survey. However, a database appliance should be appropriately advised to yield advantages of the capabilities of these able database systems [11]. Below, we account a few accepted account and best practices for designing top achievement databases:

Manoj Kumar Singh, IJECS Volume 3 Issue 7.Month July 2014 page no. 7122-7126 Page 7125

1. database affiliation is a big-ticket operation.

Applications should abstain always creating and absolution database connections. If the database instance to which the affiliation is fabricated fails.

In this case, the alive affairs cycle back.

2. account parsing is a CPU-intensive operation.

Application code should be accounting to abate the aggregate of parsing required. This will ensure that a again accomplished account is parsed alone once.

3.

Minimize SQL account parsing - SQL

Process assorted rows at a time whenever accessible - Fetching, processing, and an autograph row in aggregate is abundant faster than accomplishing it row by row. An individual SQL account that processes all rows and performs all operations offers the optimal performance.

4.

Reuse database access

Reduce data altercation

- Establishing a

- Data altercation can essentially aching appliance performance. To abate altercation in your appliance deliver abstracts in assorted tablespaces and abstain connected updates of the aforementioned row.

Run alternate letters to analyze performance [12].

R EFERENCES

[1] Doug Beaver, Sanjeev Kumar, Harry C. Li,

Jason Sobel, and Peter Vajgel. 2010. Finding a needle in Haystack: facebook's photo storage. In Proceedings of the 9th USENIX conference on Operating systems design and implementation (OSDI'10). USENIX

Association, Berkeley, CA, USA, 1-8.

[2] Fay Chang, Jeffrey Dean, Sanjay Ghemawat,

Wilson C. Hsieh, Deborah A. Wallach, Mike

Burrows, Tushar Chandra, Andrew Fikes, and Robert E. Gruber. 2006. Bigtable: a distributed storage system for structured data.

In Proceedings of the 7th USENIX

Symposium on Operating Systems Design and Implementation - Volume 7 (OSDI '06),

Vol. 7. USENIX Association, Berkeley, CA,

USA, 15-15.

[3] Jeffrey Dean and Sanjay Ghemawat. 2008.

MapReduce: simplified data processing on large clusters. Commun.ACM 51, 1 (January

Manoj Kumar Singh, IJECS Volume 3 Issue 7.Month July 2014 page no. 7122-7126

2008), 107-113.

DOI=10.1145/1327452.1327492. http://doi.acm.org/10.1145/1327452.1327492

.

[4] Marcos Kawazoe Aguilera, Carole Delporte-

Gallet, Hugues Fauconnier, and Sam Toueg.

Communication efficient leader election and consensus with limited link synchrony. In

The Proceedings of the International

Symposium on Principles of Distributed

Computing (PODC), pages 328–337, 2004.

[5] Seth Gilbert and Nancy Lynch. Brewer’s conjecture and the feasibility of consistent, available, partition-tolerant web services.

SigAct News, June 2002.

[6] Feng, Jie Qiu, Jie Yang, Bo Dong, Xinhui

Li, and Ying Li. 2009. Hadoop high availability through metadata replication. In

Proceedings of the first international workshop on Cloud data management

(CloudDB'09).ACM, New York, NY, USA,

3744.DOI=10.1145/1651263.1651271. http://doi.acm.org/10.1145/1651263.165127

1 .

[7] T. White, Hadoop: The definitive guide,

2009.

[8] T. World. (2012, February) Survey distributed databases. [Online]. Available: http://wiki.toadforcloud.com/index.php/Su rvey distributed.

[9] [61] H. Inc. (2012, April) Why hypertable?

— hypertable - bigdata. bit performance.

[Online].Available.

http://hypertable.com/whyhypertable/

[10] Y. Xu, P. Kostamaa, and L. Gao,

―Integrating hadoop and parallel dbms,‖ in

Proceedings of the 2010 international conference on Management of data, ser.

SIGMOD ’10, 2010, pp. 969–974.

[11] Survey of Large-Scale Database Systems,

So Youn Lee Esteban Meneses

Page 7126

[12] I. Clarke, O. Sandberg, B. Wiley, and T. W.

Hong, ―Freenet: A distributed anonymous information storage and retrieva system,‖ in INTERNATIONAL

WORKSHOP ON DESIGNING PRIVACY

ENHANCING TECHNOLOGIES:

DESIGN ISSUES IN ANONYMITY AND

UNOBSERVABILITY. Springer-Verlag

New York, Inc., 2001, pp. 46–66.

Manoj Kumar Singh, IJECS Volume 3 Issue 7.Month July 2014 page no. 7122-7126 Page 7127