Semantics for Big Data

AAAI Technical Report FS-13-04

Developing Semantic Classifiers for Big Data

Richard Scherl

Department of Computer Science & Software Engineering

Monmouth University

West Long Branch, NJ 07764

rscherl@monmouth.edu

Abstract

Here the use of machine learning to develop such filters is

explored.

Linked Data (Heath and Bizer 2011) is widely used,

even when the publishers of data do not utilize a recognized ontology for type categorization or for defining the

semantics of the properties. We can use the names of the

properties (links) without their definition in an ontology.

Page Rank (Brin and Page 1998) has proven useful in web

search and many variants have been developed (Manning,

Raghavan, and Schütze 2008; Baeza-Yates and Ribeiro-Neto

2011). Lisa Getoor and collaborators (Sen and Getoor 2007;

Lu and Getoor 2003) have worked on methods for linkedbased classification of web pages. Here a type of generalization of Page Rank is proposed for web pages that are part of

the space of Linked Data, but are not necessarily classified

by type and may have links that are errors.

When the amount of RDF data is very large, it becomes

more likely that the triples describing entities will contain errors and may not include the specification of a

class from a known ontology. The work presented here

explores the utilization of methods from machine learning to develop classifiers for identifying the semantic

categorization of entities based upon the property names

used to describe the entity. The goal is to develop classifiers that are accurate, but robust to errors and noise.

The training data comes from DBpedia, where entities

are categorized by type and densely described with RDF

properties. The initial experimentation reported here indicates that the approach is promising.

Introduction

The problem being addressed by this paper is the development of techniques to help in the search for entities within

large Linked Data sets. The entity is a thing or object of

some sort that has an identifying URI and is described

by RDF (subject, predicate, object) triples. The techniques

should be applicable in the context of Big Data, where the

number of RDF triples is large and is likely to contain errors.

A number of researchers (Hitzler and van Harmelen 2010;

d’Amato et al. 2010; Hitzler 2009; Buikstra et al. 2011;

Fensel and van Harmelen 2007; Jain et al. 2010b; Auer and

Lehmann 2010; Jain et al. 2010a) have pointed out that the

semantic web as originally conceived (based on classical

logic) does not usefully apply to the large amounts of material on the web today, even that portion with semantic annotations. They argue that what is needed are methods that

are probabilistic, inductive and robust to noise and errors, as

opposed to those that are deductive and make use of formally

defined ontologies in Owl.

Here, an attempt is made to utilize features of semantic

annotation, but without the full power of the semantic representation and semantic query languages such as SPARQL.

It is assumed that potentially relevant RDF triples have been

gathered through some sort of adaptation of traditional document retrieval techniques (for example (Neumayer 2012)).

Then filters would be useful to narrow down the entities towards those that fall into the category that the user wants.

Motivating Examples

Figure 1 shows a keyword search done with FactForge1 . The

keyword is “Paris” and as expected we get not only the capital of France, but many other RDF entities that have the

name “Paris.” In fact, FactForge has a variety of different

tools (including SPARQL) to narrow down the search using

the full semantic capabilities of RDF. But the method proposed here is designed to be used in circumstances where a

RDF type property is not available or where the value of the

property may be in error.

Another potential application is the Billion Triples Semantic Web Challenge2 . Here a large data set has been

crawled from the Web and the goal is to make sense of this

data. The classifiers being proposed here could be used to

classify the entities into types.

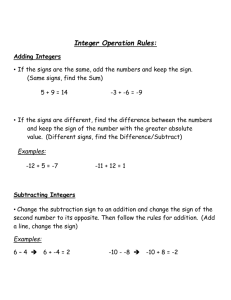

The Project

The proposed approach is to use machine learning methods from text classification to develop classifiers for the type

of a RDF entity based on the names of the properties used

to describe the entity. The intuition that forms the basis of

this work is that the names of the properties that are used

to describe an entity correlate very highly with the categories of which the entity is an instance. The text classifi1

Copyright c 2013, Association for the Advancement of Artificial

Intelligence (www.aaai.org). All rights reserved.

2

53

http://factforge.net

http://challenge.semanticweb.org/2012

Property

Value

dbpedia-owl:PopulatedPlace/area

•

1.0E-6

dbpedia-owl:PopulatedPlace/areaMetro

•

28163.530711793665

dbpedia-owl:PopulatedPlace/areaTotal

•

•

606.057217818624

606.1

dbpedia-owl:PopulatedPlace/areaUrban

•

5498.026760621261

dbpediaowl:PopulatedPlace/populationDensity

•

•

4447.4

4580.8704498109955

dbpedia-owl:area

•

1.000000 (xsd:double)

dbpedia-owl:areaCode

•

312, 773, 872

dbpedia-owl:areaLand

•

588445298.668339 (xsd:double)

dbpedia-owl:areaMetro

•

28163530711.793663 (xsd:double)

dbpedia-owl:areaTotal

•

•

606057217.818624 (xsd:double)

606100000.000000 (xsd:double)

dbpedia-owl:areaUrban

•

5498026760.621261 (xsd:double)

dbpedia-owl:areaWater

•

17870917.961318 (xsd:double)

dbpedia-owl:country

•

dbpedia:United_States

dbpedia-owl:elevation

•

181.965600 (xsd:double)

dbpedia-owl:foundingDate

•

•

1837-03-04 (xsd:date)

1837-03-04 (xsd:date)

dbpedia-owl:governmentType

•

•

dbpedia:Mayor–council_government

dbpedia:Cook_County,_Illinois

dbpedia-owl:isPartOf

•

•

•

dbpedia-owl:leaderName

•

dbpedia:Rahm_Emanuel

dbpedia-owl:leaderTitle

•

•

City Council

Mayor

•

(City in a Garden), Make Big Plans (Make No Small

Plans), I Will

•

3.000000 (xsd:float)

dbpedia-owl:motto

dbpedia-owl:percentageOfAreaWater

dbpedia:Mayor–council_government

dbpedia:DuPage_County,_Illinois

dbpedia:Illinois

!

Figure 2: DBpedia Chicago Entry

database(Auer et al. 2007) that is automatically created from

Wikipedia. DBpedia does contain semantic categorization

with regard to the type of object that is the subject of the

page.

DBpedia forms an important hub in the space of Linked

Data4 . There are links both within DBpedia and also outside

of Dbpedia. There are quite a number of different property

names used in DBpedia. Some are defined in RDF(S), OWL,

or FOAF. Others are defined in DBpedia. For this work the

prefixes are ignored. So, the source does not matter. Only the

RDF type labels are removed. Any further winnowing of

the labels is done automatically by the classifier algorithm.

Although the data is processed in RDF N-triple format, it is easier to visualize with the representation that

one sees when viewing DBpedia entries with a web

browser. For example, consider Figure 2 and Figure 3.

These show portions of the DBpedia entry for the city of

“Chicago.” Much has been removed as many links (e.g.

dbpedia-owl:birthPlace ) occur numerous times.

The selection shows that there are a number of link names

that are highly indicative of “Chicago” being a city.

Figure 1: Key Word Search

Experiments

A set of Python programs were developed to access DBpedia

and other sources of RDF data and to train and test off-theshelf implementations of machine learning algorithms. The

code makes use of existing libraries for processing RDF5 ,

cation method will distinguish between those that are useful and those which are not. The result will be a probabilistic classifier that is robust to noise and errors. For training

data, use is made of DBpedia3 , the semantically annotated

3

4

5

http://dbpedia.org

54

http://linkeddata.org

http://code.google.com/p/rdflab

is

property names). Using Bayes’ rule, we have:

•

dbpedia:John_P._Fardy

•

dbpedia:Taiwanese_American

•

dbpedia:1936_Republican_National_Convention

is dbpprop:rd1t4Loc of

•

dbpedia:1955_Davis_Cup

is dbpprop:rd2t1Loc of

•

dbpedia:1923_International_Lawn_Tennis_Challenge

•

dbpedia:1928_International_Lawn_Tennis_Challenge

dbpprop:placeofburial

of

is dbpprop:popplace of

is dbpprop:prev of

is dbpprop:rd3t1Loc of

is dbpprop:recLocation

of

dbpprop:recordLocation

dbpprop:regionServed

Generally, the denominator is dropped as indicated here

cmap = arg max P (c | d)P (c)

c∈C

•

dbpedia:Garage_rock

•

is

of

Is

dbpprop:regionalScenes

P (d | c) = P (ht1 , . . . , tnd i | c))

for the Multinomial Naive Bayes case and

of

•

dbpedia:Bill_Ayers

•

dbpedia:Steve_McMichael

is dbpprop:restingPlace

of

•

dbpedia:Harold_Washington

is dbpprop:restingplace

of

•

dbpedia:John_Hughes_(filmmaker)

is dbpprop:runnerup of

•

dbpedia:1967_Little_League_World_Series

is dbpprop:seat of

•

dbpedia:Cook_County,_Illinois

•

dbpedia:Proof_(play)

•

dbpedia:MS_Brahe

•

dbpedia:SS_Christopher_Columbus

is dbpprop:residence of

is dbpprop:resides of

is dbpprop:setting of

is dbpprop:shipBuilder

of

is

dbpprop:shipHomeport

P (d | c) = P (he1 , . . . , eM i | c)

for the Bernoulli Naive Bayes case.

The conditional independence assumption that each of the

attribute values is independent of the others given the class

is what gives the name Naive in Naive Bayes. With this assumption, the Multinomial computation becomes

Y

P (d | c) = P (ht1 , . . . , tnd i | c)) =

P (Xk = tk | c)

1≤k≤nd

where Xk is a random variable for position k in the document. So, P (Xk = t | c) is the probability that in a document of class c the term t will occur in position k. An

additional independence assumption is that the conditional

probabilities for a term is the same for all positions, allowing the random variable to be written as P (X = t | c). The

multinomial Naive Bayes therefore counts the number of occurrences of a term in a document, but does not include the

positions.

With the independence assumption, the Bernoulli Naive

Bayes formula becomes

Y

P (d | c) = P (he1 , . . . , end i | c)) =

P (Ui = ei | c)

of

!

Figure 3: DBpedia Chicago Entry

code for accessing DBpedia6 , machine learning algorithms7 ,

as well as other Python resources (Harrington 2012; Perkins

2010).

There are a variety of methods commonly used in

text classification (Manning, Raghavan, and Schütze 2008;

Baeza-Yates and Ribeiro-Neto 2011). The experiments reported here use the Naive Bayes method. Naive Bayes

(following the presentation in (Manning, Raghavan, and

Schütze 2008)) works by computing the maximum a posteriori probability (MAP)

1≤i≤M

where Ui is the random variable for vocabulary term i and

takes as values 0 for absence and 1 for presence. Hence

P (Ui = 1 | c) is the probability that in a document of class

c the term ti will occur in the document.

Multinomial Naive Bayes works by computing the probability of a document (here a list of

Qproperty names) d being

in a class c as P (c | d) ∝ P (c) 1≤j≤nd P (tj | c) where

P (tj | c) is the probability of the term (here property name)

occurring in a document of class c and P (c) is the prior probability of a document

Q occurring in class c. The formula is

P (c | d) ∝ P (c) 1≤j≤M P (ej | c) in the Bernoulli case,

were the representation of d is a vector of 0s and 1s.

cmap = arg max P (c | d)

c∈C

of the class c given the document d (in our case a list of

6

7

c∈C

•

•

of

is dbpprop:recorded of

c∈C

P (c | d)P (c)

P (d)

since we are only interested in the argmax and not in the

actual probability.

There are two interpretations of Naive Bayes used in text

dbpedia:Today's_Children

classification. In the Multinomial Naive Bayes the document

d is a sequence of terms ht1 , . . . , tnd i. In the Bernoulli Naive

dbpedia:Worldview_(radio_show)

Bayes model the document d is a binary vector he1 , . . . , eM i

(of dimensionality M the number of terms) indicating for

dbpedia:My_Heart_Will_Always_Be_the_B-Side_to_My_Tongue

each term whether or not it occurs in d. So for the computation of the conditional probability of the document given the

dbpedia:Gads_Hill_Center

class, we have

•

is

arg max P (c | d) = arg max

http://github.com/ogrisel/dbpediakit

http://scikit-learn.org, http://www.nltk.org

55

Intuition about RDF and semantic representation leads us

to expect that the number of times a property occurs in a

RDF description of an entity is not all that significant. A city

is a city regardless of the number of famous people born in

that city. But whether or not a property is stated is likely

to be significant. On this basis, one would predict that the

Bernoulli classifier will perform better.

The Naive Bayes Multinomial algorithm uses the training

set to compute P̂ (c) ( NNc )) and P̂ (tj | c) as estimates for

P (c) and P (tj | c). Laplace smoothing (adding one to each

count) is used to handle the cases where some terms do not

occur in the training data, in the computation

intuitions) were obtained with the NLTK (Bernoulli) Naive

Bayes classifier. For country we have, an accuracy of .8333,

a precision of positive of .75, of negative of 1.0, a recall of

positive of 1.0, and a recall of negative of .6666. On person

we have an accuracy of .8333, a precision of positive of .75,

of negative of 1.0, a recall of positive of 1.0, and a recall of

negative of .6666.

The version of naive Bayes in NLTK has a useful method

that shows the most informative features in a particular classifier. Here it was run with the number of features set to 10.

The result for the country classifier is given in Table 1. The

establishedevent = None

gini = None

populationcensus = None

demonym = None

gdpnominalyear = None

populationdensityrank = None

populationcensusyear = None

areasqmi = None

legislature = None

currency = None

Tct + 1

0

t0 ∈V (Tct + 1)

P̂ (tj | c) = P

where Tct is the count of the number of occurrences of t in

the documents of class c in the training set, N is the total

number of documents, and Nc is the number of documents

of class c. These estimates are used

Y

arg max P̂ (c | d) = arg ma xc∈C P̂ (c)

P̂ (tj | c)

c∈C

1≤k≤nd

to find find the best class cmap . In the Bernoulli case, the

formula used for learning is

P̂ (t | c) =

neg : pos

neg : pos

neg : pos

neg : pos

neg : pos

neg : pos

neg : pos

neg : pos

neg : pos

neg : pos

=

=

=

=

=

=

=

=

=

=

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

6.2 : 1.0

Table 1: Most Informative Features for Country Classifier

most informative features for the person classifier is given

in Table 2. From these displays, it is clear that the nonoccur-

(Nct + 1)

(Nc + 2)

givenname = None

birthplace = None

surname = None

surname = True

caption = True

wasderivedfrom = None

wordnet type = True

caption = None

areatotal = None

timezone = None

where Nct is the number of documents of class c containing

term t in the training set.

A small set of test entities was extracted from DBpedia8

for learning the category country and the category person.

Using the Scikitlearn multinomial naive Bayes classifier, the

accuracy9 for country is .8333, the precision10 of positive11

is .75, the precision for negative12 is 1.0, the recall13 of positive is 1.0 and the recall of negative is .666. For person the

accuracy is .666, with a positive precision of .6, a negative

precision of 1.0, a positive recall of 1.0 and a negative recall

of .333.

Using the Scikitlearn Bernoulli classifier on the country

data has an accuracy of only .333, a precision of positive

of 0, a precision on negative of .4, a recall on positive of 0,

and a recall on negative of .666. The Bernoulli classifier on

person has an accuracy of .666, a precision on negative of

1.0, a precision on positive of .6, a recall on negative of .333

and a recall on positive of 1.0.

These results do not match our intuitions reported earlier, since the Multinomial classifier is doing better than the

Bernoulli. On the other hand better results (that do match the

neg : pos

neg : pos

neg : pos

pos : neg

pos : neg

neg : pos

pos : neg

neg : pos

pos : neg

pos : neg

=

=

=

=

=

=

=

=

=

=

6.2 : 1.0

6.2 : 1.0

5.4 : 1.0

4.5 : 1.0

4.0 : 1.0

3.8 : 1.0

3.5 : 1.0

3.2 : 1.0

3.0 : 1.0

3.0 : 1.0

Table 2: Most Informative Features for Person Classifier

rence of particular property names is crucial in distinguishing between categories.

Current work is increasing the amount of DBpedia examples and the number of categories being considered. Additionally, experiments are underway with other methods of

classification.

Further tests

U.S. government data14 on the current members of congress

was downloaded in JSON format. Additionally, data from

the CIA World Factbook15 was downloaded in RDF format.

The NLTK Naive Bayes country classifier (multinomial)

was tested on 5 congress members. In each case, it classified

the entity as not a country. It was also tested on 5 countries

8

Since the full content of DBpedia was not used, the prior probability estimates do not contribute to the classifier.

9

Accuracy is the fraction of the classifications that are correct.

10

TruePositives

P recision = TruePositves+FalsePositives

11

Here a true positive result means that the example is an instance of the class.

12

Here a true positive result means that the example is not an

instance of the class.

13

TruePositives

Recall = TruePositives+FalseNegatives

14

15

56

http://www.govtrack.us/developers/api

http://wifo5-03.informatik.uni-mannheim.de/factbook/

from the CIA World Factbook and in each case it classified the entity as a country. The NLTK Naive Bayes person

classifier (multinomial) was tested on 5 congress members.

In each case, it classified the entity as a person. It was also

tested on 5 countries from the CIA World Factbook and in

each case it classified the entity as not a person.

Even though this test was surprisingly successful, it is

likely that more is needed to ensure that the classifier trained

on the terminology of one ontology’s properties will work

on another’s. Possibilities being investigated are to parse the

property names into potentially significant pieces and using

some form of stemming.

Buikstra, A.; Neth, H.; ten Teije, L. S. A.; and van Harmelen, F. 2011. Ranking Query Results from Linked Open

Data Using a Simple Cognitive Heuristic. In Proceedings to

the Workshop on Discovering Meaning On the Go in Large

Heterogeneous Data, collocated with The Twenty-second International Joint Conference on Artificial Intelligence (IJCAL2011), Barcelona, Spain.

d’Amato, C.; Fanizzi, N.; Fazzinga, B.; Gottlob, G.; and

Lukasiewicz, T. 2010. Combining semantic web search

with the power of inductive reasoning. In Deshpande, A.,

and Hunter, A., eds., Scalable Uncertainty Management:

Proceedings of the 4th Internatioal Conference, SUM 2010,

137–150. Springer.

Doan, A.; Madhavan, J.; Domingos, P.; and Halevy, A. Y.

2002. Learning to map between ontologies on the semantic

web. In Lassner, D.; Roure, D. D.; and Iyengar, A., eds.,

WWW, 662–673. ACM.

Euzenat, J., and Shvaiko, P. 2007. Ontology matching. Heidelberg (DE): Springer-Verlag.

Fensel, D., and van Harmelen, F. 2007. Unifying reasoning and search to web scale. Internet Computing, IEEE

11(2):96–95.

Harrington, P. 2012. Machine Learning in Action. Greenwich, CT, USA: Manning Publications Co.

Heath, T., and Bizer, C. 2011. Linked Data: Evolving the

web into a Global Data Space. Morgan & Claypool Publishers.

Hitzler, P., and van Harmelen, F. 2010. A reasonable semantic web. Semantic Web Journal 1(1):39–44.

Hitzler, P. 2009. Towards reasoning pragmatics. In Janowicz, K.; Raubal, M.; and Levashkin, S., eds., GeoSpatial Semantics, Third International Conference, FeoS 2009, Mexico

City, Mexico, December 3-4 2009, 9–25. Springer.

Jain, P.; Hitzler, P.; Sheth, A. P.; Verma, K.; and Yeh, P. Z.

2010a. Ontology alignment for linked open data. In International Semantic Web Conference (1), 402–417.

Jain, P.; Hitzler, P.; Yeh, P. Z.; Verma, K.; and Sheth,

A. P. 2010b. Linked data is merely more data. In AAAI

Spring Symposium: Linked Data Meets Artificial Intelligence. AAAI.

Lu, Q., and Getoor, L. 2003. Link-based classification. In

20th International Conference on Machine Learning.

Manning, C. D.; Raghavan, P.; and Schütze, H. 2008. Introduction to Information Retrieval. Cambridge, England:

Cambridge University Press.

Neumayer, R. 2012. Semantic and Distributed Entity Search

in the Web of Data. Ph.D. Dissertation, Department of

Computer and Information Science, Norwegian University

of Science and Technology.

Perkins, J. 2010. Python Text Processing with NLTK 2.0

Cookbook. Packt Publishing.

Sen, P., and Getoor, L. 2007. Link-based blassification.

Dept. of Computer Science Technical Report CS-TR4858,

University of Maryland.

Conclusion and Future Work

The paper presented here explores the development of classifiers for identifying the semantic categorization of RDF

entities based upon the semantic links referencing and referenced by the entity. Methods from text classification are

adapted to use the names of the links. The goal is to develop

classifiers that are accurate, but robust to errors and noise.

The training data comes from entities that are already semantically categorized. DBpedia was used as a testbed for

this approach. These preliminary experiments are very encouraging as the tests worked quite well.

The next stage of the project will extend this work with

binary classifiers to the development of a multi-label classifier. Dbpedia provides an elaborate ontology that can be

used as the basis for the multi-label classifier.

An additional application for this approach is to address the ontology matching problem (Euzenat and Shvaiko

2007). Building on previous work (Doan et al. 2002) on the

use of machine learning for ontology matching, one could

use classifiers (of the sort discussed in this paper) trained

on the categories in DBpedia to determine the relationship

between the DBpedia ontology and another ontology.

Acknowledgments

The author thanks Dr. Joe Chung of Monmouth University

for invaluable help with systems issues.

References

Auer, S., and Lehmann, J. 2010. Making the web a data

washing machine - creating knowledge out of interlinked

data. Semantic Web Journal 1(1-2):97–104.

Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak,

R.; and Ives, Z. 2007. Dbpedia: a nucleus for a web of open

data. In Proceedings of the 6th international The semantic

web and 2nd Asian conference on Asian semantic web conference, ISWC’07/ASWC’07, 722–735. Berlin, Heidelberg:

Springer-Verlag.

Baeza-Yates, R., and Ribeiro-Neto, B. 2011. Modern Information Retrieval: the concepts and technology behind

search. Harlow, England: Addison Wesley.

Brin, S., and Page, L. 1998. The anatomy of a large-scale

hypertextual web search engine. In Seventh International

World-Wide Web Conference (WWW 1998).

57