Multi-modal communication Bencie Woll Deafness Cognition and Language Research Centre, UCL

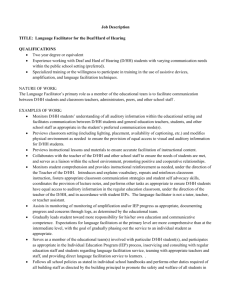


Outline
• Introduction: the core language areas
• The multi-channel nature of human communication
• What can sign languages tell us?
  – Speech and sign: common neural bases?
  – fMRI studies of BSL and English
• What can speechreading tell us?
  – The auditory cortex and (audio)-visual speech
• Conclusions

Do the contrasting properties of sign and speech constrain the underlying neural systems for language?

The brain: lateral view of left hemisphere
[Figure labels:]
• Broca’s area (B): posterior inferior region of L frontal lobe (approximate location)
• Wernicke’s area (W): posterior region of STG (approximate location)
• Sylvian fissure
• Heschl’s gyrus, containing primary auditory cortex
• Frontal lobe, parietal lobe, temporal lobe, occipital lobe

Multi-channel communication
• Human face-to-face communication is essentially audiovisual
• Typically, people talk face-to-face, providing concurrent auditory and visual input
• Visual input also includes gesture
• Sign language is also multi-channel: signs, mouthing, gesture

Audio-visual processing
• Observing a specific person talking for 2 min improves subsequent auditory-only speech recognition for this person.
• Behavioral improvement in auditory-only speech recognition is based on activation in an area typically involved in face-movement processing.
• These findings challenge unisensory models of speech processing, because they show that, in auditory-only speech, the brain exploits previously encoded audiovisual correlations to optimize communication
Von Kriegstein et al (2008)

What can sign languages tell us?
• Almost everything we know about language processing in the brain is based on studies of spoken languages (SpL)
• With sign languages (SL), we can ask if what we have learned thus far is characteristic of language per se, or whether it is specific to languages in the auditory-vocal modality
• SL and SpL convey the same information, BUT…
• Use different articulators and are perceived by different sensory organs
• Do these differences, driven by the modalities in which language is expressed and perceived, have consequences for the neural systems supporting language?

Speech and sign: common neural bases?
• Our first question must be: Is the ‘core language network’ specific to languages that are spoken and heard?
• We’ll start with lateralisation…

Lateralisation of language functions
• It is well-established that the brain’s left hemisphere (LH) is critical for producing and understanding speech
  – Damage to perisylvian areas (= areas bordering the sylvian fissure of the LH) results in aphasias (disordered language)
  – Damage to homologous (= the same on the other side) right hemisphere (RH) areas does not generally produce aphasias
• This implies that language is predominantly left-lateralised

Where in the brain is SL processed?

fMRI of BSL – Study 1
• Sentence processing: comparing BSL with English
• Deaf native signers and hearing non-signers
• English translations presented audiovisually
  – Coronation Street is much better than Eastenders.
  – I will send you the date and time.
  – The woman handed the boy a cup.
  – Paddington is to the west of Kings Cross.
MacSweeney et al, 2002

[Figure panels: BSL, deaf native signers; English, hearing native speakers]

Results
• Sign language and spoken language are both left-lateralised
• More posterior activation during sign language processing than spoken language processing (greater movement)
• Deaf native signers use classical language areas, including secondary auditory cortex, to process BSL
• However, earlier studies suggesting activation within primary auditory cortex (lateral parts of Heschl's gyrus, BA 41) during sign perception are probably incorrect

Revisiting speechreading

How does the brain do speechreading?
• Are there differences between processing speech with sound and speech without sound?

fMRI study 2. Comparing processing of sign, speech and silent speech (speechreading)
• To what extent do the patterns of activation during speech perception and sign language perception differ?
• To what extent do the patterns of activation during perception of silent and audio-visual speech differ?
Capek et al, 2008

[Figure panels: silent speech (single words); single signs]
• Signs and words activate a very similar network
• Silent speech activates regions in deaf people’s brains that have been identified as auditory speech processing regions in hearing people
[Figure panel: audiovisual speech (sentences)]

Auditory cortex and speechreading
• Superior temporal regions activated include areas traditionally viewed as dominant for processing auditory information in hearing people, including secondary auditory association cortex (BAs 22 & 42) (Campbell, 2008)
• These findings are in agreement with those of Sadato et al (2005) on how lipreading activates secondary auditory cortex in deaf people, and Pekkola et al (2005) on how lipreading activates primary as well as secondary auditory cortex of hearing people

• When auditory cortex is not activated by acoustic stimulation, it can nevertheless be activated by silent speech in the form of speechreading
• Mid-posterior superior temporal regions are activated by silent speechreading in prelingually deaf people who have not had experience of heard speech

Phonological representation
• Auditory
• Audio-visual (language development in congenitally blind babies)
• Articulatory
• Orthographic (rough and bough)
MacSweeney et al, 2008

fMRI study 3: Phonological judgements
Activation during the:
A) location task in deaf participants (n=20);
B) rhyme task in deaf participants (n=20);
C) rhyme task in hearing participants (n=24).

Speech processing and CI

Audio-visual language processing with cochlear implants
• CI subjects present a higher visuo-auditory gain than that observed in normally hearing subjects in conditions of noise
• This suggests that people with CI have developed specific skills in visuo-auditory interaction, leading to an optimisation of the integration of visual temporal cueing with sound in the absence of fine temporal spectral information
Rouger et al, 2007

Speechreading in Adult CI users
• A progressive cross-modal compensation in adult CI users after cochlear implantation
• Synergetic perceptual facilitation involving the visual and the recovering auditory modalities. This could lead to an improved performance in both auditory and visual modalities, the latter being constantly recruited to complement the information provided by the implant.
Strelnikov et al, 2009

Speechreading and literacy
• 29 deaf children aged 7 and 8 years (7 with CI)
  – Reading achievement was predicted by the degree of hearing loss, speechreading, and productive vocabulary.
  – Earlier vocabulary and speechreading skills predicted longitudinal growth in reading achievement over 3 years
  – Speechreading was the strongest predictor of single word reading ability
“deaf children’s phonological abilities may develop as a consequence of learning to read rather than being a prerequisite of reading, … in the early stages, development is mediated by speechreading”.
Kyle & Harris, 2006, 2010; Harris & Terlektsi, 2011

Speechreading pre-implant
• Superior temporal regions of the deaf brain, once tuned to visible speech, may then be more readily able to adapt to perceiving speech multimodally
• This notion should inform preparation and intervention strategies for cochlear implantation in deaf children

Cross-modal activation and CI
• It has been suggested that visual-to-auditory cross-modal plasticity is an important factor limiting hearing ability in non-proficient CI users (Lee et al, 2001)
• However, as we have seen, cross-modal activation is found in hearing as well as deaf people and enhances speech processing. Optimal auditory performance with a CI is associated with normal fusion of audiovisual input (Tremblay et al, 2010)

• A neurological hypothesis is being advanced which suggests that the deaf child should not watch speech or use sign language, since this may adversely affect the sensitivity of auditory cortical regions to acoustic activation following cochlear implantation
• Such advice may not be warranted if speechreading activates auditory regions irrespective of hearing status
• And if speechreading benefits literacy

Multi-modal communication
• gives access to spoken language structure by eye, and, at the segmental level, can complement auditory processing.
• has the potential to impact positively on the development of auditory speech processing following cochlear implantation

Speechreading
• is strongly implicated in general speech processing (Bergeson et al, 2005) and in literacy development in both hearing and deaf children (Harris & colleagues)
• capabilities interact with prosthetically enhanced acoustic speech processing skills to predict speech processing outcomes for cochlear implantees (Rouger et al, 2007; Strelnikov et al, 2009)
• continues to play an important role in segmental speech processing post-implant (Rouger et al, 2008)

References
Bergeson TR, Pisoni D & Davis RAO (2005). Development of audiovisual comprehension skills in prelingually deaf children with cochlear implants. Ear & Hearing 26(2): 149-164.
Campbell R (2008). The processing of audio-visual speech: empirical and neural bases. Philosophical Transactions of the Royal Society B 363(1493): 1001-1010.
Capek CM, MacSweeney M, Woll B, Waters D, McGuire PK, David AS, Brammer MJ & Campbell R (2008). Cortical circuits for silent speechreading in deaf and hearing people. Neuropsychologia 46(5): 1233-1241.
Harris M & Terlektsi E (2011). Reading and spelling abilities of deaf adolescents with cochlear implants and hearing aids. Journal of Deaf Studies and Deaf Education 16(1): 24-34.
Kyle FE & Harris M (2006). Concurrent correlates and predictors of reading and spelling achievement in deaf and hearing school children. Journal of Deaf Studies and Deaf Education 11(3): 273-288.
Kyle FE & Harris M (2010). Predictors of reading development in deaf children: A 3-year longitudinal study. Journal of Experimental Child Psychology 107(3): 229-243.
Lee HJ, Giraud AL, Kang E, Oh SH, Kang H, Kim CS & Lee DS (2007). Cortical activity at rest predicts cochlear implantation outcome. Cerebral Cortex 17: 909-917.
Lee DS, Lee JS, Oh SH, Kim SK, Kim JW, Chung JK, Lee MC & Kim CS (2001). Cross-modal plasticity and cochlear implants. Nature 409: 149-150.
MacSweeney M, Calvert GA, Campbell R, McGuire PK, David AS, Williams SC, Woll B & Brammer MJ (2002). Speechreading circuits in people born deaf. Neuropsychologia 40: 801-807.
MacSweeney M, Waters D, Brammer MJ, Woll B & Goswami U (2008). Phonological processing in deaf signers and the impact of age of first language acquisition. Neuroimage 40: 1369-1379.
McGurk H & MacDonald J (1976). Hearing lips and seeing voices. Nature 264: 746-748.
Mills AE (1987). The development of phonology in the blind child. In Dodd B & Campbell R (Eds.), Hearing by eye: The psychology of lip-reading (pp. 145-161). Hillsdale NJ: Lawrence Erlbaum Associates.
Pekkola J, Ojanen V, Autti T, Jääskeläinen I, Möttönen R, Tarkiainen A & Sams M (2005). Primary auditory cortex activation by visual speech: an fMRI study at 3T. Neuroreport 16(2): 125-128.
Rouger J, Fraysse B, Deguine O & Barone P (2008). McGurk effects in cochlear-implanted deaf subjects. Brain Research 1188: 87-99.
Rouger J, Lagleyre S, Fraysse B, Deneve S, Deguine O & Barone P (2007). Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proceedings of the National Academy of Sciences USA 104: 7295-7300.
Sadato N, Okada T, Honda M, Matsuki K, Yoshida M, Kashikura K, Takei W, Sato T, Kochiyama T & Yonekura Y (2005). Cross-modal integration and plastic changes revealed by lip movement, random-dot motion and sign languages in the hearing and deaf. Cerebral Cortex 15: 1113-1122.
Strelnikov K, Rouger J, Barone P & Deguine O (2009). Role of speechreading in audiovisual interactions during the recovery of speech comprehension in deaf adults with cochlear implants. Scandinavian Journal of Psychology 50: 437-444.
Tremblay C, Champoux F, Lepore F & Théoret H (2010). Audiovisual fusion and cochlear implant proficiency. Restorative Neurology and Neuroscience 28(2): 283-291.
Von Kriegstein K, Dogan Ö, Grüter M, Giraud A-L, Kell CA, Grüter T, Kleinschmidt A & Kiebel SJ (2008). Simulation of talking faces in the human brain improves auditory speech recognition. Proceedings of the National Academy of Sciences USA 105(18): 6747-6752.