Lecture Notes No. 17

2.160 System Identification, Estimation, and Learning

Lecture Notes No. 1

7

April 24, 2006

12. Informative Experiments

12.1 Persistence of Excitation

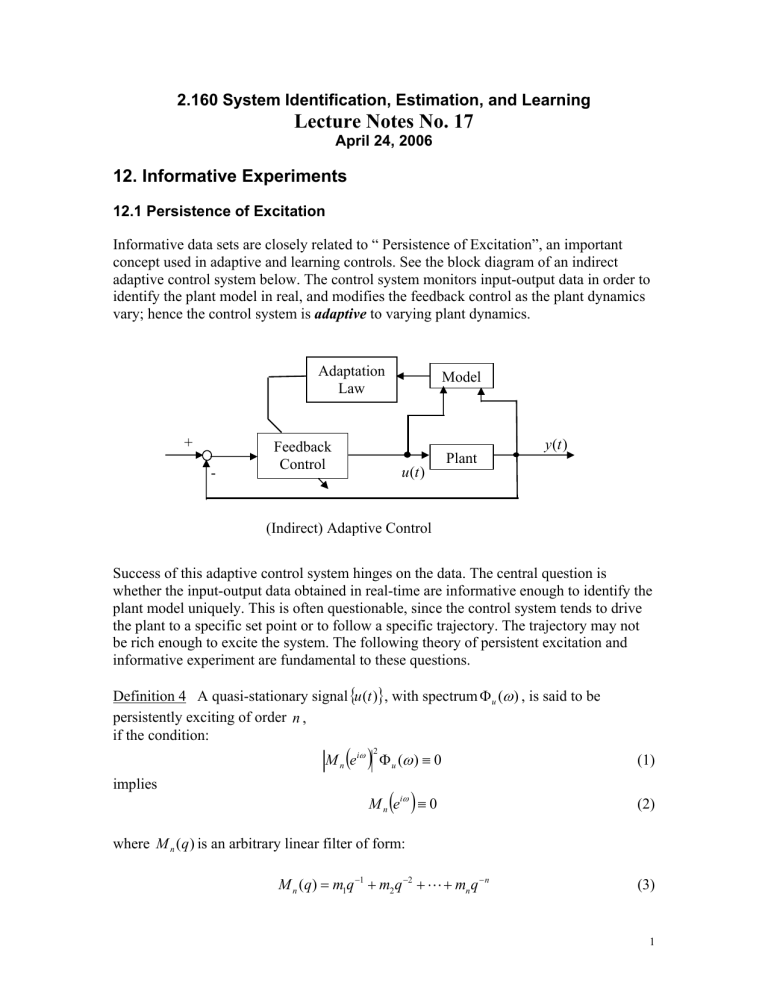

Informative data sets are closely related to “ Persistence of Excitation”, an important concept used in adaptive and learning controls. See the block diagram of an indirect adaptive control system below. The control system monitors input-output data in order to identify the plant model in real, and modifies the feedback control as the plant dynamics vary; hence the control system is adaptive to varying plant dynamics.

Adaptation

Law

Model

+

-

Feedback

Control u ( t )

Plant y ( t )

(Indirect) Adaptive Control

Success of this adaptive control system hinges on the data. The central question is whether the input-output data obtained in real-time are informative enough to identify the plant model uniquely. This is often questionable, since the control system tends to drive the plant to a specific set point or to follow a specific trajectory. The trajectory may not be rich enough to excite the system. The following theory of persistent excitation and informative experiment are fundamental to these questions.

Definition 4 A quasi-stationary signal

{ }

, with spectrum

Φ u

(

ω

) , is said to be if the condition:

M n

2 Φ u

(

ω

)

≡

0 implies

M n where is an arbitrary linear filter of form: n

≡

0

M n

( q )

= m

1 q

−

1 + m

2 q

−

2 + " + m n q

− n

(1)

(2)

(3)

1

Remarks:

1.

Note M n

2 Φ u

(

ω

) is the power spectrum of v ( t )

=

M ( q ) u ( t ) . Therefore, a signal u(t) that is persistently exciting of order cannot be filtered to zero by any

( n -1) st order moving average filter (3), hence it is called persistently exciting. u ( t ) M ( q ) v ( t )

2.

Consider function M n

( z ) M n

( z

−

1

) , associated with M n

( )

2

.

M n z M n

( z

−

1

)

=

( m z

1

−

1 + m z

2

−

2

=

( m

1

+ m z

" m z n

− n

)( m z

1

1 + m z

2

2 n 1

)( m

1

+ m z

" n

−

1

) m z n n

)

(4)

If a+ib is a zero of M n

( z ) M n

( z

−

1

) , a-ib is also a zero, since the function has all real coefficients. Also, if a+ib is a zero, then its reciprocal a a

2

−

+ ib b

2

is also a zero of the function since the function is symmetric with respect to the unit circle, z

↔ z

−

1

. See the figure below. This function can have at most ( n -1) zeros on the unit circle. Therefore, M n

( )

2 =

M n

( ) ( ) n

may be zero for at most ( n -1) different frequencies. In consequence, if

Φ u frequencies;

− π < ω

1

,

"

,

ω n

< π

(

ω

)

≠

0 for at least n different

then u ( t ) is persistently exciting. a

+ ib a a

2

−

+ ib b

2

The following lemma provides a useful method for checking persistence of excitation:

Lemma : Let u ( t ) be a quasi-stationary signal. Consider the n

× n matrix given by

R n

=

⎡

⎢

⎢

⎢

⎣ R u

R u

R u

( n

#

( 0 )

( 1 )

−

1 )

R u

( 1 )

R u

( 0 )

"

"

"

R u

R u

R

( n

( n u

−

−

( 0 )

1 )

2 )

⎤

⎥

⎥

⎥

⎦

∈

R n

× n (5)

Then u ( t ) is persistently exciting of order n if and only if R is non-singular. n

2

Proof

Put the coefficients of m

=

( m

1 m

2

M n

( q )

" m n into an n -dimensional vector:

)

T ∈

R n

×

1

.

Consider a quadratic form: m

T

R n m . It is known that the following two are equivalent

( R n

is non-singular )

⇔

( m

T

R n m

=

0 implies m

=

0 )

Compute m

T

R n m

T m R m

=

= ( m

( m

1

1

"

" m n

) ⎜

⎜

⎛

⎜

R u

(0)

R u

(1)

R u

(1)

R u

(0)

%

R u

(0)

⎞⎛ m m

1

2

⎞

⎟⎜ ⎟

⎟⎜ ⎟

⎟⎜ # ⎟

⎟⎜ ⎟ m n m n

) ⎜

⎜

⎛

⎜ m R

1 u

(0)

1

+ m R m R

2 u u

(1)

(1)

+ m R

2 u

(0)

# n u

+ "

(

−

1)

⎟

⎟

⎞

⎟ m R n

1 u

(

− + "

= n n ∑∑ i j m m R i i j u

( − − −

1)

) = ∑∑ i j i j u

(

− j )

= ∑ i

∑ j i j

( )

(

( i j )

) = ∑∑ i j

(

−

)

( )

=

E

∑ i

(

− i )

∑ j

( − j

) = [ n

( ) ( )

] i j

2

=

2

1

π

∫ π

− π

M n

( )

2 Φ u

Therefore, m

T

R n m

=

0 means M n

( )

2

Φ u

(6)

ω ≡

0, for almost all

ω

. Matrix R is nonn singular, is equivalent to M n

2 Φ u

(

ω

)

≡

0

→

M n

( q )

=

0

Therefore, u ( t ) n R n is non-singular.

12.2 Conditions for Informative Experiments

Based on the persistently exciting condition, how can we design experiments so that any two models of a model set can be distinguished, i.e. informative experiments?

Consider two models: i =1, 2 of a model set M *

,

3

G i

( q )

=

G

( q ,

θ i

)

, H i

( q )

=

H

( q ,

θ i

)

,

ε i

( q )

= ε ( ) i

=

H

1

−

1

⎡

⎢ −

1

−

2

+ −

H

ε

⎤

⎥

(7) and their difference

∆

G ( q )

=

G

2

( q )

−

G

1

( q ) ,

∆

H ( q )

=

H

2

( q )

−

H

1

( q )

Note

ε i

( t )

= y

= y

−

− t

( ) i [

H i

−

1

G i u

+

(

1

−

H i

−

1

) y

]

=

H i

−

1 y

−

H i

−

1

G i u

(8)

Let us compute the difference of prediction error between the two models:

∆ ε q

= ε

2 q

− ε

1 q

=

=

H

1

1

−

1 − −

1

H G u

1 1

−

1

[ y

−

G u

−

−

H

ε

ε

2

]

(9)

∆

G u H

=

H q

1

1

( )

[ ∆

( ) ( )

+ ∆ ε

2 t

Using the true system model: y ( t )

=

G

0

( q ) u ( t )

+

H

0

( q ) e

0

( t )

ε

2

( t ) can be written as

ε

2

( )

= −

2

1 − −

1

H G u

2 2

=

H

2

−

1

[

G u

0

+

H e

0 0

−

G u

2

]

]

(10)

(11)

=

1

H q

2

( )

(

( )

−

( )

)

( )

+

H q e t

Combining (9) and (11) yields,

∆ ε =

1

H q

1

( )

⎡

⎢

⎢ ⎛

⎢

⎢

⎜

∆ +

G q

−

G q

H q

2

( )

∆

( )

⎞

⎠

( )

+

( )

H q

2

( )

∆

H q e t

0

⎥

⎥

⎤

⎥

(12)

⎥

⎦

Suppose that the experiment is carried out in open-loop, so that u ( t ) and e

0

( t ) are uncorrelated. The mean of

∆ ε

2

( t ) is given by (See Lecture Note No.15. eq.(9))

4

E

∆ ε

2 =

1

2

π

∫

π

− π

1

H e

1

( ) 2

⎡

⎣

(13) where

λ =

0 0

and

2

= ∆

( )

+

( )

−

G e i

ω

( i

ω

)

2

Φ u

ω +

2

2 λ

0

⎤

⎦ d

ω

(14)

2 =

H

0

( ) ( )

H e

2

( i

ω

)

∆

H e i

ω

2

(15)

(16)

(17)

Therefore, E [

( ε

1 t

− ε

2 t

)

2 =

(

2

θ

2

)

− y t

1

θ

1

)

2

) ]

=

0 implies

Φ u

ω

A e i

ω

)

2 ≡

0 and B e i

ω

)

2 ≡

0 : both must be identically zero.

∆

Eq. (15)

( i

ω

)

≡

0

∆

G

2 Φ u

(

ω

)

≡

0

If (17) implies

∆

G

≡

0 , then the two models are equal, and the experiment is informative enough w.r.t. M

*

.

This last condition is basically equivalent to the persistently exciting condition given by

Definition 3 and Lemma.

Considering a concrete model for G ( q ,

θ

) leads to the following theorem.

Theorem 3 Consider a model set M * of SISO systems:

M

* =

{

θ θ θ ∈

D

M

*

}

(18)

G ( q ,

θ

)

=

B ( q ,

F

θ

( q ,

θ

)

)

= where G ( q ,

θ

) is a rational function: q

− n k

( b

1

1

+

+ f

1 b

2 q

−

1 q

−

1

+ "

+ " +

+ f n b n b q

− n f f q

−

( n b

−

1 )

)

(19)

5

and H ( q ,

θ

) is inversely stable. Then an open-loop experiment with an input that is persistently exciting of order n b

+ n f

is informative enough w.r.t. M

*

.

Proof

For two different models, G

1

( q ) and G

2

( q ) ;

∆

G ( q )

=

B

1

( q )

F

1

( q )

−

B

F

2

2

(

( q q )

)

=

B

1

( q ) F

2

( q )

−

B

2

( q ) F

1

( q )

F

1

( q ) F

2

( q )

(20)

Therefore (17) becomes

1

( ) ( ) n

−

2

( i

ω )

( ) ( )

2

Φ u

ω ≡

0 (21)

Relating B F

1 2

−

B F

2 1

to

Factoring out

M e n

( )

( )

− n k

in (15), we find that

does not change B F

2

−

B F

1

The remaining part is a polynomial of order n b

+ n f

−

1 .

Since the input u ( t ) is persistently exciting of order n

+ b i.e.

∆

G ( q )

≡

0 QED. n f

, (21) implies B F

2

−

B F

1

The point is:

(The order of persistent excitation)

≥

( The number of parameters to be estimated)

≡

0 ,

12.3 Signal-to-Noise Ratio and Convergence Speed

From Theorem 2 we know that, if a model set includes the true system, and the data set is informative enough with respect to the model set, the estimated model converges to the true system, i.e. consistent. Furthermore, from Theorem 3 we know that, as long as the input sequence has the order of persistent excitation greater than the number of parameters involved in the model set, the model converges to the true model. This convergence is guaranteed regardless the magnitude of noise. However, the convergence speed may depend on the noise magnitude or, more specifically, the signal-to-noise ratio.

The following is to examine the convergence characteristics.

Using the true system dynamics given by (10), the prediction error of a model

M

θ =

{

θ

( ),

θ

( );

θ ∈

D

M

} is given by

ε

( t ,

θ

)

=

=

H

H

θ

−

1

θ

−

1

[

[

(

G

0 y ( t

−

)

−

G

θ

G

θ

) u u ( t

( t

)

)

+

]

=

H

θ

−

1

(

(

H

0

−

[

H

G

0

θ

) e

0

−

( t

G

θ

)

]

) u

+

( t ) e

0

+

( t )

H

0 e

0

( t )

]

(22)

6

Note that the second term in the last expression,

(

H

0

−

H

θ

)

( ) , does not contain but is a function of (

−

1)

"

, because both H

0

and H

θ

( )

are monic. Therefore, the three

ε θ

is given by

Φ

ε

(

ω

,

θ

)

=

G

0

−

G

θ

2

H

θ

2

Φ u

(

ω

)

+

H

0

−

H

θ

2

H

θ

2

λ

0

+ λ

0

(23) u

ω

is the power spectrum of the input, and

λ

is the variance of the White

0 noise. Applying eq.(9) in Lecture Note No 15 to the above power spectrum

Φ

ε

ω θ

, we obtain the following result.

Theorem 4

ε θ

be the prediction error of a model in a linear time-invariant model set M

θ =

{ ( ), ( );

θ ∈

D

M

} . Assume that the true system given by y ( t )

=

G

0

( q ) u ( t )

+

H

0

( q ) e

0

( t ) and the model process are quasi-stationary processes, then the optimal parameter that minimizes the mean squared prediction error

V

=

1

2

E

ε θ

2

[ ( , ) ] is given by

θ ˆ = arg min

θ ∈

D

M

∫ π

− π

{ G e

0 i

ω

)

−

θ

( i

ω

)

2 ⋅

Φ u

θ

( i

ω

)

2

+

H e

0

( i

ω

)

−

θ

( i

ω

)

θ

( i

ω

)

2

2

λ ω

0

} d (24)

Φ u

ω

is the power spectrum of the input u ( t ), and

λ

is the variance of the White

0 noise e t

0

( ) .

The proof is obvious.

The convergence process of the above system is complicated. If we assume that the noise model is known or fixed:

θ

( )

=

( ) then (24) reduces to

θ ˆ = arg

θ min

∫

G

0

( e i

ω

)

−

G ( e i

ω

,

θ

)

2

⋅

Φ u

(

ω

)

H

*

( e i

ω

)

2 d

ω

(25)

(26)

The following observation can be made for this simplified expression.

Remarks:

7

•

The model is pushed towards the true system G o

(q) in such a way that the

• weighted mean squared difference in the frequency domain be minimized.

The weight,

H

Φ

* u

( e

(

ω i

ω

)

)

2

, is the ratio of the input power spectrum to the noise power spectrum (if the variance of e o

(t) is unity). In other words, it is a signal-tonoise ratio.

•

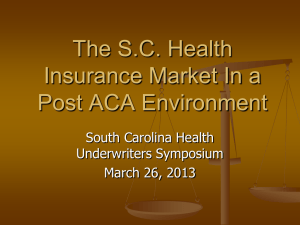

At frequencies where the signal-to-noise ratio is higher, the model converges to the true system more rapidly. See the figure below.

Good agreement for high S/N weight

G ( e i

ω

)

Model

True system

Poor agreement as the

S/N becomes small

High frequency noise

Input magnitude

8