2.160 Identification, Estimation, and Learning Lecture Notes No. 1

February 8, 2006

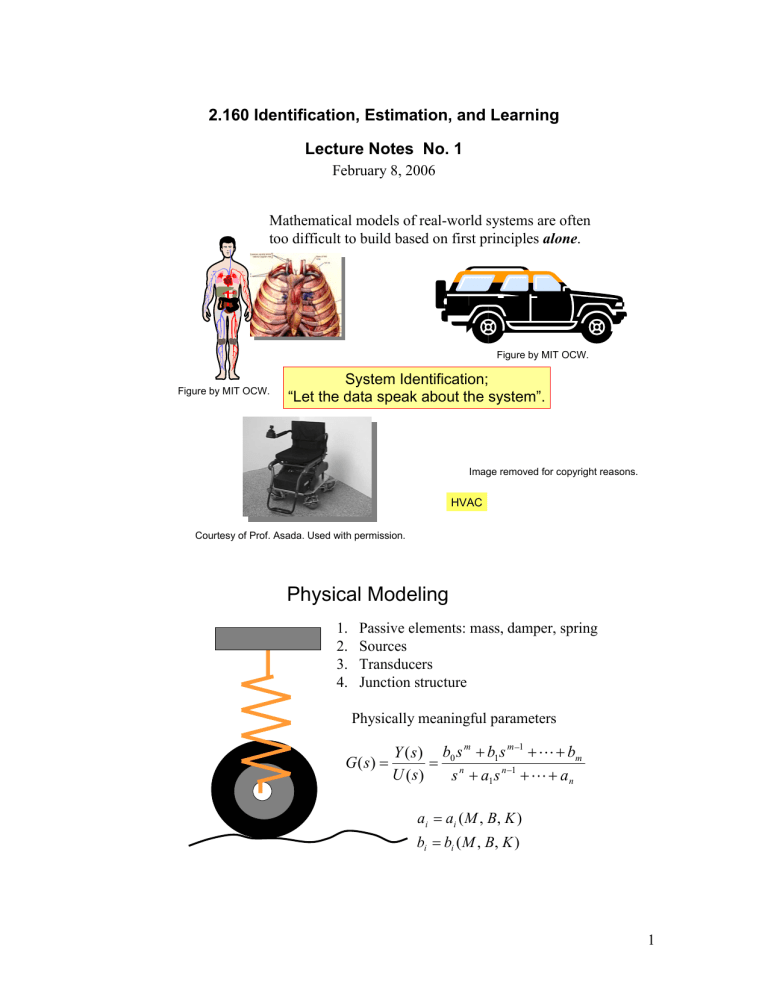

Mathematical models of real-world systems are often too difficult to build from first principles alone.

Figure by MIT OCW.

System Identification:

“Let the data speak about the system”.

Image removed for copyright reasons.

HVAC

Courtesy of Prof. Asada. Used with permission.

Physical Modeling

1. Passive elements: mass, damper, spring

2. Sources

3. Transducers

4. Junction structure

Physically meaningful parameters

$$
G(s) = \frac{Y(s)}{U(s)} = \frac{b_0 s^m + b_1 s^{m-1} + \cdots + b_m}{s^n + a_1 s^{n-1} + \cdots + a_n}
$$

$$
a_i = a_i(M, B, K), \qquad b_i = b_i(M, B, K)
$$
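As a small worked illustration of how the coefficients depend on the physical parameters, consider an assumed example (not taken from the lecture figures): a single mass $M$ attached to a damper $B$ and a spring $K$, driven by a force $u(t)$, with the displacement $y(t)$ as output.

$$
M\ddot{y}(t) + B\dot{y}(t) + K y(t) = u(t)
\quad\Longrightarrow\quad
G(s) = \frac{Y(s)}{U(s)} = \frac{1}{M s^2 + B s + K} = \frac{1/M}{s^2 + (B/M)\,s + K/M}
$$

Under this assumption $n = 2$ and $m = 0$, with $b_0 = 1/M$, $a_1 = B/M$, and $a_2 = K/M$. Each coefficient is a simple function of $(M, B, K)$ here; for higher-order systems these functions typically become much more involved.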

System Identification

Input u(t) → Black Box → Output y(t)

$$
G(s) = \frac{Y(s)}{U(s)} = \frac{b_0 s^m + b_1 s^{m-1} + \cdots + b_m}{s^n + a_1 s^{n-1} + \cdots + a_n}
$$

Comparison

Physical modeling

Pros

1. Physical insight and knowledge

2. Modeling a conceived system before hardware is built

Cons

1. Often leads to high system order with too many parameters

2. Input-output model has a complex parameter structure

3. Not convenient for parameter tuning

4. Complex systems are too difficult to analyze

Black Box

Pros

1. Close to the actual input-output behavior

2. Convenient structure for parameter tuning

3. Useful for complex systems for which a physical model is too difficult to build

Cons

1. No direct connection to physical parameters

2. No solid ground to support a model structure

3. Not available until an actual system has been built

Introduction: System Identification in a Nutshell

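[Figure: impulse response of the FIR model, with coefficients $b_1, b_2, b_3, \ldots$ plotted against time $t$; input $u(t)$, output $y(t)$.]

FIR: Finite Impulse Response Model

$$
y(t) = b_1 u(t-1) + b_2 u(t-2) + \cdots + b_m u(t-m)
$$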

Define

$$
\theta := [b_1, b_2, \cdots, b_m]^T \in R^m \quad \text{(unknown)}
$$

$$
\varphi(t) := [u(t-1), u(t-2), \cdots, u(t-m)]^T \in R^m \quad \text{(known)}
$$
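To make the regressor definition concrete, the following minimal sketch builds $\varphi(t)$ from a recorded input sequence. The function name `fir_regressor`, the 0-based array indexing, and the choice to treat samples before the start of the record as zero are all assumptions made for illustration; the lecture itself indexes time from 1.

```python
import numpy as np

def fir_regressor(u, t, m):
    """Return phi(t) = [u(t-1), u(t-2), ..., u(t-m)] for a 0-indexed array u.

    Assumption: input samples before the start of the record are zero.
    """
    phi = np.zeros(m)
    for k in range(1, m + 1):
        if t - k >= 0:
            phi[k - 1] = u[t - k]
    return phi

u = np.array([1.0, 2.0, 3.0, 4.0, 5.0])   # hypothetical input record
phi_3 = fir_regressor(u, t=3, m=3)        # [u(2), u(1), u(0)]
phi_1 = fir_regressor(u, t=1, m=3)        # [u(0), 0, 0]
```

With the unknown coefficients stacked in a vector `theta`, the FIR model output at time `t` is then the inner product `phi @ theta`.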

The vector $\theta$ collectively represents the model parameters to be identified from the observed data $y(t)$ and $\varphi(t)$ over the time interval $1 \le t \le N$.

Observed data: $y(1), \cdots, y(N)$

For an estimate of $\theta$, the predicted output is

$$
\hat{y}(t) = \varphi^T(t)\,\theta
$$

This predicted output may be different from the actual $y(t)$.

Find the $\theta$ that minimizes $V_N(\theta)$:

$$
V_N(\theta) = \frac{1}{N} \sum_{t=1}^{N} \left( y(t) - \hat{y}(t) \right)^2
$$

$$
\hat{\theta} = \arg\min_{\theta} V_N(\theta), \qquad \frac{dV_N(\theta)}{d\theta} = 0
$$

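$$
V_N(\theta) = \frac{1}{N} \sum_{t=1}^{N} \left( y(t) - \varphi^T(t)\,\theta \right)^2
$$

$$
\frac{2}{N} \sum_{t=1}^{N} \left( y(t) - \varphi^T(t)\,\theta \right)\left( -\varphi(t) \right) = 0
$$

$$
\sum_{t=1}^{N} y(t)\,\varphi(t) = \sum_{t=1}^{N} \left( \varphi^T(t)\,\theta \right)\varphi(t)
$$

$$
\underbrace{\left[ \sum_{t=1}^{N} \varphi(t)\,\varphi^T(t) \right]}_{R_N} \theta = \sum_{t=1}^{N} y(t)\,\varphi(t)
$$

$$
\therefore\quad \hat{\theta}_N = R_N^{-1} \sum_{t=1}^{N} y(t)\,\varphi(t)
$$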
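The closed-form estimate can be checked numerically. The sketch below is only an illustration under stated assumptions: the "true" coefficients `theta_true`, the white-noise input, and the noise-free output are all hypothetical choices, and NumPy is assumed to be available. It forms $R_N$ and $\sum y(t)\varphi(t)$ explicitly and solves the normal equations.

```python
import numpy as np

rng = np.random.default_rng(0)

m, N = 3, 200
theta_true = np.array([0.5, 0.3, -0.2])   # hypothetical b_1, b_2, b_3
u = rng.standard_normal(N + m)            # assumed random input record

# Row t of Phi is phi(t)^T = [u(t-1), ..., u(t-m)].
Phi = np.column_stack([u[m - k : m - k + N] for k in range(1, m + 1)])
y = Phi @ theta_true                      # noise-free output, for illustration

R_N = Phi.T @ Phi                         # sum over t of phi(t) phi(t)^T
f_N = Phi.T @ y                           # sum over t of y(t) phi(t)
theta_hat = np.linalg.solve(R_N, f_N)     # solves R_N theta = f_N
```

Solving the linear system with `np.linalg.solve` avoids forming $R_N^{-1}$ explicitly; `np.linalg.lstsq(Phi, y, rcond=None)` would be a numerically more robust alternative when $R_N$ is poorly conditioned.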

Question 1

What will happen if we repeat the experiment and obtain $\hat{\theta}_N$ again?

Consider the expectation of $\hat{\theta}_N$, that is, the average of $\hat{\theta}_N$ when the experiment is repeated many times. Would that be the same as the true parameter $\theta_0$?

Let's assume that the actual output data are generated from

$$
y(t) = \varphi^T(t)\,\theta_0 + e(t)
$$

where $\theta_0$ is considered to be the true value.

Assume that the noise sequence {e(t)} has a zero mean value, i.e. E[e(t)]=0, and has no correlation with input sequence {u(t)}.

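$$
\begin{aligned}
\hat{\theta}_N &= R_N^{-1} \sum_{t=1}^{N} y(t)\,\varphi(t)
= R_N^{-1} \sum_{t=1}^{N} \left[ \left( \varphi^T(t)\,\theta_0 + e(t) \right) \varphi(t) \right] \\
&= R_N^{-1} \underbrace{\left[ \sum_{t=1}^{N} \varphi(t)\,\varphi^T(t) \right]}_{R_N} \theta_0
+ R_N^{-1} \sum_{t=1}^{N} \varphi(t)\,e(t)
\end{aligned}
$$

$$
\therefore\quad \hat{\theta}_N - \theta_0 = R_N^{-1} \sum_{t=1}^{N} \varphi(t)\,e(t)
$$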

Taking expectation,

$$
E\left[ \hat{\theta}_N - \theta_0 \right]
= E\left[ R_N^{-1} \sum_{t=1}^{N} \varphi(t)\,e(t) \right]
= R_N^{-1} \sum_{t=1}^{N} \varphi(t) \cdot E[e(t)] = 0
$$
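The zero-bias result can be illustrated with a small Monte Carlo experiment. This is a sketch under assumptions that are not in the lecture: a hypothetical true parameter `theta_0`, a fixed input record reused in every repetition, and Gaussian noise with an assumed variance `lam`. Only the noise changes between repetitions, which mirrors the derivation above where $\varphi(t)$ is taken outside the expectation.

```python
import numpy as np

rng = np.random.default_rng(1)

m, N, trials = 2, 50, 2000
theta_0 = np.array([1.0, -0.5])           # hypothetical true parameters
lam = 0.25                                # assumed noise variance

# One fixed input record, reused in every repetition of the experiment.
u = rng.standard_normal(N + m)
Phi = np.column_stack([u[m - k : m - k + N] for k in range(1, m + 1)])
R_N = Phi.T @ Phi

estimates = np.empty((trials, m))
for i in range(trials):
    e = np.sqrt(lam) * rng.standard_normal(N)   # zero-mean noise, independent of u
    y = Phi @ theta_0 + e
    estimates[i] = np.linalg.solve(R_N, Phi.T @ y)

mean_estimate = estimates.mean(axis=0)    # average of theta_hat_N over repetitions
bias = mean_estimate - theta_0            # should be close to zero
```

Individual estimates scatter around `theta_0`, but their average over many repetitions should land very close to it.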

Question 2

Since the true parameter $\theta_0$ is unknown, how do we know how close $\hat{\theta}_N$ will be to $\theta_0$? How many data points, $N$, do we need to reduce the error $\hat{\theta}_N - \theta_0$ to a certain level?

Consider the variance (the covariance matrix) of the parameter estimation error.

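$$
\begin{aligned}
P_N &= E\left[ (\hat{\theta}_N - \theta_0)(\hat{\theta}_N - \theta_0)^T \right] \\
&= E\left[ \left( R_N^{-1} \sum_{t=1}^{N} \varphi(t)\,e(t) \right) \cdot \left( R_N^{-1} \sum_{s=1}^{N} \varphi(s)\,e(s) \right)^T \right] \\
&= E\left[ R_N^{-1} \sum_{t=1}^{N} \sum_{s=1}^{N} \varphi(t)\,e(t)\,e(s)\,\varphi^T(s)\, R_N^{-1} \right] \\
&= R_N^{-1} \left[ \sum_{t=1}^{N} \sum_{s=1}^{N} \varphi(t)\, E[e(t)\,e(s)]\, \varphi^T(s) \right] R_N^{-1}
\end{aligned}
$$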

Assume that $\{e(t)\}$ is stochastically independent:

$$
E[e(t)\,e(s)] =
\begin{cases}
E[e^2(t)] = \lambda, & t = s \\
E[e(t)]\,E[e(s)] = 0, & t \neq s
\end{cases}
$$

Then

$$
P_N = R_N^{-1} \left[ \sum_{t=1}^{N} \varphi(t)\,\lambda\,\varphi^T(t) \right] R_N^{-1} = \lambda R_N^{-1}
$$

As $N$ increases, $R_N$ tends to grow without bound, but $R_N / N$ converges under mild assumptions:

$$
\lim_{N \to \infty} \frac{1}{N} \sum_{t=1}^{N} \varphi(t)\,\varphi^T(t) = \lim_{N \to \infty} \frac{1}{N} R_N = R
$$

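For large $N$, $R_N \cong N R$ and $R_N^{-1} \cong \frac{1}{N} R^{-1}$.

[Figure: $\hat{\theta}_N$ and $\theta_0$ plotted against $N$.]

$$
P_N = \frac{\lambda}{N} R^{-1} \quad \text{for large } N
$$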
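The convergence of $R_N / N$ can be seen in a quick numerical experiment. As an assumption for this sketch, the input is taken to be white Gaussian noise with unit variance, in which case the limit $R$ is the identity matrix; other input signals would give a different limit. The helper name `normalized_R` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
m = 2

def normalized_R(N):
    """Return R_N / N for a fresh white, unit-variance input record (assumed)."""
    u = rng.standard_normal(N + m)
    Phi = np.column_stack([u[m - k : m - k + N] for k in range(1, m + 1)])
    return (Phi.T @ Phi) / N

R_small = normalized_R(100)      # still noticeably noisy
R_large = normalized_R(20000)    # close to the identity matrix
```

Under this assumption $P_N \cong \lambda / N$ times the identity for large $N$, so each parameter's variance falls in proportion to $1/N$.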

I. The covariance $P_N$ decays at the rate $1/N$. The parameters approach the limiting value at the rate of $1/\sqrt{N}$.

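II. The covariance is inversely proportional to the magnitude of $R$:

$$
P_N \propto \frac{\lambda}{\text{magnitude of } R}
$$

$$
R_N =
\begin{bmatrix}
r_{11} & \cdots & r_{1m} \\
\vdots & \ddots & \vdots \\
r_{m1} & \cdots & r_{mm}
\end{bmatrix},
\qquad
r_{ij} = \sum_{t=1}^{N} u(t-i)\,u(t-j)
$$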

III. The convergence of $\hat{\theta}_N$ to $\theta_0$ may be accelerated if we design inputs such that $R$ is large.

IV. The covariance does not depend on the average of the input signal, only on the second moment.
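Observations II and III can be illustrated by scaling the input amplitude. In the sketch below, the noise variance `lam`, the random input record, and the amplitude factor 3 are assumed values, and `covariance` is a hypothetical helper that evaluates $\lambda R_N^{-1}$ for a given input. Since every $r_{ij}$ is quadratic in the input, tripling the amplitude multiplies $R_N$ by 9 and divides the covariance by 9.

```python
import numpy as np

rng = np.random.default_rng(4)
m, N, lam = 2, 100, 0.25                  # lam: assumed noise variance

u = rng.standard_normal(N + m)            # hypothetical input record

def covariance(u_seq):
    """Return P_N = lam * R_N^{-1} for the FIR regressors built from u_seq."""
    Phi = np.column_stack([u_seq[m - k : m - k + N] for k in range(1, m + 1)])
    return lam * np.linalg.inv(Phi.T @ Phi)

P_base = covariance(u)
P_scaled = covariance(3.0 * u)            # same input, three times the amplitude
```

In practice the usable amplitude is limited by actuator range and by the need to keep the system within the regime the model is meant to describe, which is part of why experiment design is treated as its own topic.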

What will be addressed in 2.160?

A) How to best estimate the parameters

What type of input is maximally informative?

• Informative data sets

• Persistent excitation

• Experiment design

• Pseudo Random Binary signals, Chirp sine waves, etc.

How to best tune the model / best estimate parameters

How to best use each data point

• Covariance analysis

• Recursive Least Squares

• Kalman filters

• Unbiased estimate

• Maximum Likelihood

B) How to best determine a model structure

How do we represent system behavior? How do we parameterize the model?

i. Linear systems

• FIR, ARX, ARMA, BJ, ...

• Data compression: Laguerre series expansion

ii. Nonlinear systems

• Neural nets

• Radial basis functions

iii. Time-Frequency representation

• Wavelets

Model order: Trade-off between accuracy/performance and reliability/robustness

• Akaike’s Information Criterion

• MDL
