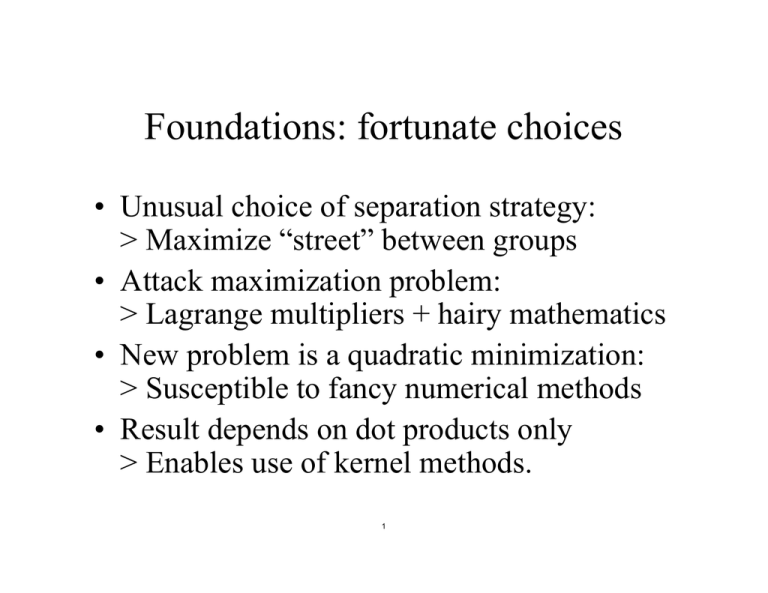

Foundations: fortunate choices

• Unusual choice of separation strategy:
  > Maximize “street” between groups
• Attack maximization problem:
  > Lagrange multipliers + hairy mathematics
• New problem is a quadratic minimization:
  > Susceptible to fancy numerical methods
• Result depends on dot products only
  > Enables use of kernel methods.

Key idea: find widest separating “street”

Classifier form is given and constrained

• Classify unknown $\mathbf{u}$ as plus if: $f(\mathbf{u}) = \mathbf{w} \cdot \mathbf{u} + b > 0$
• Then, constrain, for all plus sample vectors: $f(\mathbf{x}_+) = \mathbf{w} \cdot \mathbf{x}_+ + b \ge 1$
• And for all minus sample vectors: $f(\mathbf{x}_-) = \mathbf{w} \cdot \mathbf{x}_- + b \le -1$

Distance between street’s gutters

• The constraints require, for a sample $\mathbf{x}_1$ on the plus gutter and a sample $\mathbf{x}_2$ on the minus gutter:

$$\mathbf{w} \cdot \mathbf{x}_1 + b = +1, \qquad \mathbf{w} \cdot \mathbf{x}_2 + b = -1$$

• So, subtracting:

$$\mathbf{w} \cdot (\mathbf{x}_1 - \mathbf{x}_2) = 2$$

• Dividing by the length of $\mathbf{w}$ produces the distance between the lines:

$$\frac{\mathbf{w}}{\|\mathbf{w}\|} \cdot (\mathbf{x}_1 - \mathbf{x}_2) = \frac{2}{\|\mathbf{w}\|}$$

From maximizing to minimizing…

• So, to maximize the width of the street, you need to “wiggle” $\mathbf{w}$ until the length of $\mathbf{w}$ is minimum, while still honoring constraints on gutter values:

$$\text{separation} = \frac{2}{\|\mathbf{w}\|}$$

• One possible approach to finding the minimum is to use the method devised by Lagrange. Working through the method reveals how the sample data figures into the classification formula.
• A step toward solving the problem using Lagrange’s method is to note that maximizing street width is ensured if you minimize the following, while still honoring constraints on gutter values:

$$\frac{1}{2} \|\mathbf{w}\|^2$$

• Translation of the previous formula into this one, with ½ and squaring, is a mathematical convenience.

…while honoring constraints

• Remember, the minimization is constrained
• You can write the constraints as:

$$y_i (\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1$$

where $y_i$ is $+1$ for plusses and $-1$ for minuses.

Dependence on dot products

• Using Lagrange’s method, and working through some mathematics, you get to the following problem. When solved for the alphas, you then have what you need for the classification formula.
Maximize

$$\sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i,j=1}^{l} \alpha_i \alpha_j y_i y_j \, \mathbf{x}_i \cdot \mathbf{x}_j$$

Subject to

$$\sum_{i=1}^{l} \alpha_i y_i = 0 \quad \text{and} \quad \alpha_i \ge 0$$

Then check sign of

$$f(\mathbf{u}) = \mathbf{w} \cdot \mathbf{u} + b = \left( \sum_{i=1}^{l} \alpha_i y_i \, \mathbf{x}_i \cdot \mathbf{u} \right) + b$$

Key to importance

• Learning depends only on dot products of sample pairs.
• Classification depends only on dot products of unknown with samples.
• Exclusive reliance on dot products enables approach to problems in which samples cannot be separated by a straight line.

Example

Another example

Not separable? Try another space!

• Using some mapping, $\Phi$
• Problem starts here, 2D
• Dot products computed here, 3D

What you need

• To get $\mathbf{x}_1$ into the high-dimensional space, you use $\Phi(\mathbf{x}_1)$
• To optimize, you need $\Phi(\mathbf{x}_1) \cdot \Phi(\mathbf{x}_2)$
• To use, you need $\Phi(\mathbf{x}_1) \cdot \Phi(\mathbf{u})$
• So, all you need is a way to compute dot products in high-dimensional space as a function of vectors in original space!

What you don’t need

• Suppose dot products are supplied by $\Phi(\mathbf{x}_1) \cdot \Phi(\mathbf{x}_2) = K(\mathbf{x}_1, \mathbf{x}_2)$
• Then, all you need is $K(\mathbf{x}_1, \mathbf{x}_2)$
• Evidently, you don’t need to know what $\Phi$ is; having $K$ is enough!
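The claim that having $K$ is enough can be checked numerically. The sketch below is an illustration, not something taken from the slides: it assumes a quadratic kernel $K(\mathbf{x}, \mathbf{z}) = (\mathbf{x} \cdot \mathbf{z} + 1)^2$ on 2D inputs and a hypothetical explicit 6D mapping `phi` that reproduces it. The sample vectors, alphas, and `b` are made-up values chosen only so that $\sum_i \alpha_i y_i = 0$; they are not the result of any optimization.

```python
import math

def dot(a, b):
    """Plain dot product of two equal-length sequences."""
    return sum(p * q for p, q in zip(a, b))

def phi(x):
    """Hypothetical explicit map from 2D to 6D matching the quadratic kernel."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return (1.0, r2 * x1, r2 * x2, x1 * x1, x2 * x2, r2 * x1 * x2)

def kernel(x, z):
    """Assumed quadratic kernel, computed entirely in the original 2D space."""
    return (dot(x, z) + 1.0) ** 2

def decide(u, samples, labels, alphas, b, k):
    """f(u) = sum_i alpha_i * y_i * k(x_i, u) + b; classify by the sign."""
    return sum(a * y * k(x, u) for a, y, x in zip(alphas, labels, samples)) + b

# Illustrative values only (not a trained classifier).
samples = [(1.0, 2.0), (-0.5, 3.0), (2.0, -1.0)]
labels = [1, -1, 1]
alphas = [0.3, 0.5, 0.2]  # chosen so that sum(alpha_i * y_i) == 0
b = -0.1
u = (0.5, 0.5)

# Same decision value whether dot products come from K or from phi.
via_kernel = decide(u, samples, labels, alphas, b, kernel)
via_phi = decide(u, samples, labels, alphas, b,
                 lambda x, z: dot(phi(x), phi(z)))
print(abs(via_kernel - via_phi) < 1e-9)
```

The `decide` function never touches `phi` when it is handed `kernel`, which is the point: the high-dimensional vectors are never built, only their dot products are needed.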
Standard choices

• No change: $\Phi(\mathbf{x}_1) \cdot \Phi(\mathbf{x}_2) = K(\mathbf{x}_1, \mathbf{x}_2) = \mathbf{x}_1 \cdot \mathbf{x}_2$
• Polynomial: $K(\mathbf{x}_1, \mathbf{x}_2) = (\mathbf{x}_1 \cdot \mathbf{x}_2 + 1)^n$
• Radial basis function: $K(\mathbf{x}_1, \mathbf{x}_2) = e^{-\frac{\|\mathbf{x}_1 - \mathbf{x}_2\|^2}{2\sigma^2}}$

Polynomial Kernel

Radial-basis kernel

Another radial-basis example

Aside: about the hairy mathematics

• Step 1: Apply method of Lagrange multipliers. To minimize $\frac{1}{2} \|\mathbf{w}\|^2$ subject to constraints $y_i (\mathbf{x}_i \cdot \mathbf{w} + b) \ge 1$, find places where

$$L = \frac{1}{2} \|\mathbf{w}\|^2 - \sum_{i=1}^{l} \alpha_i \left( y_i (\mathbf{x}_i \cdot \mathbf{w} + b) - 1 \right)$$

has zero derivatives.

• Step 2: remember how to differentiate vectors:

$$\frac{\partial \|\mathbf{w}\|^2}{\partial \mathbf{w}} = 2\mathbf{w} \quad \text{and} \quad \frac{\partial (\mathbf{x} \cdot \mathbf{w})}{\partial \mathbf{w}} = \mathbf{x}$$

• Step 3: find derivatives of the Lagrangian $L$:

$$\frac{\partial L}{\partial \mathbf{w}} = \mathbf{w} - \sum_{i=1}^{l} \alpha_i y_i \mathbf{x}_i = 0$$

$$\frac{\partial L}{\partial b} = -\sum_{i=1}^{l} \alpha_i y_i = 0$$

• Step 4: do the algebra, then ask a numerical analyst to write a program to find the values of alpha that produce an extreme value for:

$$L = \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i,j=1}^{l} \alpha_i \alpha_j y_i y_j \, \mathbf{x}_i \cdot \mathbf{x}_j$$

But, note that

• Quadratic minimization depends only on dot products of sample vectors
• Recognition depends only on dot products of unknown vector with sample vectors
• Reliance on dot products alone is key to the remaining magic

MIT OpenCourseWare
http://ocw.mit.edu

6.034 Artificial Intelligence
Fall 2010

For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.